When Disavowing Links At The Domain Level, Don’t Let Your Machete Turn Into A Guillotine

We SEOs can sometimes get a bit overzealous when disavowing links. Columnist Glenn Gabe outlines his process for carefully reviewing your disavow file to ensure that strong domains haven't been added in error.

For websites dealing with unnatural links, it’s important to thoroughly analyze each link, determine which links are unnatural, and then take action.

That includes removing links when possible, then disavowing what you can’t get to. That sounds straightforward, but there are several ways this process can lead to serious SEO problems.

One problem that can be extremely dangerous is adding the wrong domains to a disavow file, such as powerful, natural domains added with the domain directive.

In my experience, that’s typically a knee-jerk reaction to a severe unnatural links problem, and it can cause massive issues down the line. And since disavow files sit out of sight once submitted, they are easy to forget about and can drag down SEO performance over the long term.

Trouble In Disavowland

Within the past month alone, I have analyzed three large disavow files across different companies that had serious flaws, and those flaws could well be hurting the sites in question. Basically, domains had been added to the disavow files that never should have been there in the first place.

The domains being disavowed unfortunately included some extremely well-known and powerful websites. Actually, some of the most powerful websites on the web were being disavowed (at the domain level) — for example, nytimes.com, forbes.com, techcrunch.com, etc. I even saw major social networks added like twitter.com, plus.google.com, facebook.com, etc.

Again, this is not just one example. I’ve seen this a number of times when helping companies evaluate their current SEO performance. Basically, their machete turned into a guillotine.

Machete Vs. Fine-Toothed Comb

Google’s head of web spam, Matt Cutts, once said that you should use the disavow tool as a machete rather than a “fine-toothed comb” when disavowing links, but some webmasters have taken that too far.

If you disavow at the domain level, you will never benefit from links on that domain, including links you earn in the future, for as long as the directive stays in your file. For extremely spammy referring sites, that’s not a problem. But for powerful, large-scale sites, it can be extremely problematic. It can haunt your website forever.

Imagine disavowing The New York Times only to gain a great link one year from now. You would not benefit from that link at all. Worse, you might not even be aware it’s being disavowed in the first place. This can definitely happen, and it’s a sinister problem that can sit beneath the surface.

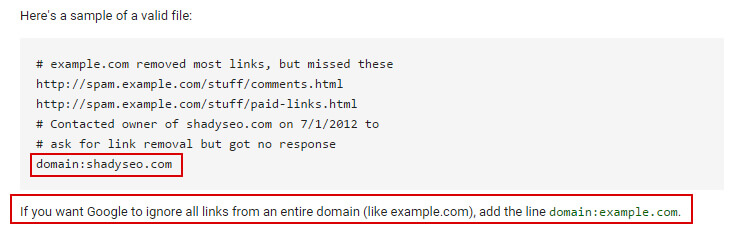
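To make the difference in reach concrete, here is a minimal Python sketch of how a domain directive and a URL-level line compare. It assumes a domain directive covers the named host and any of its subdomains (such as www.), which is a simplification for illustration, not Google’s documented matching logic. The function name `is_disavowed` and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit


def is_disavowed(link_url: str, disavow_lines: list[str]) -> bool:
    """Return True if link_url is covered by any line of a disavow file.

    Simplifying assumption: "domain:example.com" covers example.com and any of
    its subdomains, while a plain URL line covers only that exact URL.
    """
    host = (urlsplit(link_url).hostname or "").lower()
    for raw in disavow_lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments disavow nothing
        if line.lower().startswith("domain:"):
            domain = line[len("domain:"):].strip().lower()
            if host == domain or host.endswith("." + domain):
                return True
        elif line == link_url:
            return True
    return False


# Hypothetical link earned a year after the disavow file was submitted.
new_link = "https://www.nytimes.com/new-article"

print(is_disavowed(new_link, ["domain:nytimes.com"]))  # True: the domain directive swallows it
print(is_disavowed(new_link, ["https://www.nytimes.com/example-old-page"]))  # False
```

The URL-level line only ever affects the one page it names, which is why it is the safer choice on strong domains.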

Make sure you are using the domain directive correctly:

Regarding social networks: Do not disavow major social networks at the domain level. That’s wrong and completely unnecessary. First, twitter.com, plus.google.com, etc., are not spammy domains. Second, most links are nofollowed across major social networks, so they aren’t passing PageRank anyway.

Third, if somehow you do find a spammy link on a powerful social network, then have the link removed manually if possible. If you can’t, then disavow the specific URL — not the entire domain.
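If your disavow file is long, a short script can surface social network domain directives for you. The sketch below assumes the usual disavow file layout (one entry per line, `domain:` for domain directives, `#` for comments). The `SOCIAL_NETWORKS` set is an illustrative list taken from the examples above, and the sample file contents are hypothetical.

```python
# Illustrative list built from the examples above; not exhaustive.
SOCIAL_NETWORKS = {"twitter.com", "facebook.com", "plus.google.com"}


def social_domain_directives(disavow_text: str) -> list[str]:
    """Return the domain directives in a disavow file that target a major social network."""
    flagged = []
    for raw in disavow_text.splitlines():
        line = raw.strip()
        if not line.lower().startswith("domain:"):
            continue  # comments, blank lines and URL-level lines are left alone
        domain = line[len("domain:"):].strip().lower()
        if domain.startswith("www."):
            domain = domain[len("www."):]
        if domain in SOCIAL_NETWORKS:
            flagged.append(line)
    return flagged


sample = """# social cleanup
domain:twitter.com
domain:spammy-directory.example
https://twitter.com/someaccount/status/123
domain:www.facebook.com
"""
print(social_domain_directives(sample))
# ['domain:twitter.com', 'domain:www.facebook.com']
```

Each flagged line is a candidate for deletion; if a specific spammy URL on that network really needs disavowing, it stays as a URL-level line, like the status URL in the sample.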

How To Avoid Disavowing The Wrong Domains

Some of you might be a little nervous right now about the contents of your disavow file. I totally understand, and I’m here to help. Below, I’ll list several things you can do right now to better understand the quality of domains listed in your disavow file.

There might be domains that will be easy to identify as high quality, and these should be removed from your disavow file ASAP. For the remaining domains, the tactics listed below can help you make educated decisions about which domains to disavow, and which ones to keep.

To clarify, I’m referring to the heavy use of the domain directive in your disavow file. If you are disavowing specific links in your disavow file (at the URL level), then your risk is greatly lessened. But if you are disavowing entire domains, then you should go through the process listed below.
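A quick way to see how heavily a file leans on the domain directive is to count its line types. This is a minimal sketch assuming the standard disavow format described above; the function name `audit_disavow` and the sample contents are hypothetical.

```python
from collections import Counter


def audit_disavow(disavow_text: str) -> Counter:
    """Count domain directives, URL-level lines and comments in a disavow file."""
    counts = Counter()
    for raw in disavow_text.splitlines():
        line = raw.strip()
        if not line:
            continue  # ignore blank lines
        if line.startswith("#"):
            counts["comment"] += 1
        elif line.lower().startswith("domain:"):
            counts["domain"] += 1
        else:
            counts["url"] += 1
    return counts


sample = """# exported disavow file
domain:spammy-directory.example
domain:nytimes.com
http://link-farm.example/page-1.html
"""
counts = audit_disavow(sample)
# Share of entries that are domain-level rather than URL-level.
share = counts["domain"] / max(counts["domain"] + counts["url"], 1)
print(counts["domain"], counts["url"], f"{share:.0%}")  # 2 1 67%
```

A high domain share doesn’t mean the file is wrong, but it tells you how much of the review process below you need to do.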

1. Remove Versus Disavow

My first recommendation is to try to remove the offending links before jumping to the disavow tool. If you find unnatural links and can remove them (either by taking them down yourself or convincing the webmaster to do so), then you don’t have to deal with the disavow file at all. This means you won’t have to make decisions about disavowing at the URL level versus the domain level.

I know it’s easier to just disavow, but I always recommend removing what you can.

2. Roll Back The Obvious

When reviewing the domains currently present in your disavow file, highlight the obvious false positives. Mark the ultra-powerful and legitimate domains as safe so you can go back and remove them from your disavow file. Using examples from earlier, nytimes.com, forbes.com, techcrunch.com, and other sites of similar authority fall into this category.

If you find unnatural links on a powerful domain, then contact the webmaster and ask for removal first. If the link cannot be removed, then disavow at the URL level. Do not disavow ultra-powerful domains at the domain level.

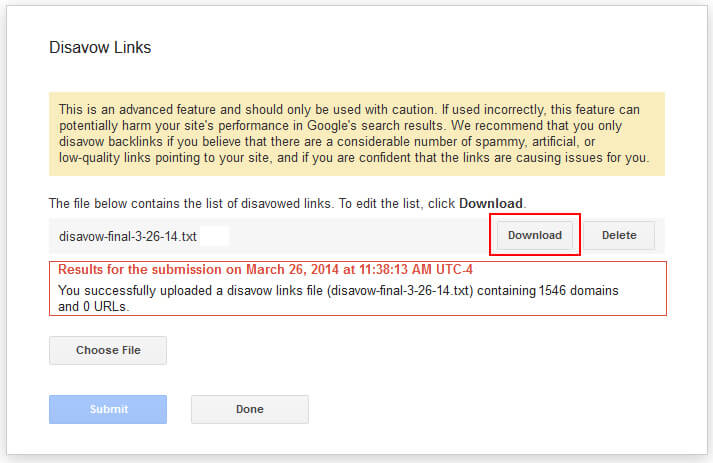

Side note: To access the latest disavow file you submitted, visit the disavow links page in Google Webmaster Tools, click “disavow links,” and then download the file.

You should also highlight major social networks like twitter.com, facebook.com, and plus.google.com. Domain directives for major social networks should be removed from your disavow file, even though most links on those networks are nofollowed anyway.

Then resubmit your disavow file after the domain directives have been removed. Note that Matt Cutts once explained it can take a while for Google to reprocess links after directives are removed from a disavow file, so be patient. But you must get the right domains and links in your file, so make those changes as soon as possible.
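The rollback itself is mechanical once you know which domains are safe. The sketch below drops domain directives for an allowlist and leaves comments, URL-level lines and every other directive untouched. `SAFE_DOMAINS` is a hypothetical allowlist you would build during your own review, the function name is hypothetical, and the result still has to be uploaded manually through the disavow links tool.

```python
# Hypothetical allowlist built during your own review (strong sites and social networks).
SAFE_DOMAINS = {
    "nytimes.com", "forbes.com", "techcrunch.com",
    "twitter.com", "facebook.com", "plus.google.com",
}


def roll_back(disavow_text: str, safe_domains: set[str]) -> tuple[str, list[str]]:
    """Drop domain directives for safe domains.

    Returns the cleaned file text and the directives that were removed. Comments,
    URL-level lines and all other directives are kept exactly as they were.
    """
    kept, removed = [], []
    for raw in disavow_text.splitlines():
        line = raw.strip()
        if line.lower().startswith("domain:"):
            domain = line[len("domain:"):].strip().lower()
            if domain.startswith("www."):
                domain = domain[len("www."):]
            if domain in safe_domains:
                removed.append(line)
                continue
        kept.append(raw)
    return "\n".join(kept) + "\n", removed


# In practice, read the file downloaded from the disavow links tool and write
# `cleaned` to a new .txt file for upload; an in-memory string is used here.
original = "# previous cleanup\ndomain:nytimes.com\ndomain:spammy-directory.example\ndomain:www.twitter.com\n"
cleaned, removed = roll_back(original, SAFE_DOMAINS)
print(removed)  # ['domain:nytimes.com', 'domain:www.twitter.com']
print(cleaned, end="")
```

Keeping the list of removed directives gives you a record of what changed between submissions.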

3. Review Link Analysis Data With URL Profiler

I’ve been using URL Profiler more and more with SEO projects recently. There’s some great functionality that can increase your efficiency while providing strong data in bulk.

For analyzing a disavow file, URL Profiler enables you to import URLs and receive domain-level link data back (from sources such as Majestic, Ahrefs and Moz). That data gives you a top-level view of which domains in your disavow file look solid and which look risky, and it gets you moving in the right direction.

To do this, simply strip out “domain:” from the list of domains in your disavow file and import them into URL Profiler. Note that you might need to add both the www and non-www versions to avoid throwing off the data collection process.
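Stripping the prefix and adding both host variants is easy to script. This minimal sketch produces a de-duplicated list with one host per line; how you then load that list into URL Profiler depends on the tool’s own import options, which are not modeled here. The function name and sample domains are hypothetical.

```python
def domains_for_profiler(disavow_text: str) -> list[str]:
    """Turn domain directives into a de-duplicated list of www and non-www hosts."""
    hosts, seen = [], set()
    for raw in disavow_text.splitlines():
        line = raw.strip()
        if not line.lower().startswith("domain:"):
            continue  # URL-level lines and comments are not domain-level entries
        bare = line[len("domain:"):].strip().lower()
        if bare.startswith("www."):
            bare = bare[len("www."):]
        for host in (bare, "www." + bare):
            if host not in seen:
                seen.add(host)
                hosts.append(host)
    return hosts


sample = "domain:link-farm.example\n# note\ndomain:www.link-farm.example\ndomain:Spammy-Directory.example\n"
print("\n".join(domains_for_profiler(sample)))
```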

Once you pull the data, you can sort by whichever metric you choose, like Majestic’s Trust Flow, Ahrefs’ Domain Rank, or Moz’s Domain Authority. You shouldn’t be making quick decisions based on these metrics, however — they are simply ways to get you moving in the right direction.

For example, enter URLs from your disavow file, receive domain-level data back, sort by the metric of your choice, flag domains to review, and then dig in manually to learn more. If some domains turn out to be fine, remove them from your disavow file. If not, keep them.
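Here is one way to do the sorting and flagging step on exported results. It assumes a CSV export with a `domain` column and a numeric metric column; the column name `trust_flow`, the cut-off of 15, and the sample rows are hypothetical placeholders rather than values from any tool. Because the goal is to catch strong domains disavowed by mistake, it flags the highest scorers for manual review and never removes anything itself.

```python
import csv
import io


def flag_for_review(csv_text: str, metric: str, cutoff: float) -> list[tuple[str, float]]:
    """Sort domains by metric (strongest first) and return those at or above cutoff.

    High scorers are the likeliest false positives in a disavow file, so they are
    reviewed manually first. Rows with an empty metric value are skipped.
    """
    rows = csv.DictReader(io.StringIO(csv_text))
    scored = [(row["domain"], float(row[metric])) for row in rows if row.get(metric)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [pair for pair in scored if pair[1] >= cutoff]


export = """domain,trust_flow
strong-news.example,91
spammy-directory.example,3
regional-blog.example,38
"""
print(flag_for_review(export, "trust_flow", 15))
# [('strong-news.example', 91.0), ('regional-blog.example', 38.0)]
```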

4. Review Spam Scores With Majestic and Moz

Don’t roll your eyes! Majestic and Moz both provide a quick way to gain top-level insights when investigating domains (Spam Finder and Spam Score, respectively). Again, I’m not saying to take one metric and run with it, but this data can help you identify domains that require further investigation.

Majestic enables you to view all referring domains and then sort by various metrics. Majestic recently published a post explaining how to use this to identify spammy domains, and called the process “Spam Finder.” Basically, you enter your domain, click the referring domains tab, sort by Trust Flow first, and then sort by Citation Flow. The results will surface domains with low Trust Flow and high Citation Flow, a combination that tends to correlate with spammy domains.

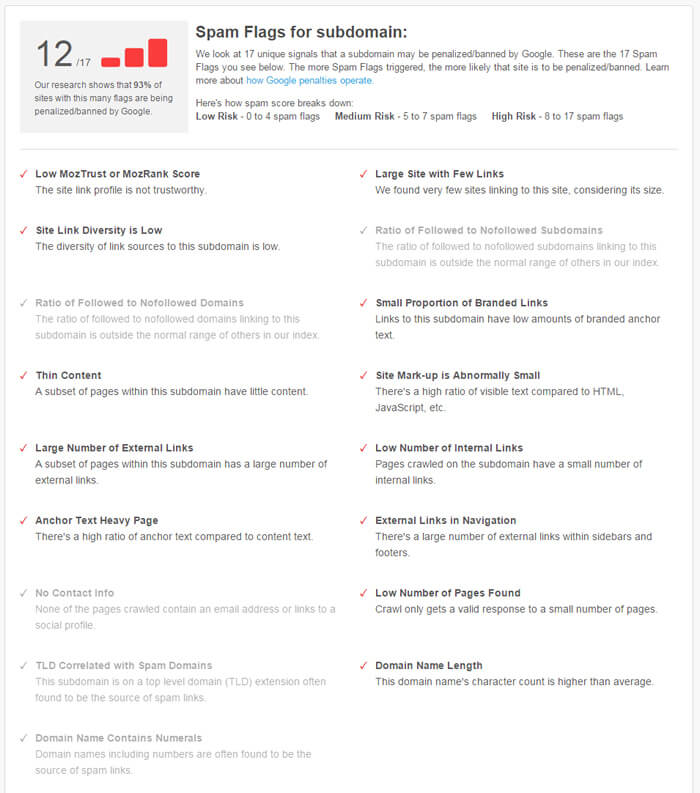
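The “low Trust Flow, high Citation Flow” pattern can also be checked on exported referring domain data. This sketch flags domains whose Trust Flow is small relative to their Citation Flow. The ratio cut-off of 0.3, the minimum Citation Flow of 20, the `ReferringDomain` type and the sample values are all hypothetical illustrations, not Majestic’s own method or thresholds.

```python
from dataclasses import dataclass


@dataclass
class ReferringDomain:
    domain: str
    trust_flow: int
    citation_flow: int


def spam_finder(domains: list[ReferringDomain], max_ratio: float = 0.3,
                min_citation_flow: int = 20) -> list[tuple[str, float]]:
    """Return domains with low Trust Flow relative to high Citation Flow,
    most lopsided first, as (domain, trust_flow / citation_flow) pairs."""
    suspects = []
    for d in domains:
        if d.citation_flow < min_citation_flow:
            continue  # Citation Flow too low for the pattern to mean much
        ratio = d.trust_flow / d.citation_flow
        if ratio <= max_ratio:
            suspects.append((d.domain, round(ratio, 2)))
    return sorted(suspects, key=lambda pair: pair[1])


sample = [
    ReferringDomain("strong-news.example", 55, 60),
    ReferringDomain("link-farm.example", 4, 42),
    ReferringDomain("article-spinner.example", 9, 35),
    ReferringDomain("tiny-blog.example", 2, 8),
]
print(spam_finder(sample))
# [('link-farm.example', 0.1), ('article-spinner.example', 0.26)]
```

Domains that do not show the pattern, like the first one in the sample, are the ones worth a second look if they sit in your disavow file.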

In addition, Moz recently released Spam Score, which is based on 17 different signals. Enter your domain in Open Site Explorer, click the “Spam Analysis” tab, organize the results by subdomain or root domain, and view the spam scores. You can click each score for detailed information.

Again, this is not perfect, and you should not take a certain score and simply make decisions based on it. Instead, use it as a barometer and flag domains for further analysis.
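To use the score as a barometer rather than a verdict, you could bucket disavowed domains by score and review each bucket in turn. This sketch assumes the score is a count of flagged signals out of the 17 mentioned above; the thresholds, bucket names and sample scores are hypothetical. A low score on a disavowed domain is the interesting case here, since it may point to a false positive.

```python
def triage(spam_scores: dict[str, int], unclear_at: int = 5,
           spam_at: int = 8) -> dict[str, list[str]]:
    """Bucket disavowed domains by spam score to decide what to review first.

    Assumes scores count flagged signals out of 17. Thresholds are hypothetical.
    Nothing is removed or disavowed automatically; every bucket gets a manual look.
    """
    buckets = {"likely_spam": [], "unclear": [], "possible_false_positive": []}
    for domain, score in sorted(spam_scores.items(), key=lambda item: item[1], reverse=True):
        if not 0 <= score <= 17:
            raise ValueError(f"{domain}: expected a score from 0 to 17, got {score}")
        if score >= spam_at:
            buckets["likely_spam"].append(domain)
        elif score >= unclear_at:
            buckets["unclear"].append(domain)
        else:
            buckets["possible_false_positive"].append(domain)
    return buckets


scores = {"link-farm.example": 11, "regional-news.example": 1, "coupon-directory.example": 6}
print(triage(scores))
```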

5. Look At SEMrush & Searchmetrics Trending

When checking your disavow file, you can spot-check specific domains in SEMrush or Searchmetrics to view their organic search trending over time.

For our purposes, you are looking to see if any data shows up. If a domain is extremely spammy, you’ll typically see no traffic from Google organic at all (or it will sharply drop off to no traffic at some point).

SEMrush Google Organic Search Trending

Searchmetrics Google Organic Search Trending
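If you spot-check many domains, the two patterns described above (no organic traffic at all, or a drop to zero that sticks) can be checked on monthly figures copied from either tool. This is a sketch under stated assumptions: the input is a list of monthly estimated Google organic traffic values, oldest first, and a “drop to zero” means the last three months are zero after earlier non-zero months. The function name and the three-month window are hypothetical choices.

```python
def traffic_pattern(monthly_traffic: list[int], tail: int = 3) -> str:
    """Classify an organic traffic series (oldest month first).

    Returns "no traffic" if every month is zero, "dropped to zero" if earlier
    months had traffic but the last `tail` months are all zero, else "has traffic".
    """
    if not monthly_traffic or all(v == 0 for v in monthly_traffic):
        return "no traffic"
    recent = monthly_traffic[-tail:]
    earlier = monthly_traffic[:-tail]
    if all(v == 0 for v in recent) and any(v > 0 for v in earlier):
        return "dropped to zero"
    return "has traffic"


print(traffic_pattern([0, 0, 0, 0, 0, 0]))              # no traffic
print(traffic_pattern([1200, 1500, 900, 0, 0, 0]))      # dropped to zero
print(traffic_pattern([300, 280, 310, 290, 305, 320]))  # has traffic
```

A domain with steady organic traffic is not proof of quality, but it deserves a closer look before it stays in your disavow file.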

Summary: Be Careful When Disavowing Domains

When dealing with unnatural links, you should use a disavow file as a last resort. Incorrectly adding domains that are natural can be extremely dangerous SEO-wise.

If strong domains are left in your disavow file, your site will have no chance of benefiting from any links on those domains. And if that’s happening across many domains, you could be seriously damaging your SEO performance. My recommendation is to review your disavow file today using the techniques listed above, then refine it so it only includes the links and domains that truly should be disavowed.

It’s important to be careful with that machete. You never know what you are going to cut.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
