Eye-Tracking Study: Everybody Looks At Organic Listings, But Most Ignore Paid Ads On Right

Interesting new data about searcher behavior from a recent User Centric eye-tracking study: Whether using Google or Bing, all 24 participants looked at the organic search results for their queries, but between 70% and 80% ignored the paid ads on the right side of the page. User Centric studied the search behavior of 24 “experienced […]

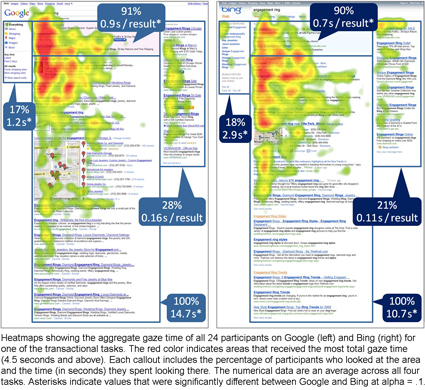

Interesting new data about searcher behavior from a recent User Centric eye-tracking study: Whether using Google or Bing, all 24 participants looked at the organic search results for their queries, but between 70% and 80% ignored the paid ads on the right side of the page.

User Centric studied the search behavior of 24 “experienced users” of both Google and Bing, all between 18 and 54 years old. They were asked to do eight searches — four on Google (with Google Instant turned off) and the other four on Bing.

The results? Here’s a table version of the diagram above.

| Bing | ||

| Organic Results | 100% viewed; 14.7 seconds total | 100% viewed; 10.7 seconds total |

| Top Paid Results | 91% viewed; 0.9 seconds/result | 90% viewed; 0.7 seconds/result |

| Right-side Paid Results | 28% viewed; 0.16 seconds/result | 21% viewed; 0.11 seconds/result |

| Left-side Column | 17% viewed; 1.2 seconds | 18% viewed; 2.9 seconds |

User Centric says there’s no significant statistical difference between the 28% of searchers who looked at Google’s right-side ads and the 21% who looked in the same place on Bing (as shown in row three above). Ads that appear above the organic results were viewed substantially more often than those in the right column and almost as often as the organic search results.

The various filters and refinements that both Google and Bing display on the left-side of the search results page were looked at even less than paid ads on the right: 18% for Bing and 17% for Google. Notably, time spent looking at Bing’s left column was more than twice on Google.

The main difference in activity was in time spent looking at organic search results; searchers on Google spent four more seconds looking there than Bing users did. The image example above is a search for “engagement ring” — both search engines provided a map with local results in the middle page along with numerous traditional “blue link” results. It looks like there may also be a news result near the top of the Google results. User Centric says one possible interpretation for the time difference is that users had more trouble finding the information they were looking for on Google, but it’s not clear what the reason was.

One other interesting stat: User Centric says only 25% of the study participants activated Bing’s automatic site previews, and each time it happened accidentally. Google also offers Instant Previews, but those require a click.

You can read more about the study on User Centric’s website.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.

Related stories