How Not To Screw Up SEO On A JavaScript-Intense Site

In the search engine optimization (SEO) world, confusion abounds as to how -- and whether -- to employ JavaScript. Columnist Neil Patel argues that it can be done and discusses how JavaScript can be applied in safe, SEO-friendly ways.

The phrase “SEO-friendly JavaScript” sounds like an oxymoron. For years, webmasters, developers and SEOs have fretted and wrung their hands in worry, wondering, “Will Google index my JavaScript site?”

Google hasn’t always been very helpful in answering the question definitively. Prior to October 2014, Google’s webmaster guidelines stated that “search engine spiders may have trouble crawling your site” if using “fancy features such as JavaScript.” Though the guidelines have since been updated to reflect Google’s improved crawling and indexing capabilities, the long-standing issues surrounding JavaScript caused SEO-conscious developers to shy away from using it at all.

Full libraries of content from Google about JavaScript suggest that Google is not the enemy of JavaScript. But what about crawling and indexing? Does Google like JavaScript or not? Rather than wring our hands and wait for an answer, we need solutions. We want to have our JavaScript and SEO, too. I believe it’s possible.

You should not completely ignore the issues, however. I’ve dealt with clients who have entire sections of their site totally unindexed due to JavaScript glut. It’s a serious risk to put a vast amount of work into building an entire site or Web app, only to discover that it can’t be indexed.

The approaches described below are not how-tos, but suggestions. Additionally, they include some degree of overlap. Find a method that works for your site, and go forward with confidence.

Fallback Content

Fallback content is just what it sounds like: content that displays in lieu of your JavaScript page. It is coded in plain HTML, and it is what gets parsed when the requesting client can’t support the JavaScript version, as happens with some JavaScript sites.

Doing fallback pages correctly can be tricky and time-consuming. You can choose a very basic approach to fallback content by featuring only the most essential text and formatting features.

This approach allows the user experience that you want through JavaScript, while giving the search spiders the indexable HTML content they prefer.
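As a rough sketch of the basic approach, the helper below builds a page whose essential text sits in ordinary HTML, with the script loaded afterward to take over the container. The function names (`renderWithFallback`, `escapeHtml`), the `#app` container and the `/js/app.js` path are all hypothetical, chosen only for illustration; your templating system will look different.

```javascript
// Hypothetical helper: escape text before placing it in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical helper: the essential content is written as plain HTML inside
// the container that the JavaScript app later enhances or replaces, so a
// client that never runs the script still receives the text.
function renderWithFallback({ title, summaryHtml, scriptSrc }) {
  return [
    '<!DOCTYPE html>',
    '<html>',
    `<head><title>${escapeHtml(title)}</title></head>`,
    '<body>',
    '  <div id="app">',
    `    <h1>${escapeHtml(title)}</h1>`,
    `    ${summaryHtml}`,
    '  </div>',
    '  <noscript><p>Interactive features need JavaScript, but the content above is complete.</p></noscript>',
    `  <script src="${escapeHtml(scriptSrc)}" defer></script>`,
    '</body>',
    '</html>',
  ].join('\n');
}

const page = renderWithFallback({
  title: 'Pricing & Plans',
  summaryHtml: '<p>Three plans, billed monthly.</p>',
  scriptSrc: '/js/app.js',
});
```

The point of the design is ordering: the text a spider needs appears in the markup before any script is referenced, so nothing about indexing depends on the script running.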

Unobtrusive JavaScript

Some users, and we’ll include spiders in this group, can’t access your site’s front-loading JavaScript. The result is crappy UX and abysmal SEO.

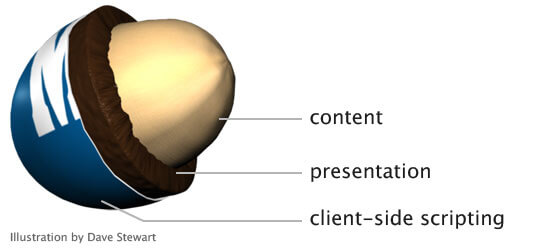

Another potential solution is unobtrusive JavaScript. With unobtrusive JavaScript, you code your JavaScript functionality separately from the site’s content. You can think of it as a three-ingredient recipe that you mix in the following order:

- HTML (content and page framework)

- CSS (style)

- JavaScript (interactivity)

Each ingredient adds an important part of the recipe but does so without obstructing any level of accessibility or crawlability.

Image from SixRevisions.com

The client accessing the site — let’s say the search spider — tastes the HTML content first. It’s SEO-rich and wonderfully digestible. The JavaScript and CSS begin loading next, allowing the human client to see the page in all its glory.

Here’s how A List Apart diagrams it:

In this diagram, the content is HTML. This content is then coated with CSS (“presentation”), and then JavaScript (“client-side scripting”) comes along to make everything better.

An alternative way to think of unobtrusive JavaScript is this: You don’t build a JavaScript page. Instead, you build an HTML page, and integrate JavaScript using external scripts for select behaviors (e.g., a menu). Armando Roggio has a great step-by-step example on GetElastic.com.
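To make the "external scripts for select behaviors" idea concrete, here is a minimal sketch of a menu script. It assumes the HTML already contains a working navigation list, and that each expandable item is marked with a `data-menu-toggle` attribute and is immediately followed by its submenu; that attribute name and the `enhanceMenu` function are my own illustrative choices, not part of any library.

```javascript
// menu.js, loaded with <script src="menu.js" defer></script>.
// The markup carries no inline onclick handlers; all behavior is attached here.
function enhanceMenu(root) {
  let enhanced = 0;
  root.querySelectorAll('[data-menu-toggle]').forEach((toggle) => {
    const submenu = toggle.nextElementSibling;
    if (!submenu) return; // nothing to collapse, so leave the markup alone

    // Collapse the submenu only now that we know JavaScript is running.
    // Without the script, the full menu stays visible as ordinary HTML.
    submenu.hidden = true;
    toggle.setAttribute('aria-expanded', 'false');

    toggle.addEventListener('click', (event) => {
      event.preventDefault();
      submenu.hidden = !submenu.hidden;
      toggle.setAttribute('aria-expanded', String(!submenu.hidden));
    });
    enhanced += 1;
  });
  return enhanced;
}

// Only wire things up when a browser DOM is actually present.
if (typeof document !== 'undefined') {
  document.addEventListener('DOMContentLoaded', () => enhanceMenu(document));
}
```

Because the links and nested lists exist in the HTML before this file loads, a client that never executes it still gets every menu link.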

Progressive Enhancement (PE)

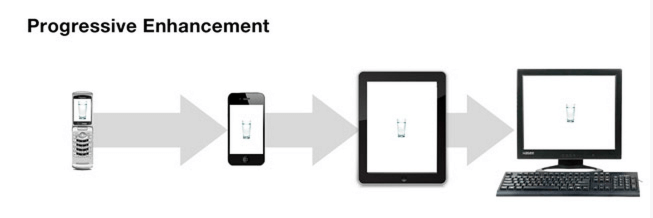

Using progressive enhancement pages allows you to create a level of UX for each level of browser functionality and bandwidth. This technique can work hand-in-hand with the unobtrusive JavaScript methodology above.

Progressive Enhancement is mostly a methodology, rather than an actual goal. One of its biggest advantages is that it is geared to be mobile-friendly.

Image from DeepBlue.com

Instead of featuring your potentially SEO-unfriendly JavaScript front and center, you would create a page version at the most basic level of HTML. Then you would create another version of the page with more advanced features, adding in your JavaScript.

The coding methodology behind PE is labor-intensive, because the page has to work at every level of capability, from bare HTML up to the fully scripted version. But the upside for SEO is clear. The content, regardless of the requesting client, is fully available to spiders. Because the basic content of the page is HTML, it’s readily picked up and indexed by any search spider.
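One common way to layer the more advanced features on top of the basic HTML is feature detection: check what the client can do, then switch on only the enhancements it supports. The sketch below is an assumption about how that might be organized, not a prescribed method; `detectEnvironment`, `planEnhancements` and the layer names are hypothetical.

```javascript
// Inspect a window-like object and report which capabilities are present.
function detectEnvironment(win) {
  return {
    canQuery: Boolean(win.document && typeof win.document.querySelector === 'function'),
    canFetch: typeof win.fetch === 'function',
    canObserve: typeof win.IntersectionObserver === 'function',
  };
}

// Decide which layers to turn on. The HTML baseline is always there;
// everything else is added only when the client supports it.
function planEnhancements(env) {
  const layers = ['baseline-html'];
  if (env.canQuery) layers.push('interactive-menu');
  // Infinite scroll needs both background loading and scroll observation.
  // Clients without them keep using the ordinary "next page" links.
  if (env.canQuery && env.canFetch && env.canObserve) layers.push('infinite-scroll');
  return layers;
}

// In a browser you would pass the real window object:
//   const layers = planEnhancements(detectEnvironment(window));
const spiderLike = planEnhancements(detectEnvironment({}));
const modernBrowserLike = planEnhancements(
  detectEnvironment({
    document: { querySelector() {} },
    fetch() {},
    IntersectionObserver: function () {},
  })
);
```

A client that exposes none of these features ends up with the baseline layer alone, which is the same indexable HTML everyone else starts from.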

Serving Search Engines Alternative Page Versions

In the case of JavaScript Web apps, serving different page versions to the search spiders is sometimes the only way to get the content indexed.

GASP! Is this cloaking? Actually, yes.

And isn’t cloaking one of the seven original sins of SEO? Yes again. But in this case, the cloaking is white-hat and healthy.

By way of disclaimer, I don’t typically recommend this methodology first. I consider it a last resort only suggested for applications that are exclusively JavaScript-based.

When your page or app is requested by a spider, you forward that request to a separate application that renders the page to static, crawlable HTML and serves it back to the spider. Third-party applications such as BromBone and Prerender can facilitate the delivery of status codes and headers.
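The routing decision itself can be sketched in a few lines. Everything specific here is an assumption for illustration: the crawler list is partial, `www.example.com` stands in for your site, and the `prerender.internal.example/render?url=` endpoint is a made-up rendering service, not the actual API of BromBone or Prerender.

```javascript
// Partial, illustrative list of crawler user-agent tokens.
const CRAWLER_PATTERN = /googlebot|bingbot|yandex|baiduspider|duckduckbot/i;
// Static files never need rendering, whoever asks for them.
const ASSET_PATTERN = /\.(js|css|png|jpe?g|gif|svg|ico|woff2?)$/i;

// Hypothetical origins, for illustration only.
const SITE_ORIGIN = 'https://www.example.com';
const RENDER_ENDPOINT = 'http://prerender.internal.example/render?url=';

function isCrawler(userAgent) {
  return CRAWLER_PATTERN.test(userAgent || '');
}

// Decide where a request should go: the normal JavaScript app, or the
// separate application that returns a rendered HTML snapshot.
function routeRequest({ url, userAgent }) {
  const path = url.split('?')[0];
  if (!ASSET_PATTERN.test(path) && isCrawler(userAgent)) {
    return {
      target: 'prerender',
      upstream: RENDER_ENDPOINT + encodeURIComponent(SITE_ORIGIN + url),
    };
  }
  return { target: 'app', upstream: url };
}

const forSpider = routeRequest({
  url: '/products?page=2',
  userAgent: 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',
});
const forHuman = routeRequest({
  url: '/products?page=2',
  userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36',
});
```

Whatever does the rendering, the snapshot handed to the spider should carry the same content a human visitor sees; that is what keeps this on the white-hat side of cloaking.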

Here’s how it works.

Image from Nodejsmongodb.com.

If you choose this method, make sure to slavishly adhere to Google’s guidelines. Here is the official statement of how Google’s crawlers will access dynamic content.

Conclusion

There are a lot of things to love about JavaScript. It’s beautiful, powerful, and delivers a stellar user experience. From infinite scrolling pages to powerful apps, JavaScript is still a great option for an awesome Web experience.

But unless it’s being crawled and indexed, it’s useless. If you’re going the JavaScript route — or already have — make sure you follow an SEO procedure that makes the most sense for your site.

What techniques do you prefer for SEO-friendly JavaScript?

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
