Replace (Not Provided) With Behavioral Analysis & Fuzzy Matching To Boost SEO

Worried about your site's bounce rate? Columnist Chris Liversidge details how to identify and fix problem pages using Google Analytics and Webmaster Tools.

Your search engine results page (SERP) bounce rate — the rate at which people click your website’s listing from search results, then click the “Back” button to return to the search results — can seriously impact your ability to rank in the top positions for target search terms.

Yet, Google Webmaster Tools doesn’t provide any immediately obvious ways to discover if SERP bounce is a problem for your site.

Here’s how to use a blend of Google Webmaster Tools (GWT) and Google Analytics (GA) to find your problem pages, and the search terms they perform for, that cause your site visitors to bounce.

Oh, and we’ll also be replacing the data removed by (not provided) on the way…

Step 1: Do You Have A Problem?

Before you spend time building out analyses on what terms and ranking pages are causing you the most pain, let’s use a quick and easy report to identify if you’re likely to have an issue with SERP bounce in the first place.

Break out your rollup Google Analytics profile for sites and countries you want to analyse, and jump into Behaviour > Behaviour Flow.

Set a date range that will give you decent volume, but which is less than three months (to match up with GWT data later). Create a segment for organic traffic by clicking + Add Segment and selecting “Organic Traffic” from the System segment.

What we’re looking for here is a trend showing significant drop-off from non-homepage pages. Why non-homepage? Well, your homepage will rank for all sorts of terms, many of which you’re not actively targeting. However, if you have even the very basics in place for your optimisation campaign, you’ll have plenty of content on-site targeted at your mid- and long-tail key phrase targets.

These pages should show up strongly in this report. We can then check whether they have a generally high exit rate as starting pages, which would highlight a propensity for your organic traffic to leave your landing pages quickly.
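If you’d rather check the numbers than eyeball the flow diagram, the comparison boils down to a session-weighted exit rate for the homepage versus everything else. The sketch below assumes you have already copied starting-page sessions and drop-offs out of the report into simple tuples; the function names and sample figures are hypothetical.

```python
from typing import List, Tuple

# (landing page path, organic sessions, drop-offs at that starting page)
Row = Tuple[str, int, int]

def exit_rate(rows: List[Row]) -> float:
    """Session-weighted exit rate: total drop-offs divided by total sessions."""
    sessions = sum(s for _, s, _ in rows)
    dropoffs = sum(d for _, _, d in rows)
    return dropoffs / sessions if sessions else 0.0

def split_exit_rates(rows: List[Row], homepage: str = "/") -> Tuple[float, float]:
    """Return (homepage exit rate, non-homepage exit rate)."""
    home = [r for r in rows if r[0] == homepage]
    other = [r for r in rows if r[0] != homepage]
    return exit_rate(home), exit_rate(other)

# Made-up numbers, purely to show the calculation.
rows = [("/", 1000, 300), ("/blue-widgets", 200, 150), ("/red-widgets-sale", 100, 80)]
home, other = split_exit_rates(rows)
print(f"homepage {home:.0%}, non-homepage {other:.0%}")
```

Weighting by sessions stops a handful of tiny pages with 100% exit rates from dominating the overall picture.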

Here’s an example of a site that likely has landing page issues in the long tail.

Disclaimer: This is a very broad-brush measure of whether or not you have a problem, but it’s quick and easy to do and will save you from working through the analysis below only to find marginal returns.

Step 2: Discover What’s Failing

Okay, so we have an issue with exit rates generally for long-tail organic. Let’s combine Webmaster Tools data and landing page exit rates to find our pain points and build a priority list of pages to fix.

First, in the current Behaviour Flow report, you can click on your problem group to get a list of the worst-performing URLs within it, with a weighted sort by volume and exit rate.

Regardless of the depth of analysis you’re about to undertake, these pages are basket cases.

This is your hotlist of pages to fix. If you do nothing else with this article’s advice, make sure you review and (if necessary) update the <title>, meta description and critical render path content of these pages. (Check out QueryClick’s 101 SEO guide if you’re unsure where to start.)

Staying in Google Analytics, jump over to Behaviour > Site Content > Landing Pages. With your organic-only segment applied, you can bottom out this list to whatever depth you’d like. I generally pull the top 1,000 URLs, filter out the homepage, then export to CSV.
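The export-and-filter step can also be scripted. This is a minimal sketch assuming a CSV with hypothetical column headers “Landing Page”, “Sessions” and “Exit Rate” (rename them to match your actual export). GA’s own weighted sort is not reproduced here; ranking by estimated exits (sessions × exit rate) is a swapped-in, cruder way to weigh volume against exit rate.

```python
import csv
import io

def load_landing_pages(csv_text, top_n=1000, homepage="/"):
    """Read a landing-page export, drop the homepage, keep the top_n pages by sessions."""
    rows = []
    for r in csv.DictReader(io.StringIO(csv_text)):
        page = r["Landing Page"].strip()
        if page == homepage:
            continue
        sessions = int(r["Sessions"].replace(",", ""))         # "5,000" -> 5000
        exit_rate = float(r["Exit Rate"].rstrip("%")) / 100    # "35.00%" -> 0.35
        rows.append({"page": page, "sessions": sessions, "exit_rate": exit_rate})
    rows.sort(key=lambda r: r["sessions"], reverse=True)
    return rows[:top_n]

def prioritise(rows):
    """Crude stand-in for a weighted sort: rank by estimated exits (sessions x exit rate)."""
    return sorted(rows, key=lambda r: r["sessions"] * r["exit_rate"], reverse=True)

# Illustrative export; column names and figures are hypothetical.
sample = """Landing Page,Sessions,Exit Rate
/,"5,000",35.00%
/blue-widgets,800,40.00%
/red-widgets-sale,300,90.00%
/widget-repair-guide,1200,20.00%
"""
for r in prioritise(load_landing_pages(sample)):
    print(r["page"], round(r["sessions"] * r["exit_rate"]))
```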

Please note: If you know your URLs don’t include key phrase targets (or are generally very messy), you may want to stop here, take your list of problem pages, and work through improving them in line with Step 3 below, relying on hand assessment of what the target term for each URL should be.

You will at least be working on your biggest problem pages first, so this is still an excellent exercise for improving your SERP bounce rate, and therefore your ability to rank in the top three.

If, however, you have worked hard to get concise, key phrase-rich URLs in place, read on to target your effort more accurately.

Switch into Google Webmaster Tools and go to your Search Traffic > Search Queries report. Set the dates to the same time period as your Google Analytics report, filter out brand terms, and export the table.

Take both these data dumps and pop them into an Excel document in separate tabs. Create a new, third tab into which you pull landing page and session data from GA, and query term data from GWT.

Now use Excel’s SUBSTITUTE function to replace URL parameters and separators with a blank space. You can find lookup libraries online for this, or simply insert the most common characters, such as ? / - & etc. I nest my SUBSTITUTEs to do all the replacing in a single cell, and also wrap LOWER and TRIM around them to help normalise the result.

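To remove internal search URLs, I also add an IFERROR exception: SEARCH returns an integer (the match position) wherever the string “search” is found, replacing the URL with a number, while IFERROR passes the original URL through wherever it isn’t found.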

Your formula will then look something like this (where “A3” is your URL cell):

=TRIM(LOWER(SUBSTITUTE(SUBSTITUTE(SUBSTITUTE(SUBSTITUTE(IFERROR(SEARCH("search",A3),A3),"/en/",""),"/"," "),"-"," "),"?q="," ")))

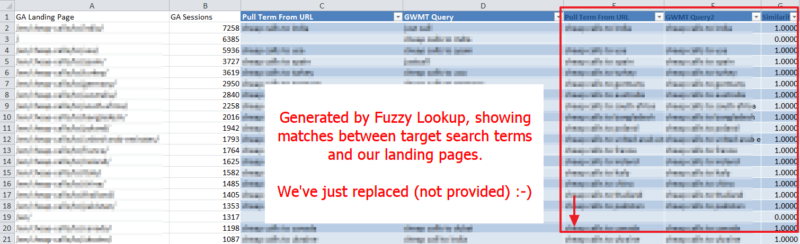
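For anyone doing this outside Excel, here is a rough Python rendering of the formula above. It applies the same substitutions in the same order, then lowercases and collapses whitespace as LOWER and TRIM do. One deliberate difference: rather than turning internal search URLs into an integer via IFERROR and SEARCH, the hypothetical url_to_terms function returns None so those rows can simply be dropped.

```python
from typing import Optional

def url_to_terms(url: str) -> Optional[str]:
    """Approximate the nested SUBSTITUTE/LOWER/TRIM formula in Python.

    Internal search URLs return None instead of the formula's integer trick.
    """
    if "search" in url.lower():  # Excel's SEARCH is case-insensitive
        return None
    text = url.replace("/en/", "")  # SUBSTITUTE is case-sensitive, applied before LOWER
    # Same substitutions as the formula; add pairs such as ("&", " ") if your URLs need them.
    for old, new in (("/", " "), ("-", " "), ("?q=", " ")):
        text = text.replace(old, new)
    # LOWER, then TRIM (which also collapses runs of inner spaces)
    return " ".join(text.lower().split())

print(url_to_terms("/en/blue-widgets/sale/"))
print(url_to_terms("/Search?q=widgets"))
```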

Next up, we run a “similarity” calculation between the search term set and our cleaned-up URL terms to find close matches. For this, you’ll need to install Microsoft’s Fuzzy Lookup add-in for Excel.

Turn both columns into Excel tables with CTRL+L, then apply the fuzzy lookup to get something like the below.

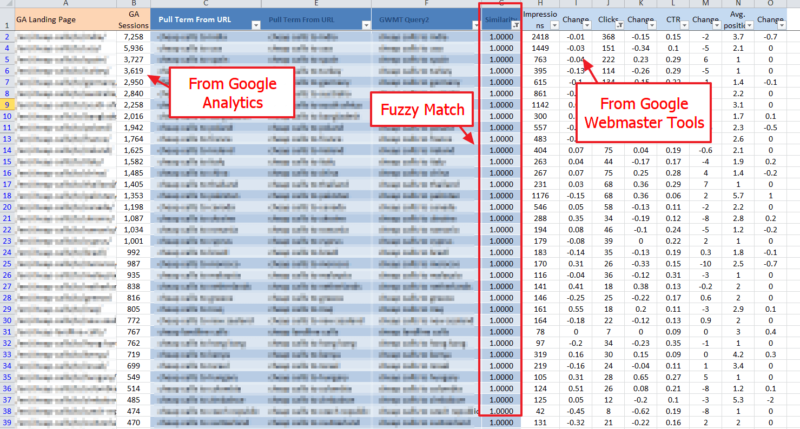
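The Fuzzy Lookup add-in does the matching inside Excel. To show the idea in code, the sketch below swaps in a plain word-token Jaccard similarity (shared words divided by all distinct words). This is not the add-in’s algorithm, so its scores won’t line up exactly with what Excel reports; the function names and sample strings are hypothetical.

```python
from typing import List, Tuple

def jaccard(a: str, b: str) -> float:
    """Word-token Jaccard similarity in [0, 1]."""
    ta, tb = set(a.split()), set(b.split())
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)

def best_matches(url_terms: List[str], queries: List[str]) -> List[Tuple[str, str, float]]:
    """For each cleaned URL, return (url terms, closest query, similarity)."""
    out = []
    for u in url_terms:
        best = max(queries, key=lambda q: jaccard(u, q))  # first query wins on ties
        out.append((u, best, jaccard(u, best)))
    return out

queries = ["blue widgets", "cheap blue widgets", "red widget sale", "widget repair"]
urls = ["blue widgets sale", "red widgets sale", "widget repair guide"]
for u, q, s in best_matches(urls, queries):
    print(f"{u!r} -> {q!r} ({s:.2f})")
```

Note how “widgets” versus “widget” halves the second score: word-level matching is strict, which is one more reason to review matches by hand before settling on a threshold.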

Yes, that’s right: we’ve just brought search term data back into our GA data, filling the gap left by (not provided).

We can now use a lookup to find all the landing pages that match each exact search term the site performed for.
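The lookup itself is a straightforward join. Below is a minimal sketch, assuming each fuzzy match has been carried through as a (page path, query, similarity) triple and that GA and GWT metrics sit in dictionaries keyed by page and query respectively; all names, keys and numbers are illustrative.

```python
from collections import defaultdict

def pages_by_query(matches):
    """Group fuzzy matches so each query lists every landing page matched to it."""
    grouped = defaultdict(list)
    for page, query, similarity in matches:
        grouped[query].append((page, similarity))
    return dict(grouped)

def join_master(matches, ga_pages, gwt_queries):
    """VLOOKUP-style join of each match with its GA page and GWT query metrics.

    Matches whose page or query is missing from either lookup are skipped.
    """
    master = []
    for page, query, similarity in matches:
        if page in ga_pages and query in gwt_queries:
            master.append({"page": page, "query": query, "similarity": similarity,
                           **ga_pages[page], **gwt_queries[query]})
    return master

matches = [("/blue-widgets-sale", "blue widgets", 0.67),
           ("/blue-widgets", "blue widgets", 1.0),
           ("/red-widgets-sale", "red widget sale", 0.5)]
ga_pages = {"/blue-widgets-sale": {"sessions": 120, "bounce_rate": 0.8},
            "/blue-widgets": {"sessions": 300, "bounce_rate": 0.4}}
gwt_queries = {"blue widgets": {"impressions": 5000, "clicks": 250, "position": 4.2}}

print(pages_by_query(matches)["blue widgets"])
for row in join_master(matches, ga_pages, gwt_queries):
    print(row)
```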

In addition, we can pull in average click-through rate (CTR) expectations to build a master sheet of SERP behaviour covering the two key behavioural elements affecting SEO: SERP bounce rate and SERP CTR.
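Comparing actual CTR against an expected CTR for the position is simple arithmetic: clicks divided by impressions, minus the benchmark for the rounded average position. The sketch below takes the benchmark curve as an input, because which curve to trust is your call; the values in the example are placeholders, not real benchmark data, and ctr_gap is a hypothetical helper.

```python
from typing import Dict, Optional

def ctr_gap(clicks: int, impressions: int, position: float,
            expected_ctr: Dict[int, float]) -> Optional[float]:
    """Actual CTR minus expected CTR for the average position.

    Returns None when there are no impressions or no benchmark for that position.
    """
    if impressions == 0:
        return None
    bucket = int(position + 0.5)  # round half up to the nearest whole position
    expected = expected_ctr.get(bucket)
    if expected is None:
        return None
    return clicks / impressions - expected

# Placeholder curve only: substitute the benchmark you actually use.
placeholder_curve = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}
print(ctr_gap(250, 5000, 4.2, placeholder_curve))  # negative means underperforming
```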

Finally, set a minimum match threshold for your fuzzy match. I find a similarity of around 0.8 to 1 gives very solid results, but this partly depends on the state of your URLs, so review your results to find a threshold you’re happy with.

Filter further by only including queries whose impressions drove clicks, then use a weighted sort by highest SERP impressions and highest bounce rate. This should leave you with a solid, clean and prioritised data set of problem pages to work with.
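The final filter-and-sort can be sketched as follows. It keeps rows at or above the similarity threshold that received at least one click, then ranks by impressions × bounce rate. That product is an assumed stand-in for the weighted sort rather than a prescribed formula, and the row keys and sample rows are hypothetical.

```python
def problem_list(master, min_similarity=0.8):
    """Keep confident matches whose impressions drove clicks, worst first.

    Score = impressions x bounce rate, a simple stand-in for the weighted sort.
    """
    kept = [r for r in master
            if r["similarity"] >= min_similarity and r["clicks"] > 0]
    return sorted(kept, key=lambda r: r["impressions"] * r["bounce_rate"], reverse=True)

master = [
    {"page": "/blue-widgets", "query": "blue widgets", "similarity": 1.0,
     "impressions": 5000, "clicks": 250, "bounce_rate": 0.4},
    {"page": "/widget-repair-guide", "query": "widget repair", "similarity": 0.85,
     "impressions": 3000, "clicks": 90, "bounce_rate": 0.75},
    {"page": "/red-widgets-sale", "query": "red widget sale", "similarity": 0.5,
     "impressions": 2000, "clicks": 40, "bounce_rate": 0.9},   # below threshold
    {"page": "/green-widgets", "query": "green widgets", "similarity": 0.9,
     "impressions": 800, "clicks": 0, "bounce_rate": 0.0},     # no clicks
]
for r in problem_list(master):
    print(r["page"], r["query"])
```

The 0.8 default mirrors the threshold suggested for Fuzzy Lookup; if you compute similarity some other way, recalibrate it against your own results.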

We’ve filtered out the cruft and joined GA and GWT data to allow long-tail SERP bounce analysis.

Step 3: Solving SERP Bounce

I’ve already written about fixing SERP CTRs, so instead let’s look at the SERP bounce issue. The main cause here is a disconnect between the landing page content and the search term used.

Essentially, there’s likely to be a “broken promise” somewhere. For example, perhaps you’re implying a sale or discount via the meta description that’s either not shown on the landing page or not visible in the first three seconds or so. (Use EyeQuant to test for the latter.)

In those situations, adjust one or the other (meta description or content) to ensure properly aligned messaging. This removes the broken promise and will reduce bounce rates.

Alternatively, the page may not be solely focused on a long-tail intent, and this traffic (previously hidden behind “(not provided)”) is behaving poorly on the more general page. If the search term is important, build out a new page targeting the term and link to it from the currently performing page using appropriate anchor text. Quite quickly, you’ll find the new, better-targeted page takes over the ranking for your domain.

And that’s it. Hopefully you can see how blending Webmaster Tools long-tail query data with GA behaviour rates can supercharge the performance of your site for SEO. Happy fuzzing!

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
