Bing says it has been applying BERT since April

The natural language processing capabilities are now applied to all Bing queries globally.

Microsoft says Bing has been using BERT, a transformer-based language model, to improve the quality of its search results since April. These transformer models are now applied to every Bing query globally.

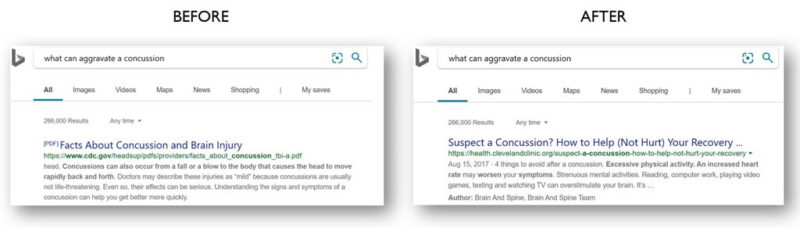

In the example above, Bing’s improved natural language processing lets it understand that the user wants to know what to avoid after suffering a concussion. Before these transformer models were added, the results focused on concussion symptoms instead.

Why we should care

Better natural language understanding should lead to more relevant search results for users. We now know that both Bing and Google are using transformer models like BERT to inform search results, particularly for longer queries. In fact, Bing began using BERT six months before Google announced its own BERT rollout. Bing says this has led to its biggest improvements in search quality in the last year.

“Unlike previous deep neural network (DNN) architectures that processed words individually in order, transformers understand the context and relationship between each word and all the words around it in a sentence,” explained Jeffrey Zhu, Bing Platform program manager. “Starting from April of this year, we used large transformer models to deliver the largest quality improvements to our Bing customers in the past year.”
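Bing hasn't published its model code, so the following is only a generic sketch, under stated assumptions, of scaled dot-product self-attention: the transformer mechanism Zhu describes, in which each word's representation is computed from its relationship to every other word in the sentence. The function names, the three-word sentence and its two-dimensional vectors are hypothetical. A real model such as BERT learns separate query, key and value projections, stacks many attention heads and layers, and uses vectors with hundreds of dimensions; this sketch skips all of that and uses the word vectors directly.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """Scaled dot-product self-attention with identity projections (Q = K = V = tokens).

    Returns (weights, outputs): weights[i][j] is how strongly word i attends to
    word j; outputs[i] is word i's new, context-aware vector.
    """
    d = len(tokens[0])
    scale = math.sqrt(d)
    weights: List[Vector] = []
    outputs: List[Vector] = []
    for q in tokens:
        # Compare this word with every word in the sentence, itself included.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Blend all word vectors, weighted by attention, into one context vector.
        outputs.append([sum(w[j] * tokens[j][i] for j in range(len(tokens)))
                        for i in range(d)])
    return weights, outputs


# Hypothetical toy embeddings for a three-word query fragment.
sentence = ["avoid", "after", "concussion"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, outputs = self_attention(vectors)
for word, row in zip(sentence, weights):
    print(word, [round(x, 2) for x in row])
```

Each printed row sums to 1 and shows how much one word draws on every word in the sentence, including itself. A left-to-right recurrent network, by comparison, has only the words before the current one available at each step.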

BERT builds on the deep learning capabilities behind Bing’s Intelligent Search features, such as Intelligent Answers, which draw on multiple sources; Intelligent Image Search, with object recognition; and hover-over definitions for uncommon words. These improvements could help Bing preserve or grow its share of the search market, which would keep it a viable platform for both organic and paid campaigns.

More on the announcement

- Microsoft implemented transformer models by using Azure N-series Virtual Machines with GPU accelerators and then performed further optimizations to execute the parallel computing at web-search scale.

Learn more about BERT

Here are some additional resources to expand your understanding of the latest breakthrough in natural language processing.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
