Google Webmaster Tools Crawl Errors: How To Get Detailed Data From the API


Earlier this week, I wrote about my disappointment that granular data (the number of URLs reported, the specifics of the errors…) was removed from Google Webmaster Tools. However, as I’ve been talking with Google, I’ve discovered that much of this detail is still available via the GData API. That this detail was available through the API wasn’t at all obvious to me from reading their blog post about the changes. The post included the following:

“For those who worry that 1000 error details plus a total aggregate count will not be enough, we’re considering adding programmatic access (an API) to allow you to download every last error you have, so please give us feedback if you need more.”

That led me to believe that the current API would provide only the same data available from the UI downloads. In fact, up to 100,000 URLs for each error, along with the details of most of what has gone missing, are available through the API now, so rejoice!

The data is a little tricky to get to, and the specifics of what’s available vary based on how you retrieve it. Two different sources provide detail about crawl errors:

- A download of eight CSV files, one of which is a list of all crawl errors

- A crawl errors feed, which enables you to programmatically fetch 25 errors at a time

(Thanks to Ryan Jones and Ryan Smith for help in tracking these details down.)

What this means is that different slices of data are available in four ways:

- User interface display

- User interface-based CSV download

- API-based download

- API-based feed

CSV Download

Eight CSV files are available through the API. You can download them all for a single site or for every site in your account at once, or request just a specific CSV and a specific date range. However, this support is not built into most of the available client libraries, so you’ll need to build it yourself or use the PHP client library (which seems to be the only one that has support built in). The CSV files are:

- Top Pages

- Top Queries

- Crawl Errors

- Content Errors

- Content Keywords

- Internal Links

- External Links

- Social Activity

For the topic at hand, let’s dive into the crawl errors CSV. It contains the following data:

- Up to 100,000 URLs for each type of error (rather than the 1,000 maximum available through the download link in the UI)

- The full list of URLs blocked by robots.txt (which is no longer available at all in the UI)

- Specifics of “not followed” errors (the UI reports only the status code returned by the URL, while the CSV includes what the actual problem was, such as “too many redirects”)

- Specifics of site-wide server errors (the UI no longer lists the specific URLs that returned the error or the specific error)

- Specifics about “soft 404s” (the UI doesn’t include the detail of the type of soft 404)

This file does not include details on crawl error sources (but that is available through the crawl errors feed, described below).
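As a rough illustration of working with the crawl errors CSV once you have it, the sketch below groups URLs by error type. The column names (`URL`, `Issue`, `Detail`) and the sample rows are assumptions made for the example, not the file's confirmed header, so adjust them to match what your download actually contains.

```python
import csv
import io
from collections import defaultdict


def group_by_issue(csv_text, url_col="URL", issue_col="Issue"):
    """Group URLs by error type. Column names are hypothetical defaults."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row[issue_col]].append(row[url_col])
    return dict(groups)


# Made-up sample rows standing in for a real crawl errors download.
sample = """URL,Issue,Detail
http://example.com/a,Not found,404 (Not found)
http://example.com/b,Not followed,Redirect error
http://example.com/c,Not found,404 (Not found)
"""

groups = group_by_issue(sample)
for issue, urls in sorted(groups.items()):
    print(issue, len(urls))
```

Counting per error type this way is also a quick check on whether a given error has hit the 100,000-URL ceiling.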

Crawl Errors Feed

It appears that the crawl errors feed request code is built into the Java and Objective C client libraries, but you’ll have to write your own code to request this if you’re using a different library. You can fetch 25 errors at a time and programmatically loop through them all. The information returned is in the following format:

    <atom:entry>
      <atom:id>id</atom:id>
      <wt:crawl-type>web-crawl</wt:crawl-type>
      <wt:issue-type>http-error</wt:issue-type>
      <wt:url>https://example.com/dir/</wt:url>
      <wt:detail>4xx Error</wt:detail>
      <wt:linked-from>https://example.com</wt:linked-from>
      <wt:date-detected>2008-11-17T01:06:10.000</wt:date-detected>
    </atom:entry>
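If you are writing your own code for the feed, each entry can be turned into a plain dictionary with Python's standard XML parser. This is a minimal sketch: the `wt` namespace URI is copied from the sample responses in this article and the Atom URI is the standard one, but verify both against a real response. The wrapping `atom:feed` element in the sample is added only so that the namespaces are declared.

```python
import xml.etree.ElementTree as ET

# Namespace URIs: "wt" is taken from this article's samples (an assumption
# to verify against a live response); "atom" is the standard Atom namespace.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "wt": "https://schemas.google.com/webmasters/tools/2007",
}


def _text(entry, tag):
    """Return the stripped text of the first matching child, or None."""
    node = entry.find(tag, NS)
    return node.text.strip() if node is not None and node.text else None


def parse_crawl_issues(feed_xml):
    """Convert every atom:entry in the XML into a dictionary."""
    root = ET.fromstring(feed_xml)
    issues = []
    for entry in root.iter("{%s}entry" % NS["atom"]):
        issues.append({
            "url": _text(entry, "wt:url"),
            "issue_type": _text(entry, "wt:issue-type"),
            "detail": _text(entry, "wt:detail"),
            "date_detected": _text(entry, "wt:date-detected"),
            # An entry may carry more than one linked-from element.
            "linked_from": [n.text.strip()
                            for n in entry.findall("wt:linked-from", NS)
                            if n.text],
        })
    return issues


sample = """<atom:feed xmlns:atom="http://www.w3.org/2005/Atom"
    xmlns:wt="https://schemas.google.com/webmasters/tools/2007">
  <atom:entry>
    <atom:id>id</atom:id>
    <wt:crawl-type>web-crawl</wt:crawl-type>
    <wt:issue-type>http-error</wt:issue-type>
    <wt:url>https://example.com/dir/</wt:url>
    <wt:detail>4xx Error</wt:detail>
    <wt:linked-from>https://example.com</wt:linked-from>
    <wt:date-detected>2008-11-17T01:06:10.000</wt:date-detected>
  </atom:entry>
</atom:feed>"""

issues = parse_crawl_issues(sample)
print(issues[0]["url"], "|", issues[0]["detail"])
```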

How the Data Differs

Let’s take a look at some real examples of how what you get from the API differs from the UI and the UI-based download.

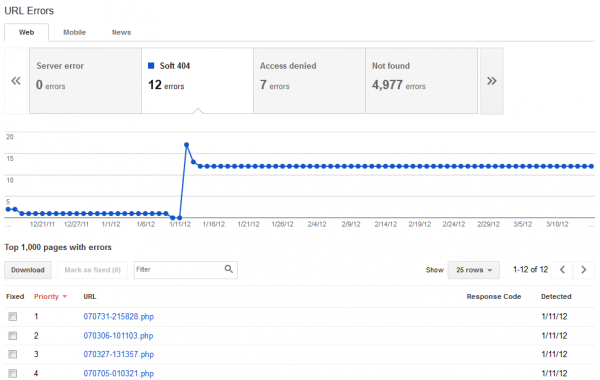

Number of Errors Shown

The UI shows the total count over time, but only lists up to 1,000 URLs for each error. The API-based CSV contains up to 100,000 URLs for each type of error. However, it gives you only the current snapshot of errors and doesn’t provide a total count of each error (if more than 100,000 exist for any given one). This is the same information you can get from the API-based crawl errors feed. The UI-based CSV also only shows you the current snapshot of errors and lists only up to 1,000 URLs (the same as the UI).

For searchengineland.com’s “not found” errors:

- The UI shows that Google encountered 4,981 URLs (down from around 8,000 in December) and displays 1,000 of them, along with the corresponding response code (and the list of sources via a popup):

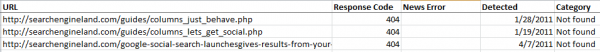

- The UI-based CSV lists 1,238 of them, along with the corresponding response code:

- The API-based CSV lists 2,867 of them, along with the corresponding response code and the number of incoming links (but not the sources):

- The API-based feed presumably would provide all 4,981 URLs (in 25 URL increments), the response code, and the list of sources.
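At 25 URLs per request, pulling all 4,981 errors from the feed would take 200 requests. The sketch below shows one way to structure that loop. It assumes the feed honors the standard GData `start-index` and `max-results` query parameters and that the feed URL follows the pattern visible in the entry IDs in this article. Authentication and the HTTP call itself are left out: `fetch_page` is a hypothetical callable you would supply.

```python
from urllib.parse import quote, urlencode

PAGE_SIZE = 25  # errors returned per request, per the article


def page_url(site_url, start, page_size=PAGE_SIZE):
    """Build a feed URL for one page (URL pattern is an assumption)."""
    site_id = quote(site_url, safe="")
    base = "https://www.google.com/webmasters/tools/feeds/%s/crawlissues/" % site_id
    return base + "?" + urlencode({"start-index": start, "max-results": page_size})


def fetch_all(fetch_page, page_size=PAGE_SIZE):
    """Yield every entry, requesting pages until a short page comes back.

    fetch_page(start, page_size) is a hypothetical function that returns
    the list of entries beginning at the 1-based index `start`.
    """
    start = 1
    while True:
        entries = fetch_page(start, page_size)
        yield from entries
        if len(entries) < page_size:
            break
        start += page_size


# Stand-in for the network: 4,981 fake errors served 25 at a time.
all_errors = ["url-%d" % i for i in range(4981)]
calls = []


def fake_fetch(start, size):
    calls.append(start)
    return all_errors[start - 1:start - 1 + size]


fetched = list(fetch_all(fake_fetch))
print(len(fetched), "errors in", len(calls), "requests")
print(page_url("http://searchengineland.com/", calls[-1]))
```

Stopping on the first short page means you don't need to know the total in advance, at the cost of one extra empty request when the total is an exact multiple of 25.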

I checked a site that the UI indicated had more than 100,000 of a particular error (257,065) and found that the corresponding API-based CSV file listed 100,005 of those. The UI-based CSV listed 1,999.

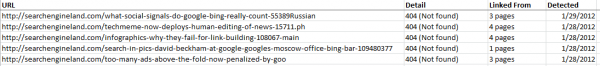

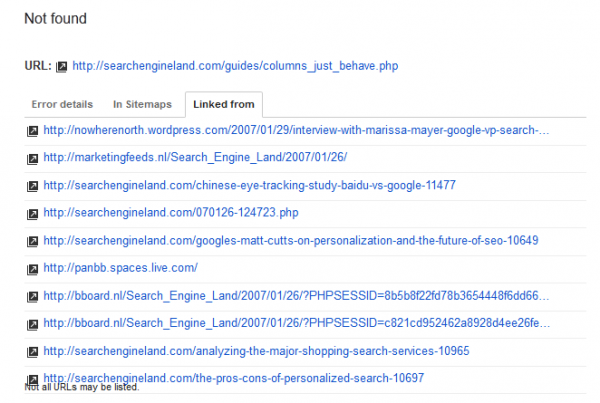

Error Sources

The UI shows error sources, but only at the individual URL level. Click a URL, then click the Linked From tab to see the list. You can’t download this list from the UI in aggregate or for an individual URL.

The API-based CSV indicates the number of sources linking to each URL (which can be useful as you can tackle issues with URLs that have a lot of links first), but doesn’t list what those sources are.
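To act on that link count, you could sort the CSV rows so the most-linked broken URLs come first. This is only a sketch: the column names `URL` and `Links` are hypothetical, as is the sample data.

```python
import csv
import io


def prioritize(csv_text, url_col="URL", links_col="Links", top=10):
    """Return (url, link_count) pairs, most-linked first.

    Column names are assumptions; match them to your actual file.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    ranked = [(row[url_col], int(row[links_col] or 0)) for row in rows]
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked[:top]


# Made-up sample rows for illustration.
sample = """URL,Links
http://example.com/old-page,3
http://example.com/popular-but-broken,42
http://example.com/orphan,
"""

ranked = prioritize(sample)
for url, links in ranked:
    print(links, url)
```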

The API-based feed provides details on the source for each URL. Below is an example from searchengineland.com:

    <atom:entry>
      <atom:id>https://www.google.com/webmasters/tools/feeds/http%3A%2F%2Fsearchengineland.com%2F/crawlissues/27</atom:id>
      <atom:updated>2012-03-19T17:18:18.907Z</atom:updated>
      <atom:category scheme='https://schemas.google.com/g/2005#kind' term='https://schemas.google.com/webmasters/tools/2007#crawl_issue_entry'/>
      <atom:title type='text'>Crawl Issue</atom:title>
      <atom:link rel='self' type='application/atom+xml' href='https://www.google.com/webmasters/tools/feeds/http%3A%2F%2Fsearchengineland.com%2F/crawlissues/27'/>
      <wt:crawl-type xmlns:wt='https://schemas.google.com/webmasters/tools/2007'>web-crawl</wt:crawl-type>
      <wt:issue-type xmlns:wt='https://schemas.google.com/webmasters/tools/2007'>not-found</wt:issue-type>
      <wt:url xmlns:wt='https://schemas.google.com/webmasters/tools/2007'>https://searchengineland.com/10-optimization-secrets-to-drive-more-mobile-traffic-from-facebook-114316/www.linkedin.com/in/brianklais</wt:url>
      <wt:date-detected xmlns:wt='https://schemas.google.com/webmasters/tools/2007'>2012-03-17T05:58:35.000</wt:date-detected>
      <wt:detail xmlns:wt='https://schemas.google.com/webmasters/tools/2007'>404 (Not found)</wt:detail>
      <wt:linked-from xmlns:wt='https://schemas.google.com/webmasters/tools/2007'>https://searchengineland.com/10-optimization-secrets-to-drive-more-mobile-traffic-from-facebook-114316/comment-page-1</wt:linked-from>
    </atom:entry>
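Because the feed names the linking page for each error, you can aggregate across entries to find the pages that generate the most broken links, something none of the other delivery mechanisms offer. The sketch below assumes the entries have already been parsed into dictionaries with `url` and `linked_from` keys (a structure chosen for this example). The second sample entry is invented.

```python
from collections import Counter


def top_sources(issues, n=10):
    """Count how many distinct broken URLs each source page links to."""
    counts = Counter()
    for issue in issues:
        # set() so a source listed twice for one URL is counted once.
        counts.update(set(issue["linked_from"]))
    return counts.most_common(n)


page = ("https://searchengineland.com/10-optimization-secrets-to-drive-"
        "more-mobile-traffic-from-facebook-114316/comment-page-1")

issues = [
    {"url": page.replace("comment-page-1", "www.linkedin.com/in/brianklais"),
     "linked_from": [page]},
    # Hypothetical second broken link found on the same page.
    {"url": "https://searchengineland.com/made-up-broken-link",
     "linked_from": [page, "https://example.com/some-other-page"]},
]

sources = top_sources(issues)
for source, count in sources:
    print(count, source)
```

In the searchengineland.com example above, the source is a comment page, which suggests a malformed link in a comment rather than a site-wide template problem.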

Date of Latest Crawl

As far as I can tell, this detail is available only in the UI (once you click on a URL):

In Sitemaps

This level of detail also seems to be only available in the UI (once you click on an individual URL and then the In Sitemaps tab). This information is also available in aggregate from the Sitemaps section of the UI (but not as a download — either from the UI or from the API).

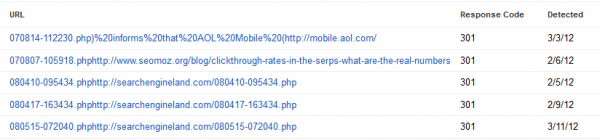

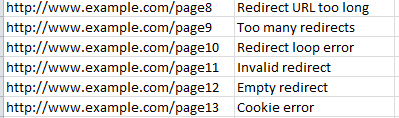

Not Followed Errors

The UI shows the URL’s response code (such as 301), which, as I noted in my earlier article, is somewhat misleading and not that useful for investigating the error. The report can be misread to mean that 301 response codes are errors. What this report actually provides is a list of URLs that returned either a 301 or 302 response code that Googlebot couldn’t follow due to a problem with the redirect.

The UI-based CSV provides similar data (although not the additional details available in the UI when you click the URL).

The API-based CSV lists “redirect error” for these specific URLs.

That particular error isn’t any more helpful than the response code, but in some cases, this file lists a more specific issue (this detail is one of the pieces of data that used to display in the UI). Possible values include:

For instance, here’s a (slightly obscured) example of a URL with a “redirect URL too long” error:

    https://www.example.org/A%20Category%20Page%0A/Www.Sample.Net
    %0A/Topic%20Topic1%0A/A%20Category%20Page%20Topic%20Topic1
    %0A/Topic%20Topic3%0A/Excercise%0A/Smoothies%0A/Topic2ix%0A/
    Topic%20Trainer%0A/Topic3%20Programs%20%0A/Topic%20Assessments
    %20%0A/Individual%20Or%20Group%20Topic3%20Topic1%20%0A/Topic4
    %20Guidance%20%0A/Category2%20Loss%20Supervision%20%0A/Category2
    %20Loss%0A/Support%20And%20Motivation%20%0A/Topic9%20Specific
    %20Category4%20%0A/Cardiovascular%20Category4%20%0A/Topic9%20
    Specific%20Category4%20%20%20%0A/Home%20Gym%20Selection%0A/
    Home%20Gym%20Use%0A/Topic12%0A/Topic10%20Topic15%20Topic12%0A/
    Workout%20Routines%0A/Category2%20Topic1%0A/Topic3%0A/Topic9%0A/
    Topic11%20Topic12%0A/Lean%0A/Lean%20Category3%0A/Nutrition%0A/
    Gift%20Topic13s%0A/Topic3%20And%20Wellness%0A/Category5%0A/Topic3
    %20Programs%20For%20All%20Ages%20%0A/Topic%20Assessments%20%0A/
    Individual%20Or%20Group%20Topic1%20%0A/Topic4%20Guidance%20%0A/
    Category2%20Loss%20Supervision%20%0A/Support%20And%20Motivation
    %20%20%0A/Cardio%20%0A/Topic9%20Specific%20Category4%0A/Category2
    %20Topic1%0A/Category2%20Topic14%20Routines%0A/Premier%20Topic%20
    Trainer%0A/Category2%20Loss%20Help%0A/Safe%20Category2%20Loss%0A/
    Wellness%0A/Nutrition%0A/Category3%20Topic3%0A/Exercise%20Health%0A/
    Category2%20Loss%20Product%0A/Category6ing%0A/Topic3%20Equipment%0A/
    Category2%20Loss%20Supplement%0A/Loose%20Category2%0A/Health%0A/
    Healthy%20Category6%0A/Fat%20Loss%0A/Category6%20Plan%0A/Online%20
    Topic3%20Program%0A/Topic3%20Center%0A/Gym%20Exercise%0A/Fast%20
    Category2%20Loss%0A/Lose%20Category2%20Fast%0A/6%20Topic15%20
    Topic12%0A/Home%20Gym%20Equipment%0A/Gym%0A/Topic3%20Course%0A/
    Topic3%20Exercise%0A/Gym%20Equipment%0A/Topic3%20Topic1%20Program%0A/
    Gym%0A/Exercise%20Program%0A/Category2%20Loss%20Category6%0A/Topic%20
    Topic3%0A/Exercise%0A/Sport%20And%20Topic3%0A/Topic3%20Class%0A/Topic
    %20Topic3%20Trainer%0A/Topic10%20Topic15%20Exercise%0A/Health%20And%20
    Topic3%0A/Topic3%20Australia%0A/Category2%20Loss%20Program%0A/Topic3%20
    Trainer%0A/Topic%20Topic1%20Topic16%0A/Topic3%0A/Kick%20Topic17%0A/
    6%20Topic15%0A/Category2%20Topic1%0A/Topic%20Trainers%0A/Category2%20
    Loss%0A/Topic%20Trainer%0A/Home%20Gym%0A/Topic%20Topic1%0A/Category6
    %0A/Quick%20Category2%20Loss%20%0A/Category2%20Loss%20Plan%0A/
    Category7%20Category2%20Loss%0A/Easy%20Category2%20Loss%0A/Category2
    %20Loss%20Tip%0A/Healthy%20Category2%20Loss%0A/Rapid%20Category2%20
    Loss%0A/Topic8%20135%0A/Category2%201

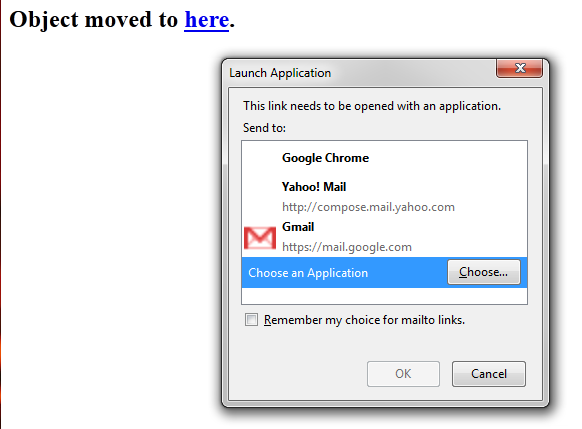

An “invalid” redirect brought me to this page (which was a 302 to a 404):

Empty redirects are those with no location information.

The API-based feed also provides these specifics as part of the feed.
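When you investigate one of these URLs yourself, it can help to record each hop of the redirect chain and label what went wrong. The sketch below is a local diagnostic only. The labels echo the ones mentioned in this article, but the thresholds (`max_hops`, `max_url_len`) are arbitrary assumptions, not Googlebot's actual limits, and the hops are supplied by hand rather than fetched.

```python
def classify_redirect_chain(hops, max_hops=5, max_url_len=2000):
    """Label a redirect chain, or return None if nothing looks wrong.

    hops: list of (status_code, location_header_or_None), in order.
    max_hops and max_url_len are illustrative guesses, not Google's limits.
    """
    redirects = 0
    for status, location in hops:
        if status not in (301, 302):
            break  # chain ended at a non-redirect response
        redirects += 1
        if not location:
            return "empty redirect"  # redirect with no location information
        if len(location) > max_url_len:
            return "redirect URL too long"
        if redirects > max_hops:
            return "too many redirects"
    return None


# Hand-written chains for illustration.
empty = [(302, None)]
healthy = [(301, "https://www.example.org/new-page"), (200, None)]
looping = [(302, "https://www.example.org/a")] * 8
too_long = [(301, "https://www.example.org/" + "Topic%20" * 400)]

for name, chain in [("empty", empty), ("healthy", healthy),
                    ("looping", looping), ("too long", too_long)]:
    print(name, "->", classify_redirect_chain(chain))
```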

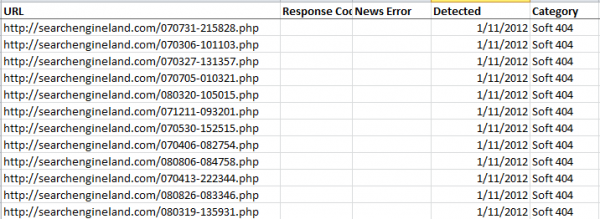

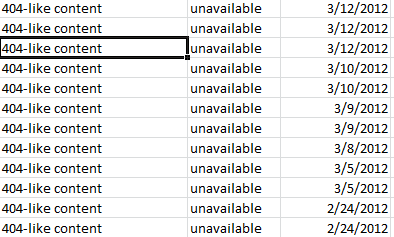

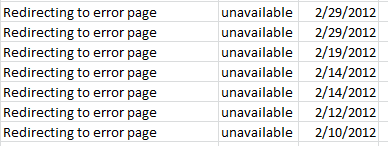

Soft 404s

Gone missing from both the UI and UI-based CSV are the specifics of soft 404s.

The API-based CSV still lists these details (as does the crawl errors feed), such as:

- 404-like content (the page returns a 200 response code, but seems to contain content from an error page)

- Redirect to an error page

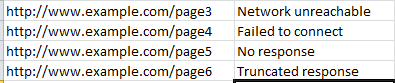

Site-Wide (Server) Errors

With the UI overhaul, Google shows the number of site-based (vs. URL-based) errors encountered over time, but not the specific URLs that triggered the errors, and has simplified the error messaging into three types: DNS, server connectivity, and robots.txt fetch. There is no corresponding download. (Google says when they encounter these types of errors, it typically means they’ll receive them for any URL on the site, since the problem is at a lower level.)

There’s some interesting data to be gleaned here that’s not apparent. For instance, if you hover over the dots, you see the total number of Googlebot fetch requests per day. (Unfortunately, you can only see this graph if Google encounters at least one site error during the reported time period, and there’s no way to see this number other than hovering.) For one site I looked at, the number of URLs crawled averaged around 250,000 per day. Then one day, 2% of requests returned a DNS error (2,866 of 152,528 requests). The following day, Googlebot made only 128 requests (all crawled successfully) and only 71 the day after that. This doesn’t match up exactly with what the crawl stats report shows:

Another site went from an average of 100,000 a day to 362 a day after 1% of requests returned a DNS error.
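The arithmetic behind the first example is worth spelling out, since a small error rate precedes a very large drop in crawling. This short sketch uses the figures quoted above. The 250,000 daily average is an approximation, so treat the second percentage as rough.

```python
def error_rate(errors, requests):
    """Share of fetch requests that failed, as a fraction between 0 and 1."""
    return errors / requests if requests else 0.0


# Figures from the first site described above.
dns_rate = error_rate(2866, 152528)

# Next-day volume compared with the approximate 250,000-per-day average.
next_day_share = 128 / 250000

print("DNS error rate: %.2f%%" % (dns_rate * 100))
print("Next-day crawl volume vs. average: %.3f%%" % (next_day_share * 100))
```

So just under 2% of requests failing was followed by crawl volume falling to a small fraction of one percent of the usual level.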

With the API-based CSV file and API-based crawl errors feed, you get:

- The URL that triggered the error

- The specifics of the error

DNS Error

Server Connectivity

Robots.txt Fetch

Pages Blocked with Robots.txt

This report is gone entirely from the UI but it’s still available (up to 100,000 URLs) from the API (both the CSV and the feed).
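If you only need a quick local check of which URLs your robots.txt blocks, Python's standard `urllib.robotparser` can approximate it. This is a sketch with a made-up robots.txt. The standard-library parser may not interpret every rule the way Googlebot does (wildcard patterns, for example), so treat the API data as the authority on what Google actually found blocked.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt and URLs for illustration.
robots = """User-agent: *
Disallow: /private/
"""

urls = [
    "https://example.com/",
    "https://example.com/private/report.html",
]

blocked = blocked_urls(robots, urls)
print(blocked)
```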

All in all, I’m happy to learn that most of this information is still available. It’s somewhat cumbersome and confusing that what’s available isn’t consistent across delivery mechanisms, and figuring out how to access the API-based data isn’t all that straightforward. But for those power users (like me) who process this data programmatically, that Google is continuing to provide this information is great news.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
