Does Google’s Review Count Inflation Give Them An Unfair Advantage In Local Search?


We all know that monitoring online reviews is important for online marketing, but lately it seems that not all reviews are created equal. In fact, Google+ Local reviews may have a greater impact than others on your online marketing effectiveness.

According to Searchmetrics’ 2013 analysis of SEO ranking factors, Google+ had the highest correlation with search rankings on Google UK. A 2013 study from Now Digital Marketing Works took it one step further, reporting that Google+ reviews are the most important factor for inclusion and ranking in the Carousel.

While some consider it rather bold of Google to place so much importance on its own social media platform, it’s not exactly surprising. Google is the ultimate authority in its own eyes, so it makes sense that the search engine would turn to one of its own properties when determining rank. Your Google+ Local page is all the more important, then, when you’re looking to give your site an SEO boost.

Google+ Reviews

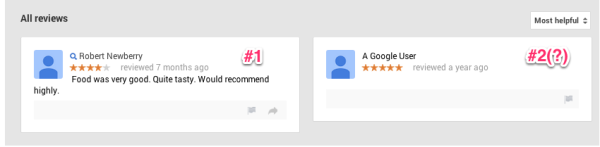

With Google+ reviews weighing as heavily as they do, one would hope that we can count on Google for accuracy. Recently, however, we noticed some discrepancies in Google+ review counts and decided to do some research of our own. Take the Google+ Local page of SushiYAA, a sushi restaurant in Dallas: Google boasts “2 reviews” at the top of the business page.

Yet, when we scroll down to read the actual reviews, we see Google’s definition of a “review” is fairly liberal:

How many reviews do you see? We see only one text-based review and one rating. Is this nitpicky? Perhaps, but we must keep in mind how valuable Google+ Local reviews are and how big an impact they have on the SERPs.

Google Ranking Tactics

This issue also seems particularly noteworthy at the moment, as Google settles its antitrust case with the European Commission. After being accused (although not found guilty) of abusing its power, the search giant has disclosed some of its methods and committed to fairer practices in the future.

The case arose after a handful of European companies complained that Google downgraded their rankings because their services were too similar to Google’s — price comparison, mapping, and video hosting were on the list.

This isn’t the first time Google has been accused of pushing other sites out to stifle competition. Some may remember that in 2010, TripAdvisor blocked Google from using the travel site’s reviews on Place pages, fearing a drop in traffic.

Many viewed Google’s use of TripAdvisor reviews as an attempt to devalue the travel site and increase demand for reviews on Google. TripAdvisor made it clear that it wouldn’t let Google monopolize the review market. Despite TripAdvisor’s noble attempt, Google found a way to push people toward its reviews over those on other sites: placing higher SEO value on Google+ reviews than on others.

With the amount of influence Google+ reviews seem to have over the SERPs, it’s hard to shrug off instances like the TripAdvisor drama. And despite Google’s new pledge of transparency, we see that such review count “inflation” is rampant across the Google+ Local ecosystem.

Google+ Review Count Inflation?

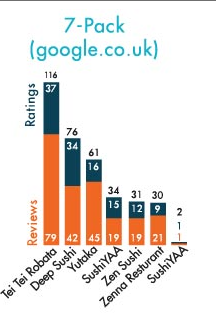

To gauge the scope of this inflation, we looked at the search term [sushi dallas tx]. Google padded the review count for every one of the first seven Carousel positions and 7-pack results. Here’s a breakdown of this inflation:

Is it fair for Google to ignore the distinction between “review” and “rating”? Does such conflation affect map pack performance and, by extension, organic performance? We decided to find out.

Let’s Test It Out

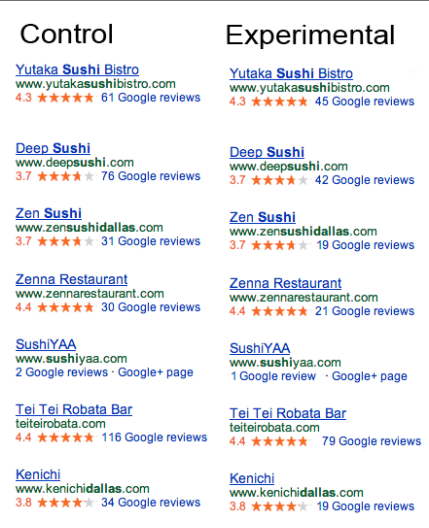

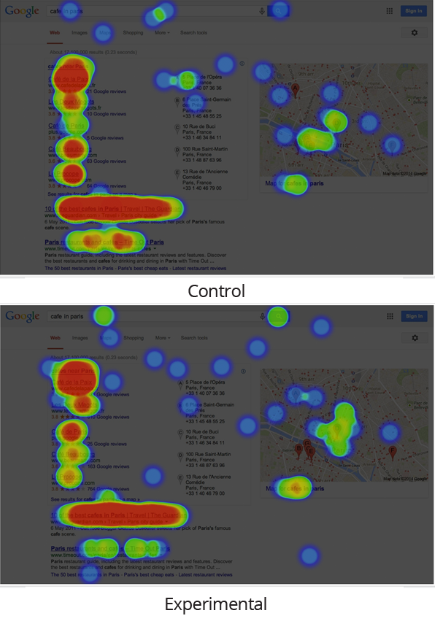

To examine these questions, we ran an experiment on Usability Hub designed to measure organic and map pack performance of a “control” screenshot of [sushi dallas tx] versus a Photoshopped “experimental” image of the same SERP. (Here, the map pack refers to both the X-pack and the map result.) The experimental image adjusted each review count downward from the number Google boasts to the number of actual reviews we counted on 2/11/2014.

Usability Hub is a great platform for building simulations of the user search experience. By priming respondents with a hypothetical “intent,” we hoped to approximate the actual traffic Google would see for this type of result.

For all its strengths as a simulation platform, Usability Hub was not able to quickly provide a sample large enough to estimate such traffic. To address this drawback, we turned to Mechanical Turk, which has become an increasingly popular tool for social scientists to collect rapid, inexpensive and reliable data.

About 500 English-speaking Mechanical Turk workers were prompted with the following: “You’re in Dallas, Texas and you’re craving sushi. You decide to use the Internet to help with your restaurant research. You open your laptop and type ‘sushi dallas tx’ into Google, bringing you to the following screen. What’s your first click?”

Upon clicking the “Start Test” button, the workers were randomly presented with either the control or experimental image.

Once we had collected 251 one-click responses for each image, we analyzed them. Below are the results:

The results were inconclusive. Though we observed a 3% dip in map pack engagement for the experimental image, it did not come at the expense of organic results, which performed virtually identically (78%) in both versions.
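The article does not say how “inconclusive” was judged. One common way to check whether a gap of a few points between two groups of roughly 250 respondents could just be noise is a two-proportion z-test. The sketch below assumes each response is a single first click, counted as either inside or outside the map pack; the function name and the counts in the usage line are hypothetical illustrations, not the study’s data.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Two-sided z-test for a difference between two click shares.

    Hypothetical helper: clicks_* is the number of first clicks that landed
    in the map pack, n_* is the number of respondents shown each image.
    Returns (z, p_value) under the null hypothesis that both images draw
    the same share of clicks.
    """
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled share, assuming the null hypothesis (no real difference) holds.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all or none of the clicks hit the map pack; test undefined")
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 50 of 251 map pack clicks for control vs. 42 of 251 for experimental.
z, p = two_proportion_z_test(50, 251, 42, 251)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts (a gap of about 3 points), z comes out below 1 and the p-value is far above the usual 0.05 threshold, which is the kind of result one would call inconclusive at this sample size.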

Another Try

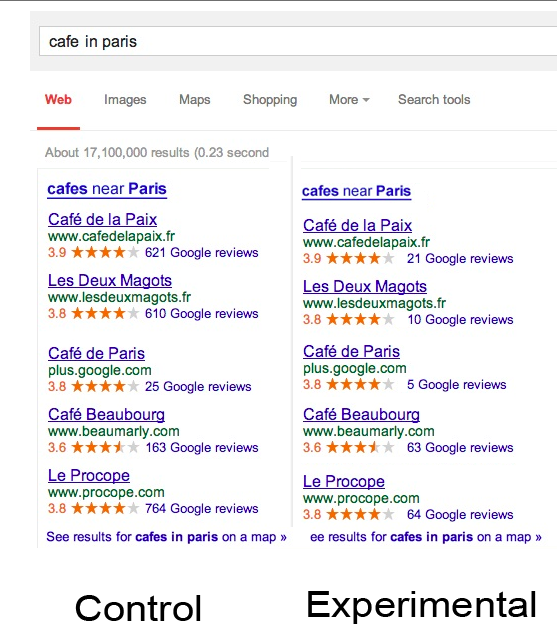

Out of curiosity, we ran a second experiment using a SERP inspired by one we’ve seen in the news lately regarding Google’s proposed settlement with the EU. We removed the rival links box and simply Photoshopped the first digit off the review count within the map pack:

Again, either the control or experimental was presented randomly to a set of English-speaking Mechanical Turk workers until 500 responses were collected. Below are those results:

Here, we observe a much more dramatic relationship between review count within the map pack and map pack engagement. Organic enjoys a 10% jump in traffic while map pack engagement plummets 10%.

While these results can’t be considered conclusive, they suggest to me that deflating review counts can have an impact, as users turn to natural results in light of low review numbers. More research is needed to prove anything, but it raises the question of what other effects manipulating review numbers might have.

Conclusion

Next time you’re on a Google+ Local page, check for yourself: does the quantity of reviews boasted match the actual number of reviews? Anecdotally speaking, this seems rare.

It’s difficult to estimate the overall inflation rate of the Google+ ecosystem (the percentage of Google+ Local “reviews” that are actually just one-click ratings), but the inflation appears to be siphoning clicks away from organic results and toward the links inside Google+ Local’s built-in SERP elements.
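The inflation rate defined above is simple arithmetic: ratings counted as reviews, divided by the review count Google displays. The sketch below assumes you have hand-counted the written reviews on each listing; the `ListingAudit` class and `inflation_rate` function are hypothetical names for illustration. The only real figures used are the SushiYAA counts from earlier in this article (2 displayed, 1 written).

```python
from dataclasses import dataclass


@dataclass
class ListingAudit:
    """Hypothetical record of one hand-audited Google+ Local listing."""
    name: str
    displayed_count: int  # the "N reviews" figure shown at the top of the page
    written_reviews: int  # reviews that actually contain text, counted by hand

    def __post_init__(self):
        if not 0 <= self.written_reviews <= self.displayed_count:
            raise ValueError(f"{self.name}: written reviews must be between 0 and the displayed count")

    @property
    def ratings_only(self) -> int:
        # One-click ratings that Google folds into its "review" count.
        return self.displayed_count - self.written_reviews


def inflation_rate(listings) -> float:
    """Share of all displayed 'reviews' that are really ratings-only.

    Pools counts across listings, so busy listings weigh more than
    listings with one or two reviews.
    """
    total_displayed = sum(l.displayed_count for l in listings)
    if total_displayed == 0:
        return 0.0
    return sum(l.ratings_only for l in listings) / total_displayed


print(inflation_rate([ListingAudit("SushiYAA", displayed_count=2, written_reviews=1)]))  # 0.5
```

Pooling the raw counts, rather than averaging each listing’s own rate, keeps a single listing with "2 reviews" from counting as much as one with hundreds; either choice is defensible, so state which one you use when reporting a figure.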

What this means for your business depends heavily on where you’re currently ranking and whether your site is currently in the Carousel or map pack.

Share in the comments any discrepancies you find on your Google+ page and how they might be affecting your ranking.

Source data for graphs:

Opinions expressed in this article are those of the guest author and not necessarily those of Search Engine Land.
