Link building tool roundup: Site crawlers

Link building is hard work! Here's how to utilize site crawling tools to make sure your effort pays off.

If a search engine’s crawler can’t find your content to index, it’s not going to rank. Content that a crawler can’t reach is also a poor quality signal for the site as a whole. Most importantly, if a search engine can’t find something, a user may not be able to either. That’s why tools that mimic the actions of a search engine’s crawler can be very useful.

You can find all sorts of problems using these crawlers, problems that can drastically impact how well your site performs in search engines. They can also help you, as a link builder, to determine which sites deserve your attention.

Link building is never a magic bullet. Links take a lot of hard work, and it can be useless to build links to a site that suffers from terrible SEO.

For this article, I’ve looked at four crawlers: two are web-based and two are desktop versions. I’ve done a very light analysis of each in order to show you how they can be used to help when you’re building links, but they have many, many more uses.

I’ll go through uses for link outreach and also for making sure your own site is in good shape for building or attracting links.

For the record, here are the basics about each tool I’ll be mentioning:

- Sitebulb: offers 14-day free trial. Desktop web crawler.

- DeepCrawl: offers 7-day free trial. Web-based crawler.

- Screaming Frog: free download for light use or buy a license for additional features. Desktop web crawler.

- OnCrawl: offers 14-day free trial. Web-based crawler.

Note: these crawlers are constantly being updated so screenshots taken at the time of publication may not match the current view.

Evaluate a link-target site

Using a crawler tool can help you maximize your link building efficiency and effectiveness

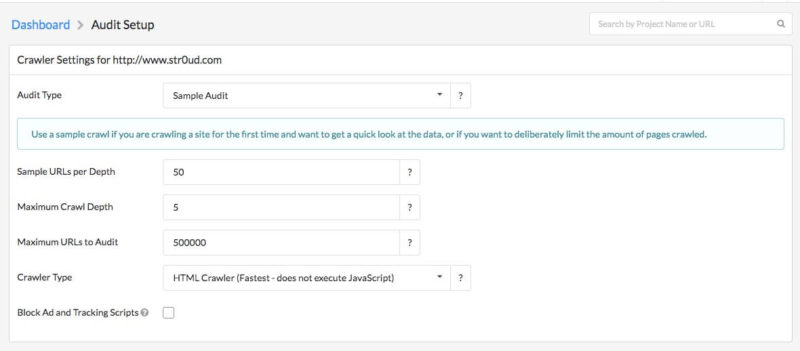

Do a sample site audit.

Before you reach out to a site on which you want a link, conduct an audit of the site so you have an “in” by pointing out any errors that you find.

Screenshot from Sitebulb’s tool

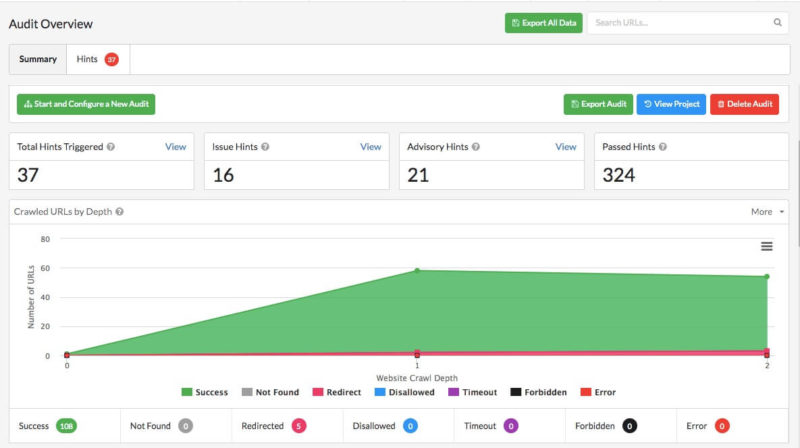

The beauty of a sample audit is how little time it takes. I have seen some crawlers take ages to do a full crawl, so a sample audit, in my opinion, is genius!

In the example report below, you can look at just a few of the hints and easily see that there are some duplication issues, which is a great lead-in for outreach.

Screenshot from Sitebulb’s tool

Run a report using custom settings to see if a link is worth pursuing. If tons of the site’s content is inaccessible and there are errors all over the site, it may not be a good idea to invest a lot of time and effort in trying to get a link there.

Find the best pages for links.

Sitebulb has a Link Equity score that is similar in idea to internal PageRank. The higher the link equity score, the more likely the page is to rank. A link from a page with a high Link Equity score should, theoretically, with all other things being equal, be more likely to help you rank than one from a page with a much lower Link Equity score.

Screenshot from Sitebulb’s tool

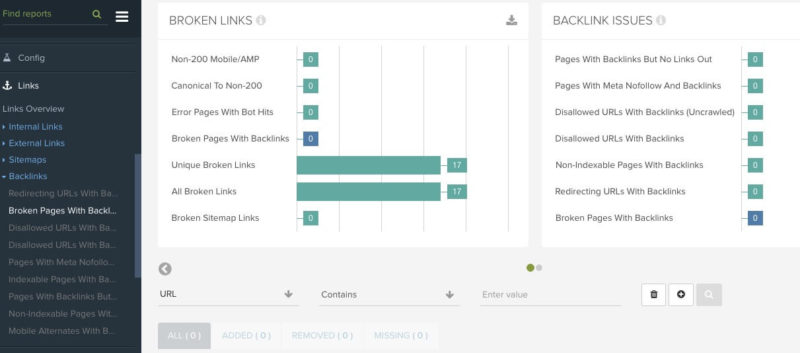
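To make the "similar in idea to internal PageRank" comparison concrete, here is a minimal sketch of a PageRank-style score over a site's internal links. This is not Sitebulb's actual Link Equity formula, which I don't know. The function name `internal_pagerank`, the damping factor of 0.85 and the tiny example site are all assumptions for illustration.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Score pages by internal links. `links` maps a page to the pages it links to.

    Illustrative PageRank-style calculation only, not any tool's real formula.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its score evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over every page.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical three-page site.
site = {"/": ["/a", "/b"], "/a": ["/"], "/b": ["/", "/a"]}
scores = internal_pagerank(site)
for page, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(page, round(score, 3))
```

In this toy site the home page scores highest because both other pages link to it, which is the same intuition behind preferring a link from a high-scoring page.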

Run a report to find broken pages with backlinks.

DeepCrawl has an easy way to view these pages. This is obviously great for broken link building, but even if you’re not doing broken link building, it’s a good “in” with a webmaster.

Who doesn’t want to know that they have links pointing to pages that can’t be found?

Screenshot from DeepCrawl’s tool
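If you export crawl data yourself, the same idea is easy to reproduce. The sketch below assumes you already have two lookups: status codes per URL from a crawl, and backlink counts per URL from whatever link index you use. The function name and the sample data are hypothetical, and this is not DeepCrawl's own report logic.

```python
def broken_pages_with_backlinks(status_by_url, backlinks_by_url):
    """Return (url, backlink_count) pairs for error pages that have backlinks."""
    broken = [
        (url, backlinks_by_url[url])
        for url, status in status_by_url.items()
        if status >= 400 and backlinks_by_url.get(url, 0) > 0
    ]
    # Most-linked broken pages first: those are the best outreach openers.
    return sorted(broken, key=lambda item: item[1], reverse=True)


# Made-up sample data.
statuses = {"/guide": 404, "/pricing": 200, "/old-post": 410, "/tmp": 404}
backlinks = {"/guide": 12, "/pricing": 40, "/old-post": 3}
print(broken_pages_with_backlinks(statuses, backlinks))
```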

Make your own (or client’s) site more link-worthy

You can run the same report on your own site to see what content is inaccessible there. Always remember that there may be cases where you want some of your content to be inaccessible, but, if you want it to rank, it needs to be accessible. You don’t want to seek a link for content that’s inaccessible if you want to get any value out of it.

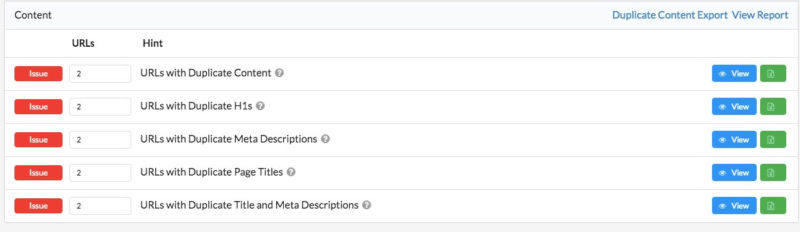
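One common reason content is inaccessible is a robots.txt rule. As a rough sketch, Python's standard `urllib.robotparser` can tell you which URLs a given robots.txt blocks. The helper name `blocked_urls`, the example.com URLs and the rules are assumptions for illustration, and robots.txt is only one of several ways a page can end up unreachable.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt and URLs.
robots = "User-agent: *\nDisallow: /private/\n"
candidates = [
    "https://example.com/",
    "https://example.com/private/report.html",
]
print(blocked_urls(robots, candidates))
```

If a page you are building links to shows up in that list, decide whether the block is intentional before you spend any more outreach time on it.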

Do I have duplicate content?

Sitebulb has a handy Duplicate Content tab you can click on. Duplicate content can impact your rankings in some cases so it’s best to avoid or handle it properly. (For more on duplicate content see Dealing with Duplicate Content.)

Screenshot from Sitebulb’s tool
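For a sense of how exact-duplicate detection can work, here is a small sketch that groups pages whose body text is identical after collapsing whitespace and lowercasing. It assumes you have already extracted the text of each page. The function name and sample pages are hypothetical, and real tools (Sitebulb included) likely also look for near-duplicates, which this does not.

```python
import hashlib
import re
from collections import defaultdict


def find_duplicate_groups(text_by_url):
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in text_by_url.items():
        # Collapse whitespace and ignore case so trivial differences don't hide duplicates.
        normalized = re.sub(r"\s+", " ", text).strip().lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Made-up sample pages.
pages = {
    "/shoes": "Red  running shoes",
    "/shoes?ref=nav": "red running shoes\n",
    "/hats": "Blue hats",
}
print(find_duplicate_groups(pages))
```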

Are my redirects set up correctly?

As a link builder, my main concern with redirects involves making sure that if I move or remove a page with a lot of good links, the change is handled properly with a redirect. There are a lot of arguments for and against redirecting pages for things like products you no longer carry or information that is no longer relevant to your site, as much of that has to do with usability.

I just hate to get great links for a page that doesn’t get properly redirected, as the loss of links feels like such a waste of time.
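A quick way to sanity-check redirects for your best-linked pages is to follow each one through your redirect map and confirm it ends somewhere sensible. The sketch below assumes you have the redirects as a simple old-URL to new-URL mapping (for example, exported from a crawl). The function name, the `max_hops` limit and the sample URLs are all hypothetical.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow `url` through `redirects`.

    Returns (final_url, hops), or (None, hops) if a loop or an overly long chain is found.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            return None, hops
        seen.add(url)
    return url, hops


# Made-up redirect map: one two-hop chain and one loop.
redirect_map = {
    "/old-guide": "/guide-2017",
    "/guide-2017": "/guide",
    "/a": "/b",
    "/b": "/a",
}
for page in ["/old-guide", "/a", "/guide"]:
    print(page, resolve_redirect(page, redirect_map))
```

A result with more than one hop is a chain worth flattening, and a `None` destination means the links pointing at that page are going nowhere.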

Am I seeing the correct error codes?

DeepCrawl has a section on Non-200 Pages which is very helpful. You can click through to view a graphical representation of these pages.

Generally speaking, you’d expect most pages to return a 200 code, with some 301 and 302 redirects mixed in. You don’t want to see over 50% of your site returning 404 codes, though. Screaming Frog has a tab where you can easily view response codes for all pages crawled.

Screenshot from Screaming Frog’s tool

I would say that you need to make sure you understand which codes various pages should be returning, though, as there may be good reasons for something to return a certain code.

Screenshot from DeepCrawl’s tool

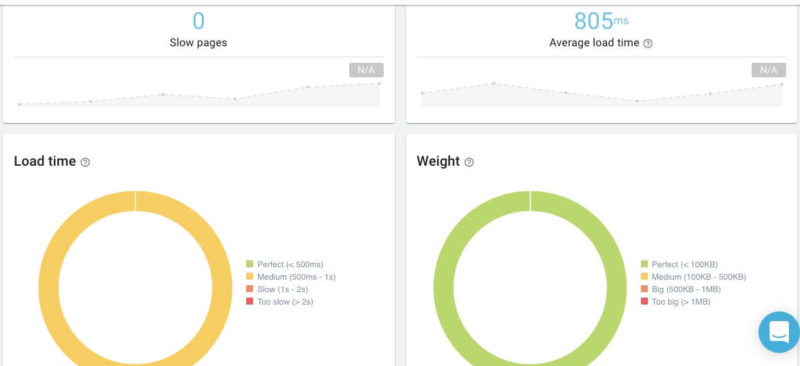
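If you would rather check the proportions yourself from a crawl export, a tally like the one below does the job. It assumes a simple mapping of URL to status code. The function name and sample data are hypothetical.

```python
from collections import Counter


def summarize_status_codes(status_by_url):
    """Return {status_code: (count, share_of_all_pages)} for a crawl."""
    counts = Counter(status_by_url.values())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {code: (count, count / total) for code, count in sorted(counts.items())}


# Made-up crawl results.
crawl = {"/": 200, "/about": 200, "/old": 301, "/missing": 404}
for code, (count, share) in summarize_status_codes(crawl).items():
    print(code, count, f"{share:.0%}")
```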

Is my load time ok?

Some people are much more patient than I am. If a page doesn’t load almost immediately, I bounce. If you’re trying to get links to a page and it takes 10 seconds to load, you’re going to have a disappointing conversion rate. You want to make sure that your critical pages load as quickly as possible.

Here’s a report from OnCrawl that can help you zero in on any pages that are loading too slowly:

Screenshot from OnCrawl’s tool
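The same filtering is simple to do on exported timings. This sketch assumes you have load times in seconds per URL. The 3-second default threshold is my own placeholder rather than a figure from OnCrawl or any search engine, and the function name and data are hypothetical.

```python
def slow_pages(load_seconds_by_url, threshold_seconds=3.0):
    """Return (url, seconds) pairs slower than the threshold, slowest first."""
    slow = [
        (url, seconds)
        for url, seconds in load_seconds_by_url.items()
        if seconds > threshold_seconds
    ]
    return sorted(slow, key=lambda item: item[1], reverse=True)


# Made-up timings.
timings = {"/": 0.8, "/big-gallery": 10.2, "/report": 3.5}
print(slow_pages(timings))
```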

How is my internal link structure?

You don’t want to have orphaned pages or see a lot of internal broken links. If pages can’t be found and crawled, they won’t get indexed. Good site architecture is also very important from a user’s perspective. If you have critical content that can’t be found unless it’s searched for, or you have to click on ten different links to get to it, that’s not good.
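Both problems, orphaned pages and content buried many clicks deep, can be checked with a breadth-first walk of the internal link graph. The sketch below assumes `links` maps each page to the pages it links to, and that you have a separate list of known pages (from a sitemap, say). The function names and the sample site are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphaned_pages(links, known_pages, home="/"):
    """Return known pages that cannot be reached by clicking from `home`."""
    reachable = click_depths(links, home)
    return sorted(page for page in known_pages if page not in reachable)


# Made-up site: "/landing" exists but nothing links to it.
site = {"/": ["/blog"], "/blog": ["/blog/post"], "/landing": ["/"]}
print(click_depths(site))
print(orphaned_pages(site, ["/", "/blog", "/blog/post", "/landing"]))
```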

Screaming Frog has an Inlinks column (accessed by clicking on the Internal tab) that tells you how many internal links are pointing to each page. You want to see the highest number of internal links pointing to your most critical pages.

In the image below, I’ve sorted my own website by highest to lowest Inlinks, making sure that the most important pages have the most Inlinks.

Screenshot from Screaming Frog’s tool

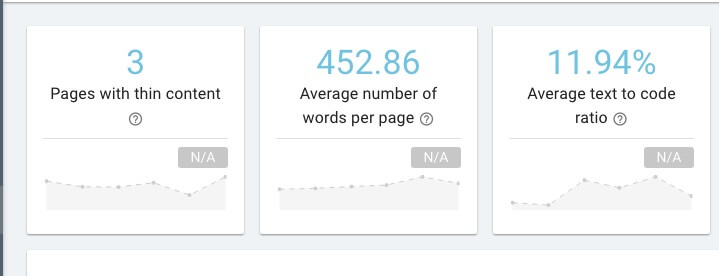
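For reference, an inlink count like this can be derived from the same kind of link map. In this sketch I count each linking page once per target and ignore self-links, which is my own assumption and may not match exactly how Screaming Frog counts. The function name and data are hypothetical.

```python
from collections import Counter


def count_inlinks(links):
    """Return (page, inlink_count) pairs, most-linked pages first."""
    inlinks = Counter()
    for source, targets in links.items():
        inlinks[source] += 0  # make sure pages with no inlinks still appear
        for target in set(targets):
            if target != source:
                inlinks[target] += 1
    return sorted(inlinks.items(), key=lambda item: (-item[1], item[0]))


# Made-up site.
site = {"/": ["/services", "/contact"], "/services": ["/contact", "/contact"], "/contact": []}
print(count_inlinks(site))
```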

Do I have pages that are too “thin”?

Considering that you can receive a manual action for thin content, it’s best not to have any. Thin content isn’t good for search engines or for users. Thin content won’t generally attract a lot of great links. In fact, if you do have links to thin content, you run the risk of having those links replaced by link builders working with sites with better resources.

OnCrawl has a good view of thin content which is very helpful.

Screenshot from OnCrawl’s tool
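A crude first pass at spotting thin pages is a word count. The sketch below assumes you have the extracted text for each page. The 300-word cutoff is purely a placeholder of mine, not a rule from Google or OnCrawl, and word count alone says nothing about quality. The function name and data are hypothetical.

```python
def thin_pages(text_by_url, min_words=300):
    """Return (url, word_count) pairs for pages below the word-count cutoff."""
    counts = {url: len(text.split()) for url, text in text_by_url.items()}
    return sorted((url, count) for url, count in counts.items() if count < min_words)


# Made-up pages.
texts = {"/about": "word " * 500, "/tag/misc": "just a few words"}
print(thin_pages(texts))
```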

As I said earlier, there are so many ways you can use these crawlers. Here’s a big warning, though! Some crawlers can use a ton of resources. I once crawled a site and the client’s hosting company called him to say they’d blocked it because it was making too many requests at once. If you’re going to run anything heavy duty, make sure the proper people know about it and can whitelist the relevant IP addresses.
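If you ever script your own checks, it is worth building in a delay between requests so you don't repeat my mistake. Here is a minimal throttle sketch. The class name `Throttle` and the one-second interval are assumptions, and the commercial crawlers above have their own speed settings that you should use instead when running them.

```python
import time


class Throttle:
    """Make sure calls to wait() are at least `min_interval_seconds` apart."""

    def __init__(self, min_interval_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval_seconds
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                # Too soon since the last request: pause for the difference.
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Usage: call wait() before each request in your own crawl loop.
throttle = Throttle(min_interval_seconds=1.0)
for url in ["/", "/about"]:  # hypothetical URLs
    throttle.wait()
    print("would fetch", url)
```

The clock and sleep functions are passed in only so the behavior can be tested without real waiting; in normal use the defaults are fine.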

Happy crawling!

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
