How Pinterest is teaching its computers to see

Pinterest has been developing computer vision technology since 2014 and is now applying it to visual search queries and ad targeting.

Picture this: You’re checking out a produce stand at the local farmer’s market and see a weird mango-like thing. It looks appealing, but you have no idea what it is or what to do with it, and the seller is busy with another customer. So you take a picture of it. In the past, to identify it, you would have pulled up the picture later and tried to come up with a string of keywords to type into a search box, or shown it to someone in your grocery store’s produce department in hopes they knew. But now your phone knows, and it shows you some recipes that incorporate it.

Pinterest is trying to make that picture a reality. Since assembling a small team to develop computer-vision technology in 2014, the search-slash-social platform — where each month 175 million people organize, share and, increasingly, find ideas ranging from food recipes to interior design to outfits to tattoos — has been working on ways to make images searchable like text. Its work has evolved from picking out images that look alike to identifying objects within images to converting images into search queries to, most recently, targeting ads based on images.

Pinterest isn’t the only major tech company teaching computers how to see. So are Amazon, Google, Facebook, IBM and others. But Pinterest believes it has an edge when it comes to recognizing not only the objects in an image but also the brands those objects belong to.

“When we started building visual search, it’s one of the things we noticed, that we’re able to match products and brands surprisingly well despite having, initially when we started out, a very small computer vision team,” said Pinterest’s engineering manager for visual search, Dmitry Kislyuk.

Computer vision is something of a Rorschach test. Algorithms apply what they know when attempting to understand the patchwork of pixels presented to them. And Pinterest’s computer-vision algorithm happens to know a lot about brand-related images.

Many of the images on Pinterest are high-quality stock photographs or professional product images that people pin from brands’ sites or that brands upload themselves. “Because brands come to Pinterest to upload all of their content, we tend to have very, very good, well-labeled data on ‘here are all the Nike shoes, here are all the Vans shoes’ including typically the actual brand name,” said Kislyuk. That data set is constantly growing and being refined as people write captions when pinning images, add images to named collections of other images (“boards”) and type keywords to search for certain images. Together, those actions create a map that Pinterest’s visual search technology can navigate to surface not only lookalike images but also complementary content and even ads.

From that data, Pinterest has been able to crowd-source (and brand-source) a visual search platform that receives more than 250 million unique visual searches each month. But Pinterest’s ambition is to do for visual search what Google has done for text search: it wants to turn people’s cameras into keyboards, parse their photos into queries and convert an image’s objects into keywords.

But if Pinterest wants any image to be searchable, that includes not only professional photographs but poorly lit shots snapped at the farmer’s market. Since Pinterest’s computer-vision algorithm was originally trained on well-labeled, high-quality images, this is like taking a distance runner trained on Olympic tracks and having them race along the side of a mountain. This is the story of how Pinterest is training its computer-vision technology to tackle that mountain.

At first sight

Pinterest set its sights on visual search in 2014. That year, the company acquired an image-recognition startup, established its computer vision team with a small group of engineers and began to show its work.

First, Pinterest applied the technology to Related Pins, the selection of comparable pins presented when someone clicks to expand an individual pin. Until then, Pinterest had sourced Related Pins by looking at which other pins people had saved to the same boards (user-curated collections of pins) as the pin in question. That worked fine for pins that had been added to a lot of boards, but it left Pinterest with a lot of so-called “dark pins,” pins that lacked enough metadata to get its recommendation algorithm’s attention. “As a result, six percent of images at Pinterest have very few or no recommendations,” according to a research paper published by Pinterest’s visual discovery team in 2015.

To do a better job of surfacing these obscure pins, Pinterest used computer vision to establish a relationship between them and higher-profile pins that were visually similar. Pinterest began testing these “Visual Related Pins” with 10 percent of its active user base to see if the change led to more people repinning Related Pins. After three months, the change resulted in a two percent increase in total repins for Related Pins.
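One simple way to express that relationship is a nearest-neighbor lookup. The sketch below is a minimal illustration, not Pinterest’s implementation: it assumes every pin has already been turned into a fixed-length embedding vector by some image model, and the function names, pin IDs and three-dimensional vectors are hypothetical. A dark pin borrows recommendations by ranking well-connected pins by cosine similarity to its own embedding.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def visual_related_pins(
    dark_pin: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k candidate pins whose embeddings are most similar to the dark pin's."""
    scored = [(pin_id, cosine(dark_pin, emb)) for pin_id, emb in candidates.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical 3-d embeddings for well-connected pins; real embeddings are much larger.
popular_pins = {
    "red_dress": [0.9, 0.1, 0.0],
    "blue_sofa": [0.0, 0.2, 0.95],
    "pink_dress": [0.8, 0.3, 0.1],
}
dark_dress_pin = [1.0, 0.2, 0.0]
print([pin for pin, _ in visual_related_pins(dark_dress_pin, popular_pins, k=2)])
# ['red_dress', 'pink_dress']
```

At the scale of a billion images, a production system would typically use an approximate nearest-neighbor index rather than scoring every candidate; the linear scan here just keeps the idea visible.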

The following year, Pinterest honed its visual search work. With Visual Related Pins, Pinterest was able to identify visually similar images. Now Pinterest wanted to be able to identify visually similar objects within images.

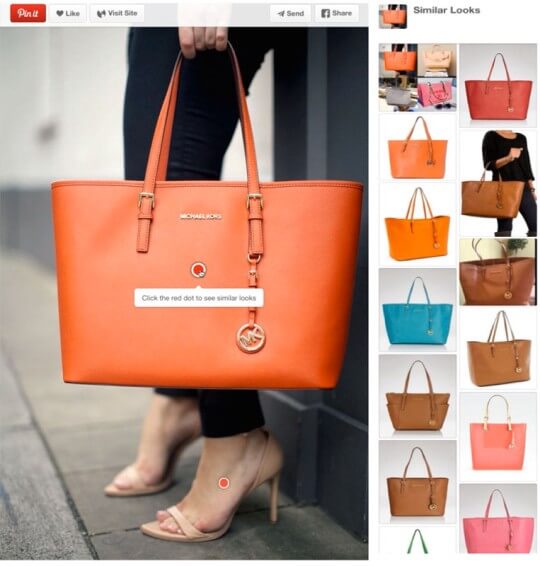

Given the popularity of women’s fashion-related pins on Pinterest, the company’s computer vision team had a sizable and structured data set to work with. So it pulled a random sample of pins from the category and manually labeled 2,399 fashion-related objects across nine categories, such as shoes, dresses, glasses, bags, pants and earrings. That work enabled Pinterest to identify more than 80 million objects across its platform and laid the foundation for what would become Similar Looks, which placed a red dot on fashion-related objects within pins that people could click to find other pins containing similar objects.

Over the course of a month, Pinterest tested Similar Looks with a subset of users. On an average day, 12 percent of the users in that test group who viewed a Similar Looks-enabled pin clicked on its red dot, and about half the time they then clicked on one of the resulting pins. Pinterest next started adding Similar Looks results to the set of Related Pins. Blending pins that contained similar visual objects with pins that had been saved to the same boards allowed Pinterest to see how its computer vision-based results stacked up against its non-vision-based results. The addition of the Similar Looks results led to a five percent increase in repins and close-ups on Related Pins.

But the success of Similar Looks posed a problem: it couldn’t scale. Pinterest performed all of its object detection offline. “We would take the existing catalog of Pinterest images and run our algorithms over the course of days or weeks even to try to extract what are the objects in this image and what are the visually similar results to each of those objects,” said Kislyuk. As a result, only 2.4 percent of Pinterest’s daily active users ever saw a Similar Looks-enabled pin, and company research revealed that people were confused about why many pins didn’t have a red dot to click.

Not being able to use visual search on any pin “was a huge detractor for users to actually use visual search,” said Kislyuk.

Seeing things in a new light

A year after Pinterest assembled its computer vision team, that team effectively had to start over. It had developed a way to pick out objects inside images, but it couldn’t scale that method to the more than one billion unique images on Pinterest at the time, let alone the countless images that would be added to the platform later.

“We went back to the drawing board and said, ‘Okay, everything has to be real-time. Everything has to work on any set of pixels,'” said Kislyuk. “We re-engineered the entire technical stack and applied some of the latest advances in deep learning and computer vision to let us do very, very fast image-matching. Less than a fraction of a second.”

Because so many images on Pinterest are either high-quality stock photography or professional product images, and because Pinterest’s users write captions that help explain what’s in a photo, four engineers from Pinterest and some members of UC Berkeley’s computer vision lab were able to pull off that pivot in a few months. The result was Flashlight, Pinterest’s first official visual search tool, which rolled out in November 2015.

With Similar Looks, people had relied on Pinterest’s technology to pick out individual objects in a photo and queue up a list of results. With Flashlight, they could instead use a cropping tool to zoom in on an area or object in the photo and trigger a real-time search for similar-looking images. Soon after the product rolled out, two percent of Pinterest’s users were conducting four million visual search queries a day, and Pinterest’s visual search results generated two-thirds the engagement rate of its years-old text search results, according to a research paper written by Pinterest’s engineers that was published in March 2017.

By June 2016, Flashlight could automatically detect objects in images, as Similar Looks had, but in real time. That made it easier for people to search for specific objects in an image, and it made it easier for Pinterest to surface results, or at least spared it from having to parse oddly cropped areas of an image.

Once again, Pinterest had pushed out an automated, object-detecting visual search product. Once again, it had a problem. The engagement metric that Pinterest monitored to gauge Flashlight’s performance — whether people were “pinning,” or saving, the resulting pins — had decreased after the initial launch of automatic object detection. Pinterest identified two main turn-offs for users: (1) errors in Pinterest’s object detection; and (2) irrelevant results.

And once again, Pinterest’s computer vision team came up with a solution. People who have bad vision typically try to trace the outline of something they’re looking at and use that to deduce what it is. If something is relatively tall, more vertical than horizontal and narrowest at the top, well, so are people, so maybe it’s a person. Deducing that may help to identify the arms and legs and confirm the categorization. Computers can learn to see in a similar way.

“One really important trick we found — and that I don’t think I’ve seen published anywhere else, so hopefully we’re the initial authors on this — is, looking at the visual search results for a particular bounding box or object that we see in an image, we can make that category conformity score basically say out of all of these results, do they conform to the same type of object? Are they all shoe results? Or are they all couch results?,” said Kislyuk.
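Kislyuk doesn’t give a formula, so the following is only one plausible reading of a category conformity score, assuming each visual search result carries a category label such as “shoe” or “couch”: measure the share of an object’s top results that fall into their single most common category, and suppress detected objects whose results are too mixed to trust. The 0.6 threshold and the function names are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def category_conformity(result_categories: List[str]) -> Tuple[str, float]:
    """Return the dominant category among the results and the share of results in it."""
    if not result_categories:
        return ("", 0.0)
    category, count = Counter(result_categories).most_common(1)[0]
    return category, count / len(result_categories)


def keep_detection(result_categories: List[str], threshold: float = 0.6) -> bool:
    """Hypothetical rule: only surface a detected object if its results mostly agree."""
    _, score = category_conformity(result_categories)
    return score >= threshold


shoe_box = ["shoe", "shoe", "shoe", "shoe", "bag"]      # 4 of 5 agree
messy_box = ["shoe", "couch", "lamp", "dress", "shoe"]  # only 2 of 5 agree
print(category_conformity(shoe_box))                    # ('shoe', 0.8)
print(keep_detection(shoe_box), keep_detection(messy_box))  # True False
```

A low score flags a bounding box whose results disagree with one another, which lines up with the two problems Pinterest had identified: detection errors and irrelevant results.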

The category conformity score not only reversed Flashlight’s decline in engagement but also meant that Pinterest could extend Flashlight into a visual shopping tool. It did that in February 2017 with the rollout of Shop the Look, which automatically attaches circles to clothing objects in images and displays pins, including buyable ones, featuring the individual objects.

And perhaps most importantly, Flashlight’s success meant that Pinterest could attempt to detect objects in lower-quality images, the type that someone might take on their phone while at the farmer’s market.

A new Lens

When Pinterest announced in June 2016 that its visual search technology could automatically recognize objects in photos, it also teased a much more ambitious product.

“We’re also building technology that will help people get recommendations on Pinterest for products they discover in the real world, by simply taking a photo. This will enable a new kind of visual search experience, combining image retrieval, object detection and the power of our interest graph. Stay tuned for more information on camera search technology,” Kislyuk wrote as the last paragraph of a post to Pinterest’s engineering blog announcing automatic object detection.

Eight months later, in February 2017, Pinterest unveiled Lens. People using Pinterest’s mobile app would be able to take a photo of anything in the real world, and Pinterest’s visual search technology would identify the objects in the photo and return a list of relevant pins. It was Google search, but with a camera instead of a keyboard.

Pinterest wasn’t the first company to convert photos into search queries. Google tried something similar with its Google Goggles app, first introduced for Android phones in December 2009, and it is trying again with the as-yet-unreleased Google Lens. So did Amazon, in December 2011, with the launch of Flow, which it has since added to its main mobile app so that people can take a photo of any object and find the same or similar-looking products for sale on Amazon. But Pinterest believes it is taking a new angle on a relatively old idea.

“For a consumer product, just visual similarity alone is not that useful of a product. If I just give you a bunch of pictures that are the same exact thing over and over again, the utility is pretty low. I think this is where a lot of visual search apps in the past have struggled or failed,” said Kislyuk. He added, “When we went to the drawing board for Lens, what we really tried to optimize for is how do you have actionable results that are actually useful for our pinners.”

A picture is worth a thousand keywords

Pinterest’s visual search capabilities had come a long way since Similar Looks, and the company wasn’t about to go back to simply queuing up visually similar images, or at least not only visually similar images. For example, someone who uses Lens to take a photo of a rowing machine is probably less interested in seeing other rowing machines (they likely already have access to the one in the photo) than in seeing different exercises to do with it.

So Pinterest had to design a search system that could parse a photo like a text query. To do that, Pinterest relied on its established text search system. “We make somewhat of a product decision that the text search results, specifically like ‘rowing machine exercises,’ those types of results are more useful than visual search results,” said Kislyuk.

When Lens processes an image, it parses out the objects and colors the image contains and also pulls up annotations, the words most commonly associated with images of those objects on Pinterest. Then Lens conducts three types of searches: (1) it uses Flashlight to look for visually similar images; (2) it uses the annotations as text queries to look for the best-performing search results; and (3) it combs through its index of objects tagged within images to find other photos that contain the queried photo’s objects. Lens then combines the results from these three searches and ranks them by relevance and confidence.
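A rough sketch of that final blending step, assuming each of the three searches returns pins with a relevance score between 0 and 1 and that each search type gets a confidence weight. The article doesn’t describe the actual ranking model; the weights, pin IDs and function name below are hypothetical. A pin that turns up in more than one search accumulates score from each.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Results = List[Tuple[str, float]]  # (pin_id, relevance in [0, 1])


def blend_lens_results(sources: Dict[str, Results], weights: Dict[str, float]) -> List[str]:
    """Merge result lists from several searches into one ranked list of pin IDs."""
    scores: Dict[str, float] = defaultdict(float)
    for source, results in sources.items():
        weight = weights.get(source, 0.0)  # unknown sources contribute nothing
        for pin_id, relevance in results:
            scores[pin_id] += weight * relevance
    return sorted(scores, key=lambda pin: scores[pin], reverse=True)


sources = {
    "visual": [("rower_photo_2", 0.9), ("rower_photo_3", 0.8)],   # Flashlight lookalikes
    "text": [("rowing_workout", 0.9), ("rower_photo_2", 0.5)],    # annotation queries
    "objects": [("home_gym", 0.7)],                               # object-index matches
}
# Hypothetical weights that favor text results, echoing Kislyuk's remarks.
weights = {"visual": 0.3, "text": 0.5, "objects": 0.2}
print(blend_lens_results(sources, weights))
# ['rower_photo_2', 'rowing_workout', 'rower_photo_3', 'home_gym']
```

Here `rower_photo_2` ranks first because both the visual and the text search returned it, even though neither search alone scored it highest after weighting.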

“Over time we actually leverage all the user data, all the past queries people have made, and it actually can be decided by our users. If we have enough queries of a rowing machine, we can see which types of results got the most engagement and make sure those are the ones that get boosted up. So far what we’ve seen is the text search results, in general, are a lot more engaging and useful to users,” said Kislyuk.
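One simple way to let engagement decide, sketched under hypothetical assumptions: for a recurring query such as “rowing machine,” track how often each result was shown and engaged with, then rank by an engagement rate smoothed toward a prior so that a result with only a handful of impressions can’t jump to the top on luck. The prior values, result names and functions are illustrative, not Pinterest’s.

```python
from typing import Dict, List, Tuple


def smoothed_rate(engagements: int, impressions: int,
                  prior_rate: float = 0.05, prior_weight: float = 100.0) -> float:
    """Engagement rate pulled toward prior_rate when impressions are few."""
    return (engagements + prior_rate * prior_weight) / (impressions + prior_weight)


def boost_by_engagement(stats: Dict[str, Tuple[int, int]]) -> List[str]:
    """Rank results for a recurring query; stats maps result_id -> (engagements, impressions)."""
    return sorted(stats, key=lambda r: smoothed_rate(*stats[r]), reverse=True)


rowing_machine_stats = {
    "similar_rowers": (30, 1000),    # visual results
    "rowing_exercises": (90, 1000),  # text results
    "lucky_new_pin": (2, 3),         # raw rate 0.67, but almost no data
}
print(boost_by_engagement(rowing_machine_stats))
# ['rowing_exercises', 'lucky_new_pin', 'similar_rowers']
```

Without smoothing, `lucky_new_pin` would rank first on two engagements out of three impressions; the prior keeps it below the well-established text results until more data arrives.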

Lens focus

Lens wouldn’t be useful to Pinterest’s users if it couldn’t decipher users’ own photos. Taking Pinterest’s visual search technology that was built on stock photography and professional product images and applying it to user-generated photos “is one of the core technological challenges that we’ve been working on on Lens for the past year,” said Kislyuk. “We actually do have a fair amount of [user-generated] content; it just tends to be dark.”

In the past, “dark” content meant newly uploaded images that hadn’t received many repins or been saved to many boards and therefore lacked the metadata that Pinterest had used to augment its understanding of the pixels in a picture. With Lens, however, Pinterest would need to be able to work without any metadata. Sure, people could use Lens through Pinterest’s mobile app, and Pinterest could pick up some signals from a person’s profile to aid its image processing. But Lens will also work through Bixby, Samsung’s Siri-like virtual assistant, so anyone who owns Samsung’s latest smartphone, the Galaxy S8, can take a photo and have the Lens-powered Bixby tell them what’s in the shot.

“There, the images that are coming in, we have no other information aside from just the image. So we have to do as fine-grained a visual search as we can based on the pixels,” said Kislyuk.

To that end, Pinterest trains its algorithm on professionally shot and user-generated photos of the same object and teaches it to cluster those photos close together. “In technical parlance, what that means is the model learns background invariance, so it learns to ignore the aesthetic attributes of the lighting and how well it’s positioned and if it’s very clean or not and tries to get to the essence of what is this actual product,” Kislyuk said.
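Kislyuk doesn’t name the training objective. A common way to learn this kind of invariance is a triplet loss, used here purely as an illustrative stand-in rather than as Pinterest’s confirmed method: the embedding of a professional product shot (the anchor) should be closer to a user’s snapshot of the same product (the positive) than to a photo of a different product (the negative), by at least a margin. The two-dimensional embeddings and the 0.5 margin are hypothetical.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.5) -> float:
    """Zero once the positive is closer to the anchor than the negative by at least margin."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


studio_boot = [1.0, 0.0]     # professional product photo
phone_boot = [0.8, 0.3]      # same boot, badly lit phone snapshot
studio_sneaker = [0.0, 1.0]  # a different product

# Well-separated case: the snapshot already sits near the studio shot, so no penalty.
print(triplet_loss(studio_boot, phone_boot, studio_sneaker))      # 0.0
# If the model placed the sneaker where the boot snapshot should be, the loss is positive.
print(triplet_loss(studio_boot, studio_sneaker, phone_boot) > 0)  # True
```

Minimizing this loss over many such triplets pushes a model to ignore lighting and framing, since those differences would otherwise keep same-product pairs apart.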

Pinterest also crowd-sources the learning process. In March, it rolled out Lens to everyone in the US who uses Pinterest’s mobile app on an iPhone or Android phone. While Lens is meant to tell people what’s in a photo, Pinterest also gave people a way to tell Lens what’s in a photo by adding their own labels. Helpful as that may be, it’s also risky, because people could try to trick Pinterest’s algorithm, for example by teaching it that shoes should actually be labeled with another word that starts with “sh.” So Pinterest uses crowd-sourcing platforms to validate the user-generated labels. “The good news is we only need that for training data. We don’t need it within the product itself. So after a certain point, we’ll have a good enough data set — and in many areas we have more than enough images labeled — so we don’t have to focus on this problem for too much longer,” Kislyuk said.
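The validation step might look something like the sketch below, which assumes several crowd workers independently judge whether a user-submitted label matches its photo. The minimum vote count, the two-thirds agreement rule and the function name are all hypothetical; the article says only that crowd-sourcing platforms validate the labels.

```python
from typing import List


def accept_user_label(votes: List[bool], min_votes: int = 3,
                      min_agreement: float = 2 / 3) -> bool:
    """Decide whether a user-submitted label enters the training set.

    votes holds crowd workers' judgments: True if the label matches the photo.
    """
    if len(votes) < min_votes:
        return False  # not enough independent reviews yet
    return sum(votes) / len(votes) >= min_agreement


print(accept_user_label([True, True, False]))          # True: 2 of 3 agree
print(accept_user_label([False, False, True, False]))  # False: likely a prank label
print(accept_user_label([True]))                        # False: too few reviews
```

Because the check only gates training data, it can afford to be slow and conservative without affecting what Lens users see in real time.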

Pinterest is also working with marketers to catalyze the process. Every product image that a brand uploads to Pinterest — such as buyable pins to include in Shop the Look — “is indexed into Lens almost immediately,” said Kislyuk.

That will help Pinterest when it eventually starts to insert ads within Lens results, as the company said in May it plans to do. But to do that, Pinterest must ensure that a brand’s ad doesn’t risk appearing in the wrong context, like in response to a controversial query or an image of a competitor’s product.

“The precision bar is very high, and we won’t let that go out unless the precision bar is met. And it’s much higher than the precision bar we would have for organic Lens search results,” said Kislyuk.
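Kislyuk doesn’t say how the precision bar is enforced. A minimal sketch, assuming a held-out set of Lens matches that people have marked correct or incorrect: choose the lowest model-confidence cutoff whose measured precision clears the bar, and use a stricter bar for ads than for organic results. The targets, evaluation data and function names below are hypothetical.

```python
from typing import List, Optional, Tuple

Prediction = Tuple[float, bool]  # (model confidence, was the match actually correct?)


def precision_at(preds: List[Prediction], threshold: float) -> float:
    """Share of correct matches among predictions at or above the cutoff."""
    kept = [correct for conf, correct in preds if conf >= threshold]
    return sum(kept) / len(kept) if kept else 0.0


def lowest_threshold_meeting(preds: List[Prediction], target: float) -> Optional[float]:
    """Smallest confidence cutoff whose precision on labeled data meets the target."""
    for threshold in sorted({conf for conf, _ in preds}):
        if precision_at(preds, threshold) >= target:
            return threshold
    return None  # the bar cannot be met, so the feature should not ship


eval_set = [(0.95, True), (0.9, True), (0.85, True), (0.8, False),
            (0.7, True), (0.6, False), (0.5, False)]
organic_cutoff = lowest_threshold_meeting(eval_set, target=0.75)
ad_cutoff = lowest_threshold_meeting(eval_set, target=0.99)
print(organic_cutoff, ad_cutoff)  # 0.7 0.85
```

In this toy data, raising the bar from 0.75 to 0.99 pushes the cutoff from 0.7 to 0.85, so fewer matches qualify for ads; that loss of coverage is the price of the higher precision bar.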

For now, Pinterest’s efforts to open Lens up to brands remain experimental. But yes, those experiments are already underway: Pinterest has started working with brands to train its algorithm specifically on their product images.

“We’ve worked with brands already where they would give us content — ‘here is a type of product we want to do really, really well on and can you recognize this at a very high precision’ — and we will make sure our product can do that. And they can then build a custom experience around that,” said Kislyuk.

Consider a footwear brand that’s getting ready to debut a new line of boots that look a lot different from what people are currently wearing. That brand could work with Pinterest to not only ensure that Lens is able to recognize the boots but also to present people scanning the boots with a branded board full of pins detailing the product with links to buy it from the brand’s site. “That’s absolutely top of our mind,” said Kislyuk. “Lens has to be a platform for the long, long term.”

Opinions expressed in this article are those of the guest author and not necessarily MarTech.
