Site taxonomy for SEO: A straightforward guide

In this guide: learn why website taxonomy is fundamental to SEO success and how to optimize site taxonomies.

You’ve optimised your homepage, products and articles – but still aren’t hitting your SEO KPIs.

Why could this be happening?

Chances are your site taxonomy is holding you back.

What is site taxonomy?

Every website has a structure that acts as the scaffolding supporting your individual content pages. What varies is the quality of that structure.

Is the system rigorous and streamlined? Or is it an unmanaged and disorganised jumble of pages?

Site taxonomy is the way in which a website classifies its content.

This allows the content to be organized into groups that share similar characteristics. This helps audiences to find content and Googlebot to index it.

The three most common site taxonomies are:

- Categories: These tend to be hierarchical and general in nature.

- Tags: These tend to be single value, non-hierarchical and specific in nature.

- Facets (a.k.a., filters or attributes): These tend to be multi-value, non-hierarchical and specific in nature.

When you create a new category, tag or facet, you’re creating a term within your taxonomy.

More often than not, every new term auto-generates a new page based on the URL structure. And this is exactly where the SEO problems begin.

Focus on topics, not keywords

Remember the SEO dark ages of keyword targeting, where the reigning advice was to create a page for each variant: the singular, the plural, the words in a different order, etc.?

Those days are behind us. Replaced by the topic focus renaissance where we as SEOs need to think bigger than keyword variations and concentrate on satisfying the user intent behind the query.

Yet many website taxonomies unknowingly hark back to those dark ages of one keyword per page. Sometimes due to a lack of understanding. Sometimes because the content is just that old. Often because of poorly maintained taxonomies.

The reality is people love to publish new content, but they are fearful to remove pages.

This leads to a teeming mass of out-of-date, badly organized, potentially cannibalistic content obfuscated by navigation that is designed for the now.

And this is a gargantuan problem for SEO.

URLs are not ranked solely on their own merits. Every page indexed by search engines impacts how the quality algorithms evaluate domain reputation.

Google’s John Mueller has said:

“Our quality algorithms do look at the website overall, so they do look at everything that’s indexed. And if we see that the bulk of the indexed content is actually lower quality content then we might say ‘well, maybe this site overall is kind of lower quality’.”

All those taxonomy pages lurking in the depths of your site are causing index bloat, where an excessive number of low-value pages have made it into the index and are dragging down your ability to rank all pages on your website.

How to optimize site taxonomies

SEO category pages

If you call yourself a technical SEO, chances are you’ve invested endless hours into category tree (re)design.

You’ve delved into keyword research, competitor analysis, current user behaviour (navigation patterns, page entries, time on page, conversion rates, search behaviour, etc.), brand positioning and content coverage to see if you can not only match but satisfy the user’s intent.

This is followed by seemingly endless discussions and workshops with stakeholders to get buy-in and, after a lot of compromises, an agreement.

Then, finally, it’s time for a nerve-wracking launch that hopefully leads to an upward trend of organic search sessions.

But site taxonomy goes beyond category tree design alone.

On an enterprise-level website, you may have, say, 50 to 300 categories and subcategories, but tens of thousands of pages auto-generated by other taxonomies, such as tags or facet combinations.

So when Googlebot comes along to look at your website, categories are only a small part of the whole.

And optimizing only a fraction of your website, even if they are the pages with the highest search volume, is not enough. Index bloat can impact your site authority.

What’s more, there’s a high chance that behind some of your curated category pages lurks an unseen tag page competing for the same topic. For example, a restaurant site may have both a category and a tag page for “pizza.”

This negatively impacts user experience as it makes it more difficult to find the content the user is looking for. It also dilutes the site’s topical authority, and thus its ability to rank either of the pages.
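One way to surface these hidden overlaps is to normalize every term and check which ones exist in more than one taxonomy. The following is a minimal Python sketch, assuming you can export a list of terms per taxonomy. The `slugify` and `find_conflicts` helpers and the sample data are hypothetical and not tied to any particular CMS.

```python
import re
from collections import defaultdict


def slugify(term: str) -> str:
    """Lowercase a term and collapse non-alphanumeric runs into hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", term.lower()).strip("-")


def find_conflicts(taxonomies: dict) -> dict:
    """Return slugs that appear in more than one taxonomy type."""
    seen = defaultdict(set)
    for taxonomy_type, terms in taxonomies.items():
        for term in terms:
            seen[slugify(term)].add(taxonomy_type)
    return {slug: sorted(types) for slug, types in seen.items() if len(types) > 1}


# Hypothetical export from a restaurant site
site = {
    "category": ["Pizza", "Pasta", "Desserts"],
    "tag": ["pizza", "wood-fired", "Gluten Free"],
}
conflicts = find_conflicts(site)
print(conflicts)
```

Each slug it returns is a candidate for manual review: decide which page should own the topic before consolidating the other.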

SEO tag pages

Get a database export of every tag on your website.

You will likely be shocked by not only the number, but also the terms used.

Excessive tagging is criminal. The worst offenders are on sites where content creators can assign free-form tags to each post.

These tags are designed to speak to the entities in the story – things like a person, an event, a location or a broader topic.

I have seen multitudes of tags with only one article assigned. Not because the topic hadn’t been covered many times, but because the people manually tagging the pages don’t all think in the same words. So you get all kinds of keyword variation tag pages.

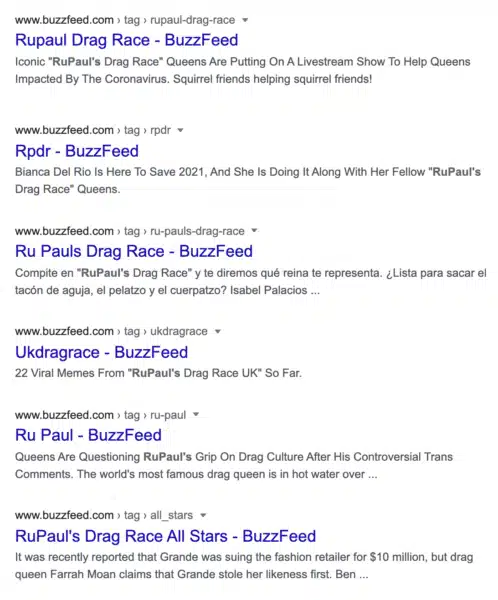

For example, for one topic (e.g., RuPaul’s Drag Race), there can be multiple free-form tags:

- RuPaul Drag Race

- RPDR

- Ru-Pauls Drag Race

- UKDragRace

- Ru-Paul

- All Stars

Each creating an indexable tag page (hi, BuzzFeed).

And this was just one topic.

More often than not, sites have no agreed upon limitations. No naming conventions. No method. Only madness.

By creating so many redundant tag pages, link equity value is split across multiple pages and the site is bloated with similar but thin content pages, which sends mixed signals to Google.

Quick fix: Use autocomplete to suggest existing tags as content creators begin to type.
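As a rough illustration of that quick fix, the sketch below suggests existing tags for whatever the content creator has typed so far, using prefix matching plus fuzzy matching from Python's standard `difflib` module. The `suggest_tags` function, the cutoff value and the sample tags are assumptions for illustration; a production CMS would likely query its own tag index instead.

```python
from difflib import get_close_matches


def suggest_tags(typed: str, existing_tags: list, limit: int = 5) -> list:
    """Suggest existing tags that start with, or closely resemble, the typed text."""
    typed_lower = typed.strip().lower()
    if not typed_lower:
        return []
    # Map lowercase forms back to the tag as it is stored
    by_lower = {tag.lower(): tag for tag in existing_tags}
    # Prefix matches come first, since that is what the user is typing towards
    prefix = [tag for low, tag in by_lower.items() if low.startswith(typed_lower)]
    # Fuzzy matches catch spelling variants of the full tag name
    fuzzy = [
        by_lower[match]
        for match in get_close_matches(typed_lower, list(by_lower), n=limit, cutoff=0.6)
    ]
    # Merge while preserving order and dropping duplicates
    return list(dict.fromkeys(prefix + fuzzy))[:limit]


existing = ["RuPaul's Drag Race", "Drag Race UK", "All Stars"]
print(suggest_tags("rupa", existing))
print(suggest_tags("Ru-Pauls Drag Race", existing))
```

Suggesting the stored tag at the moment of entry nudges editors towards the existing term rather than a new spelling variant.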

Long-term SEO solution: Review the entire tag list. Keep tags that are valuable to users. 301 redirect or remove with a 410 those which aren’t. Then implement relevant limitations on tag creation, either via business processes or a technological approach.
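The review itself can be partly automated once you have usage data per tag. Below is a minimal sketch of such a triage, assuming a hypothetical `TagStats` record and illustrative thresholds (`min_articles`, `min_pageviews`) that you would tune to your own site. It only proposes an action; the final call stays with a human.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class TagStats:
    name: str
    article_count: int
    monthly_pageviews: int
    # Preferred tag covering the same topic, if an editor has identified one
    canonical: Optional[str] = None


def triage(tag: TagStats, min_articles: int = 3, min_pageviews: int = 50) -> Tuple[str, Optional[str]]:
    """Propose 'keep', '301' (with a target tag) or '410' for a tag page."""
    if tag.canonical and tag.canonical != tag.name:
        # A variant of an existing topic: redirect to the preferred tag
        return ("301", tag.canonical)
    if tag.article_count >= min_articles or tag.monthly_pageviews >= min_pageviews:
        return ("keep", None)
    # Thin and unused, with no obvious successor
    return ("410", None)


tags = [
    TagStats("RuPaul's Drag Race", article_count=40, monthly_pageviews=1200),
    TagStats("RPDR", article_count=1, monthly_pageviews=4, canonical="RuPaul's Drag Race"),
    TagStats("misc", article_count=1, monthly_pageviews=0),
]
for tag in tags:
    print(tag.name, triage(tag))
```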

SEO faceted navigation

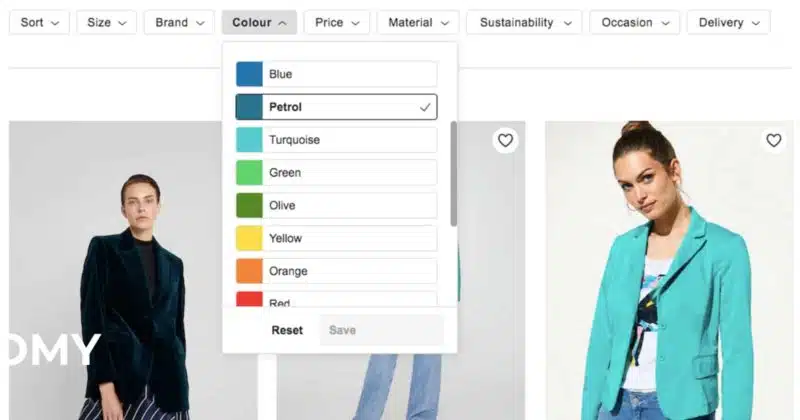

For many sites, similar to tag pages, historical free-form entry has been the issue. So you end up with a color filter with blue, petrol and turquoise.

This creates thin content issues for the more uncommon colors – and annoys the user because there are only three items in petrol, one of which is actually dark blue and another turquoise.

For other sites, the issue is balancing the needs of the user to filter down to exactly what they are looking for against indexable pages being high quality and unique. For example, you can filter down by every neighborhood in a city, but only three have substantial content.

Many sites handle their faceted navigation with dynamic parameters and falsely believe this action alone takes care of all things SEO. However, depending on how it is implemented, it can have a wide range of impacts on your SEO.

What’s more, a wild proliferation of facets will inevitably lead to pages that have too little content to justify their existence. They are not useful to the user and won’t send a strong topical authority signal to search engines.

- Quick fix: Consolidate facet values that will never offer either users or Google value, adding a 301 redirect if the facet was indexed.

- Long-term SEO solution: Review all existing and prospective facets (keys and their values) with both SEO potential and audience usage metrics in mind. Based on this list, make data-backed decisions about which are to be static vs. dynamic URLs, as well as how you will handle the parameters. Finally, decide who in your organization owns the ongoing maintenance of that list.
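To make the quick fix concrete, here is a small sketch that rewrites a parameterized facet URL to its consolidated value, so the old URL can be answered with a 301 redirect. The `CONSOLIDATED` mapping, the `color` parameter name and the example URLs are all hypothetical; which values you merge is an editorial decision, and your site may use static paths rather than query parameters.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical mapping of retired facet values to the value that replaces them
CONSOLIDATED = {"color": {"petrol": "blue", "turquoise": "blue"}}


def consolidated_url(url: str) -> Optional[str]:
    """Return the 301 target if any facet value was consolidated, else None."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    # Swap each retired value for its replacement; leave everything else untouched
    mapped = [(key, CONSOLIDATED.get(key, {}).get(value.lower(), value)) for key, value in params]
    # Drop duplicates created by merging, e.g. color=petrol&color=blue
    mapped = list(dict.fromkeys(mapped))
    if mapped == params:
        return None
    return urlunsplit(parts._replace(query=urlencode(mapped)))


print(consolidated_url("https://example.com/shirts?color=petrol&size=m"))
print(consolidated_url("https://example.com/shirts?color=blue"))
```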

Taxonomy creation is not a one-time event

The secret to designing website taxonomy?

It will never be finished and it will never be perfect because content is constantly in flux:

- Categories become obsolete.

- New types of products are added.

- Market conditions change.

- Brand positioning shifts.

- Fresh user data highlights previously unseen opportunities.

There needs to be a plan on how to optimize site taxonomy on a regular basis.

This often involves a controlled vocabulary – an authoritative, restricted list of terms to classify content to support findability.

And this requires taxonomy governance – a design for existing and future content to follow the taxonomy with clear directives of when terms are to be added, edited or removed and by whom to reflect the changing needs of users.

The problem? The best laid plans rarely work in the long term.

People leave. Decision power shifts. All that documentation will likely be forgotten.

Site taxonomy tool

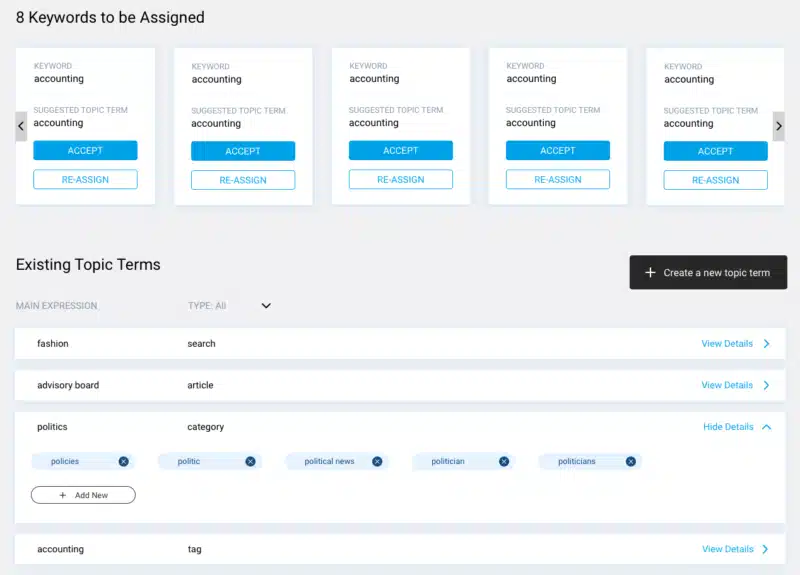

Rather than having site taxonomy as something to be reviewed on a periodic basis, get familiar with APIs (or hire a developer who is) and build yourself a site taxonomy tool that gives you all the data you need to make the right taxonomy decisions on an ongoing basis.

Understand the reality of your current taxonomies by adding terms and mapping them against a taxonomy type, URL and associated keywords.

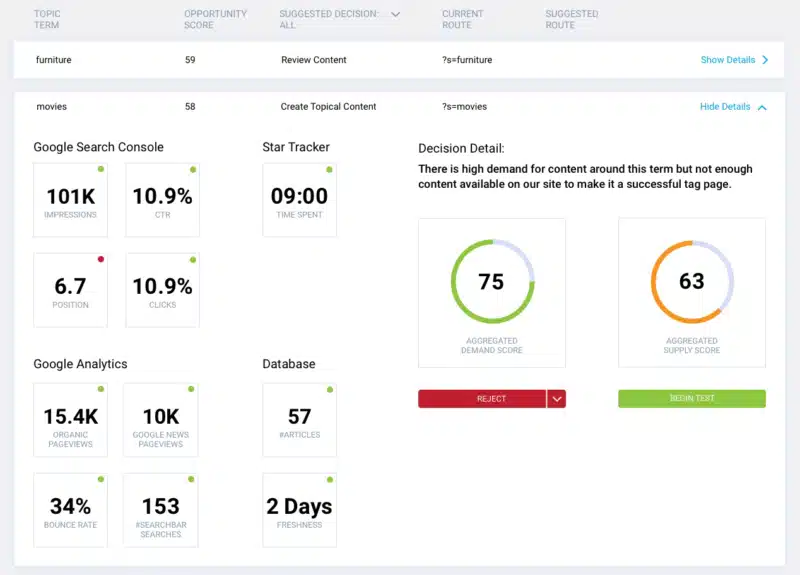
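The mapping of terms to a taxonomy type, URL and keywords could be modelled along these lines. This is only a sketch of one possible data structure; the class names, fields and sample URLs are assumptions rather than a description of any specific tool.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class TaxonomyType(Enum):
    CATEGORY = "category"
    TAG = "tag"
    FACET = "facet"


@dataclass
class TaxonomyTerm:
    term: str
    taxonomy_type: TaxonomyType
    url: str
    keywords: list = field(default_factory=list)


def count_by_type(terms: list) -> Counter:
    """Count how many terms (and therefore pages) each taxonomy type generates."""
    return Counter(t.taxonomy_type.value for t in terms)


# Hypothetical inventory for a restaurant site
inventory = [
    TaxonomyTerm("Pizza", TaxonomyType.CATEGORY, "/category/pizza/", ["pizza", "pizza restaurants"]),
    TaxonomyTerm("pizza", TaxonomyType.TAG, "/tag/pizza/", ["pizza"]),
    TaxonomyTerm("Wood-fired", TaxonomyType.TAG, "/tag/wood-fired/", ["wood fired pizza"]),
]
print(count_by_type(inventory))
```

Even a simple count per type tends to show how small the curated category tree is compared with the auto-generated remainder.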

Then, for each term, you can begin to balance topic demand against content supply.

Understand on-site demand with pageviews and site search data from the Google Analytics Reporting API and off-site demand by connecting to the Google Search Console API to pull impressions, CTR and position. For wider market demand, you can pull data from the Google Ads API or the unofficial Google Trends API.

Understand the amount and quality of supply from your database by pulling in the number of content pieces that are assigned to that taxonomy term, freshness based on the average age of the most recent content pieces, and bounce rates from the Google Analytics Reporting API.
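Once demand and supply figures sit side by side, a simple rule set can flag which terms need attention. The sketch below assumes the metrics have already been fetched and stored in a hypothetical `TermMetrics` record. The thresholds are illustrative, and adding pageviews to impressions is a deliberately crude demand proxy, not a recommended formula.

```python
from dataclasses import dataclass


@dataclass
class TermMetrics:
    term: str
    pageviews: int        # on-site demand
    impressions: int      # off-site demand from search
    content_count: int    # pieces of content assigned to the term
    avg_age_days: float   # average age of the most recent pieces


def classify(m: TermMetrics, min_demand: int = 100, min_content: int = 3, max_age_days: int = 365) -> str:
    """Label a term by comparing topic demand against content supply."""
    has_demand = (m.pageviews + m.impressions) >= min_demand
    has_supply = m.content_count >= min_content and m.avg_age_days <= max_age_days
    if has_demand and has_supply:
        return "keep"
    if has_demand:
        return "demand without supply: create or refresh content"
    if has_supply:
        return "supply without demand: review positioning"
    return "candidate for consolidation or removal"


terms = [
    TermMetrics("pizza", pageviews=900, impressions=12000, content_count=25, avg_age_days=40),
    TermMetrics("calzone", pageviews=80, impressions=3000, content_count=1, avg_age_days=700),
    TermMetrics("misc", pageviews=2, impressions=10, content_count=1, avg_age_days=1500),
]
for m in terms:
    print(m.term, "->", classify(m))
```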

Bear in mind, the best site taxonomy ever designed won’t perform well if it’s not properly applied so that the right content gets the right tags.

Why use a living tool, rather than periodic review and formal documentation?

- You can scale the site taxonomy in response primarily to the website’s end user, secondarily to the market.

- Base decisions on data over individual opinions.

- Drive a deeper understanding of topics and intents to help you provide content users actually want, not content based only on the face-value of keywords.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
