Data exploration from: An experiment in trying to predict Google rankings

Contributor JR Oakes shares a deep dive into 500,000 search results for transactional service industry queries from 200 of the largest cities in America.

Introduction

Over the last few months, we have been working with a company named Statec (a data science company from Brazil) to engineer features for predictive algorithms. One of the initial considerations in working with predictive algorithms is picking relevant data to train them on.

We set out quite naively to put together a list of webpage features that we thought might offer some value. Our goal was simply to see whether, from the available features, we could get close to predicting the rank of a webpage in Google. We learned early in this process that we had to put blinders on to data that was unreachable and hope for the best with what we had.

The following is an analysis of the data we collected, how we collected it and useful correlations derived from the data.

The data

One initial problem was that we needed access to ranking data for enough search engine results pages (SERPs) to provide a useful training set. Luckily, GetStat made this very easy. With GetStat, we simply loaded up keyword combinations pairing the top 25 service industries with the top 200 cities (by size) in the US. This resulted in 5,000 unique search terms (e.g., “Charlotte Accountant” taken from Charlotte, NC).
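To make the arithmetic concrete, here is a minimal sketch of how such keyword combinations could be generated. The short sample lists and the function name `buildSearchTerms` are assumptions for illustration only; the study's actual industry and city lists are not given here, and this is not GetStat's API.

```javascript
// Hypothetical sample lists; the study used 25 industries and 200 cities.
const industries = ["Accountant", "Plumber", "Web Designer"];
const cities = ["Charlotte", "New York", "Chicago", "Houston"];

// Cross every city with every industry to get terms like "Charlotte Accountant".
function buildSearchTerms(cityList, industryList) {
  const terms = [];
  for (const city of cityList) {
    for (const industry of industryList) {
      terms.push(`${city} ${industry}`);
    }
  }
  return terms;
}

const terms = buildSearchTerms(cities, industries);
console.log(terms.length); // 4 cities x 3 industries = 12
console.log(terms[0]);     // "Charlotte Accountant"
```

With the full lists, 200 cities times 25 industries gives the 5,000 terms, and at 100 results per term that is roughly 500,000 search results.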

Our company, Consultwebs, is focused on legal marketing, but we wanted the model to be more universal. After loading up the 5,000 terms and waiting a day, we then had roughly 500,000 search results we could use to construct our data set.

With the ranking data so easy to obtain, we moved on to collecting the rest of the data. I had built several crawlers with Node.js, so I decided to build a feature extraction mechanism on top of pre-existing work. Luckily, Node.js is an excellent ecosystem for this type of job. Below I list several libraries that make Node wonderful for data collection:

- Aylien TextAPI — This is a node API for a third-party service that does sentiment analysis, text extraction, summarization, concept/keyword extraction and Named-Entity Recognition (NER).

- Natural — An awesome natural language processing toolkit for node. It doesn’t hold a candle to what is available in Python, but was surprisingly helpful for our needs.

- Text Statistics — Helpful to get data on sentence length, reading level and so on.

- Majestic — I started out crawling their API via a custom script, but they provided the data in one gulp, which was very nice. Thanks, Dixon!

- Cheerio — An easy-to-use library for parsing DOM elements using jQuery-style markup.

- IPInfo — Not really a library, but a great API to get server info.
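As a rough illustration of the kind of per-page text features these tools provide, here is a dependency-free sketch. It is not the API of Text Statistics, Natural or Aylien; the function name `textFeatures` and the naive sentence splitting are assumptions made for this example.

```javascript
// Minimal text features from already-extracted page text (no HTML parsing here).
function textFeatures(text) {
  const trimmed = text.trim();
  // Split on runs of whitespace; filter(Boolean) handles the empty-string case.
  const words = trimmed.split(/\s+/).filter(Boolean);
  // Naive sentence split on ., ! or ? followed by whitespace or end of text.
  const sentences = trimmed.split(/[.!?]+(?:\s+|$)/).filter(Boolean);
  return {
    charCount: trimmed.length,
    wordCount: words.length,
    sentenceCount: sentences.length,
    avgWordsPerSentence: sentences.length ? words.length / sentences.length : 0,
  };
}

const sample = "We file taxes. We also audit books! Call today.";
console.log(textFeatures(sample));
```

A real library will handle abbreviations, markup and reading-level formulas far better than this; the point is only to show what a feature row might contain.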

The crawling process was very slow, due mainly to rate limits imposed by API providers and our proxy service. We would have created a cluster, but the expense limited us to hitting a couple of the APIs about once per second.
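To show why a one-request-per-second limit makes a crawl this size slow, here is a minimal throttling sketch. The names `runThrottled` and `estimateCrawlDays` are hypothetical, and the estimate assumes a single sequential worker, which the study may not have used.

```javascript
// Resolve after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run `task(item)` for each item in order, waiting `delayMs` between calls.
// `task` is a hypothetical async function, e.g. one API request per URL.
async function runThrottled(items, task, delayMs = 1000, wait = sleep) {
  const results = [];
  for (let i = 0; i < items.length; i++) {
    if (i > 0) await wait(delayMs);
    results.push(await task(items[i]));
  }
  return results;
}

// Rough lower bound on crawl time for one sequential worker.
function estimateCrawlDays(urlCount, requestsPerSecond = 1) {
  const seconds = urlCount / requestsPerSecond;
  return seconds / 86400; // seconds per day
}

console.log(estimateCrawlDays(500000).toFixed(1)); // "5.8"
```

Under those assumptions, 500,000 URLs at one request per second is close to six days of continuous crawling before counting retries or slow responses.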

Slowly, we gained a complete crawl of the full 500,000 URLs. Below are a few notes on my experience with crawling URLs for data collection:

- Use APIs where possible. Aylien was invaluable at doing tasks where node libraries would be inconsistent.

- Find a good proxy service that will allow switching between consecutive calls.

- Create logic for websites and content types that may cause errors. Craigslist, PDF and Word docs caused issues during the crawl.

- Check the collected data diligently, especially during the first several thousand results, to make sure that errors in the crawl are not creating issues with the structure of the data collected.
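The last two notes can be sketched as a pair of small guards. The skip lists, the function names and the record field names below are assumptions for illustration, not the crawler's actual rules.

```javascript
// Hypothetical skip rules based on the problem cases named above.
const SKIPPED_HOSTS = ["craigslist.org"];
const SKIPPED_EXTENSIONS = [".pdf", ".doc", ".docx"];

// Decide whether a URL should be skipped before any extraction is attempted.
function shouldSkip(rawUrl) {
  let url;
  try {
    url = new URL(rawUrl);
  } catch (err) {
    return true; // unparseable URLs are skipped rather than crashing the crawl
  }
  const host = url.hostname.toLowerCase();
  const path = url.pathname.toLowerCase();
  const badHost = SKIPPED_HOSTS.some((h) => host === h || host.endsWith("." + h));
  const badType = SKIPPED_EXTENSIONS.some((ext) => path.endsWith(ext));
  return badHost || badType;
}

// Return the names of required fields that are missing or empty in a record.
function missingFields(record, requiredFields) {
  return requiredFields.filter(
    (field) => record[field] === undefined || record[field] === null || record[field] === ""
  );
}

console.log(shouldSkip("https://charlotte.craigslist.org/search/sss")); // true
console.log(missingFields({ url: "x", rank: 3, textLength: null }, ["url", "rank", "textLength"]));
```

Running a check like `missingFields` on every record during the first few thousand results makes structural problems visible early, while they are still cheap to fix.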

The results

We have reported our results from the ranking predictions in a separate post, but I wanted to review some of the interesting insights in the data collected.

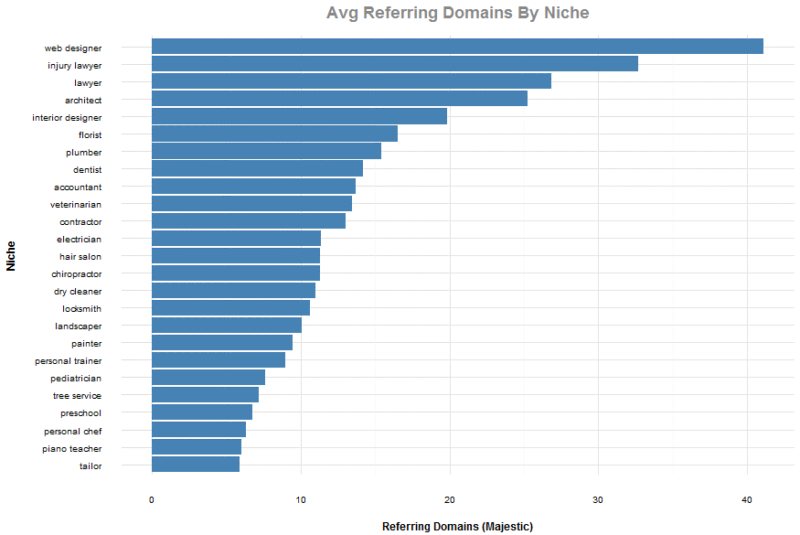

Most competitive niches

For this data, we reduced the entire data set to only include rankings in the top 20 and also removed the top four percent of observations based on referring domains. The goal in removing the top four percent by referring domains was to keep URLs such as Google, Yelp and other large websites from having undue influence on the averages. Since we were focusing on service industry results, we wanted to make sure we were comparing local business websites rather than major directories.
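A minimal sketch of this kind of filtering follows. The row shape (`rank`, `referringDomains`), the function names, and the choice to trim the top four percent before applying the rank cutoff are all assumptions; the text does not say in which order the two filters were applied.

```javascript
// Drop the top `percent` of rows by `key`, returning the rest sorted ascending.
function dropTopPercent(rows, key, percent) {
  const sorted = [...rows].sort((a, b) => a[key] - b[key]);
  const keepCount = Math.floor((sorted.length * (100 - percent)) / 100);
  return sorted.slice(0, keepCount);
}

// Trim outliers by referring domains, then keep only the top `maxRank` positions.
function filterObservations(rows, maxRank = 20, percent = 4) {
  const trimmed = dropTopPercent(rows, "referringDomains", percent);
  return trimmed.filter((row) => row.rank <= maxRank);
}

// Toy data: 100 rows with referring-domain counts 1..100 and ranks cycling 1..50.
const toyRows = Array.from({ length: 100 }, (_, i) => ({
  rank: (i % 50) + 1,
  referringDomains: i + 1,
}));
console.log(filterObservations(toyRows).length); // 40
```

In the toy data, the four rows with the most referring domains are removed first, and 40 of the remaining 96 rows sit in positions 1 to 20.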

In the chart below, we assume that the web designer category is the largest due to the practice of placing footer links on websites the designer has built. The next two highest are no surprise to those of us who work in the legal niche.

Top city link competition

Again we filtered to the top 20 ranking results across all observations and also removed the top four percent of observations based on referring domains to remove URLs from Google, Yelp and other large websites. Feel free to use this in proposals when qualifying needs for clients in particular cities.

The top results here are no surprise to those of us who have had clients in these cities. New York, in particular, is a daunting task for many niches.

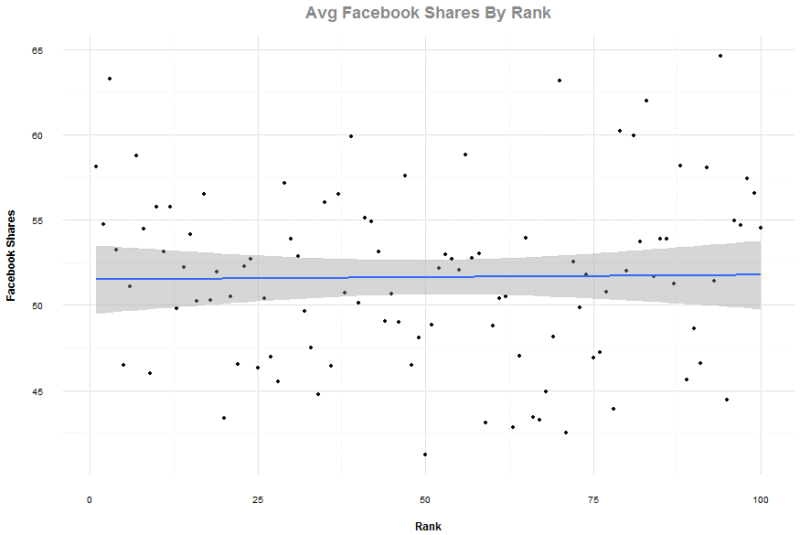

Facebook shares

For this data, we kept the full rank data at 100 results per search term, but we removed observations with referring domains over the top four percent threshold and over 5,000 Facebook shares. This was a minimal reduction to the overall size, yet it made the data plot much cleaner.

The plot reminds me of when I go out to the shooting range, in that there is really no order to the shots. The Pearson correlation of average shares to rank is 0.016, which is effectively zero, and you can tell from the chart that it would be hard to draw a line between Facebook shares and any effect on ranking for these types of sites.
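For readers who want to reproduce this kind of number on their own data, here is a minimal sketch of averaging a metric per position and computing the Pearson coefficient against the position. The row shape and function names are assumptions, and the toy values are made up purely to exercise the code.

```javascript
// Mean of a numeric array.
const mean = (xs) => xs.reduce((sum, x) => sum + x, 0) / xs.length;

// Pearson correlation coefficient between two equal-length arrays.
function pearson(xs, ys) {
  const mx = mean(xs);
  const my = mean(ys);
  let cov = 0, vx = 0, vy = 0;
  for (let i = 0; i < xs.length; i++) {
    const dx = xs[i] - mx;
    const dy = ys[i] - my;
    cov += dx * dy;
    vx += dx * dx;
    vy += dy * dy;
  }
  return cov / Math.sqrt(vx * vy);
}

// Average a metric per rank position, returning parallel arrays of ranks and means.
function averageByRank(rows, metric) {
  const groups = new Map();
  for (const row of rows) {
    if (!groups.has(row.rank)) groups.set(row.rank, []);
    groups.get(row.rank).push(row[metric]);
  }
  const ranks = [...groups.keys()].sort((a, b) => a - b);
  return { ranks, means: ranks.map((r) => mean(groups.get(r))) };
}

// Made-up toy rows, not data from the study.
const shareRows = [
  { rank: 1, shares: 10 }, { rank: 1, shares: 30 },
  { rank: 2, shares: 15 }, { rank: 2, shares: 15 },
  { rank: 3, shares: 5 },  { rank: 3, shares: 15 },
];
const { ranks, means } = averageByRank(shareRows, "shares");
console.log(pearson(ranks, means)); // -1 for this perfectly linear toy data
```

A value near zero, like the 0.016 reported for Facebook shares, means the per-position averages show no consistent linear trend in either direction.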

Majestic Citation Flow

For Citation Flow (CF), we stayed with the full 100 results per search term, but we again removed the top four percent of referring domains. Unsurprisingly to anyone who uses this metric, there was a very strong correlation of -0.872 between the average CF score and ranking position. The correlation is negative because the position number gets lower (closer to 1) as the CF score gets higher. This is a good reason to use CF.

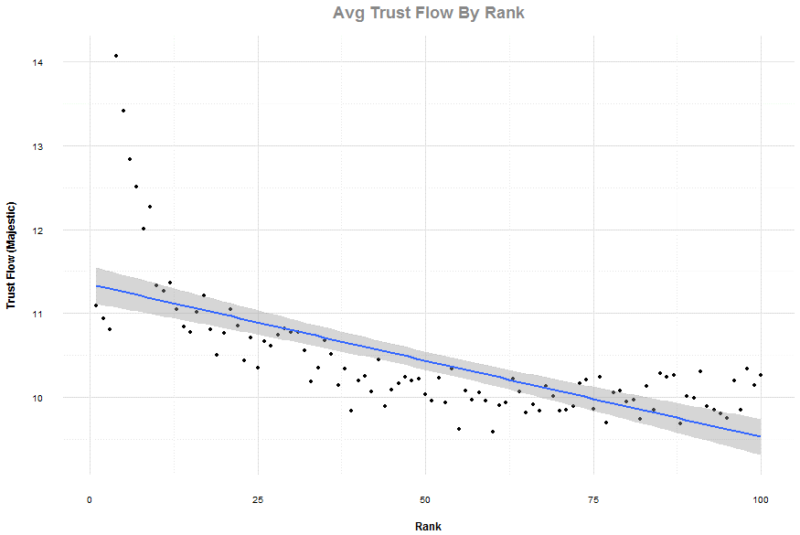

Majestic Trust Flow

For Trust Flow, we stayed with the full 100 results per search term, but we again removed the top four percent of referring domains. The correlation was not as strong as Citation Flow, but relatively strong at -0.695. An interesting note from the graph is the trajectory upward as you get into the top 20 results. Also notice that the 1 to 3 positions are probably skewed due to the impact of other metrics on local results.

Response time

Speed is on top of everyone’s mind today with Google’s focus on it and new projects like AMP. Due to crawling limitations, we were only able to measure the time it took for the hosting server to get us the contents of the page. We wanted to be careful not to call this load time, as that is often considered as the time it takes your browser to load and render the page. There is also a consideration of latency encountered between our server (AWS) and the host, but we think in aggregate any skew in results would be negligible.
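One way to measure this kind of server response time in Node is sketched below. It is an assumption about the approach, not the crawler's actual code; `measureResponseTime` is a hypothetical name, and the injectable `fetchImpl` parameter exists only so the function can be exercised without a network.

```javascript
// Time until the response body has been fully received, in milliseconds.
// `fetchImpl` defaults to the global fetch available in Node 18+.
async function measureResponseTime(url, fetchImpl = fetch) {
  const start = performance.now();
  const response = await fetchImpl(url);
  await response.text(); // wait for the full body, not just the headers
  return performance.now() - start;
}

// Stand-in fetcher so the example runs offline; swap in the real fetch to crawl.
const fakeFetch = async () => ({ text: async () => "<html></html>" });

measureResponseTime("https://example.com/", fakeFetch).then((ms) => {
  console.log(typeof ms); // "number"
});
```

This captures network latency plus the time the server takes to deliver the HTML, and nothing about rendering, scripts or images, which is why it should not be called load time.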

Again, this is 100 search results for each search term, with the top four percent by referring domains removed. The Pearson correlation is 0.414, which suggests a moderate relationship between response time and ranking: on average, slower responses go with lower-ranked positions.

Although this is similar to the correlation found by Backlinko for HTTPS, it might simply reflect that better-run, better-optimized sites tend to sit toward the top. In the Backlinko findings, I would question whether it is accurate to chalk up HTTPS to Google ranking preference (I know what they said) or to the fact that in many verticals, the top results are dominated by brands that tend toward HTTPS.

Text length

This one was a bit of a shock to me, but keep in mind that the keywords in this data set were more transactional in nature and not the usual Wikipedia-eliciting queries. The full 100 results were used, with the top four percent by referring domains removed.

The Pearson correlation to rank is 0.829. Because the correlation is positive, average text length grows as the position number grows, so shorter pages tended to sit nearer the top for these queries, which suggests that it may not all be about longer content. Note that the local results are again clearly visible, and that text length is measured in characters; dividing by 4.5 gives an approximate word count.
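The character-to-word conversion is simple enough to show directly. The function name `charsToWords` is hypothetical; the 4.5 factor is the average quoted above and will vary by site and writing style.

```javascript
const AVG_CHARS_PER_WORD = 4.5; // conversion factor quoted in the text

// Approximate word count for a text length measured in characters.
function charsToWords(charCount) {
  return Math.round(charCount / AVG_CHARS_PER_WORD);
}

console.log(charsToWords(4500)); // 1000
console.log(charsToWords(9000)); // 2000
```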

Server type

One of the other features that we collected is server type. This data was pulled from the “Server” response header and classified into one of 13 categories. We restricted the results to the top 20 for each search term, and no filter was placed on referring domains. Also, we omitted types that were not defined or were infrequent in the dataset. The type “GWS” stands for Google Web Server. Its lower (better) average rank can be attributed to Google video and Google local results typically appearing with prominent positioning.
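A classification like this can be sketched with a small pattern table. The categories and patterns below are assumptions for illustration; the study's 13 categories are not listed, so this is not the actual mapping.

```javascript
// Hypothetical category patterns; the study's 13 categories are not listed in the text.
const SERVER_PATTERNS = [
  ["GWS", /^gws/i],
  ["Apache", /apache/i],
  ["Nginx", /nginx/i],
  ["IIS", /microsoft-iis/i],
  ["LiteSpeed", /litespeed/i],
  ["Cloudflare", /cloudflare/i],
];

// Map a raw "Server" header value to a coarse category.
function classifyServer(serverHeader) {
  if (!serverHeader) return "Undefined";
  for (const [category, pattern] of SERVER_PATTERNS) {
    if (pattern.test(serverHeader)) return category;
  }
  return "Other";
}

console.log(classifyServer("Apache/2.4.41 (Ubuntu)")); // "Apache"
console.log(classifyServer("gws"));                    // "GWS"
```

Collapsing version strings into a handful of categories keeps the feature usable; raw header values would otherwise produce hundreds of near-unique labels.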

URL depth

For URL depth, we filtered to the top 20 ranking results across all observations and also removed the top four percent of observations based on referring domains to remove URLs from Google, Yelp and other large websites. This is an interesting one because common advice is that you want your most important results as close to the root of the site as possible. Also, notice the impact of local in terms of preference for the home page of a website.
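URL depth can be defined in more than one way. The sketch below assumes it means the number of non-empty path segments, with the home page at depth 0; the study's exact definition is not stated, and `urlDepth` is a hypothetical name.

```javascript
// Depth = number of non-empty path segments; the home page has depth 0.
function urlDepth(rawUrl) {
  const { pathname } = new URL(rawUrl);
  return pathname.split("/").filter(Boolean).length;
}

console.log(urlDepth("https://example.com/"));                    // 0
console.log(urlDepth("https://example.com/services/tax/"));       // 2
console.log(urlDepth("https://example.com/a/b/c.html?x=1"));      // 3
```

Using the parsed `pathname` keeps query strings and fragments from being counted as extra levels.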

Conclusion

I don’t think there was anything truly earth-shattering in the results of our data analysis, and this is only a small sampling of data from the 70+ features that we collected during our training.

The two most important takeaways for me are that links and speed are areas where one can make the most impact on a website. Content needs to be good (and there are indications all over the place that user behavior influences rank for some verticals), but you need to be seen to generate user behavior. The one thing that is most interesting in this data set is that it is more geared towards small business-type queries than other studies that sample a broad range of queries.

I have always been an advocate of testing, rather than relying on what works for other people or what was reported on your favorite blogs. GetStat and a bit of JavaScript (node) can give you the ability to easily put together collection mechanisms to get a more nuanced view of results relevant to the niche you are working in. Being able to deliver these types of studies can also help when trying to justify to our non-SEO peers why we are recommending that things be done a certain way.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
