Google Adds URL Parameter Options to Google Webmaster Tools


In 2009, Google launched the parameter handling feature of Google Webmaster Tools, which enabled site owners to specify which parameters on their site were optional and which were required. A year later, they improved this feature by adding an option for a default value. Google says that they’ve seen a positive impact from the usage of those tools thus far. Now, they’ve improved the feature again by enabling site owners to specify how a parameter changes the content of the page.

My original article on parameter handling describes the issues that parameters can cause with how search engines crawl and index a site. In particular, as parameters multiply, the number of near-duplicate pages grows exponentially, and incoming links may point to any of the various versions. This dilutes PageRank potential, and the “canonical” version of a page may not end up ranking as well as it otherwise would. Search engines may also spend much of their crawl time on various versions of a small subset of pages, preventing them from fully crawling (and thus indexing) the site.
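To make the “grows exponentially” point concrete, here is a minimal Python sketch that enumerates the URL variants a crawler could reach for a single page. The page URL and the `sort`, `color`, and `sessionid` parameters are hypothetical, and the sketch assumes each parameter is either omitted or set to one of its listed values; it ignores parameter reordering, which would add still more variants.

```python
from itertools import product
from urllib.parse import urlencode


def url_variants(base, params):
    """List every URL reachable when each parameter is either omitted
    or set to one of its possible values (parameter order is fixed)."""
    names = sorted(params)
    # None stands for "parameter not present in the URL".
    choices = [[None] + list(params[name]) for name in names]
    variants = []
    for combo in product(*choices):
        query = [(name, value) for name, value in zip(names, combo) if value is not None]
        variants.append(base + ("?" + urlencode(query) if query else ""))
    return variants


# Hypothetical page and parameters.
urls = url_variants(
    "http://www.example.com/shoes.php",
    {"sort": ["price", "name"], "color": ["red", "blue", "green"], "sessionid": ["a1", "b2"]},
)
print(len(urls))  # 36 = 3 * 4 * 3, all serving near-duplicate content
```

Each additional parameter multiplies the total rather than adding to it, which is why the variant count climbs so quickly.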

New Options for Providing Google Information About the Parameters In Your URLs

So what’s new? You can now specify whether or not a parameter changes the content on the page. For those that don’t, once you specify that, you’re done! Things get more complicated if a parameter changes the content on the page. You now have a number of options available, described in more detail below. This latest incarnation of the feature impacts both which URLs are crawled and how the parameters are handled.

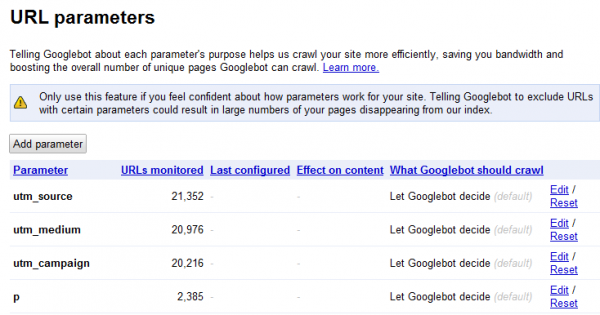

To access the feature, log into your Google Webmaster Tools account, click on the site you want to configure, and then choose Site configuration > URL parameters. You’ll see a list of parameters Google has found on the site, along with the number of URLs Google is “monitoring” that contain each parameter.

The default behavior is “Let Googlebot decide”. This results in Google figuring out duplicates and clustering them. As they say in their help content:

“When Google detects duplicate content, such as variations caused by URL parameters, we group the duplicate URLs into one cluster and select what we think is the ‘best’ URL to represent the cluster in search results. We then consolidate properties of the URLs in the cluster, such as link popularity, to the representative URL. Consolidating properties from duplicates into one representative URL often provides users with more accurate search results.

To improve this process, we recommend using the parameter handling tool to give Google information about how to handle URLs containing specific parameters. We’ll do our best to take this information into account; however, there may be cases when the provided suggestions may do more harm than good for a site.”

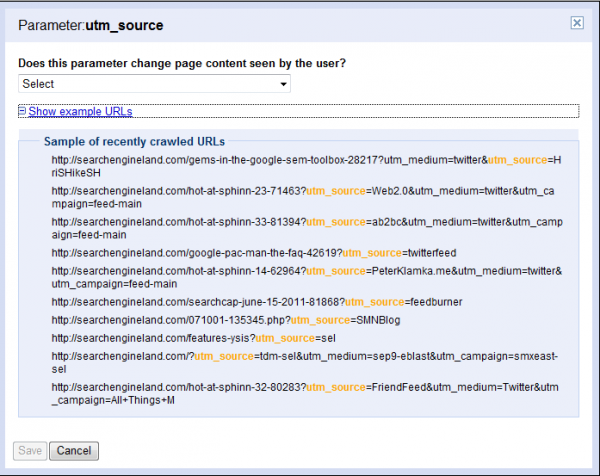

But if you want to have some control over that process, click Edit to specify whether that parameter changes the page content. You can look at a list of recently crawled URLs with that parameter to help you figure out what they’re used for.

Your choices are:

- No: Doesn’t affect page content

- Yes: Changes, reorders, or narrows page content

If a Parameter Doesn’t Change Page Content

If you choose no, you see the following message:

Select this option if this parameter can be set to any value without changing the page content. For example, select this option if the parameter is a session ID. If many URLs differ only in this parameter, Googlebot will crawl one representative URL.

Google will associate any URLs containing that parameter with the versions without it. For instance, if Google comes across the following two URLs, it will cluster them together under the first URL:

- www.mygreatsite.com/buffy-is-still-awesome.php

- www.mygreatsite.com/buffy-is-still-awesome.php?referrer=buffyangelship4eva
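The clustering described above can be approximated with a short Python sketch. It assumes the site owner has marked `referrer` (and, hypothetically, `sessionid`) as “No: Doesn’t affect page content,” strips those parameters, and groups URLs by what remains. This only illustrates the idea; it is not Google’s actual algorithm, which also weighs other signals. The `http://` scheme is added for illustration.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters marked "No: Doesn't affect page content".
NON_CONTENT_PARAMS = {"referrer", "sessionid"}


def representative(url):
    """Return the URL with all non-content parameters removed,
    keeping any remaining parameters in their original order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CONTENT_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


def cluster(urls):
    """Group URLs that differ only in non-content parameters."""
    groups = defaultdict(list)
    for url in urls:
        groups[representative(url)].append(url)
    return dict(groups)


clusters = cluster([
    "http://www.mygreatsite.com/buffy-is-still-awesome.php",
    "http://www.mygreatsite.com/buffy-is-still-awesome.php?referrer=buffyangelship4eva",
])
for rep, members in clusters.items():
    print(rep, len(members))
```

Both URLs collapse into a single cluster keyed by the parameter-free version, which is the URL Google would treat as representative in this scenario.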

If a Parameter Changes Page Content

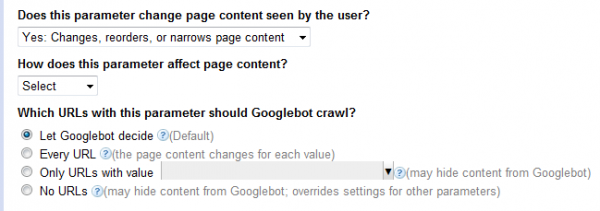

If you choose Yes, you see even more options.

How does this parameter affect page content:

- Sorts – the content stays the same, but is reordered

- Narrows – the parameter is a filter that displays a subset of the content

- Specifies – the parameter determines the page content

- Translates – the page content appears in a different language

- Paginates – the parameter indicates the page number of a larger set of content

- Other – none of the above

Which URLs with this parameter should Googlebot crawl:

- Let Googlebot decide – this is the default; Google will use other signals to determine which URLs to crawl

- Every URL – Google should crawl all URLs and use the value information above to choose what to consolidate

- Only URLs with value – triggers a list of values Googlebot has crawled to choose from; use this option if you want Google to crawl only URLs that contain a specific value for a parameter (and not crawl any others)

- No URLs – you don’t want Google to crawl any URLs with this parameter (but note that using a pattern match in robots.txt may be a better option in this case)
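One way to picture the four crawl settings is as a per-parameter filter. The sketch below is a hypothetical Python model, not how Googlebot is implemented: the setting names (`"decide"`, `"every"`, `"only_value"`, `"none"`) are made-up labels for the options above, and `None` means “this setting gives no instruction,” either because the URL lacks the parameter or because the decision is left to Googlebot.

```python
from typing import AbstractSet, Optional
from urllib.parse import parse_qs, urlsplit


def should_crawl(url: str, param: str, setting: str,
                 allowed_values: AbstractSet[str] = frozenset()) -> Optional[bool]:
    """Apply one parameter's crawl setting to a URL.

    Returns True/False for an explicit instruction, or None when the
    setting does not decide (parameter absent, or "Let Googlebot decide").
    """
    values = parse_qs(urlsplit(url).query, keep_blank_values=True).get(param)
    if values is None:
        return None  # the setting only governs URLs containing this parameter
    if setting == "decide":
        return None  # Let Googlebot decide
    if setting == "every":
        return True  # Every URL
    if setting == "only_value":
        return any(value in allowed_values for value in values)  # Only URLs with value
    if setting == "none":
        return False  # No URLs
    raise ValueError(f"unknown setting: {setting!r}")


# Hypothetical: crawl only sort=price URLs, never crawl session IDs.
print(should_crawl("http://www.example.com/shoes.php?sort=price", "sort", "only_value", {"price"}))  # True
print(should_crawl("http://www.example.com/shoes.php?sort=name", "sort", "only_value", {"price"}))  # False
print(should_crawl("http://www.example.com/shoes.php?sessionid=a1", "sessionid", "none"))  # False
```

Returning `None` rather than `True` for URLs without the parameter keeps this one setting from overriding whatever other rules apply to those URLs.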

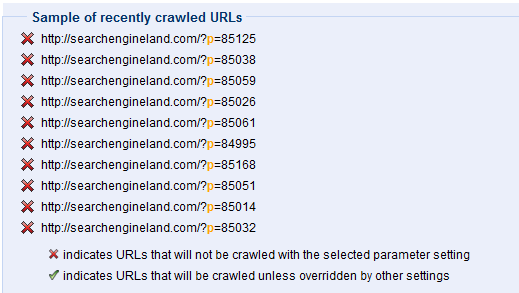

Handily, Google shows you how your choices will impact crawling:

Seem overwhelming? Fortunately, another new feature Google has launched is the ability to download all of the parameter settings as a CSV file so you can sort through them offline.

Should You Use This Feature?

As Google notes on the page, “Only use this feature if you feel confident about how parameters work for your site. Telling Googlebot to exclude URLs with certain parameters could result in large numbers of your pages disappearing from our index.” The benefit, though, is multifold. The crawl is more efficient, so more pages of the site may get crawled and therefore indexed, and links are consolidated to a single version that may then gain rankings.

My guess is that Google may not immediately stop crawling the pages, as they may want to make sure the content is in fact the same before consolidating the URLs into a single version. But if you aren’t an expert with URL parameters and canonicalization, you probably want to let Google sort this out and not change these settings. They do, in fact, do a fairly good job, particularly when URLs use standard key-value pairs.

If you know the ins and outs of URL parameters and know exactly what you want Google to crawl, then go for it. Jonathon Colman of REI jumped on these new features right away!

Should you use this feature if you’re already using the rel=canonical attribute on the pages? Sure! Google’s help says:

“You can also give Google additional information by adding the rel="canonical" element to the HTML source of your preferred URL. Use which option works best for you; it’s fine to use both if you want to be very thorough.”
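If you do combine both approaches, the canonical URL for a page is often just the URL with its non-content parameters removed. A minimal Python sketch that emits the `<link rel="canonical">` element for a requested URL might look like the following; the `NON_CONTENT_PARAMS` set and the example URLs are hypothetical, and a real site would typically generate this tag in its page templates.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that don't change page content.
NON_CONTENT_PARAMS = {"referrer", "sessionid"}


def canonical_link(url):
    """Build a rel="canonical" link element pointing at the URL with
    non-content parameters (and any fragment) removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CONTENT_PARAMS
    ]
    canonical = urlunsplit(parts._replace(query=urlencode(kept), fragment=""))
    # Escape the URL for use inside an HTML attribute (& becomes &amp;).
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'


print(canonical_link("http://www.mygreatsite.com/buffy-is-still-awesome.php?referrer=buffyangelship4eva"))
```

The element belongs in the page’s `<head>`, and Google treats it as a strong hint rather than a directive, which is one reason the two mechanisms can reasonably be used together.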

I still have questions about this feature, like how it impacts a crawlable link architecture with substantial pagination. If you’re interested in digging into this as well, note that I’ll be moderating a session on this at SMX East, and Maile Ohye of Google, who’s spent a lot of time on this issue, as well as experienced practitioners, will be on hand to provide practical answers.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. Search Engine Land is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.