PPC experimentation vs. PPC testing: A practical breakdown

Maximize PPC impact by integrating experimentation with testing tactics, plus tips on using Google and Meta advertising tools effectively.

PPC experts love to talk about testing and experimentation, and for good reason: they’re both crucial tools in a marketer’s toolkit.

Yet, they’re often used interchangeably despite being fundamentally different.

Testing is about optimization – refining what’s already in play – while experimentation is about discovery, helping you explore bold ideas and validate strategic shifts.

PPC experimentation vs. PPC testing: How do they differ?

Understanding the distinction between experimentation and testing is key to using them effectively in your PPC strategy.

The way you approach each should reflect their distinct purposes and outcomes.

Experimentation

Experimentation is a scientific method used to validate hypotheses or discover new knowledge.

It’s exploratory and focuses on learning something fundamentally new.

For example, you might run a campaign on TikTok to see how it resonates with younger audiences.

Testing

Testing is a structured procedure designed to assess the quality or performance of a specific variable.

It’s about optimization, not discovery.

An example would be changing a single variable in an ad creative, such as the headline, to see if CTR improves.

The distinction is crucial because it dictates how you set up your initiatives and interpret results.

- Experiments often require more robust setups because they are designed to explore broader questions.

- Tests focus on smaller, tactical changes to optimize performance within existing campaigns.

In my two-plus decades in marketing, I’ve seen that the most successful teams find the right balance between testing for quick wins and experimenting to build trust and uncover new opportunities.

Let’s explore in-platform experimentation tools, manual testing methods and even advanced analytics techniques like media mix modeling, which go beyond both testing and experimentation.

Dig deeper: How to develop PPC testing strategies

In-platform experiments

One advantage of advertising on mature platforms like Google and Meta is that they offer automated, in-platform tests. These are so dialed in that they can safely be called experiments.

Beyond offering solid methodology, these tools are fast and relatively easy to set up, much more so than homemade tests would be. They include:

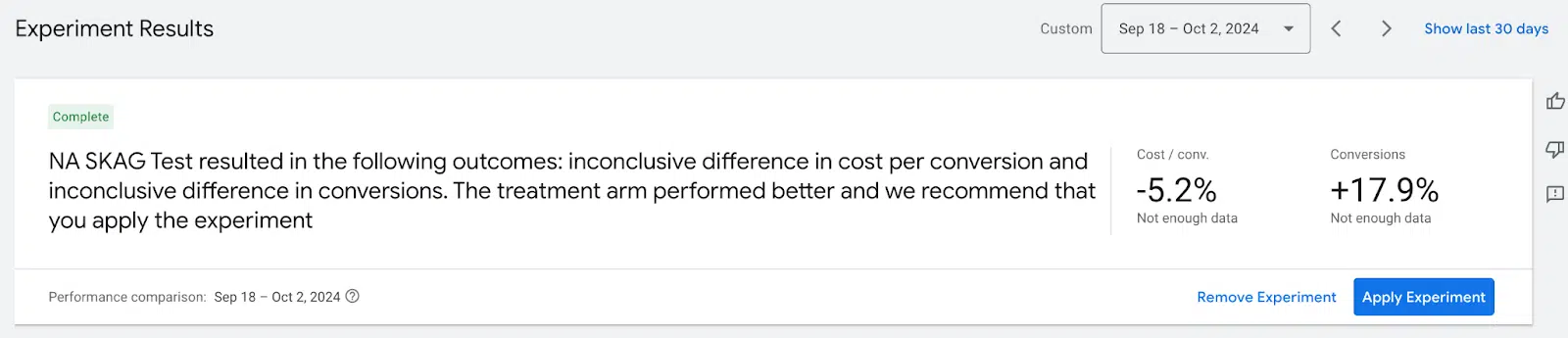

Google Ads experiments

Want to test bidding strategies, new landing pages or slightly different ad copy?

Google lets you set up Experiments without messing with your original campaign.

A key caveat: Google Ads Experiments require a high volume of data to be effective. Because traffic is split between a control group and a test group, each group sees only part of your data, so you need more volume than your campaigns would otherwise require.

Use these experiments if you’re bringing in more than 100 conversions per month. Below that, your results won’t be as reliable.
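To see why volume matters, here is a minimal sketch of a standard sample-size calculation for a two-proportion test. It assumes a 50/50 traffic split, a two-sided test at 5% significance and 80% power. The 3% conversion rate and 20% lift are hypothetical inputs, and `sample_size_per_arm` is an illustrative helper, not part of Google Ads.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(base_rate: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate clicks needed in EACH arm of a 50/50 split to detect a
    relative lift in conversion rate with a two-sided two-proportion z-test
    (normal approximation)."""
    p1 = base_rate                        # control conversion rate
    p2 = base_rate * (1 + relative_lift)  # conversion rate we hope the test arm reaches
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical account: 3% conversion rate, hoping to detect a 20% relative lift.
n = sample_size_per_arm(0.03, 0.20)
print(f"clicks per arm: {n:,}, expected conversions per arm: {n * 0.03:,.0f}")
```

Because the required sample grows with the inverse square of the difference you want to detect, halving the target lift roughly quadruples the data you need, which is why low-volume accounts struggle to reach conclusive results.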

If you do have enough data to work with, you can expect black-and-white results like this one:

Google also offers Video experiments specific to YouTube ads. These enable advertisers to test and compare different video ad variations to find the most effective one.

Google allocates traffic between video ad variations based on set parameters (creative, ad length and format). It measures:

- View-through rate.

- Brand awareness lift.

- Purchase intent.

These are fuzzier metrics than CPC or CVR, but they should be part of your quiver if you’re embracing a more full-funnel approach to your campaigns.

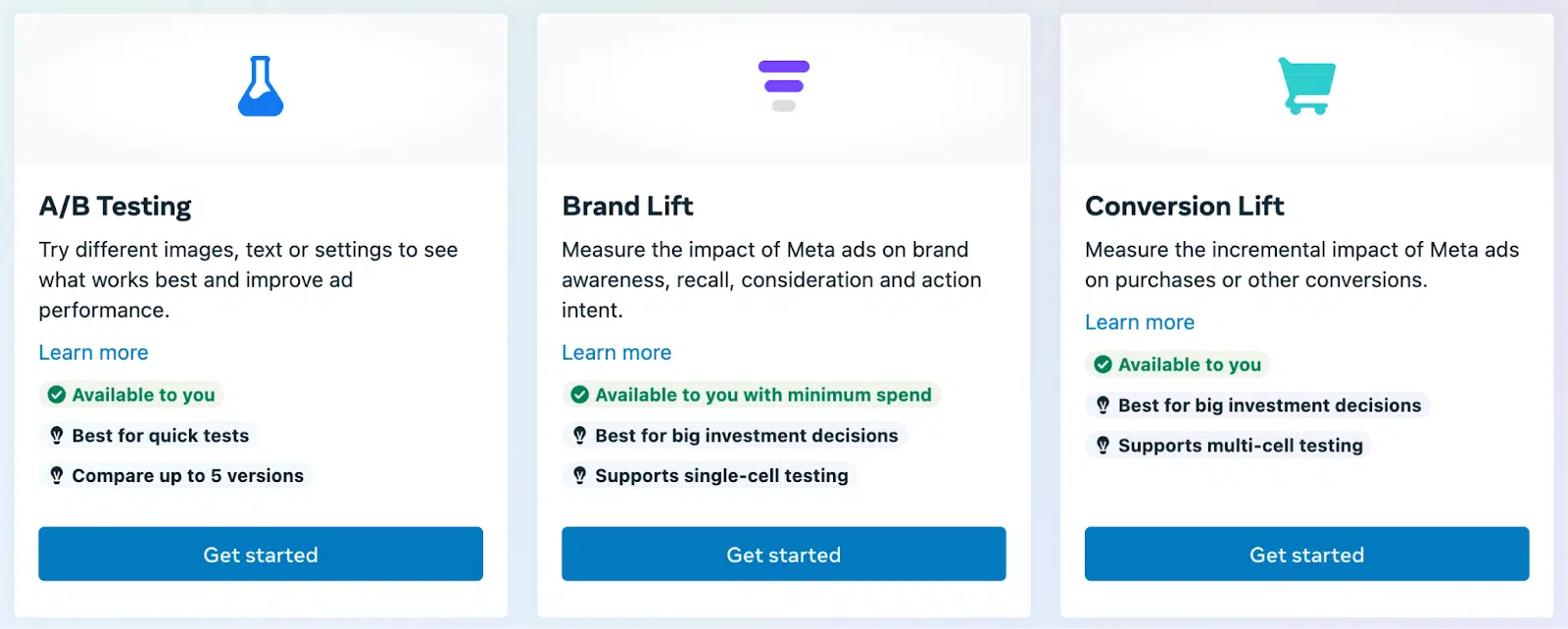

Meta’s experiment options

Meta offers several experiments designed to help advertisers measure and optimize the impact of their ads. Here’s a look at the most popular options:

- A/B testing

- Also known as split testing, this allows advertisers to compare two versions of an ad to see which performs better.

- Brand lift studies

- Measure how exposure to an ad impacts key brand metrics such as brand awareness, ad recall, message association and purchase intent.

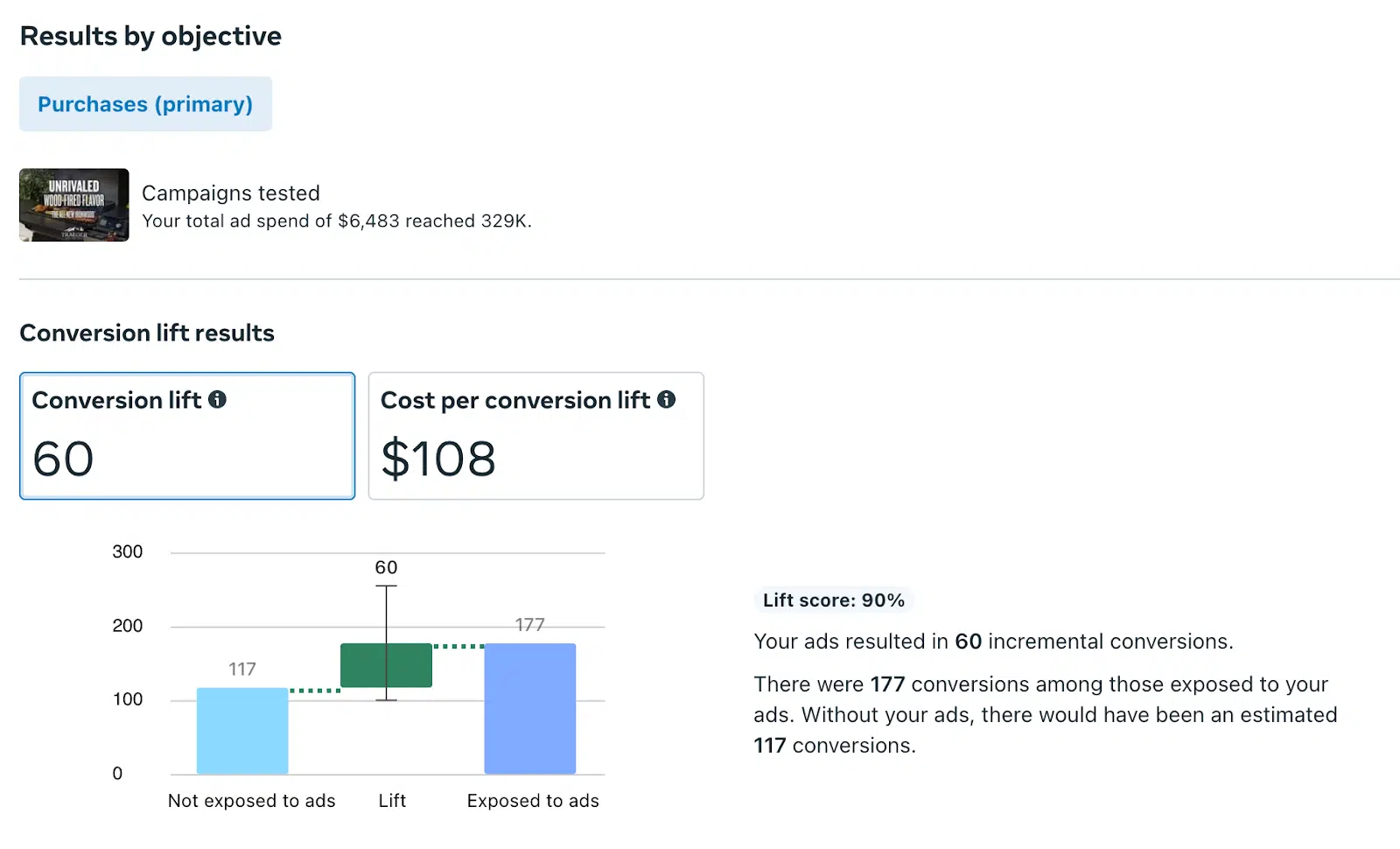

- Conversion lift studies

- Aim to measure the direct impact of Meta ads on online or offline conversions by isolating the people who saw the ads and tracking their actions.

- This is my favorite type of Meta experiment as it helps measure the true incrementality of Meta’s paid ads, which is a huge factor in our agency clients’ budget allocation.

Below is an example of results from a Meta Conversion lift test.

In this case, ads resulted in 60 incremental conversions – that is, conversions that would not have occurred without paid ads.

The experiment also provides data on the average cost of each incremental conversion, which will typically be higher than the average overall conversion cost of your campaigns, since only some of the conversions attributed to your ads are truly incremental.
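The arithmetic behind incremental conversions can be sketched as follows, assuming a randomized test/holdout split where the holdout’s conversion rate stands in for what the test group would have done without ads. The function name and all numbers are hypothetical, and Meta’s own methodology may apply further statistical adjustments.

```python
def conversion_lift(test_users: int, test_conversions: int,
                    control_users: int, control_conversions: int,
                    spend: float) -> dict:
    """Incrementality estimate from a randomized test/holdout split (hypothetical helper)."""
    # Scale the holdout's conversions to the size of the test group: an estimate
    # of how many conversions the test group would have had without ads.
    baseline = control_conversions * (test_users / control_users)
    incremental = test_conversions - baseline
    return {
        "baseline_conversions": baseline,
        "incremental_conversions": incremental,
        "lift_pct": 100 * incremental / baseline,
        # Guard against zero or negative lift, where a cost per incremental conversion is undefined.
        "cost_per_incremental_conversion": spend / incremental if incremental > 0 else float("inf"),
    }


# Hypothetical numbers, not from a real study.
result = conversion_lift(test_users=200_000, test_conversions=1_300,
                         control_users=50_000, control_conversions=300,
                         spend=5_000.0)
for key, value in result.items():
    print(f"{key}: {value:,.2f}")
```

Incremental conversions are usually only a fraction of the conversions a platform attributes to its ads, so dividing the same spend by the smaller number yields a higher cost per conversion.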

Responsive search ads

Responsive search ads (RSAs) are Google’s test-a-lot-at-once ads.

You can give Google up to 15 headlines and four descriptions, and it will shuffle them like a deck of cards until it finds a combination that works.

RSAs are best suited to testing ad copy elements to see which ones resonate most with audiences, with the goal of improving click-through rates and conversions.
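Part of why Google needs to rotate so many variations is combinatorial. The sketch below counts ordered, full-length combinations, assuming an RSA shows three headlines and two descriptions, that order matters and that nothing is pinned. It is a simplification, since Google can also serve fewer assets; the second count models pinning one headline to position 1.

```python
from math import perm

# Assumptions: 3 headline slots and 2 description slots, order matters.
HEADLINES, DESCRIPTIONS = 15, 4
HEADLINE_SLOTS, DESCRIPTION_SLOTS = 3, 2

# No pinning: any 3 of 15 headlines in order, any 2 of 4 descriptions in order.
combos = perm(HEADLINES, HEADLINE_SLOTS) * perm(DESCRIPTIONS, DESCRIPTION_SLOTS)
print(f"Unpinned combinations: {combos:,}")  # 2,730 * 12 = 32,760

# One headline pinned to position 1: the other 14 fill positions 2 and 3.
pinned = perm(HEADLINES - 1, HEADLINE_SLOTS - 1) * perm(DESCRIPTIONS, DESCRIPTION_SLOTS)
print(f"With one pinned headline: {pinned:,}")  # 182 * 12 = 2,184
```

Pinning shrinks the space Google has to explore, which gives you more control over messaging at the cost of fewer combinations being tested.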

Manual experiments and testing

Now, if you’re a PPC purist – or you need to use a different source of truth for your experiments – manual options are for you.

Here are a few manual methods that’ll keep you in the driver’s seat:

A/B testing (DIY edition)

You create two variations of an ad or landing page and compare their performance to determine which one works better.

It’s straightforward:

- Split your audience.

- Run the two versions.

- Watch the performance like a hawk.
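If you control the landing page, one common way to split your audience is to hash a stable visitor identifier so each person always lands in the same variant. This is a minimal sketch; `assign_variant`, the ID format and the experiment name are hypothetical, and ad-level splits are usually handled by the ad platform instead.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically bucket a visitor so they always see the same variant."""
    # Salting with the experiment name gives each test its own independent split.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Simulate 10,000 hypothetical visitors to check the split is roughly even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "landing-page-test")] += 1
print(counts)
```

Hashing instead of random assignment on each visit keeps returning visitors in the same group, so the two variants’ results aren’t contaminated by people who saw both.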

Once enough data rolls in to reach statistical significance, you get to declare the winner.
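Declaring a winner usually means checking that the gap is larger than random noise would explain. Here is a minimal sketch of a two-sided two-proportion z-test, assuming independent visitors and samples large enough for the normal approximation; the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test comparing the conversion rates (or CTRs) of variants A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under "no difference"
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: conversions and clicks for two landing pages.
z, p = two_proportion_z_test(conv_a=120, n_a=4_000, conv_b=165, n_b=4_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Declare a winner" if p < 0.05 else "Keep collecting data")
```

The 5% threshold is a convention, not a rule. Pick it before the test starts, and avoid stopping the moment the p-value dips below it, since repeated peeking inflates false positives.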

Sequential testing

This might be your best bet if you have a small audience or niche market.

You run one version of your ad for a while, then switch to a new version.

There are downsides, though:

- It takes time.

- Seasonality might skew the results.

It works well for changes like account restructures, where it’s hard to form separate control and test groups because the change applies to the whole program.
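One simple way to blunt the seasonality problem is to compare this year’s before/after change with the change over the same calendar windows last year. This sketch assumes last year’s pattern is a fair stand-in for seasonality and that no other major change happened in either window; the function name and figures are hypothetical.

```python
def seasonally_adjusted_change(pre: float, post: float,
                               pre_last_year: float, post_last_year: float) -> float:
    """Percent change from a before/after comparison, netting out the change
    seen over the same calendar windows one year earlier."""
    observed = post / pre                      # this year's before -> after ratio
    seasonal = post_last_year / pre_last_year  # last year's ratio, when no change was made
    return 100 * (observed / seasonal - 1)


# Hypothetical weekly conversions around an account restructure.
change = seasonally_adjusted_change(pre=400, post=480,
                                    pre_last_year=350, post_last_year=385)
print(f"Seasonally adjusted change: {change:+.1f}%")
```

Here a raw 20% jump shrinks to roughly 9% once the 10% seasonal rise seen last year is factored out.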

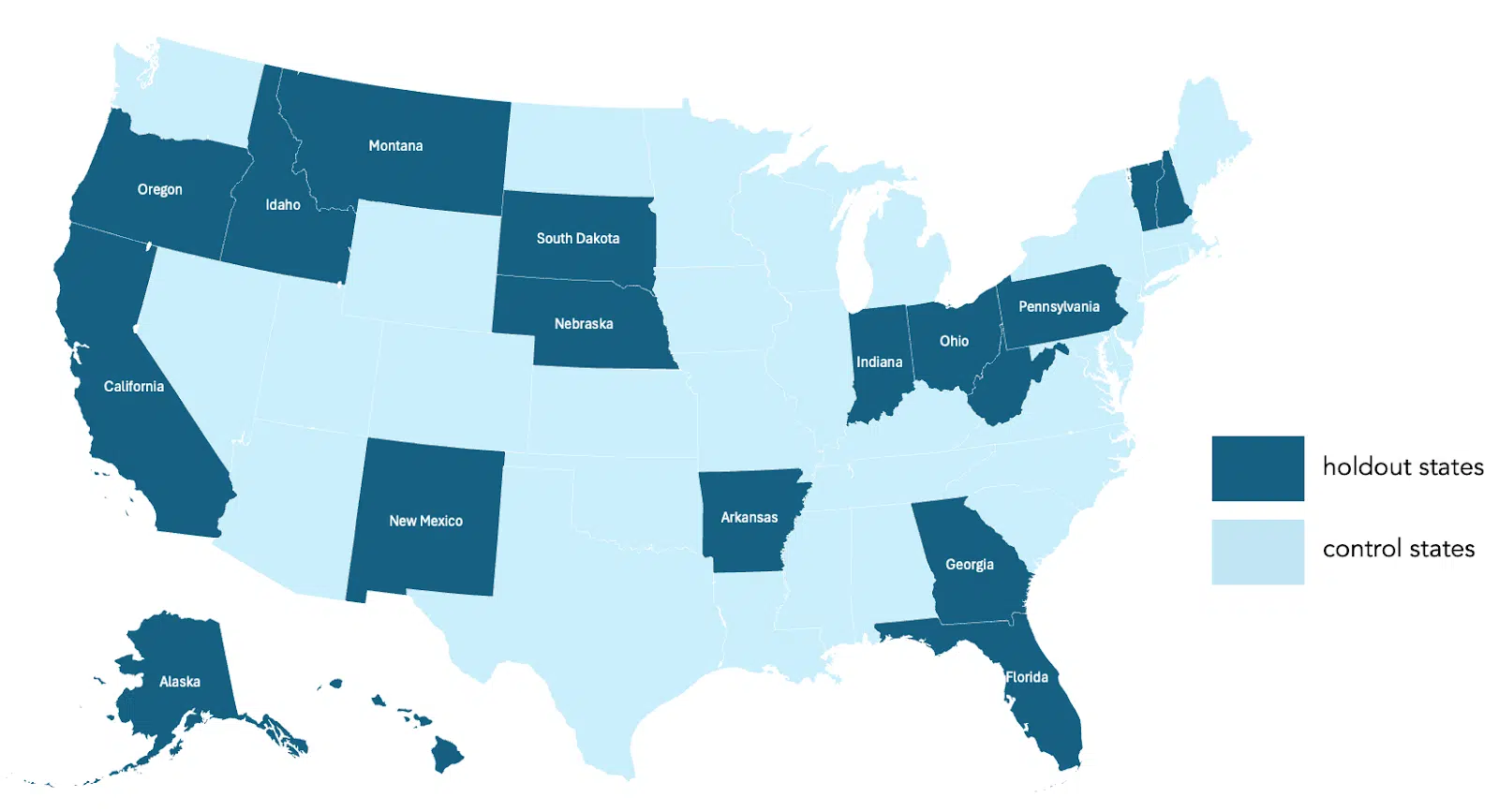

Geo-split testing

If you’re a detail-oriented marketer, you can run two different campaigns targeting different geographic regions.

Want to see if your ad resonates more in Chicago than in San Francisco?

Geo-split testing gives you clear data.

This is one of my favorite test designs as it allows us to use any back-end data (Salesforce, Shopify, sometimes Google Analytics) and helps establish causation – not just correlation.
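A basic way to read a geo-split test is to use the control region’s movement to estimate what the test region would have done without the change, a ratio-based variant of difference-in-differences. This sketch assumes the two regions would otherwise have trended in parallel; `geo_lift` and the figures are hypothetical, and the inputs could come from any back-end source, such as orders exported from Shopify.

```python
def geo_lift(test_pre: float, test_post: float,
             control_pre: float, control_post: float) -> dict:
    """Estimate the lift in a test region using a control region as the counterfactual.

    Assumes both regions would have moved proportionally without the change."""
    # How the control region moved over the same period (seasonality, news, etc.).
    control_ratio = control_post / control_pre
    # What the test region would likely have done without the change.
    expected_test_post = test_pre * control_ratio
    incremental = test_post - expected_test_post
    return {
        "expected_without_change": expected_test_post,
        "incremental": incremental,
        "lift_pct": 100 * incremental / expected_test_post,
    }


# Hypothetical orders: test region (new campaign) vs. control region (unchanged).
print(geo_lift(test_pre=500, test_post=600, control_pre=400, control_post=420))
```

Comparing against the control region rather than the test region’s own history is what lets this design separate the campaign’s effect from market-wide swings.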

Dig deeper: A/B testing mistakes PPC marketers make and how to fix them

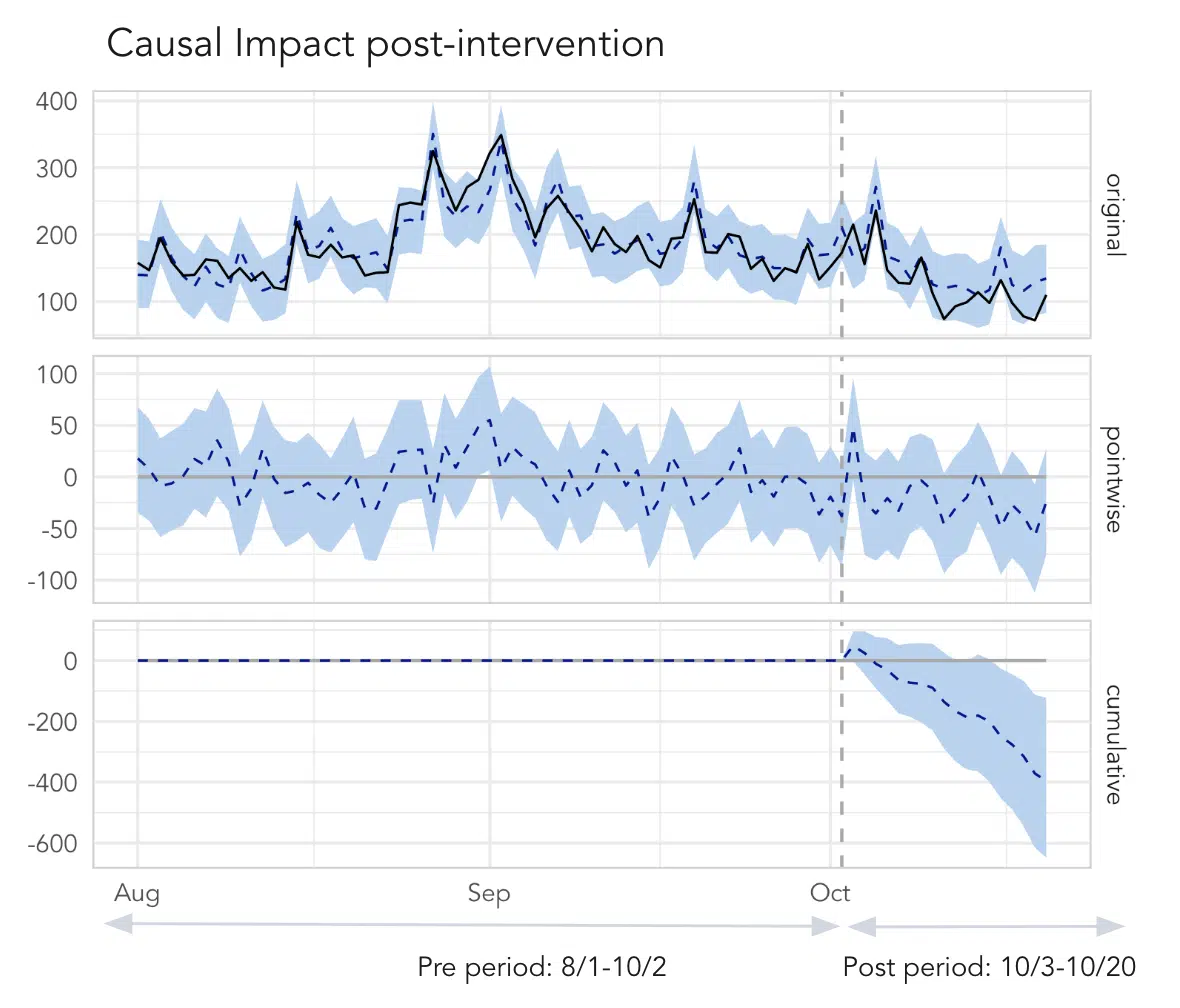

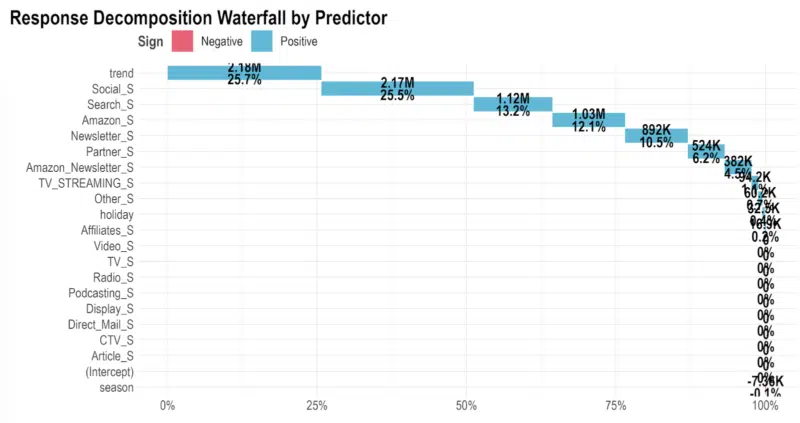

Beyond experimentation vs. testing: Media mix modeling

One of my favorite analytics initiatives to run with our clients is media mix modeling (MMM).

This model analyzes historical data using data science techniques such as non-linear regression, accounting for seasonal trends, among other factors.

Because it’s based on historical data, it doesn’t require you to run any new tests or experiments; its goal is to use past data to help advertisers refine their cross-channel budget allocation to get better results.
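Two transforms commonly used in MMM to capture carryover and diminishing returns are geometric adstock and Hill-style saturation. The sketch below shows only those transforms, with hypothetical spend and parameter values; a real model would then fit a regression of outcomes on these features plus seasonality and other controls, typically with a dedicated MMM tool.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Carry a fixed share (decay) of each period's ad effect into the next period."""
    carried, out = 0.0, []
    for x in spend:
        carried = x + decay * carried
        out.append(carried)
    return out


def hill_saturation(x: float, half_saturation: float, shape: float = 1.0) -> float:
    """Diminishing returns: output rises toward 1 as x grows, reaching 0.5 at half_saturation."""
    return x ** shape / (x ** shape + half_saturation ** shape)


# Hypothetical weekly spend for one channel.
weekly_spend = [100.0, 0.0, 0.0, 50.0]
adstocked = geometric_adstock(weekly_spend, decay=0.5)
features = [hill_saturation(a, half_saturation=100.0) for a in adstocked]
print(adstocked)
print([round(f, 3) for f in features])
```

In practice, decay and saturation parameters are estimated per channel rather than set by hand, which is one reason expert oversight matters when building these models.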

MMM has lots going for it:

- There are robust open-source tools, such as Meta’s Robyn.

- It doesn’t rely on cookies, which makes it easier to keep privacy-compliant.

- It gives you at-scale insights that can lead to transformative growth and performance gains.

- It’s more powerful than any testing method but also high-level. It can answer questions like “Is Facebook incremental?” without running an experiment.

That said, having an expert at the wheel is important when building and interpreting MMM analyses.

In some cases, dialing specific initiatives up or down might help improve your MMM’s accuracy.

This is especially true if you’ve historically run many evergreen initiatives, because always-on spend with little variation makes it hard to separate the impact of each one.

Dig deeper: How to evolve your PPC measurement strategy for a privacy-first future

Balancing testing, experimentation and performance in PPC

So, should you be testing or experimenting?

The answer is clear: both are essential.

In-platform testing and experiments provide quick results, making them ideal for large-scale campaigns and strategic insights.

Meanwhile, manual experimentation offers greater control, leveraging your first-party data for deeper account optimizations.

As emphasized earlier, the most successful marketing teams allocate resources for both approaches.

If you’re already planning for 2025, consider outlining specific tests and experiments, detailing expected learnings and how you’ll apply the insights to drive success.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. Search Engine Land is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.