A Super Fresh Google Index? Server Errors & Rankings Impacts

Whether it's Caffeine or a separate Google update, server errors can negatively impact rankings more quickly now. Columnist Austin Blais explains.

Any SEO professional will tell you that server errors (status codes in the 5xx range) on a website should be minimized. Generally speaking, if a search engine cannot access a page, a user cannot access it either. Server downtime is sometimes necessary to implement fixes and update a website, but when it happens, handle it properly by serving a 503 response, and don’t let it go on for too long.
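As a minimal sketch of what “serve a 503 response” can look like, assume a Python standard-library server stands in for the site during a planned outage. Every request gets 503 Service Unavailable plus a Retry-After header, the standard HTTP header that tells clients how long to wait before retrying. The one-hour `RETRY_AFTER_SECONDS` value, the `MaintenanceHandler` and `run` names and the port are hypothetical placeholders, not values taken from the case studies below.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical value: seconds a client (including a crawler) should wait before retrying.
RETRY_AFTER_SECONDS = 3600


class MaintenanceHandler(BaseHTTPRequestHandler):
    """Answers every GET/HEAD request with 503 Service Unavailable during planned downtime."""

    def _send_503(self, with_body: bool) -> None:
        body = b"Temporarily down for maintenance. Please try again later.\n"
        self.send_response(503)
        # Retry-After tells clients how many seconds to wait before trying again.
        self.send_header("Retry-After", str(RETRY_AFTER_SECONDS))
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        if with_body:
            self.wfile.write(body)

    def do_GET(self) -> None:
        self._send_503(with_body=True)

    def do_HEAD(self) -> None:
        # HEAD gets the same status and headers, but no body.
        self._send_503(with_body=False)


def run(port: int = 8080) -> None:
    """Serve the maintenance response on all interfaces until interrupted."""
    HTTPServer(("", port), MaintenanceHandler).serve_forever()
```

In practice, most sites would configure the same status and header in their web server or load balancer. The point is the combination of a temporary status (503, not 500) and a hint about when to come back.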

But how exactly do server errors impact a site’s search engine optimization? What effects, if any, do server errors have on search engine visibility and rankings?

In early 2015, the effects of a fresh index began to emerge. Our team collected data on 5xx errors and evaluated keyword rankings. The impacts were startling, particularly for 500 Internal Server Error and 503 Service Unavailable responses.

When a page returned a 500 response, it could drop out of the rankings immediately. When a page returned a 503 response, the impact was not immediate: the 503 had to be returned consistently before the page dropped from the rankings and was potentially de-indexed (impacts began within as little as one to two weeks). This behavior occurred on a page-by-page basis.
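The pattern above (500 responses hurting immediately, 503 responses hurting once sustained) suggests a simple monitoring rule. The sketch below is an illustration only. It assumes you already record one status code per URL per day, for example from your own crawls or logs. The `triage` and `consecutive_days` names are hypothetical, and the seven-day threshold is a hypothetical value loosely based on the one-to-two-week window observed here, not a documented Google limit.

```python
from datetime import date, timedelta

# Hypothetical threshold, loosely based on the one-to-two-week window observed above.
SUSTAINED_503_DAYS = 7


def consecutive_days(daily_status: dict[date, int], today: date, code: int) -> int:
    """Count consecutive days, ending today, on which the URL returned `code`."""
    streak, day = 0, today
    while daily_status.get(day) == code:
        streak += 1
        day -= timedelta(days=1)
    return streak


def triage(history: dict[str, dict[date, int]], today: date) -> dict[str, str]:
    """Flag URLs that returned a 500 today, or a 503 for SUSTAINED_503_DAYS or more days."""
    alerts = {}
    for url, daily_status in history.items():
        if daily_status.get(today) == 500:
            alerts[url] = "500 today: rankings may drop immediately"
            continue
        streak = consecutive_days(daily_status, today, 503)
        if streak >= SUSTAINED_503_DAYS:
            alerts[url] = f"503 for {streak} consecutive days: risk of de-indexing"
    return alerts
```

A single 500 triggers an alert right away, while a 503 only does so after it persists. This mirrors the asymmetry described in the text.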

Following are three case studies showing the impact of server errors on search rankings:

1. 500 Internal Server Errors Occurring Intermittently

Intermittent here means that a single URL would sometimes return a 200 Success and sometimes a 500 Internal Server Error. The 500 errors were caused by server instability. Crawl stats such as pages crawled per day declined significantly, and the pages with intermittent 500 errors suffered a negative rankings shift.

When our SEO team crawled these pages, they mostly returned 200 Success responses, but the occasional 500 Internal Server Error still occurred. Intriguingly, the errors couldn’t be replicated at the same magnitude with crawlers such as Merkle’s proprietary crawler, “Scalpel,” or other crawling tools.

However, Google would report these errors via the Google Search Console (GSC) Crawl Errors report. As much as SEOs try to emulate Google crawlers with crawling tools, these results indicate that it’s very difficult to replicate the exact way Google crawls.
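Because crawling tools may not reproduce what Googlebot sees, the server’s own access logs are one place to look for intermittent errors. The sketch below assumes the common Apache/nginx “combined” log format. It identifies Googlebot by its user-agent string only. User agents can be spoofed, so a production check should also verify the requester, for example with the reverse-DNS method Google documents. `intermittent_errors` is a hypothetical helper name.

```python
import re
from collections import Counter, defaultdict
from typing import Iterable

# Combined log format: host ident user [time] "request" status bytes "referer" "user-agent"
COMBINED = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def intermittent_errors(lines: Iterable[str]) -> dict[str, Counter]:
    """Return status-code tallies for URLs that served Googlebot both 2xx and 5xx responses."""
    tallies: dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        match = COMBINED.match(line)
        # Skip unparseable lines and anything not claiming to be Googlebot.
        if match is None or "Googlebot" not in match.group("agent"):
            continue
        tallies[match.group("path")][int(match.group("status"))] += 1
    return {
        path: counts
        for path, counts in tallies.items()
        if any(200 <= code < 300 for code in counts)
        and any(500 <= code < 600 for code in counts)
    }
```

A URL that appears here with mostly 200s and a handful of 500s matches the “mostly fine, occasionally failing” pattern described in case study 1.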

The issue began with the implementation of a Single Page Application (SPA) built with Angular JavaScript. Google crawls with many threads simultaneously, and if those threads requested different files/URLs in the SPA at once (such as many facets within a given category), the server could have failed intermittently.
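One way to test the hypothesis that parallel crawl threads destabilized the server is to request many facet URLs concurrently and tally the status codes. The sketch below is a rough approximation, not a replica of Googlebot’s behavior. The `concurrent_probe` and `fetch_status` names and the worker and round counts are hypothetical, and a probe like this should only be run against servers you are permitted to load-test. A status of 0 stands for a timeout or connection error.

```python
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for `url`, or 0 on a timeout or connection failure."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses arrive as HTTPError
    except OSError:
        return 0  # URLError, socket timeouts and resets all derive from OSError


def concurrent_probe(urls: Sequence[str], workers: int = 20, rounds: int = 5,
                     fetch: Callable[[str], int] = fetch_status) -> Counter:
    """Request each URL `rounds` times across `workers` threads and tally the statuses."""
    jobs = [url for _ in range(rounds) for url in urls]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return Counter(pool.map(fetch, jobs))
```

For example, `concurrent_probe(["https://www.example.com/vacuums?brand=dyson", "https://www.example.com/vacuums?brand=shark"])` with the placeholder URLs swapped for real facet pages. If 5xx counts rise as `workers` increases, parallel load is a likely culprit. The `fetch` parameter exists so the tally logic can be tested without a network.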

Documented Impacts: Server errors increased considerably. Crawl stats were negatively impacted, and rankings subsequently suffered in Google for the pages returning the error. No immediate impacts were recorded in Bing.

Ranking shifts during the intermittent 500 responses:

Google:

- 2,122 keywords dropped from the top 10 in rankings.

- 2,959 keywords that previously ranked 11-20 dropped to lower than 20.

Bing: No change in rankings.

Screenshot from Google Search Console

Screenshot from Google Search Console

Screenshot from BrightEdge Keyword Tracking Tool

As the BrightEdge graph above shows, the number of keywords ranking on page one fell from about 9K to 6K in one week. These ranking shifts caused immense losses in Google traffic. Bing wasn’t impacted.

In this case study, organic traffic decreased by around 25 percent, which directly affected the bottom line. The server errors continued for a few weeks because of ongoing issues with the Angular JavaScript implementation. Once the errors subsided (the site addressed the server instability), rankings and traffic recovered.

2. 18-Hour Downtime With 500 Responses

For a certain page type, Googlebot requests received 500 error responses over the course of about 18 hours. Crawl logs show that these pages consistently returned a 500 error during this time, but it is unknown exactly how long each individual page returned the error.

For tracked keywords, the domain dropped anywhere from five to 100 positions, depending on whether another relevant page existed on the domain. If there was a similar page and Google chose to rank it, the drop was smaller (about five to 20 positions), as Google still considered the domain relevant to the query.

Interestingly, Google would rank a page that wasn’t exactly what the user was looking for because it still considered the domain relevant to the query. As mentioned, that similar page didn’t rank as well. It is unknown why Google did this, but this was the observed behavior.

Documented Impacts: For all 239 tracked queries, the top-ranked page changed. All of the previously top-ranked pages were reported as returning 500 Internal Server Errors during the downtime.

These keywords were tracked using BrightEdge. Because BrightEdge runs multiple analyses over the week to produce an average rank, the 18-hour downtime was enough to change the top-ranked page in the report for the entire week.

- For all 239 queries, there was an average ranking drop of about 10 positions. (This is particularly significant considering that 233 of these queries were ranked 12 or better before the server errors occurred, and total monthly search volume was around 500K.)

- Ranking shifts were small for some queries when a similar page was available. “Dyson vacuums,” for example, dropped three positions because the top-ranked page shifted from the general Dyson vacuums page to a specific Dyson vacuum model page.

- A particularly large drop occurred because the site sold dryers but had no dryer-type subcategory pages. In this instance, the keyword “dryers” dropped from position 7 to 33.

3. 503 Service Unavailable Responses Over the Long Term

Over the course of three months, a domain returned 503 Service Unavailable responses for certain page types on a semi-frequent basis. The domain had built an SPA framework with AJAX that began timing out when the SPA host file couldn’t handle multiple simultaneous requests for its file variations (in this case, facets).

In this case, we were able to replicate the problem with our crawling tools. Our crawlers encountered numerous 503 responses, as well as connection timeouts. While the connection timeouts could confound the findings, they were far less numerous than the 503s.

Additionally, GSC reported the 503 responses. Note that GSC has no mechanism for reporting connection timeouts.

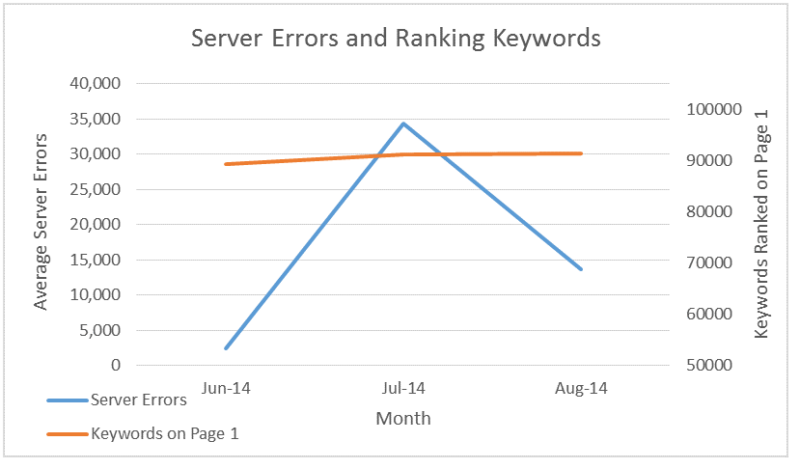

Documented Impacts: Around 1K keywords dropped from page one during a period when 503 errors reported by GSC spiked from an average of 3K to an average of 25–30K. During this time, no improvements were made to these pages, and most other factors on the website remained constant. Therefore, the sustained 503 errors had a direct, negative impact on rankings.

Case study 3 is consistent with John Mueller’s comment on server errors in early June:

If they persist for longer and don’t look like temporary problems anymore, we tend to start dropping those URLs from our index (until we can re-crawl them normally again).

There is also a decent chance that these URLs are placed in a queue to be recrawled sooner than they would be in a traditional crawl-cache cycle, but this has not been confirmed.

Implications For Your Website

Google’s index and rankings are now updated so frequently that a 500 Internal Server Error can affect rankings almost immediately, while 503 Service Unavailable responses can affect rankings over time. Both of these 5xx errors will reduce how much Google’s bots crawl your site.

While there is no official name for it, Google seems to maintain a very fresh index on a page-by-page basis. This page-by-page approach closely mirrors how the recent Mobile Algorithm Update analyzes and updates mobile-friendliness.

It has long been held in SEO that server errors over time can hurt a domain’s rankings. That is likely still the case, but now there is a page-by-page element as well.

Long story short, server downtime (i.e., 503 responses) is bad if it goes on for too long, and having pages return a 500 Internal Server Error when they should return a different status code is not advisable. Pierre Far has a nice breakdown on handling server downtime.
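To illustrate returning the right status code instead of a blanket 500, here is a minimal sketch that maps application outcomes to the status a crawler should see, so that only genuinely unexpected failures surface as 500. The exception classes and `response_status` are hypothetical stand-ins for whatever your application uses. The 404/410/503 choices reflect standard HTTP semantics rather than anything specific to the sites studied here.

```python
from http import HTTPStatus
from typing import Optional


class PageNotFound(Exception):
    """Hypothetical: the requested page or product does not exist."""


class PageRemoved(Exception):
    """Hypothetical: the page existed once but was removed permanently."""


class TemporarilyUnavailable(Exception):
    """Hypothetical: a dependency is down or overloaded for a short time."""


def response_status(error: Optional[Exception],
                    retry_after: int = 600) -> tuple[int, dict[str, str]]:
    """Return (status code, extra headers) for the outcome of handling a request."""
    if error is None:
        return HTTPStatus.OK.value, {}
    if isinstance(error, PageNotFound):
        return HTTPStatus.NOT_FOUND.value, {}
    if isinstance(error, PageRemoved):
        return HTTPStatus.GONE.value, {}
    if isinstance(error, TemporarilyUnavailable):
        # 503 signals a temporary condition; Retry-After hints when to come back.
        return HTTPStatus.SERVICE_UNAVAILABLE.value, {"Retry-After": str(retry_after)}
    # Anything else is a genuine server fault.
    return HTTPStatus.INTERNAL_SERVER_ERROR.value, {}
```

For example, `response_status(PageNotFound())` yields a 404 rather than letting a missing-product lookup crash into a 500.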

How Long Has This Caffeine-Type Update Been Observed?

It is unclear how long this “very fresh” index has been around. We do have data from the summer of 2014 showing similar server errors with no impact on page-specific rankings.

Google did comment in the fall of 2014 on crawl budget and how it throttles crawling when server errors occur, but it didn’t mention how this would affect the index or rankings. That may have been an indication of things to come.

During one week in July 2014, a domain’s 503 Service Unavailable errors spiked from 2K to 72K.

The impact on the pages crawled per day and SERP rankings? None.

There was absolutely no decline in the High, Average or Low pages crawled per day. In fact, average pages crawled per day increased by 9 percent in the two weeks following the spike in server errors.

Additionally, rankings did not change for any of the pages reporting server errors. Unlike the February 2015 experience documented in case study 3, these sustained server errors did not result in crawl throttling by Google or ranking impacts.

It should be noted that Google has at times throttled its crawls when encountering 5xx responses since at least 2012, but not as quickly as it does now. Based on this evidence, Google now throttles its crawls more rapidly when it encounters server errors.
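The High, Average and Low figures above come from Google’s crawl stats. For a comparable view from your own access logs, the sketch below counts Googlebot requests per day and compares the daily average before and after an event. It assumes the bracketed timestamp of the combined log format. Like any user-agent filter, it may also count spoofed bots. The helper names are hypothetical, and the 9 percent figure in the text did not come from this code.

```python
import re
from collections import Counter
from datetime import date, datetime
from typing import Iterable

# Matches the date part of a combined-log timestamp such as [10/Feb/2015:13:55:36 +0000].
TIMESTAMP = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def googlebot_hits_per_day(lines: Iterable[str]) -> Counter:
    """Count requests per calendar day for log lines that mention Googlebot."""
    per_day: Counter = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = TIMESTAMP.search(line)
        if match:
            per_day[datetime.strptime(match.group(1), "%d/%b/%Y").date()] += 1
    return per_day


def crawl_summary(per_day: Counter, days: list[date]) -> tuple[int, float, int]:
    """Return (high, average, low) requests per day over `days`; days without hits count as 0."""
    counts = [per_day.get(day, 0) for day in days]
    return max(counts), sum(counts) / len(counts), min(counts)


def percent_change(per_day: Counter, before: list[date], after: list[date]) -> float:
    """Percentage change in average daily requests from the `before` window to the `after` window."""
    _, avg_before, _ = crawl_summary(per_day, before)
    _, avg_after, _ = crawl_summary(per_day, after)
    return 100.0 * (avg_after - avg_before) / avg_before
```

Comparing the two weeks before an error spike with the two weeks after it shows whether crawling was throttled, which is the question this section asks of the July 2014 data.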

A Few Ideas To Think About Moving Forward

For large enterprise sites, engineering teams should consider how much load an SPA will need to handle when Google requests numerous sub-files within the host URL. Do server-side rewrites need to occur from the base URL? If there are many of them and the load is not anticipated and handled, server errors can result.

As mentioned above, when a page returns a 500 Internal Server Error, Google appears to rank a similar page from the same domain if it can find one, though that “similar” page will likely rank lower. Why would Google do this, when the substitute page is often not what the user intended to find?

How soon does Google recrawl a page after a 500 or 503? Are such pages placed in a separate queue that is recrawled sooner than in the traditional crawl-cache cycle?
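One common way to anticipate load is to cap how many expensive SPA renders run at once. Overflow requests then get a fast 503 with a Retry-After header instead of timing out or failing with a 500. The sketch below shows this as a hedged illustration, not the fix used on these sites; `RenderGate`, the concurrency limit and the retry delay are hypothetical. There is a trade-off: given case study 3, sustained 503s still hurt rankings, so a gate like this only buys time during spikes and does not replace capacity planning.

```python
import threading
from typing import Callable


class RenderGate:
    """Hypothetical guard that caps concurrent expensive renders (e.g. SPA facet pages)."""

    def __init__(self, max_concurrent: int, retry_after: int = 120) -> None:
        self._slots = threading.BoundedSemaphore(max_concurrent)
        self.retry_after = retry_after

    def handle(self, render: Callable[[], bytes]) -> tuple[int, dict[str, str], bytes]:
        """Run `render` if a slot is free; otherwise answer immediately with a 503."""
        if not self._slots.acquire(blocking=False):
            # Shed load quickly instead of queueing until the request times out.
            return 503, {"Retry-After": str(self.retry_after)}, b"Busy, please retry shortly.\n"
        try:
            return 200, {}, render()
        finally:
            # Free the slot even if rendering raises.
            self._slots.release()
```

The non-blocking `acquire` is the key design choice. A blocking wait would reproduce the connection timeouts seen in case study 3, while an immediate 503 gives crawlers an explicit, temporary signal.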

Is it a Caffeine Update or a different type of Server Error Fresh Index Update? Either way, the impacts are real and can be immediate.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
