Yes, Bing Has Human Search Quality Raters & Here’s How They Judge Web Pages

A web page that definitively satisfies a searcher’s intent is “Perfect,” and should appear at the top of Bing’s search results. On the other end of the scale, spammy web pages and pages that almost no searcher would find useful are deemed “Bad.”

That’s a bit of how Bing instructs the people in its Human Relevance System (HRS) project to grade web pages. It’s explained in a 52-page document that Bing calls the “HRS Judging Guidelines.”

The HRS project is similar to the Quality Rater program that Google uses. Microsoft’s version has been around in some form since shortly after MSN Search began generating its own search results in late 2004. Like Google, Microsoft uses testing services (like Lionbridge and others) to hire human search evaluators and administer the program. (Microsoft often refers to the evaluators as “judges,” and I’ll do the same in this article.)

Very little, if anything, has been written about Microsoft’s HRS project, and the company’s communications team was understandably reluctant to discuss it with Search Engine Land when we contacted them recently. But when we shared a copy of the guidelines document that was given to us by a former judge, a Bing spokesperson did confirm that it’s the current version of the HRS guidelines. The document is dated March 15, 2012.

What’s inside? How does Bing ask its human search quality judges to grade web pages? Read on for details.

Searcher Intent & Landing Pages

The document goes into detail about the three primary query intents (Navigational, Informational and Transactional) and offers suggestions for how to determine user intent based on the search query. Human judges are instructed to consider these four questions related to intent when they judge landing pages (the “LP” referred to below):

1. Intent: Does the LP content address a possible intent for the query?

2. Scope: Does the range and depth of the LP content match what the user wants?

3. Authority: Is the trustworthiness of the content on the LP appropriate to the expectations of the user?

4. Quality: Do the appearance and organization of the LP provide a satisfying experience?

Ultimately, judges are told to rate how well a landing page satisfies searcher intent on a scale from “strongly” to “poorly,” with additional categories for obscene and inaccessible content.

The guidelines document explains that “A strongly satisfying page will closely match the user’s intent and requirements in scope and authority, while a poorly satisfying result will be useful to almost no users.”

The Rating Matrix

The HRS Judging Guidelines asks judges to rely on a Rating Matrix to grade web documents. The matrix combines A) likely searcher intent with B) how well the document satisfies that intent. A document that “strongly” satisfies the “most likely” intent is graded Excellent/Perfect, while a document that “poorly” matches the most likely intent is graded Bad.
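The matrix itself isn’t reproduced in this article, but the rating definitions quoted under Rating Options below spell out most of its cells. As a rough illustration only, here is a minimal Python sketch of that lookup. The enum names, the `rate` function and the `is_definitive` flag are hypothetical, not taken from the guidelines, and any intent/satisfaction combination the quoted definitions don’t mention is assumed to fall to Bad.

```python
from enum import IntEnum


class Intent(IntEnum):
    """How likely an intent is for the query (illustrative names)."""
    UNLIKELY = 0
    LIKELY = 1
    VERY_LIKELY = 2
    MOST_LIKELY = 3


class Satisfaction(IntEnum):
    """How well the landing page satisfies that intent."""
    POORLY = 0
    WEAKLY = 1
    MODERATELY = 2
    STRONGLY = 3


def rate(intent: Intent, satisfaction: Satisfaction,
         is_definitive: bool = False) -> str:
    """Map an (intent, satisfaction) cell to a rating.

    Each branch mirrors a phrase from the quoted rating definitions;
    combinations they don't cover are ASSUMED to be Bad.
    """
    top_intent = intent >= Intent.VERY_LIKELY  # "very likely or most likely"
    strong = satisfaction == Satisfaction.STRONGLY
    moderate = satisfaction == Satisfaction.MODERATELY
    weak = satisfaction == Satisfaction.WEAKLY

    # Perfect: the definitive/official page that strongly satisfies the most likely intent.
    if intent == Intent.MOST_LIKELY and strong and is_definitive:
        return "Perfect"
    if top_intent and strong:
        return "Excellent"
    if (top_intent and moderate) or (intent == Intent.LIKELY and strong):
        return "Good"
    if ((top_intent and weak)
            or (intent == Intent.LIKELY and moderate)
            or (intent == Intent.UNLIKELY and strong)):
        return "Fair"
    return "Bad"


print(rate(Intent.LIKELY, Satisfaction.STRONGLY))  # Good
```

Read this way, each step down in intent likelihood is offset by a step up in satisfaction, which is why the ratings run along the diagonals of the grid.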

Rating Options

The five rating options that judges can use appear in the Rating Matrix, but the guidelines offer a more detailed explanation of each. This is really the heart of the document: the section that reveals what Bing looks for in grading (and likely in ranking) web pages/documents.

Here’s how Bing explains the five possible ratings:

1.) Perfect

“The LP is the definitive or official page that strongly satisfies the most likely intent.”

The document says that a Perfect landing page “should appear as the top search result.” It also says that only one landing page will typically deserve a Perfect rating, but for some generic queries (such as “loans” or “insurance”) there will not be a Perfect landing page. A Perfect page should address the intent of at least 50 percent of searchers.

2.) Excellent

Bing describes this as a landing page that “strongly satisfies a very likely or most likely intent” and “closely matches the requirements of the query in scope, freshness, authority, market and language.” Users finding an Excellent landing page “could end their search here and move on.” An Excellent page should address the intent of at least 25 percent of searchers.

An example in the document is that Barnes & Noble’s home page is an “Excellent” result for the search query “buy books.”

3.) Good

A Good landing page “moderately satisfies a very likely or most likely intent, or strongly satisfies a likely intent.” Bing says most searchers wouldn’t be completely satisfied with one of these pages and would continue searching. A Good page should address the intent of at least 10 percent of searchers.

4.) Fair

This rating applies to pages that are only useful to some searchers. A Fair page “weakly satisfies a very likely or most likely intent, moderately satisfies a likely intent, or strongly satisfies an unlikely intent.” A Fair page addresses the intent of at least one percent of searchers.

5.) Bad

In addition to being useful to almost no one and not satisfying user intent, this rating applies to a web page that “uses spam techniques” or “misleadingly provides content from other sites,” as well as to parked domains and pages that attempt to install malware. A Bad page addresses the intent of less than one percent of searchers.
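The searcher-share figures attached to each rating form a simple ladder. The Python sketch below treats them as minimums and returns the highest rating whose floor a page clears. The `rating_for_share` helper and the idea of boiling a page down to a single share estimate are assumptions for illustration; judges aren’t described as computing ratings this way.

```python
# Minimum share of searchers whose intent a page should address,
# per the rating descriptions; anything under 1 percent is Bad.
THRESHOLDS = [
    ("Perfect", 0.50),
    ("Excellent", 0.25),
    ("Good", 0.10),
    ("Fair", 0.01),
]


def rating_for_share(share: float) -> str:
    """Return the highest rating whose minimum share is met.

    HYPOTHETICAL helper that only illustrates the ladder.
    `share` is a fraction between 0 and 1.
    """
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    for rating, minimum in THRESHOLDS:
        if share >= minimum:
            return rating
    return "Bad"


print(rating_for_share(0.30))   # Excellent
print(rating_for_share(0.005))  # Bad
```

Share alone isn’t the whole test: a Perfect page must also be the definitive or official result, and spam, parked-domain and malware pages are rated Bad regardless of how many searchers they might reach.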

The document goes into some detail on additional ratings like “Detrimental,” which applies (in part) to web documents that display adult-only content, and “No Judgment” for pages that can’t be accessed for a variety of reasons.

Freshness

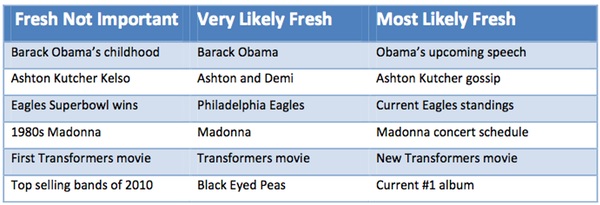

There’s a fairly detailed section on freshness. It explains why judges should take freshness into account when reviewing web documents and suggests situations when fresh content is more valuable and others when it’s not as important. The document explains that there are “essentially” three categories of freshness-related queries — Fresh Not Important, Very Likely Fresh and Most Likely Fresh — and offers this chart with example search queries to distinguish them.

Additional Considerations

There are also sections addressing queries where the search term is a URL, how to judge misspelled queries and how to judge local queries. For example, the home page of the Arizona Hispanic Chamber of Commerce is considered “Perfect” for the query “hispanic chamber of comerce glendale az” because Glendale is a suburb of Phoenix and there’s no Hispanic Chamber of Commerce office in Glendale.

As I said above, very little has ever been written about Microsoft’s Human Relevance System project for rating search results. From reading through the guidelines doc, I’d say it’s not all that different from Google’s handbook for its raters, which we first wrote about in 2008.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
