Six Key Themes From “Meet The Search Engines” At SMX East

Contributor Eric Enge recaps a discussion with Gary Illyes (Google) and Duane Forrester (Bing) about the current and future state of search engines.

On October 1, I attended the “Meet the Search Engines” panel at SMX East. This panel had Google’s Gary Illyes and Bing’s Duane Forrester on stage, with Search Engine Land founding editor Danny Sullivan moderating. In this post, I’ll review six key themes from the discussion.

Theme 1: AJAX Crawling

Back in October 2009, Google recommended a way to make AJAX content crawlable. Basically, this involved using a hashbang (#!) in your URL to signal AJAX-crawlable URLs to search engines.

When search engines see this, they modify the URL and request it with the #! replaced by this string: ?_escaped_fragment_= (the part of the URL that followed the hashbang becomes the value of that parameter). For example, this URL:

will be replaced by this one:

When a Web server receives this version of the URL, this acts as a signal to return an “HTML Snapshot” back to the search engine. That HTML Snapshot is basically a fully rendered version of what the user would see, but in a format that is easy for the search engines to understand and interpret.
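To make the URL rewrite concrete, here is a minimal Python sketch of the mapping a crawler would apply. The function name and the example.com URLs are hypothetical, and the escaping is a simplification of what the scheme specifies, so treat this as an illustration rather than a reference implementation.

```python
from urllib.parse import quote


def to_escaped_fragment(url: str) -> str:
    """Map a hashbang URL to the form a crawler would request (simplified)."""
    if "#!" not in url:
        return url  # not an AJAX-crawlable URL, so leave it untouched
    base, fragment = url.split("#!", 1)
    # Append to an existing query string with '&', otherwise start one with '?'.
    separator = "&" if "?" in base else "?"
    # Assumption: keep '=' and '/' readable and percent-encode everything else
    # that is not a plain URL character (for example '&', '#', '%', spaces).
    return f"{base}{separator}_escaped_fragment_={quote(fragment, safe='=/')}"


print(to_escaped_fragment("http://www.example.com/#!key=value"))
```

A server that supports the scheme would look for the `_escaped_fragment_` parameter on incoming requests and respond with the HTML snapshot instead of the JavaScript-driven page.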

The news from Illyes on this issue at the conference is this: Google no longer recommends this approach. However, Illyes also clarified that this approach will continue to work on Google; it’s just not something they recommend any more. He also said that Google has a major blog post coming out in “about two weeks” that clarifies their position on this.

Forrester and Illyes also talked about AngularJS for a bit in this part of the session. One of the key points is that there are a lot of sites using AJAX or AngularJS that simply do not need to, from a user experience perspective.

These frameworks are often serious coding overkill, and while they might be exciting for your developer to play with, that is not reason enough to use them. Make sure that they are really needed for your user experience before you decide to go down this path.

Theme 2: Security

This discussion started with a focus on HTTPS as a ranking signal. To this, Illyes noted that you should switch to HTTPS for users, not as a ranking signal. As he has noted many times, any ranking benefit is really more of a tiebreaker for two equal pages, so you are not likely to notice increased rankings in Google if you make the switch.

Forrester noted that “it’s tough for the engines to take a (hard ranking) line on security.” My perspective on this is that this is the key point. Though users are slowly getting more concerned about security, it would be a bad search engine experience if the sites that use HTTPS all floated to the top because there are many sites that are not secure that users really want. The search engines do need to serve their users first.

However, the reason the search engines push the security agenda is an important one. Forrester emphasized this and referenced a book by former FBI agent Marc Goodman called “Future Crimes.” The book details just how insecure the Web is at this point, and how exposed we all are.

My personal prediction is that over the next two years, we will see a series of frightening security breaches, and the average person’s overall consciousness of this issue is going to scale up rapidly. You think that the Ashley Madison security breach was bad? There is much worse to come.

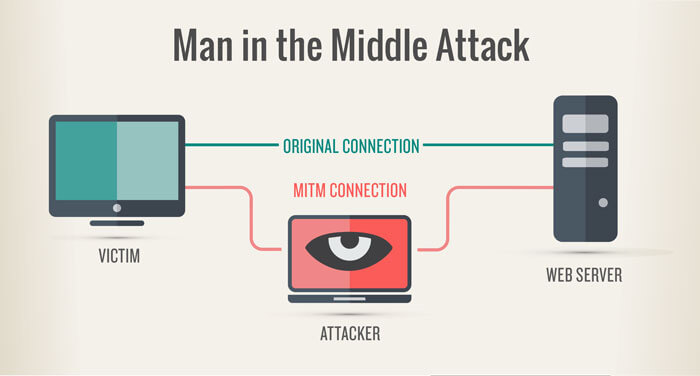

One other key point. I often hear discussions where people say that only e-commerce sites need to implement HTTPS support. Not true. Even straight blog sites should do so. Why? Because it makes it much more likely that the user receives the exact content that you deliver, not some modified form thereof. Here’s how this can get compromised by a “Man in the Middle” attack:

Let me illustrate with a real scenario. You are on the road, and you hop onto a WiFi network, such as one at Starbucks or your hotel. The WiFi service provider has the ability to modify the content that you, the user, are receiving. One basic use of this is to inject ads. That by itself might be somewhat concerning.

But that’s not all. They also have the ability to collect data on what type of content you access and profile that content. They can use this profile however they choose. Certainly not my preference to have that happening, so the sooner more of the Web switches to HTTPS the better.
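For sites making the switch, one common first step is to redirect every plain HTTP request to its HTTPS equivalent. The sketch below shows only the URL logic; the function name and the blog.example.com address are hypothetical, and a real site would normally configure this in the Web server rather than in application code.

```python
from urllib.parse import urlsplit


def https_redirect(url: str):
    """Return (status, headers) for redirecting an http:// URL, or None."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None  # already HTTPS (or not a Web URL): nothing to redirect
    # Swap only the scheme; host, path, and query string stay the same.
    secure_url = parts._replace(scheme="https").geturl()
    # 301 marks the move as permanent for browsers and crawlers.
    return 301, {"Location": secure_url}


print(https_redirect("http://blog.example.com/post?id=7"))
```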

Theme 3: The Millennials Are Coming!

This discussion was driven by Forrester, who went into great detail about the need to recognize how this new generation is different. Raised in an online/smartphone world, they change the rules of the game. Here is a basic summary of some of the key aspects Forrester highlighted:

- No patience for poor quality.

- Demand real engagement from brands.

- Authenticity is highly valued.

- They look for experiences.

- Their attention span is short.

Forrester further noted that as they come of age and their parents die, they will be part of the largest transfer of wealth in the history of the planet (7 trillion dollars!).

The point of all this is that they will be more demanding than other generations in this regard. I participated in a discussion in the closing panel, “Best Of Show: Top SMX Takeaways,” where someone from the audience challenged this as being significant, since these are things that all generations have looked for.

That’s true, but what’s new is the degree to which Millennials demand this. If the attention span of someone for a piece of content a decade ago was 10 minutes, today that is more like two minutes. If a decade ago, you had 20 or 30 seconds to entice someone into starting to read your content, today you have two or three.

Practically speaking, this means that you will need to place an ever-increasing focus on user value and broadcast a user-first approach to marketing, or you won’t be able to succeed.

Theme 4: Content Syndication

Someone asked about the value of content syndication. Forrester started this off by noting that you should not do this for SEO, but it might be a good thing to do for other business purposes. He also said that in theory, you should not have a duplicate content problem, but there was no guarantee of this.

In short, he could not guarantee that Bing would recognize your site as being the original author of the content, though they would get it right most of the time.

Illyes chimed in to emphasize that there is no such thing as a duplicate content penalty. All that happens with duplicate content is that Google chooses a canonical version and shows that. That’s not a penalty to your site; it’s simply Google making a choice as to which version is the best.

He then went on to say that it’s also possible that if The New York Times syndicates content to CNN, then CNN could be seen as the originator. For that reason, it’s a very good idea to give search engines hints as to which version is the original, such as a rel="canonical" tag. In this example, that would be a canonical tag on the CNN version of the article pointing to the New York Times version of the article.
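As a rough illustration, the syndication partner would place a tag like the one generated below in the head of its copy of the article. The helper name and the original-publisher.example URL are made up for this sketch.

```python
from html import escape


def canonical_link(original_url: str) -> str:
    """Build the <link> tag that the syndicated copy would carry in its <head>."""
    # Escape the URL so characters such as '&' stay valid inside the attribute.
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


# The syndicated copy points back at the original publisher's version.
print(canonical_link("https://original-publisher.example/2015/10/story"))
```

Keep in mind that the tag is a hint, not a directive, so the search engine can still choose a different version.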

My take: Yes, there is a use for content syndication in your digital marketing strategy. You should view this primarily as a way to potentially build your reputation and visibility. The following image should help illustrate the concept:

The point is not to spew your content over a bunch of low-value sites, hoping that the links back to you will help (they may even hurt you). Instead, focus your efforts on syndicating content to sites that are higher in authority than yours, ones that have an audience you want to get in front of.

This will help you build your reputation and visibility. In addition, if there is any type of syndication that might provide some SEO-link value, this is the way to do it.

Final note on this issue: If you are able to get that higher-authority site to accept content from you, why not give them original content and publish something else on your site? This is a great way to build your visibility, and then get links back to your site, where users can see different content. A much better overall strategy in my opinion.

Theme 5: URLs For International Domains

Someone from the audience asked a question as to what URL structure was best for international sites. Illyes started by saying it doesn’t matter to Google. The most important thing for you to do is to make use of hreflang tags. (You can see a tutorial on how to implement hreflang tags here.)
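To show what hreflang markup looks like in practice, here is a small sketch that generates the link tags for a set of language versions. The function name and the example.com and example.fr URLs are hypothetical; the point is only that each version of a page lists every alternate, itself included.

```python
from html import escape
from typing import Dict, List


def hreflang_links(alternates: Dict[str, str]) -> List[str]:
    """Build one <link rel="alternate"> tag per language or region version."""
    return [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in alternates.items()
    ]


# The same set of tags would go in the <head> of every listed version.
for tag in hreflang_links({
    "en": "https://www.example.com/",
    "fr": "https://www.example.fr/",
}):
    print(tag)
```

The same markup works whether the French version lives on its own domain or in a folder, which fits Illyes’ point that the URL structure itself doesn’t matter to Google.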

Forrester then chimed in and noted that there could be user reasons for caring about which URL to use, however. For example, if you have a site targeting people in France, it would be wise to implement a “.fr” version of your domain. He also noted that this is not a search engine issue, but one of giving users the experience they prefer.

That’s not necessarily the end of this issue, though; you will see in the discussion below on use of click-through rate data that there could be an indirect impact on SEO. (In Bing, at least, if users are more likely to click on a “.fr” domain than a site showing pages for users in a “/fr” folder, that could impact rankings.)

Theme 6: User Data & Search Engine Rankings

This started as a page speed discussion, which was by itself not that interesting. However, it caused Forrester to quip, “Here’s one more way that page speed matters for SEO: The faster a user clicks back from a SERP click-through, then the faster you will rank lower.” This is not entirely new, as it’s something that I discussed with Forrester in 2011.

Illyes then commented that user interaction data is very noisy and hard to use. However, he noted that Google does use it in certain ways. For example, they might use it in experiments when testing out a new feature in the results.

He also said that it’s useful in personalization scenarios as well, e.g., if a user searches for “apple,” it’s not entirely clear if they mean the company or the fruit. If the user, over time, shows a clear preference for pages about the fruit, Google may personalize the results to emphasize fruit-related pages more when they see those types of searches from that user.

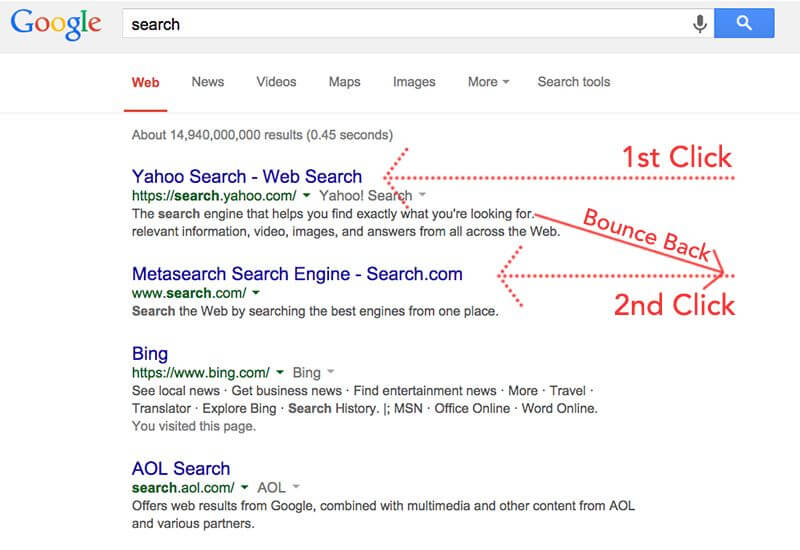

One thing that wasn’t discussed was the concept of pogosticking, which is a more refined variation on simply measuring click-through rate:

Now, here is my stance on this one. It’s not clear what search engines might be doing in this area, but collecting data on content quality and user engagement has to be very important to them. It’s going to be very hard to figure out what signals they look at in particular, but they are looking at some form of this data. You can see more on this in my post here on SEL about the 100 User Model.
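To make the pogosticking idea concrete, here is a purely illustrative sketch of one way such a signal could be computed: the share of clicks on a result where the user bounced back to the SERP quickly. Neither Google nor Bing has said they compute anything like this; the function name, the input format, and the 10-second threshold are all assumptions of mine.

```python
from typing import Optional, Sequence


def quick_return_rate(
    dwell_seconds: Sequence[Optional[float]], threshold: float = 10.0
) -> float:
    """Fraction of clicks where the user returned to the SERP within `threshold`.

    Each entry is the time a user spent on the page before coming back to the
    results, or None if that user never came back.
    """
    if not dwell_seconds:
        return 0.0  # no clicks recorded, so no signal
    quick = sum(1 for d in dwell_seconds if d is not None and d < threshold)
    return quick / len(dwell_seconds)


# Four clicks on one result: two quick returns, one long visit, one no-return.
print(quick_return_rate([3.0, None, 45.0, 5.0]))
```

Even in this toy form you can see why Illyes calls the data noisy: a quick return might mean the page was poor, or that it answered the question immediately.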

Basically, I strongly believe that higher user satisfaction with the results search engines offer increases revenue to the search engines. Why? If users get sent to sites that don’t answer their questions, they will simply head off to other places to get answers to their questions (Facebook, texting their friends, calling someone, searching on Amazon, oh, and yes, trying a different search engine).

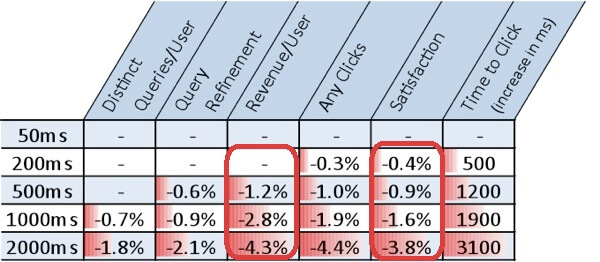

Back in 2009, both Google and Bing published the results of a server-side delays test, where they introduced small delays into the rendering of the SERPs. Here is a table of the results:

Notice the strong correlation between user satisfaction and revenue. While this data is more about speed delays than it is about serving poor quality search results, it’s not a major leap to conclude that the issue for Google and Bing is the same.

In short, ensuring that they are serving the best possible results is of critical importance to both of them. For that reason, I believe that they are using as many methods (that provide them with high quality signals) as possible to do that.

Summary

For me, those were the major themes coming out of this session. There was much more covered along the way, but these are the things that stuck out to me the most. Let me know what you think.

