Fixing historical redirects using Wayback Machine APIs

Columnist Patrick Stox believes that fixing historical redirects is often an easy way to achieve some quick wins, and this column shows you how to do just that using the Wayback Machine CDX Server API.

If you had to tell an established company to do just one thing to improve their SEO, what would it be? What would you tell them is the one thing that would have the most impact in the least amount of time and would really move the needle for them?

Personally, I would tell them to fix their historical redirects. This means adding redirects that were never implemented, or were dropped over time, for old pages that accumulated links but no longer pass those signals to the current pages. You're recovering value that already belongs to your website but was lost.

In all my years doing SEO, fixing redirects has been one of the most powerful tactics I've used. No one really likes to do redirects, and all too often they are omitted entirely or forgotten over the years. It's really important to have a process in place to make sure that redirects get done, that they are done correctly and that they stay up to date.

I can’t tell you how great it is to save people after a botched migration or redesign, or to be able to show massive improvements at the start of a campaign with just a few hours of work. Getting off to a great start with a new client really instills a level of trust in your work.

Lost links

My first article on Search Engine Land, “Take Back Your Lost Links,” was about this very topic. In it, I showed how a company that had failed to implement redirects with a website redesign experienced a significant drop in their traffic.

After fixing those redirects, I pulled a list of pages that had existed on older versions of the website from the Internet Archive's Wayback Machine and fixed the historical redirects that had never been done or had been lost in updates over the years. Traffic doubled from previous levels within a month.

I've seen this one tactic, fixing historical redirects, make the difference between being just another competitor and being a real player at the top of the market.

The screenshots below show a more recent example from early May of this year. According to Ahrefs, around 25 referring domains were recovered. Normally, when I see a sudden spike like this in Ahrefs, I start looking for spam, but in this case it's just recovered links to pages on previous versions of the website that haven't existed in a while.

How long would it take to get those links with a link-building campaign versus the time it took to fix a few redirects?

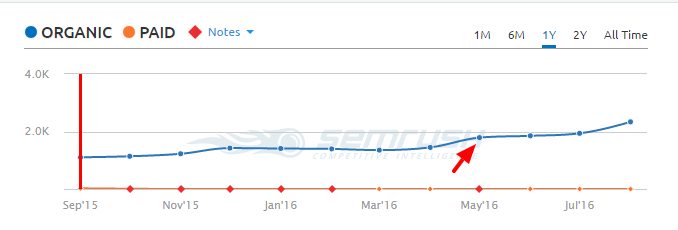

SEMrush shows around 30 percent additional traffic in one month, but it was actually a bit higher, according to Google Analytics data.

Screaming Frog vs. Wayback Machine CDX Server

In my previous post, I chose to crawl the Wayback Machine with Screaming Frog. This is the most complete method for gathering URIs, as it’s crawling everything it finds on every page that has been archived, and it also provides a cleaner output than using the CDX Server (which I’ll go into detail on later in the article). It provides a complete picture, but this method fails when dealing with larger websites due to memory limitations.

After I presented at SMX Advanced this year, something like 50 people came up to ask me about one particular part of my presentation. The sad part is that it wasn't even in my slides; it was just a bonus tip. What drew so much attention that the rest of my presentation was ignored? The fact that you can easily pull URIs from the Wayback Machine to check and fix historical redirects.

In my previous post, I mentioned outputting URIs in JSON as an alternative to Screaming Frog, but this is actually my preferred method. Before you run off because you don't want to deal with JSON, you should know that the CDX Server outputs plain text by default.

Wayback Machine CDX Server

You can find the full documentation for the Wayback Machine CDX Server API over on their GitHub.

A very basic query might look like:

https://web.archive.org/cdx/search/cdx?url=searchengineland.com
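Run as-is, that basic query returns every capture of that exact URL in the CDX Server's default plain-text format: one capture per line, with space-separated fields. The Python sketch below parses both that default output and the `output=json` variant. It assumes the default field order documented for the CDX Server (urlkey, timestamp, original, mimetype, statuscode, digest, length) and that, in JSON mode, the first row holds the field names. `fetch_cdx`, `parse_plain` and `parse_json` are hypothetical helper names.

```python
import json
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"

# Assumed default column order when no fl= parameter is given.
DEFAULT_FIELDS = ["urlkey", "timestamp", "original", "mimetype",
                  "statuscode", "digest", "length"]


def fetch_cdx(query_string, timeout=60):
    """Fetch raw CDX output for a query string such as 'url=example.com'."""
    with urlopen(f"{CDX_ENDPOINT}?{query_string}", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_plain(text, fields=DEFAULT_FIELDS):
    """Parse default plain-text output: one capture per line, space-separated."""
    return [dict(zip(fields, line.split()))
            for line in text.splitlines() if line.strip()]


def parse_json(text):
    """Parse output=json: a list of rows whose first row holds the field names."""
    if not text.strip():
        return []  # empty response, nothing to parse
    data = json.loads(text)
    if not data:
        return []  # empty JSON array
    header, *rows = data
    return [dict(zip(header, row)) for row in rows]
```

For example, `parse_plain(fetch_cdx("url=searchengineland.com"))` would give one dictionary per capture, which is why the extra parameters discussed next are worth adding to trim the output.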

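But I would recommend something like:

https://web.archive.org/cdx/search/cdx?url=searchengineland.com&matchType=domain&fl=original&collapse=urlkey&limit=100000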

Breaking this query down:

- &matchType=domain gives me all results from the domain and subdomains.

- &fl=original says I only want the URIs. This is what makes the output really clean, rather than getting all the columns.

- &collapse=urlkey collapses repeated captures of the same URI (adjacent rows sharing a urlkey), leaving only one listing for each page.

- &limit=100000 is limiting the results to 100,000 rows.
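If you'd rather script the recommended query than paste it into a browser, a minimal Python sketch might look like this. It only assembles the parameters listed above with the standard library's `urlencode` and returns the plain-text result as a list; `build_cdx_url` and `fetch_uris` are hypothetical names.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_url(domain, limit=100000):
    """Assemble the recommended query for a domain."""
    params = {
        "url": domain,
        "matchType": "domain",  # the domain plus all of its subdomains
        "fl": "original",       # return only the original URI column
        "collapse": "urlkey",   # fold repeated captures of the same URI into one row
        "limit": limit,         # cap the number of rows returned
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def fetch_uris(domain, limit=100000, timeout=120):
    """Download the query result and return one URI per non-empty line."""
    with urlopen(build_cdx_url(domain, limit), timeout=timeout) as resp:
        text = resp.read().decode("utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]
```

Calling `fetch_uris("searchengineland.com")` would return a list of original URIs, one per archived page, ready for cleanup.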

Two other features I've found very useful for really large queries are the Resumption Key, which lets you continue where you left off, and the Pagination API, which breaks the data into chunks. Check the documentation for how to use these if you need them.
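For the Resumption Key, a rough Python sketch of the loop follows. It assumes the behavior described in the CDX Server documentation: with `showResumeKey=true`, a page that has more results ends with a blank line followed by the key, and the key is printed already URL-encoded, so it is appended to the next request as-is via `resumeKey`. `split_resume_key`, `fetch_all` and the 50,000-row page size are hypothetical choices.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def split_resume_key(text):
    """Split one page of output into (rows, resume_key); the key is None on the last page."""
    lines = text.splitlines()
    if "" not in lines:
        return [line for line in lines if line.strip()], None
    blank = lines.index("")  # the blank line separates the rows from the key
    key_lines = [line.strip() for line in lines[blank + 1:] if line.strip()]
    rows = [line for line in lines[:blank] if line.strip()]
    return rows, (key_lines[0] if key_lines else None)


def fetch_all(domain, page_size=50000, timeout=120):
    """Keep requesting pages until the server stops returning a resume key."""
    base = urlencode({
        "url": domain,
        "matchType": "domain",
        "fl": "original",
        "collapse": "urlkey",
        "limit": page_size,
        "showResumeKey": "true",
    })
    rows, key = [], None
    while True:
        url = f"{CDX_ENDPOINT}?{base}"
        if key:
            url += "&resumeKey=" + key  # assumed already URL-encoded by the server
        with urlopen(url, timeout=timeout) as resp:
            page_rows, key = split_resume_key(resp.read().decode("utf-8"))
        rows.extend(page_rows)
        if not key:
            return rows
```

Because collapsing happens within each page, the same URI may occasionally appear on both sides of a page boundary, so it's worth de-duplicating the combined list.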

The other option I use often is Regex filtering. This lets me remove file types I might not want, such as css, js and ico, or, in other cases, images, by filtering out extensions like jpg, jpeg, gif, png and so on.
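Regex filters are passed as `filter` parameters of the form `filter=!field:regex`, where the leading `!` excludes matches. As a sketch, assuming the filter is applied to the `original` field and the expression should cover the whole URI, this Python snippet builds one exclusion per group of extensions; passing parameters as a list of tuples lets `filter` repeat. The extension lists and function names are hypothetical, and `would_exclude` only previews a pattern with Python's `re`, which may differ from the server's regex engine in edge cases. The pattern is case-sensitive as written, so something like `LOGO.PNG` may slip through and need catching during cleanup.

```python
import re
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"

# Hypothetical extension groups; adjust to what you want to drop.
ASSET_EXTENSIONS = ["css", "js", "ico"]
IMAGE_EXTENSIONS = ["jpg", "jpeg", "gif", "png"]


def exclude_extensions(extensions):
    """Return a negated filter on 'original' for URIs ending in these extensions.

    The regex spans the whole URI: anything, a dot, one of the extensions,
    and an optional query string such as '?v=2'.
    """
    alternation = "|".join(extensions)
    return f"!original:.*\\.(?:{alternation})(?:\\?.*)?$"


def build_filtered_url(domain, *extension_groups):
    """Build the recommended query plus one filter parameter per extension group."""
    params = [("url", domain), ("matchType", "domain"),
              ("fl", "original"), ("collapse", "urlkey")]
    params += [("filter", exclude_extensions(group)) for group in extension_groups]
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def would_exclude(filter_value, uri):
    """Locally preview whether a filter would drop a URI (Python regex, not the server's)."""
    negated = filter_value.startswith("!")
    regex = filter_value.lstrip("!").split(":", 1)[1]
    matched = re.fullmatch(regex, uri) is not None
    return matched if negated else not matched
```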

Cleaning the output

We're not done here, unfortunately. Even though the query above is fairly clean, the output still contains a lot of things I don't want to see: images, feeds, robots.txt and weird URIs created for various reasons. All of these need to be filtered out. There may also be ports to remove, parameters to handle, campaign tags to strip, malformed links, characters that need converting from UTF-8, or any number of other issues.

This is different for every website, and you can either use the CDX Server filters or just delete or replace lines or parts in a text document or spreadsheet to get the output where you want it. Don’t get intimidated here. I can’t run you through every possibility, but with some combination of filters, search and replace or manual work, you’ll get a clean list of the old pages.
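As one possible starting point for the search-and-replace work, here's a Python sketch that normalizes a list of archived URIs. It assumes a few common rules: strip ports, drop `utm_` campaign parameters, skip obvious assets, feeds and robots.txt, discard anything that doesn't parse as an http(s) URL, and de-duplicate. The skip lists and function names are hypothetical and will need tailoring to each site; note that re-encoding the query string can change its formatting slightly (spaces become `+`, for example).

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical rules; tailor these to the site you are cleaning.
SKIP_SUFFIXES = (".jpg", ".jpeg", ".gif", ".png", ".css", ".js", ".ico")
SKIP_PATHS = {"/robots.txt"}
SKIP_SEGMENTS = {"feed"}          # e.g. WordPress-style /feed/ URLs
TRACKING_PREFIX = "utm_"          # campaign tags to strip


def clean_url(raw):
    """Return a normalized URL, or None if the line should be dropped."""
    parts = urlsplit(raw.strip())
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return None  # malformed or not a web URL
    path = parts.path or "/"
    lowered = path.lower()
    if lowered.endswith(SKIP_SUFFIXES) or lowered in SKIP_PATHS:
        return None
    if SKIP_SEGMENTS & set(lowered.strip("/").split("/")):
        return None
    # Keep non-tracking parameters in their original order.
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if not k.lower().startswith(TRACKING_PREFIX)]
    # .hostname is lowercased and has any :port removed; the fragment is dropped.
    return urlunsplit((parts.scheme, parts.hostname, path, urlencode(kept), ""))


def clean_list(lines):
    """Clean every line and de-duplicate while preserving order."""
    seen, result = set(), []
    for line in lines:
        url = clean_url(line)
        if url and url not in seen:
            seen.add(url)
            result.append(url)
    return result
```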

Checking the redirects

With your clean list of URIs, you'll want to check what's happening with these old pages. I still use Screaming Frog for this, and Dan Sharp has posted a guide on how to do it. If there are 404s, you will want to fix them to recover any lost signals. For 302s, and for 301s with multiple hops, I'd still recommend cleaning them up as a best practice, though the benefit is now less clear-cut. Some of you may have seen this tweet from Google Webmaster Trends Analyst Gary Illyes:

30x redirects don't lose PageRank anymore.

— Gary 鯨理/경리 Illyes (so official, trust me) (@methode) July 26, 2016

I'll tell you that every time someone from Google says something like “302s are the same as 301s,” I test it. I'm usually one of the biggest naysayers, because in my experience it hasn't been the case. I ran a couple of tests from December through March, while Google's John Mueller was saying 302s were the same as 301s, and I still saw drop-offs when using 302s and multiple hops.

I started these tests again the day after Illyes sent the above tweet. While it's too early to draw a firm conclusion (it's only been a month) and I haven't done a full log analysis yet, the results so far have convinced me that this statement is finally true for Google. (I can't say other search engines have had the same results.) Still, I would recommend cleaning up redirect chains and using 301s as a best practice.
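If you'd like a scripted spot check alongside a crawler like Screaming Frog, the Python sketch below follows each URI one hop at a time with HEAD requests and flags 404s, chains and temporary redirects. It's an illustration under assumptions, not a replacement for a crawler: it assumes servers answer HEAD the same way they answer GET, treats 301, 302, 303, 307 and 308 as redirects, and stops after ten hops. `head_status`, `trace` and `diagnose` are hypothetical names, and `fetch` is injectable so the logic can be tried without network access.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECTS = {301, 302, 303, 307, 308}


def head_status(url, timeout=10):
    """Send one HEAD request without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
        conn.request("HEAD", target)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def trace(url, fetch=head_status, max_hops=10):
    """Follow redirects hop by hop and return the chain as [(url, status), ...]."""
    chain = []
    for _ in range(max_hops):
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECTS or not location:
            break
        url = urljoin(url, location)  # Location may be relative
    return chain


def diagnose(chain):
    """Classify a traced chain."""
    final_status = chain[-1][1]
    hops = [status for _, status in chain if status in REDIRECTS]
    if final_status in REDIRECTS:
        return "unresolved redirect: loop, missing Location or too many hops"
    if final_status in (404, 410):
        return "broken: add a 301 to the best current page"
    if len(hops) > 1:
        return "chain: point the old URI straight at the final page"
    if any(status != 301 for status in hops):
        return "temporary redirect: consider a 301"
    return "ok"
```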

Wrapping up on historical redirects

There are a lot of methods to gather historical pages and fix the redirects, but at the end of the day, what matters is that you fix them. Again, this is one of the tactics I’ve seen make the most impact time and time again. Remember that even after you do this work, it’s important to have policies in place to implement and maintain redirects in order to preserve the value of the links obtained over time.

Since I know many people will ask about what kind of gains they can expect, the truth is that it’s difficult to say. For a relatively new website, there would be no gains; but if you’ve been around a while, changed website structure over the years or merged or migrated websites into the main website, then you could see significant gains. It just depends on how many of the signals were never passed.

If you know that some of the pages might have been spammed with links in the past, then I’d still recommend doing the redirects, but be sure to monitor incoming links and update your disavow file.
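Google's disavow file is a plain-text list with one URL or `domain:` entry per line and `#` for comments. As a small illustrative sketch, this Python helper appends newly spotted domains to an existing file's contents without duplicating entries already present. `merge_disavow` is a hypothetical name, and deciding which domains belong on the list is still a judgment call.

```python
def merge_disavow(existing_text, new_domains):
    """Append 'domain:' entries for new domains, skipping ones already listed."""
    lines = existing_text.splitlines()
    # Existing non-comment entries, compared case-insensitively.
    present = {line.strip().lower() for line in lines
               if line.strip() and not line.lstrip().startswith("#")}
    for domain in new_domains:
        entry = "domain:" + domain.strip().lower()
        if entry not in present:
            present.add(entry)
            lines.append(entry)
    return "\n".join(lines) + "\n"
```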

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
