Who’s Afraid Of The Big Bold Test?

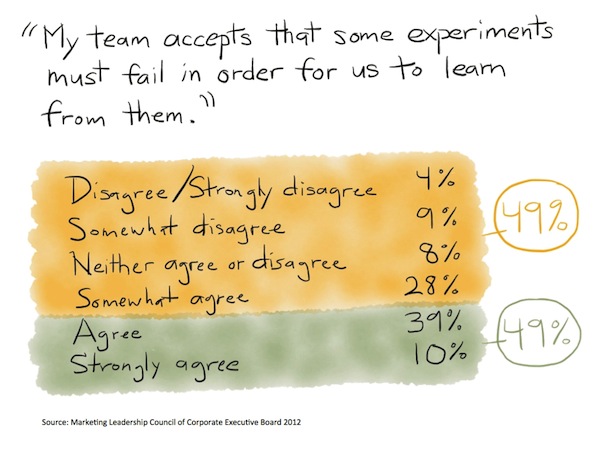

“My team accepts that some experiments must fail in order for us to learn from them.”

In a recent study of Fortune 1000 marketers conducted by the Marketing Leadership Council of the Corporate Executive Board, only about 50% of the respondents agreed with that statement.

Think about that for a moment.

In an age when it’s never been easier, technically speaking, for marketers to test ad creatives and landing pages, what may be holding back half of them is a cultural resistance to trying something that may fail.

If you’ve got something that is kind of working, do you want to take risks to improve it?

In old-school marketing, where it was often difficult to run small, controlled tests, organizational resistance to taking a chance on change was more understandable. However, that difficulty no longer exists — at least not for any technical reason — in digital marketing. Yet, the hesitation to try something bold still lives on.

That’s a shame, because even if you go through the motions of running a test, you will rarely gain much if you’re unwilling to experiment with bold ideas. If you’re only willing to test, say, two different headlines that are nearly identical, you may squeeze out a little lift. But it’s unlikely that you’re going to achieve a significant bump with such timidity.

How To Be Bold And “Play It Safe”

You can embrace meaningful testing and do so safely.

First, you must recognize that not testing — or testing only minor rearrangements — is not actually safe for most businesses. We live in an age where the status quo is constantly under attack from disruptive innovation. A dominant position in digital marketing can be surprisingly fleeting.

The only way to stay ahead of your competition is to keep innovating. If you don’t figure out the next new way to impact your audience, someone else will. In marketing, this is best achieved through a program of continuous experimentation. Clay Christensen is a big advocate of such self-driven innovation.

Safety is not achieved by watering down your ideas, either. Organizations that develop the capability to brainstorm and pursue bold marketing ideas, and that nurture that capability in their culture, will have a distinct advantage over milquetoast marketers who water theirs down.

Instead, safety is achieved by limiting the scope of your initial tests of a bold new idea. There are three ways to do this.

The first is to focus on a source of traffic that is important but small, e.g., a highly specialized keyword campaign in Google. The number of respondents to ads in that keyword group is small enough that if the experiment doesn’t work, you’ve risked little. If it succeeds, you count your win and move on to the next one. This is the “long tail” strategy of experimentation.

The second is to try an entirely new campaign, where the source of traffic is arriving from some channel, vehicle, or context that is different from your existing marketing programs. In many cases, this has negligible risk — if the experiment doesn’t work, you simply shut it down. (Or start again with a new hypothesis.) If it succeeds, it’s additive business. This is the “frontier” strategy of experimentation.

The third — and most flexible — is to use weighted split testing. A/B tests don’t have to be implemented as a 50/50 split of your existing traffic. If you have a current “champion” as your status quo that is performing well — but you think has the potential to be improved nonetheless — you can run a “challenger” with an 80/20 split, or even a 90/10 split.

Granted, when you siphon a smaller percentage of your traffic to a challenger in an A/B split test, it may take longer to achieve statistical significance on the result. But if you have a lot of traffic — which popular existing programs often do — the calendar time required to achieve significance may still be quite short.
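For a rough feel of how much longer a lopsided split takes, here is a minimal sketch based on the standard sample-size approximation for comparing two conversion rates. The function names, the 5% baseline conversion rate, the 20% relative lift, and the 95% confidence / 80% power defaults are all illustrative assumptions, not figures from the study or from any particular testing tool.

```python
from math import ceil
from statistics import NormalDist


def visitors_needed(base_rate, lift, challenger_share, alpha=0.05, power=0.8):
    """Approximate total visitors needed to detect a relative lift.

    base_rate: champion conversion rate (assumed, e.g. 0.05)
    lift: relative improvement you hope to detect (assumed, e.g. 0.20)
    challenger_share: fraction of traffic sent to the challenger (0 < share < 1)
    """
    p1 = base_rate
    p2 = base_rate * (1 + lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    # Variance of the difference in rates, per visitor, for an uneven split.
    variance = (p1 * (1 - p1) / (1 - challenger_share)
                + p2 * (1 - p2) / challenger_share)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(daily_visitors, base_rate, lift, challenger_share):
    """Calendar days to collect that many visitors at a given daily volume."""
    total = visitors_needed(base_rate, lift, challenger_share)
    return ceil(total / daily_visitors)


if __name__ == "__main__":
    for share in (0.5, 0.2, 0.1):
        days = days_needed(10_000, base_rate=0.05, lift=0.20,
                           challenger_share=share)
        print(f"{int(share * 100)}% to challenger: about {days} day(s)")
```

Under these assumptions, the smaller the challenger's share, the more total visitors the test needs, but a high-traffic page can still absorb that cost in a short span of calendar time.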

If the challenger doesn’t work, you’ve minimized its impact to a small fraction of your audience. If it does work, however, you can ratchet up its weighting and eventually crown it as the new champion. This is the “toe in the water” strategy of experimentation.
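One way a weighted split like this could be implemented is sketched below. It assumes each visitor has a stable identifier (a cookie value, for instance); `assign_variant`, the experiment name, and the visitor IDs are hypothetical, and real testing platforms may well do this differently.

```python
import hashlib


def assign_variant(visitor_id, challenger_share=0.1, experiment="bold-test"):
    """Deterministically route a visitor to 'champion' or 'challenger'.

    Hashing the visitor ID gives each visitor a fixed position in [0, 1).
    Visitors whose position falls below challenger_share see the challenger.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits scaled to [0, 1)
    return "challenger" if bucket < challenger_share else "champion"


if __name__ == "__main__":
    visitors = [f"visitor-{n}" for n in range(10_000)]
    for share in (0.1, 0.2, 0.5):
        seen = sum(assign_variant(v, share) == "challenger" for v in visitors)
        print(f"target {share:.0%}, observed {seen / len(visitors):.1%}")
```

Because a visitor's position never changes, ratcheting the weighting up from 10% to 20% to 50% only adds new people to the challenger group; nobody who already saw the challenger is bounced back to the champion.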

Effective Test-And-Learn Capability Is A Competitive Advantage

All of these strategies for experimentation can balance the risk-reward equation. The real trick is learning from the experiments that don’t work, not simply celebrating the ones that do and sweeping the rest under the rug.

The ability of a team to look at an experiment that didn’t work and, without blame or shame, discuss the insight that can be derived from it is invaluable. Often, failed experiments inspire great hypotheses for new tests.

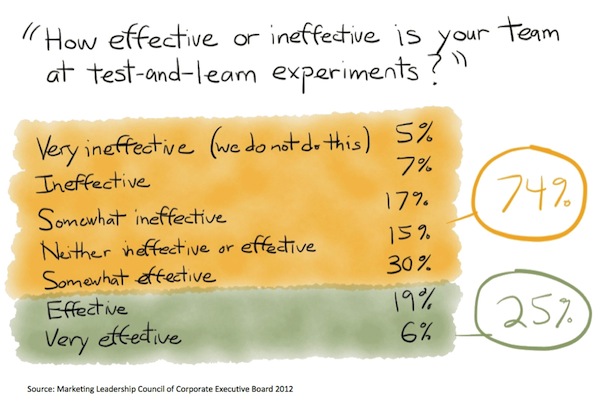

Without the cultural freedom to fail, and to milk insight out of the losses as well as the wins, an organization will not be effective at test-and-learn experiments. Not surprisingly, the Marketing Leadership Council’s research included a question that identified just that weakness:

Only one-quarter of the respondents felt their teams were effective or very effective at test-and-learn experiments. (Although I don’t have the correlation with those who responded to the other question — whether or not they accepted that some experiments must fail — I have a pretty good hypothesis that the yellow-highlighted segments overlap.)

Who are the companies that are very effective at test-and-learn? Google is one. In Jim Manzi’s fascinating book, Uncontrolled: The Surprising Payoff of Trial-and-Error for Business, Politics, and Society, he reported that:

Google ran approximately 12,000 experiments in 2009. Only about 10% of those experiments successfully resulted in changes to Google’s business. But that’s nearly 1,200 demonstrable improvements in a single year. That’s pretty impressive.

It’s not easy to shift culture in an organization. But test-and-learn capabilities, an important part of the broader movement toward agile marketing, will increasingly be a source of competitive advantage.

It won’t be too much longer before it’s more embarrassing to not be doing continuous marketing experiments than it ever was to do an experiment that didn’t win.

