A Lesson From the Indexing of Google Translate: Blocking Search Results From Search Results


Last year, Google published an SEO Report Card of 100 Google properties. In it, they rated themselves on how well the sites were optimized for search. Google’s Matt Cutts presented the results at SMX West 2010 in Ignite format. He noted that not every Googler is an expert in search and search engine optimization. Googlers who don’t work in search don’t get preferential treatment from those who do, and, just as on any other site on the internet, sometimes things aren’t implemented correctly. Just because a site is owned by Google doesn’t mean it’s the best example of what to do in terms of SEO.

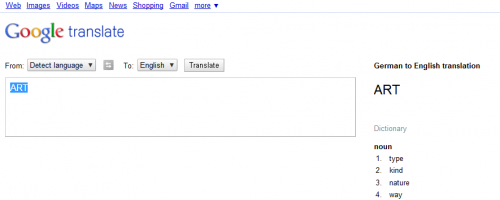

This morning Rishi Lakhani tweeted about Google Translate pages appearing in Google search results. As you can see in the example below, pages with individual translation requests have been indexed.

All of the URLs that include a parameter seem to be individual translations. For instance, https://translate.google.com/?q=ART# displays as follows:

The problem with these types of pages being indexed in search engines is twofold:

- The Google webmaster guidelines say that Google doesn’t want to show search results in its search results and recommends that content owners block search results on their site from being indexed using robots.txt or a meta robots tag.

- That same guideline recommends blocking autogenerated pages from being indexed, and a Google Webmaster Central blog post a few months ago provided recommendations for handling machine-translated text so that it doesn’t appear in search results.

A site owner might also want to block these types of pages from being crawled and indexed to increase crawl efficiency and ensure the most valuable pages on the site are being crawled and indexed instead.

I asked Google about this and they confirmed that indeed it was simply a matter of the Google Translate team not being aware of the issue and said they would resolve it.

Blocking Autogenerated Search Pages From Being Indexed

In the case of Google Translate, the ideal scenario is that the main page and any secondary pages (such as this tools page) be indexed, but that any pages from translation requests not be indexed.

Using robots.txt

The best way to do this would be to add a disallow line in the robots.txt file for the site that blocks indexing based on a pattern match of the URL query parameter. For instance:

Disallow: /*q=

This pattern would prevent search engines from indexing any URLs containing q=. (The * before the q= means that the q= can appear anywhere in the URL.)
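To make the wildcard behavior concrete, here is a minimal Python sketch of how a crawler might evaluate such a pattern. The function names (rule_to_regex, is_disallowed) and the sample URLs other than /?q=ART are made up for illustration, and the sketch assumes the wildcard semantics Google documents for robots.txt (a * matches any run of characters, a trailing $ anchors the end of the URL). It is not any search engine's actual code.

```python
import re


def rule_to_regex(rule: str) -> re.Pattern:
    """Turn a robots.txt path rule into a regular expression.

    Assumed semantics: '*' matches any run of characters and a trailing
    '$' anchors the match at the end of the URL.
    """
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape the literal pieces and join them with '.*' for each wildcard.
    body = ".*".join(re.escape(part) for part in rule.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query: str, rule: str) -> bool:
    """Return True if the rule matches, starting at the beginning of the path."""
    return rule_to_regex(rule).match(path_and_query) is not None


if __name__ == "__main__":
    # Hypothetical sample paths, checked against the rule from the article.
    for path in ["/?q=ART", "/translate?hl=en&q=hello", "/", "/?seq=1"]:
        print(path, is_disallowed(path, "/*q="))
```

Under these assumptions, /?q=ART is blocked and the bare home page / is not. Note that the pattern is broad: it would also catch a URL such as /?seq=1, because q= only has to appear somewhere after the leading slash.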

In the case of translate.google.com (and all related TLDs), the robots.txt file that exists for the subdomains seems to be copied from www.google.com. Remember that search engines obey the robots.txt file for each subdomain separately. Using the same robots.txt file for a subdomain that’s used for the www variation of the domain could have unintended consequences because the subdomain likely has an entirely different folder and URL structure. (You can always check the behavior of your robots.txt file using Google Webmaster Tools.)

Adding the disallow pattern shown above to the www.google.com/robots.txt file would not work, as search engines wouldn’t check that file when crawling the translate subdomain, and it would instead cause search engines not to index URLs that match the pattern on www.google.com.

translate.google.com (and every other google.com subdomain) should have its own robots.txt file that’s customized for that subdomain.
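The per-subdomain rule can be illustrated with a short Python sketch that works out which robots.txt file governs a given URL. The helper name robots_txt_url is hypothetical. The sketch relies only on the convention that a crawler looks for the file at the root of the exact host it is fetching from.

```python
from urllib.parse import urlsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt location that applies to page_url.

    The file is looked up at the root of the same scheme and host, so each
    subdomain is governed by its own file.
    """
    parts = urlsplit(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


if __name__ == "__main__":
    print(robots_txt_url("https://translate.google.com/?q=ART#"))
    print(robots_txt_url("https://www.google.com/search?q=ART"))
```

The two calls return different files, which is why a rule added only to www.google.com/robots.txt has no effect on URLs served from translate.google.com.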

Using the meta robots tag

If Google isn’t able to create a separate robots.txt file for the translate subdomain, they should first remove the file that’s there (and from other subdomains as well, as it could be causing unexpected indexing results for those subdomains). Then, they should use the meta robots tag on the individual pages they want blocked. Since the pages in question are dynamically generated, the way to do this would be to add logic to the code that generates these pages so that it writes the robots meta tag to the page as it’s created. This tag belongs in the <head> section of the page and looks as follows:

<meta name="robots" content="noindex">
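The page-generation logic described above might look something like the following Python sketch. It is only an illustration: the function name robots_meta_for and the choice of q as the parameter to test are assumptions based on the example URL in this article, not a description of how Google Translate is actually built.

```python
from urllib.parse import parse_qs, urlsplit

NOINDEX_TAG = '<meta name="robots" content="noindex">'


def robots_meta_for(url: str, param: str = "q") -> str:
    """Return the noindex tag for translation-request URLs, else an empty string.

    A URL counts as a translation request here if its query string contains
    the given parameter (assumed to be 'q'), even with an empty value.
    """
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return NOINDEX_TAG if param in query else ""


if __name__ == "__main__":
    # The template that renders <head> would emit whatever this returns.
    print(repr(robots_meta_for("https://translate.google.com/?q=ART#")))
    print(repr(robots_meta_for("https://translate.google.com/")))
```

With this approach the main page stays indexable because it gets no tag, while any page generated from a translation request carries the noindex directive.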
