Fetch, Googlebot! Google’s New Way To Submit URLs & Updated Pages

Today, Google launched a new way for site owners to request that specific web pages be crawled. How is this different from the other ways available to let Google know about your pages and when should you use this feature vs. the others? Read on for more.

This new method for submitting URLs to Google is limited, so you should use it when it’s important that certain pages be crawled right away. Although Google doesn’t guarantee that they’ll index every page that they crawl, this new feature does seem to at least escalate that evaluation process.

To better understand how this feature works, let’s take a look at how Google crawls the web and the various ways URLs are fed into Google’s crawling and indexing system.

How Google Crawls & Indexes the Web

First, it’s important to know a bit about Google’s crawling and indexing pipeline. Google learns about URLs through all of the ways described below and then adds those URLs to its crawl scheduling system. It deduplicates the list, sorts the URLs into priority order, and crawls them in that order.

The priority is based on many factors, including the overall value of the page (based in part on PageRank), how often the content changes, and how important it is for Google to index that new content (a news home page would fall into this category, for instance).
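To make the "dedup, then prioritize" idea concrete, here is a toy sketch in Python. It is not how Google's scheduler actually works: the `PAGE_VALUE` and `CHANGE_RATE` numbers, the `toy_score` function, and the example.com URLs are all made up for illustration, and a real system would weigh far more signals.

```python
import heapq

# Hypothetical signals, invented for this example only.
PAGE_VALUE = {
    "https://example.com/": 0.9,
    "https://example.com/news": 0.6,
    "https://example.com/about": 0.2,
}
CHANGE_RATE = {
    "https://example.com/": 0.5,
    "https://example.com/news": 0.9,
    "https://example.com/about": 0.05,
}


def toy_score(url):
    """Assumed scoring rule: page value plus how often the page changes."""
    return PAGE_VALUE.get(url, 0.0) + CHANGE_RATE.get(url, 0.0)


def schedule_crawl(discovered, score):
    """Deduplicate discovered URLs and return them highest priority first."""
    unique = set(discovered)  # the same URL may arrive from several sources
    # heapq is a min-heap, so store the negated score to pop the best URL first.
    heap = [(-score(url), url) for url in unique]
    heapq.heapify(heap)
    ordered = []
    while heap:
        _, url = heapq.heappop(heap)
        ordered.append(url)
    return ordered


discovered = [
    "https://example.com/",       # found via a link
    "https://example.com/about",  # found via a Sitemap
    "https://example.com/",       # found again via another link
    "https://example.com/news",
]
crawl_order = schedule_crawl(discovered, toy_score)
```

With these invented numbers, the frequently changing news page ends up ahead of the home page, and the rarely updated about page comes last.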

Once a page is crawled, Google then goes through another algorithmic process to determine whether to store the page in their index.

What this means is that Google doesn’t crawl every page they know about and doesn’t index every page they crawl.

How Google Finds Pages To Crawl

Obviously, a page can’t begin the crawling and indexing process until Google knows about it. Below are some of the common ways (including the newest!) Google learns about new or changed pages.

Discovery

Google has lots of ways to discover URLs on the web and they’re pretty good at it. After all, they could never have built up a comprehensive index in the first place without having a good handle on discovering pages on the web.

Originally, Google discovered pages based on links, and that’s still a core method they use. This is why it’s so vital to link to every page on your site internally, as you may not have an external link to every page. They’ve evolved those methods over time, though; for instance, they now use RSS feeds for discovery.
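If you want a quick way to check whether every page on your site is reachable through internal links, a breadth-first walk of your own link graph will do it. The sketch below assumes you already have a mapping of each page to the pages it links to (from a crawl of your own site, say); the `links` data and page paths are hypothetical.

```python
from collections import deque


def unreachable_pages(links, start, all_pages):
    """Return pages that cannot be reached by following internal links from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    # Anything never visited has no internal link path from the start page.
    return sorted(set(all_pages) - seen)


# Hypothetical site: each key links to the pages in its list.
links = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1"],
}
all_pages = ["/", "/about", "/blog", "/blog/post-1", "/landing/old-promo"]

orphans = unreachable_pages(links, "/", all_pages)
```

Here `/landing/old-promo` turns up as an orphan: nothing on the site links to it, so link-based discovery would have to rely on an external link.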

Once Google knows about a page, it continues to crawl it periodically. Most sites do fine relying on this method, particularly if the site is well-linked (which, of course, is more easily said than done).

XML Sitemaps

The XML Sitemaps protocol enables you to submit a complete list of URLs to Google and Bing. The search engines don’t guarantee that they’ll crawl every URL submitted, but they do feed this list into their crawl scheduling system.
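For reference, a Sitemap is just an XML file listing your URLs. The following is a minimal sketch of generating one with Python's standard library; the `build_sitemap` helper, the example.com URLs, and the date are placeholders for illustration, and the protocol supports more optional fields than the `lastmod` shown here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a Sitemap XML string from (url, lastmod_or_None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # lastmod is optional; the protocol expects a W3C date such as YYYY-MM-DD.
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs and date, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/new-page", None),
])
```

You would save the result as a file on your site (commonly `sitemap.xml`) and submit it through the search engines' webmaster tools.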

Public Requests

Google has always had an “Add URL” form available for anyone to request that a URL be added to the index. Historically, this form was intended for searchers who noticed that a particular page seemed to be missing.

As you might imagine, this scenario happened less often over time, and Google likely doesn’t weight these requests as heavily as they do other signals for crawling.

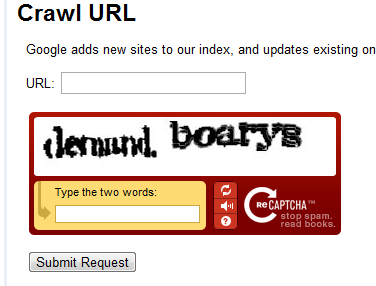

With this latest launch, the Add URL form has been renamed Crawl URL, and you now have to log in with a Google Account to use it. You can add any URL (not just those on a site you’ve verified you own), but you can submit only up to 50 URLs a week.

Google Webmaster Tools Fetch As Googlebot

This is where the feature launched today lives. Fetch as Googlebot itself has been around for a while: it lets you direct Googlebot to crawl a specific URL on a site you’ve verified you own and see what response Googlebot gets back from the server. This is helpful in debugging crawl issues that aren’t obvious when you load the site in a browser as a user.

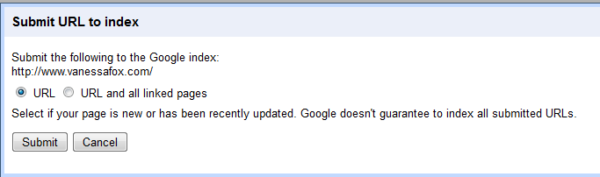

Since this functionality requires that Googlebot actually crawl the page, there’s no need to start at the beginning of the crawling process. You can now simply request indexing consideration for that page after you fetch it by clicking Submit to index.

When Should You Use The New URL Submission Feature?

XML Sitemaps are still the best way to provide a comprehensive list of URLs to Google. A solid internal link architecture, along with external links to pages throughout your site, is still the best way to encourage Google to crawl and index those pages.

However, this new feature is ideal for situations when you launch a new set of pages on your site or have major updates. Google also notes you can use this feature to speed up URL removal or cache updates.

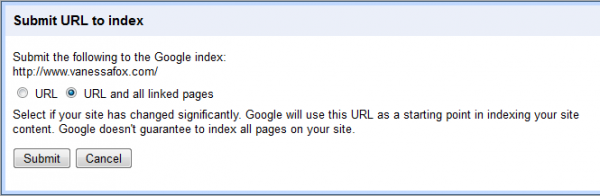

You can submit up to 50 URLs a week (although the UI seems to have a bug that starts you with 28). You can also submit the crawled URL and all pages linked from that URL, but those submissions are limited to 10 per month. A common use of that latter option would be when you have launched a new section of your site.
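Because the limits are tight, it can help to decide up front which requests go out now and which wait. The sketch below is only a planning aid and does not talk to Google at all; `plan_submissions` is a hypothetical helper, and it assumes the weekly single-URL limit and the monthly "URL plus linked pages" limit are counted separately, which the UI does not spell out.

```python
def plan_submissions(single_urls, hub_urls, weekly_left=50, monthly_left=10):
    """Split requests into those that fit the remaining quotas and those to defer.

    single_urls: pages to submit one at a time (weekly quota).
    hub_urls: pages to submit together with everything they link to (monthly quota).
    Assumption: the two quotas are tracked independently.
    """
    weekly_left = max(0, weekly_left)
    monthly_left = max(0, monthly_left)
    return {
        "submit_single": single_urls[:weekly_left],
        "defer_single": single_urls[weekly_left:],
        "submit_with_links": hub_urls[:monthly_left],
        "defer_with_links": hub_urls[monthly_left:],
    }


# Hypothetical launch: one new section hub plus 60 individually updated pages.
updated_pages = [f"https://example.com/page-{i}" for i in range(60)]
new_section = ["https://example.com/new-section/"]

plan = plan_submissions(updated_pages, new_section)
```

Put your most important pages at the front of each list, since whatever does not fit in the quota is simply deferred to the next period.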

Submit a Single URL to Google’s Index

Submit All Pages Linked to a URL to Google’s Index

You should always use this option over the public Crawl URL submission, as requests by verified site owners are likely given higher prioritization. As Google notes, this request doesn’t ensure the URL will in fact end up in the index, but the page is much farther along in the process.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
