Google announces cards, discovery tools, revamped image search at 20th anniversary event

Most of the new features were for mobile devices and focused on structured data and machine learning.

At a surprisingly low-key event marking its 20th anniversary and the future of search, Google announced a number of search features. Some are entirely new, some are expansions or adaptations of existing capabilities, and some are borrowed from or “inspired by” competitors’ products (Snap, Instagram, Pinterest).

Ben Gomes, VP of search, led off the event with a brief historical overview of Google’s mission and its approach to organizing information. He then introduced three “search shifts,” conceptual buckets that define the next generation of search capabilities:

- From answers to journeys.

- From search queries to queryless discovery.

- From text to visual content.

Personalizing the ‘search journey.’ Years ago, Microsoft determined that search was not a one-off behavior. Its research showed people were typically conducting multiple queries over many sessions to accomplish tasks like finding a job, planning a wedding, buying a car, renting an apartment or planning a vacation. Today, Google echoed that idea in two related search announcements: Activity Cards and improved Collections.

Activity Cards will surface “where you left off in search,” showing pages and sites you previously visited along with your earlier queries. Google says the cards won’t appear every time or for every query, and users can edit and remove results from them.

The new Collections

Collections, which lets users save and organize content on their phones, already existed, but it’s not clear how many people have been using it. The new version of Collections is closely tied to Activity Cards.

Users will be able to save pages to their Collections from Activity Cards. There will also be suggestions of related topics. One can imagine the utility of this for planning and high-consideration shopping (e.g., buying a car, home or major appliance).

Google is also adding what it’s calling a “topic layer” to the knowledge graph. This is what’s behind its growing number of related suggestions. According to Google:

The Topic Layer is built by analyzing all the content that exists on the web for a given topic and develops hundreds and thousands of subtopics. For these subtopics, we can identify the most relevant articles and videos — the ones that have shown themselves to be evergreen and continually useful, as well as fresh content on the topic. We then look at patterns to understand how these subtopics relate to each other, so we can more intelligently surface the type of content you might want to explore next.

The ‘Topic Layer’ in the Answer Box/Featured Snippets

In the image above, you can see that there are distinct subtopics for each dog breed. This is based on Google’s understanding of the relationships between these individual breeds and related queries and content. It’s more structured data in action.

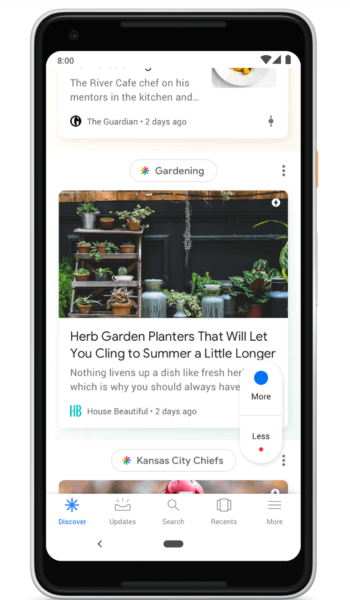

Google Feed becomes ‘Discover.’ Google revealed that Google Feed has 800 million monthly active users globally. It also said that the feed is an increasing source of traffic for third-party publishers. Today, the company announced a name change and some enhancements to Google Feed.

The new product name is “Discover,” and it will appear on the mobile version of the Google home page.

Beyond the new name, Discover brings design improvements along with new user controls and capabilities. Each news or content item will now carry a topic heading that effectively works as a search query, letting users learn more about that particular topic.

Users can also follow topics and indicate whether they want to see more or less content on a particular topic. Google will also be adding more video content to the feed.

Google Feed is now Discover

Visual search: borrowing from Snapchat, Pinterest. The third major set of announcements centered on visual search and discovery, and these updates take at least some of their inspiration from Snap, Instagram and Pinterest.

Building on AMP stories, Google is bringing them into search results, starting with stories about celebrities and athletes. AMP stories are a visually immersive, swipeable mobile format that can include video, and the ones in search will be assembled by machine:

[W]e’re beginning to use AI to intelligently construct AMP stories and surface this content in Search. We’re starting today with stories about notable people — like celebrities and athletes — providing a glimpse into facts and important moments from their lives in a rich, visual format. This format lets you easily tap to the articles for more information and provides a new way to discover content from the web.

Separately, Google said it’s using computer vision to identify the relevant segments of videos for quick preview in search results. The feature, called “featured videos,” is meant to help users find videos that match their interests more quickly by showcasing the most on-point sections.

Google also said that it’s updating Google Images this week to “show more context around images,” giving users more information and making it easier for them to take action on both desktop and mobile devices.

The company added that Google Lens is coming to Google Images, enabling visual search on any item or object in a photo. If Lens picks the wrong product or object, users will be able to manually indicate the one they want (by drawing a circle around it) to redirect Lens to that item.

The other stuff. Google ended with enhancements to its job search capabilities that will show job seekers nearby education or job-training opportunities connected with particular openings. This new initiative is called Pathways.

The company announced initial partnerships with the State of Virginia, the Virginia Community College System and local Virginia employers. It also partnered with Goodwill to help that organization surface its job-training opportunities in search.

Finally, Google is expanding its SOS and Public Alerts to include flood warnings, using sophisticated data modeling to illustrate the potential extent and progression of flood paths and resulting damage. It’s launching the program in India in partnership with that country’s Central Water Commission.

What it all means for marketers. Google is bringing much more structured content into search results and in some cases pre-empting queries or suggesting queries that will generate additional search sessions. Conventional “blue links,” especially on mobile devices, will be further de-emphasized. All these new features also create potential new contextual ad inventory for marketers.

Google didn’t discuss monetization or ad placements in any of these features or content areas. However, at least some of them are likely to see ad placements in the near or medium term.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
