Why Quality Is The Only Sustainable SEO Strategy

One of the most important takeaways after the Panda Update / Farmer fallout is to make your sites as high-quality and useful as possible. The next year should be interesting, as some sites invest in quality, while others try to game signals seeking shortcuts to the hard work. Both are valid, as long as you’re ready to accept the risk of shortcuts, but only the hard work will continue to yield results long-term.

Matt Cutts and Amit Singhal conceded that there are signals in the latest algorithm update that can be gamed. (Any algorithm can be gamed.) What are they? It will take time, but eventually some of them will be discovered.

If quality, credibility, and authority can all be algorithmically identified, then certainly they are based on distinct sets of factors that in sum create signals.

Things such as density of advertising, author names and titles, address and phone information, badges and memberships in known organizations, content density, the quality of a site’s link profile, maybe even W3C compliance (although that’s a stretch) are all potential areas to investigate. This advice is not just for the gamers; these are areas where high-quality, white-hat SEOs should be looking, too.

We are coming upon a graduation of sorts for SEO that will continue to bring various disciplines together: information architecture, user experience, even web design are all important with regard to SEO and how a site is scored.

How a site “feels” to a visitor and the credibility it portrays are areas in which design plays an important part, something John Andrews was already talking about at SearchFest in 2010.

Above and beyond the granular tactical stuff we SEOs are obsessed with, we need to figure out what users want, because that’s where Google is going. Chasing users, not algorithms, will have the best long-term influence on a site’s rankings.

After all, Google (and any search engine) is basically a means to an end, a way to capture audience share (the users) who depend on search to find good information. I’ve been saying exactly the same thing for about 10 years, and it’s more true now than ever.

What Link Metrics Translate To Higher Rankings?

The conference season is in full swing and I’ve spoken at a couple of recent ones: SearchFest, where I presented on link building with Rand Fishkin, and SMX West, where I presented with Vanessa Fox, Dennis Goedegebuure and Tony Adam on enterprise SEO.

Building presentations is always a great exercise, because it forces you to distill your thoughts into actionable, quality information for conference attendees. I love the process and I really enjoy speaking at these shows.

My SearchFest presentation sought to communicate the following four points to our audience:

1. Traffic yield of the URL

While “people” need keywords to find what they’re looking for, keywords are just a proxy for the people who use them. As SEOs, we tend to obsess over keywords… after all, they’re where the money is. Right? Sort of. Keywords are a means to an end; they are bait on a hook. The hook is your quality resource, which will attract and retain those people. And that resource is best signified for SEOs by one thing and one thing only: the URL. In SEO, the URL is where all the value is, not the keywords.

Ranking reports are becoming more meaningless than ever. Google appears to be throwing random results back for IPs and/or user agents that appear to be scraping for rankings. This creates a lot of noise and problems as reports are built for clients.

What matters is not the ranking (funny though how Google reports on “average rank” in Webmaster Tools), but the total traffic yield of the URL.

How many keyword searches bring traffic to the URL? How much volume do those searches have, and how much traffic does the URL see? And what is the quality of that traffic, as measured by bounce rate (hopefully low), average time on site or pages per visit, and conversion rate (hopefully high)?

That is much better information than a ranking report. All this said, ranking reports are not going away because there is far too much education yet to be done on the client side. Ranking reports are comfortable; they are what has always been used to track SEO success. That needs to change.
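To make “traffic yield” a little more concrete, here is a minimal Python sketch that rolls keyword-level analytics rows up to the URL. The row layout (URL, keyword, visits, bounces, conversions) and the function name `traffic_yield` are assumptions made for illustration; a real analytics export will look different.

```python
from collections import defaultdict


def traffic_yield(rows):
    """Aggregate keyword-level rows into one summary per URL.

    Each row is a hypothetical tuple:
    (url, keyword, visits, bounces, conversions).
    """
    totals = defaultdict(
        lambda: {"keywords": set(), "visits": 0, "bounces": 0, "conversions": 0}
    )
    for url, keyword, visits, bounces, conversions in rows:
        entry = totals[url]
        entry["keywords"].add(keyword)
        entry["visits"] += visits
        entry["bounces"] += bounces
        entry["conversions"] += conversions

    report = {}
    for url, entry in totals.items():
        visits = entry["visits"]
        report[url] = {
            # How many distinct keywords send traffic to this URL
            "keywords": len(entry["keywords"]),
            # Total traffic the URL sees across all of those keywords
            "visits": visits,
            # Quality signals: lower bounce rate and higher conversion rate are better
            "bounce_rate": entry["bounces"] / visits if visits else 0.0,
            "conversion_rate": entry["conversions"] / visits if visits else 0.0,
        }
    return report


# Made-up sample data, purely for illustration
sample_rows = [
    ("/guide", "seo tips", 100, 40, 5),
    ("/guide", "seo guide", 50, 20, 4),
    ("/pricing", "seo pricing", 10, 9, 0),
]
for url, summary in traffic_yield(sample_rows).items():
    print(url, summary)
```

A report built this way puts the URL, not the keyword or its rank, at the center of the conversation with the client.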

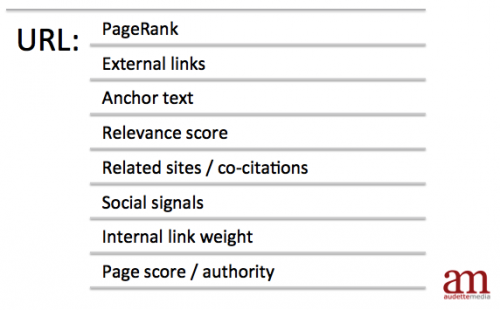

Getting back to the above point, the URL is where all the value is stored. Page scoring factors and many other criteria are rolled up into the URL, which is stored as a distinct field in the search engine databases.

2. Preserving the power of URLs

This is why it’s absolutely critical that URLs are preserved. Well-aged URLs will score best, unless they’re in News and QDF searches. Redirects greatly hamper SEO success. Any redirect.

Recent experiences have shown a great deal of equity loss when using 301s, and in some cases, a rel canonical tag appears to work better to transfer equity. The idea that one can “store” internal PageRank to be used later with a 301 is what basically introduced the equity rot that is occurring with permanent redirects now.

I still recommend using a 301 when you can. It’s the best possible way to permanently move content. Just be open to rel canonical, because it’s quite powerful and can be a very strong signal for Google at this time. It’s also well adopted across the web. Bing also supports it, but reports are mixed on how well it uses it.
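As a rough illustration of the two mechanisms, the Python sketch below builds a 301 response and a rel canonical tag. The helper names and the example.com URLs are hypothetical, and how much equity either one passes is up to the engines; the code only shows what each signal looks like in the HTTP response and in the page markup.

```python
from html import escape


def permanent_redirect(new_url):
    """Return the status code and headers for an HTTP 301 response.

    A 301 tells browsers and crawlers that the content has moved
    permanently to the URL given in the Location header.
    """
    return 301, {"Location": new_url}


def canonical_tag(preferred_url):
    """Return a rel canonical link element for the <head> of a page.

    The page stays reachable at its own URL; the tag only hints at
    which URL should be treated as the preferred version.
    """
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'


# Hypothetical URLs, for illustration only
status, headers = permanent_redirect("https://example.com/new-page")
print(status, headers["Location"])
print(canonical_tag("https://example.com/widgets?color=blue&size=large"))
```

The practical difference: a redirect removes the old URL from the visitor’s path entirely, while a canonical tag leaves both URLs live and asks the engine to consolidate them.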

3. Looking beyond links

It’s not only about links. However, especially prior to Panda / Farmer, links tend to brute force top rankings on competitive SERPs (when overall domain authority, or the “Wikipedia effect,” doesn’t hold sway).

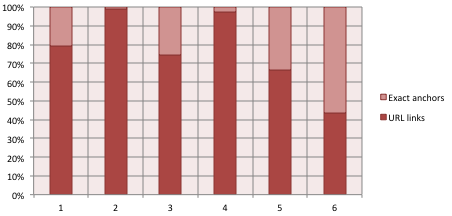

I took the time to analyze several competitive SERPs to see what factors really mattered when it comes to links: is it sheer quantity, unique domains, page-specific links, diversity, or anchor text? In my analysis, the biggest four factors were domain authority, total domain links and unique domains, page-specific links and uniques, and matching anchor text.

However, it was interesting to note that in several cases, prominence of exact-match anchors seemed to be very common in positions 7-10, possibly indicating up-and-coming competitors pushing hard for rankings using heavy anchor matching. Stronger competitors were benefiting from a more cohesive link strategy that also focused on sheer quantity, especially quantity of unique referring domains.

The below image shows the link profile for the SERP ‘marketing automation’ in unique referring links and matching anchor text. Can you find the Wikipedia entry? Bet you can.

4. Link factors in search algorithms

There are many link factors that could (and should) be taken into account by any algorithm. These include (at least):

- Recency (are links “come and go,” have there been a lot or very few links recently, etc.)

- Transience (do links disappear after a time)

- Anchor text (how much exact match is there)

- Context (is the link contextual)

- Relevance (how related to the site’s content is the link)

- Prominence of placement (is the link in a spot that maximizes its CTR, or is it lower left or in a footer)

- Other links on the page (what quality are the other links on the page, and how well do they match)

- Trends (what is the trend of links over time)

- Co-citation (what kinds of links point to the page)

- Frequency of linking (how frequently do the domains exchange links)

While the above is a fairly exhaustive list of link factors, in our analyses we’ve found time and again that there are basically 4 link factors that tend to influence performance:

- The domain authority of the ranking URL

- The quantity and diversity of links into the domain

- The quantity and diversity of links into the URL

- The amount of matching anchors

(“Diversity” here meaning the amount of unique referring domains.)
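For what it’s worth, three of these four factors can be approximated from a simple backlink export. The sketch below is only an illustration: the `link_profile` function and the (source URL, anchor text) input format are assumptions, hostnames stand in for referring domains, and domain authority is left out because it comes from third-party tools rather than raw link data.

```python
from urllib.parse import urlparse


def referring_domain(source_url):
    """Return the hostname of a linking page, without a leading 'www.'.

    Simplification: subdomains such as blog.example.org count as their
    own domain here; a real tool would map them to the registrable domain.
    """
    host = urlparse(source_url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def link_profile(backlinks, keyword):
    """Summarize quantity, diversity, and exact-match anchors.

    backlinks: iterable of hypothetical (source_url, anchor_text) pairs
    pointing at one URL (or at one domain, depending on the export).
    """
    backlinks = list(backlinks)
    domains = {referring_domain(url) for url, _ in backlinks}
    domains.discard("")  # ignore rows whose source URL could not be parsed
    wanted = keyword.strip().lower()
    exact = sum(1 for _, anchor in backlinks if anchor.strip().lower() == wanted)
    total = len(backlinks)
    return {
        "total_links": total,                # quantity
        "unique_domains": len(domains),      # diversity
        "exact_match_anchors": exact,        # matching anchors
        "exact_match_share": exact / total if total else 0.0,
    }


# Made-up sample data, purely for illustration
sample_links = [
    ("https://www.example.com/a", "marketing automation"),
    ("https://example.com/b", "Marketing Automation"),
    ("https://blog.example.org/post", "click here"),
    ("https://news.example.net/x", "marketing automation software"),
]
print(link_profile(sample_links, "marketing automation"))
```

Running the same summary once for links into the domain and once for links into the specific URL gives the two quantity-and-diversity figures side by side.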

There are always exceptions, and in fact, every SERP is unique. Additionally, it’s impossible to isolate link scoring outside of on-page factors; rankings are more complex than links. But the results of link analyses tend to show the above factors.

How To Achieve SEO Sustainability

In industrial-strength SEO, quality and scale must hold sway. On-page strategies, internal linking, and off-page strategies in social and link development should always emphasize quality and scalable techniques.

As we’ve found with the latest Google algorithm shift, when quality and the user are kept in focus, performance can withstand even dramatic algorithm adjustments. The name of the game in SEO is change, but by keeping focused on users and not algorithms, negative consequences can be minimized.

That’s not to say you shouldn’t keep an eye on what the engines are doing. On the contrary, I recommend studying the algos like a hawk! It’s essential to know what’s happening and why. Just don’t build your SEO strategy around the algorithms. Build your SEO strategy around your users.

