How to remove sensitive client data from Google’s index

Uncover the critical search indexing issue many SEOs overlook, the accidental exposure of client data on Google, and what to do about it.

Better keyword rankings. More traffic. Extra conversions from organic search. These are the KPIs used to measure SEO performance.

But beyond growth metrics, there’s a key element that some consultants or agencies overlook when managing a client’s SEO campaigns:

Preventing confidential client content from appearing in Google search results.

Neglect it, and you risk a breach of trust or expensive litigation that can ultimately end a client relationship.

All this need not happen if you know how easily client data can enter Google’s index and how to avoid it.

Below, I’ll explain how client data can accidentally end up in Google’s index and how to deindex it.

How I found sensitive data

I’m a full-time independent SEO consultant who has partnered with a variety of midsize companies since 2018, and I’ve been improving organic search results for over 10 years.

When doing a technical SEO audit, I use a site search operator (entering site:domain.com) on Google to check out results. Here, I can quickly see how site names, titles, URLs and snippets look across different page categories.

I also notice patterns of what’s getting indexed, perhaps appending keywords to the operator to get more specific when needed.
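
If you run these checks often, a tiny helper can build the query links for you. The Python sketch below only constructs a Google search URL for a site: query, optionally narrowed by keywords, so you can open it in a browser. It does not fetch or scrape results. The domain and keyword are placeholders.

```python
from urllib.parse import urlencode


def site_search_url(domain: str, *keywords: str) -> str:
    """Build a Google search URL for a site: query, optionally narrowed by keywords."""
    # Combine the operator and any extra keywords into one query string.
    query = " ".join([f"site:{domain}", *keywords])
    # urlencode escapes ":" and turns spaces into "+".
    return "https://www.google.com/search?" + urlencode({"q": query})
```

For example, `site_search_url("example.com", "survey")` gives a link to the site:example.com results narrowed to pages mentioning "survey".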

With most clients, I’ll notice some familiar problems: dev/testing/staging sites getting indexed, thin content diluting link equity or harming crawl efficacy (or leading to keyword cannibalization), and paid landing pages that aren’t meant to rank.

I’ve started to detect, though, with alarming frequency, something unique to SaaS clients:

Indexed pages, typically on subdomains that no one on the marketing or product teams ever thinks about.

The most innocuous are customer-specific subdomains with a customized login experience (e.g., client.example.com).

Even here, a client may not wish to have their name in search results. Depending on your product, this could reveal a differentiator or vulnerability to competitors.

In far more serious cases, web-based forms containing data collected from specific people could be found.

In the worst cases (and with the right search query), even form fields could be accessed and changed due to a lack of password protection.

This has nothing to do with growth through organic search, but I’m quick to point these issues out. It seemed obvious to me that much could be at stake here.

In at least several cases, this became an “all-hands-on-deck” problem in that I was asked to get this data out of search results faster than ASAP.

One CEO told me his security consultants had never raised this possibility, yet I found it quickly through a basic step that most SEOs would take in an audit.

To be fair, it nearly always requires an unusual search to find these sorts of pages.

Yet consider the odd searches that clients, maybe even your leadership team, would enter, not to mention rivals. (Never forget Google’s enduring statistic that 15% of the searches it sees every day are new!)

Even if it isn’t a legal issue, clients finding their sensitive data in search results before you do could still harm your relationship.

Why is this data even on Google?

A single, inconspicuous link to a page from any resource accessed by search engines, anywhere on the web, is all it takes:

- Is the page listed in your XML sitemap, even if it’s not linked on your site?

- Could there have been a reference on your site in the past or something that goes unnoticed in JavaScript?

- More often than not, the client links to the page – but it’s only intended for specific people to see, like survey participants, not the general public.
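
The first question is easy to check programmatically. Here’s a minimal Python sketch that parses an XML sitemap and flags any URLs on hosts you consider sensitive. It assumes a standard `urlset` sitemap (not a sitemap index file), and the host `data.example.com` is a hypothetical example.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def flag_sensitive_urls(sitemap_xml: str, sensitive_hosts: set[str]) -> list[str]:
    """Return the sitemap <loc> URLs whose hostname is in sensitive_hosts."""
    root = ET.fromstring(sitemap_xml)
    flagged = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        if urlparse(url).hostname in sensitive_hosts:
            flagged.append(url)
    return flagged


# Hypothetical sitemap content for illustration.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/pricing</loc></url>
  <url><loc>https://data.example.com/survey/123</loc></url>
</urlset>"""
```

`flag_sensitive_urls(SAMPLE, {"data.example.com"})` returns only the survey URL, which is exactly the kind of page that shouldn’t be in a sitemap.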

Thankfully, awareness is more than half the battle here. Once you know the pages to be removed from search, you can quickly jumpstart the correction process, starting with Google.

How to quickly deindex content in Google

Find a pattern for URLs with sensitive data shown in Google search results

For example, it’s common to have a subdomain named data.example.com that houses the web-based version of your SaaS product. You can use the site search operator (site:data.example.com) to scan the results pages.

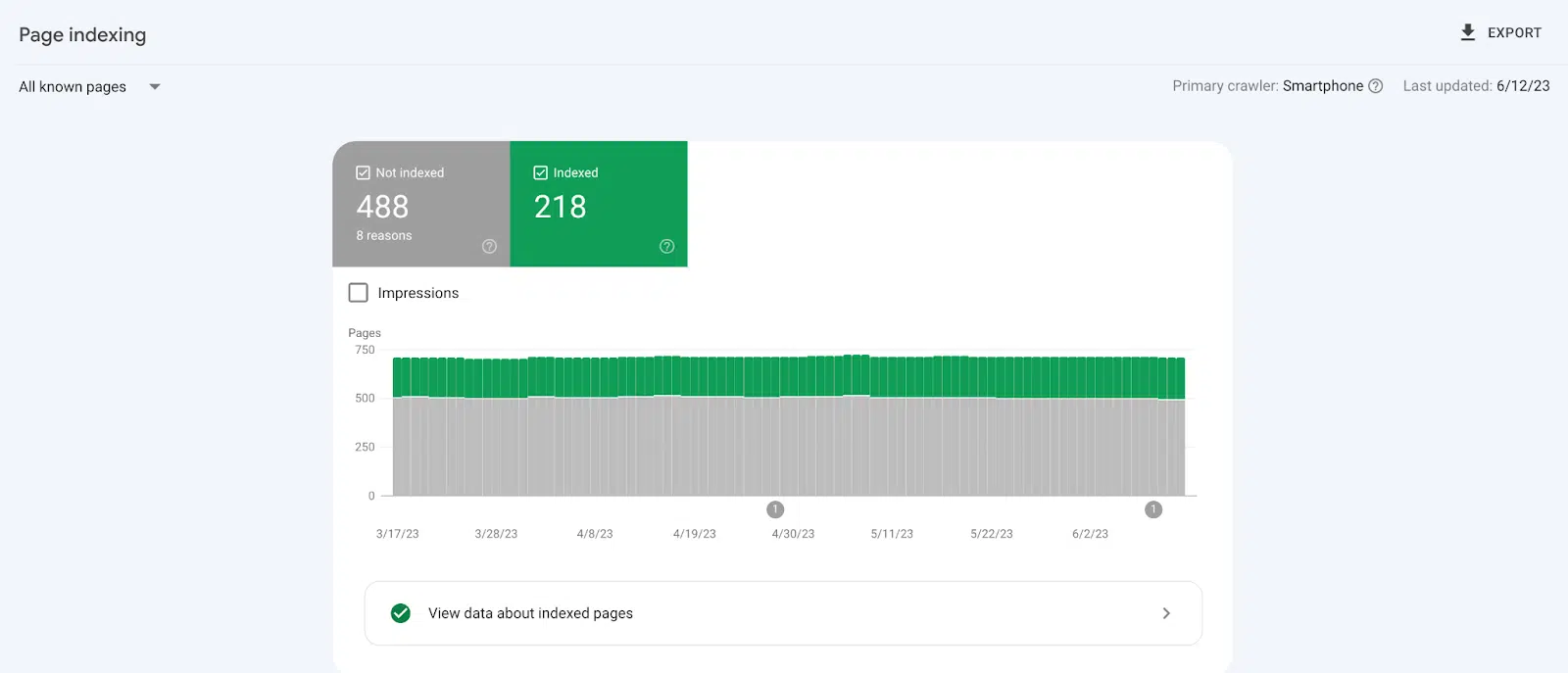
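
Once you’ve collected the URLs you found (copied from results or from an export), grouping them often makes the pattern obvious. This Python sketch counts URLs by hostname and first path segment. The sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def url_patterns(urls: list[str]) -> Counter:
    """Count URLs by (hostname, first path segment) to surface removal patterns."""
    counts = Counter()
    for url in urls:
        parsed = urlparse(url)
        # Drop empty segments produced by leading/trailing slashes.
        segments = [s for s in parsed.path.split("/") if s]
        first = segments[0] if segments else ""
        counts[(parsed.hostname, first)] += 1
    return counts
```

Calling `.most_common()` on the result lists the biggest clusters first, such as every form URL on `data.example.com`.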

Use the Page Indexing report in Google Search Console (GSC) to view all indexed URLs

This may not show everything. Contacting your product team about this could help, as they may be able to provide everything you need more quickly and accurately.

Double-check your URLs

In GSC, run the URL Inspection tool on every URL if possible (or at least on a sample) to confirm the pages you found still exist at those locations.
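
If you’d rather script this check than click through the UI, the Search Console API offers a URL Inspection endpoint (`urlInspection.index.inspect`). The sketch below only builds the request bodies, optionally for a random sample. Actually sending them requires an authorized OAuth client, which isn’t shown, and the `sc-domain:` property value is a placeholder for your own property.

```python
import random

# Search Console API endpoint the bodies are meant for (POST, OAuth required).
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def inspection_payloads(urls, site_url, sample_size=None, seed=0):
    """Build URL Inspection request bodies, optionally for a random sample of URLs."""
    chosen = list(urls)
    if sample_size is not None and sample_size < len(chosen):
        # A seeded RNG keeps the sample reproducible between runs.
        chosen = random.Random(seed).sample(chosen, sample_size)
    return [{"inspectionUrl": u, "siteUrl": site_url} for u in chosen]
```

Sampling keeps you well within API quotas when the list runs to thousands of URLs.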

To find the offending pages, consider all URL versions that may canonicalize toward what you see in search results.

With the canonical URL removed, the alternate versions may get indexed.
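
To make sure you don’t miss those alternates, you can enumerate the most common variants of each URL. This Python sketch covers only http/https, www/non-www and trailing-slash variants. Your site may have others (query parameters, uppercase paths), and some generated variants may simply not exist.

```python
from urllib.parse import urlsplit, urlunsplit


def url_variants(url: str) -> set[str]:
    """Generate common alternates of a URL: http/https, www/non-www, trailing slash."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # note: any port is dropped
    bare = host[4:] if host.startswith("www.") else host
    path = parts.path or "/"
    if path == "/":
        paths = {"/"}
    else:
        stripped = path.rstrip("/")
        paths = {stripped, stripped + "/"}
    variants = set()
    for scheme in ("http", "https"):
        for h in (bare, "www." + bare):
            for p in paths:
                # Keep the query string; drop any fragment.
                variants.add(urlunsplit((scheme, h, p, parts.query, "")))
    return variants
```

Feed each variant into the URL Inspection step above to see which ones Google has actually indexed.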

Make a new request in the GSC Removals tool, either applying the pattern (likely a subdomain) with the second option under New Request, “Remove all URLs with this prefix,” or listing every URL individually.
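
Before submitting, it helps to know which URLs a single prefix request would cover and which would still need their own requests. This Python sketch treats the prefix as a plain string match, which is a simplification of how the Removals tool matches URLs. The prefix and URLs are hypothetical.

```python
def split_by_prefix(urls, prefix):
    """Partition URLs into those starting with prefix and those needing separate requests."""
    covered = [u for u in urls if u.startswith(prefix)]
    remaining = [u for u in urls if not u.startswith(prefix)]
    return covered, remaining
```

Anything left in `remaining` is a candidate for an individual “Remove this URL only” request.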

For a limited set of pages, running the URL Inspection tool after submitting the removal may speed things up and also confirms the latest status, though it must be done one URL at a time. (Bing may not be the giant that Google is, at least today, but you should also repeat this in the Block URLs tool in Bing Webmaster Tools.)

Removal through these steps is temporary, though: it lasts only about six months.

It also won’t stop the pages from returning later or from appearing in other search engines, so you’ll need to take the final step below.
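
Because the removal expires, it’s worth scheduling a follow-up check. This Python sketch treats “about six months” as six calendar months, which is an approximation, so confirm the actual status in GSC. The 14-day reminder lead time is an arbitrary choice.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def removal_followup(requested: date, lead_days: int = 14) -> tuple[date, date]:
    """Return (approximate expiry, reminder date) for a temporary removal request."""
    expiry = add_months(requested, 6)
    return expiry, expiry - timedelta(days=lead_days)
```

For a request made on August 31, 2024, this returns an approximate expiry of February 28, 2025 (day clamped to the shorter month) and a reminder two weeks earlier.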

How to remove content from Google permanently

Two methods can work here:

1. Use a noindex meta robots tag in the head section of those pages

You should have your web developers add this to the page template to replicate it across all pages.
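
The tag itself is `<meta name="robots" content="noindex">` (Google also honors `name="googlebot"`). Once the template change ships, this Python sketch checks a page’s HTML for a noindex directive using only the standard library. How you fetch the HTML is up to you and isn’t shown.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # Content is a comma-separated list, e.g. "noindex, nofollow".
            for token in attr.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """True if the page carries noindex (or none, which implies noindex)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives
```

Running it across a sample of affected URLs is a quick way to confirm the template change reached every page.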

- For PDFs, images and other non-HTML content, you can add an X-Robots-Tag HTTP header with a value of either noindex or none. This also works for regular HTML pages, but it isn’t as fast to implement.
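
As an illustration, here’s a minimal Python sketch that adds the header to every PDF response, assuming your files are served by a Python WSGI app. Many teams set this in their web server configuration instead. The `.pdf` path rule is a hypothetical example.

```python
def noindex_pdfs(app):
    """WSGI middleware: add 'X-Robots-Tag: noindex' to responses for .pdf paths."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")

        def start(status, headers, exc_info=None):
            if path.lower().endswith(".pdf"):
                # Replace any existing X-Robots-Tag rather than sending two.
                headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
                headers.append(("X-Robots-Tag", "noindex"))
            return start_response(status, headers, exc_info)

        return app(environ, start)

    return wrapped
```

Wrapping the app once (`app = noindex_pdfs(app)`) covers every PDF it serves, which mirrors adding the meta tag to a shared template.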

Note: Don’t rely on robots.txt disallow rules (images are the exception). A disallow blocks crawling but not indexing, so it only helps if the pages were never indexed in the first place. Worse, it stops Google from crawling the page and seeing your noindex tag.
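
To catch this conflict, you can test whether robots.txt lets Googlebot reach a URL you’ve marked noindex. This Python sketch uses the standard library’s `urllib.robotparser`, whose matching is simpler than Google’s (for example, it doesn’t implement Google’s wildcard and longest-match rules), so treat the result as a first check. The rules and URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def noindex_is_visible(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if robots.txt allows crawling url, i.e. a crawler can see its noindex."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that would hide the noindex on survey pages.
ROBOTS = "User-agent: *\nDisallow: /survey/\n"
```

If this returns False for a page you need deindexed, remove the disallow rule until the page has dropped out of the index.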

2. Gate the content

Password-protecting your webpages or files will ensure that only authorized users can access them. This is also another way to block your content from appearing on Google.
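
Most SaaS products already have a login system that should handle this. Purely as an illustration, here’s a minimal Python sketch of HTTP Basic authentication as WSGI middleware. The hard-coded credentials and realm are placeholders, and Basic auth should only ever be used over HTTPS.

```python
import base64
import hmac


def require_basic_auth(app, username, password, realm="Restricted"):
    """WSGI middleware: return 401 unless the request carries matching Basic credentials."""
    expected = "Basic " + base64.b64encode(f"{username}:{password}".encode()).decode()

    def wrapped(environ, start_response):
        supplied = environ.get("HTTP_AUTHORIZATION", "")
        # Constant-time comparison avoids leaking how much of the header matched.
        if hmac.compare_digest(supplied.encode(), expected.encode()):
            return app(environ, start_response)
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", f'Basic realm="{realm}"'),
            ("Content-Type", "text/plain"),
        ])
        return [b"Authentication required"]

    return wrapped
```

Because crawlers don’t send credentials, they receive the 401 response instead of the page content.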

Preventing sensitive content from appearing in search results

After taking one of these steps, you can rest assured that pages with sensitive client data will be removed and won’t re-enter Google’s index. In most cases, they’re removed within a day.

In good faith, you should tell your clients exactly what happened. Just remember that nothing ever goes away completely on the web.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
