SEO shortcuts gone wrong: How one site tanked – and what you can learn

Scaling SEO with AI? Beware the risks. One site’s rapid rise led to a steep crash. Here’s what went wrong and how to avoid the same fate.

AI has made it easier than ever to scale SEO fast – but with it comes high risk.

What happens when the party is over and short-term wins become long-term losses?

Recently, I worked with a site that had expanded aggressively using AI-powered programmatic SEO.

It worked until it didn’t, resulting in a steep decline in rankings from which I doubt they will recover.

It’s not worth the fallout when the party is over. And, eventually, it will be.

Here are the lessons learned along the way and ways to approach things differently.

When the party ends

The risks of AI-driven content strategies aren’t always immediately apparent. In this case, the signs were there long before rankings collapsed.

This client came to us after reading my last article on the impact of the August 2024 Google core update.

Their results were declining rapidly, and they wanted us to audit the site to shed some light on why this might be happening.

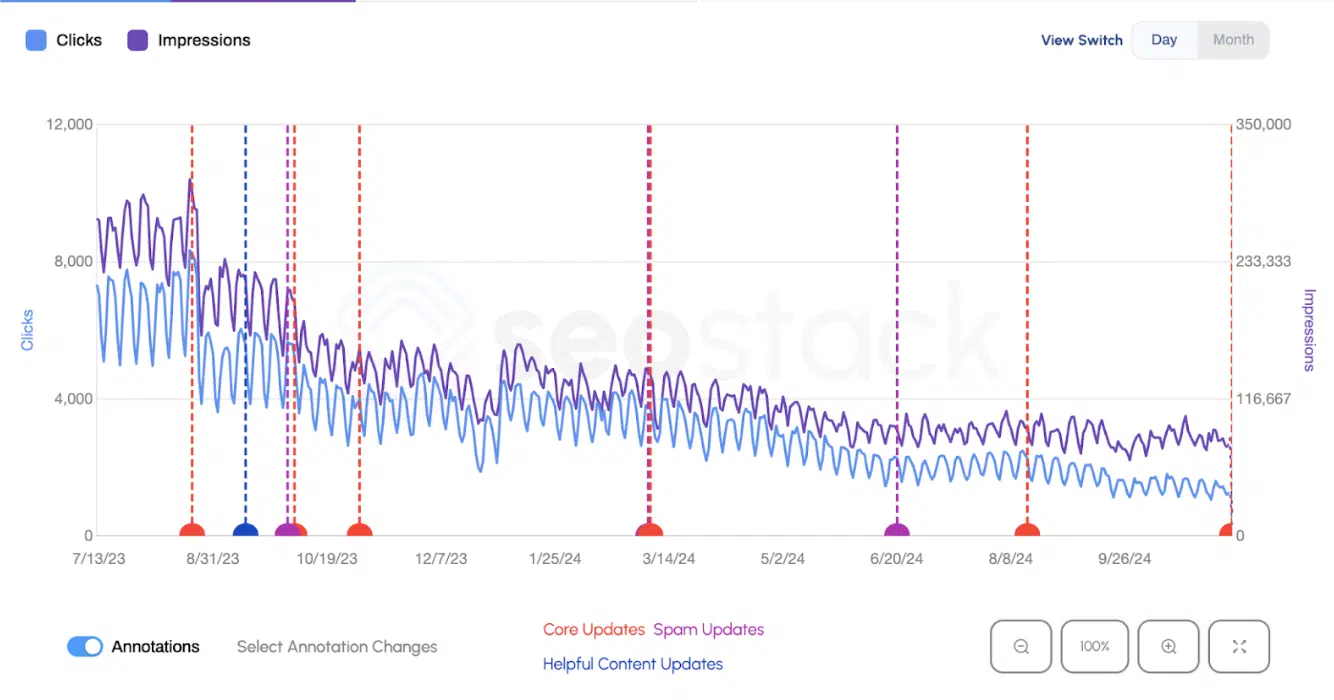

The August 2023 core update first impacted the site, followed by even deeper losses after the helpful content update.

These weren’t just temporary ranking fluctuations – they signaled a deeper problem.

Obviously, for a website whose primary channel was SEO, this had a detrimental effect on revenue.

The first step was to evaluate the impact we were seeing here.

- Is the site still ranking for relevant queries and simply dropping in positions?

- Or has it lost queries alongside clicks?

I’m a fan of Daniel Foley Carter’s framework for evaluating impact as slippage or devaluation, which is made easy by his SEO Stack tool.

As he puts it:

- “Query counting is useful to evaluate if a page is suffering from devaluation or slippage – which is ideal if your site gets spanked by HCU / E-E-A-T infused Core Updates.”

The two scenarios are quite different and have different implications:

- Slippage: The page maintains query counts, but clicks drop out.

- Devaluation: The page’s query count decreases, and the remaining queries drop to lower position groups.

Slippage means the content is still valuable, but Google has deprioritized it in favor of stronger competitors or intent shifts.

Devaluation is far more severe – Google now sees the content as low-quality or irrelevant.
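To make the distinction concrete, here is a minimal sketch of how a page could be labeled from before-and-after Search Console totals. It is not how SEO Stack works internally. The `PageSnapshot` class, the `classify_impact` function, and the 20% `query_loss_threshold` are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PageSnapshot:
    """Search Console totals for one page over one comparison period."""
    query_count: int      # distinct queries the page appeared for
    clicks: int
    avg_position: float   # lower is better


def classify_impact(before: PageSnapshot, after: PageSnapshot,
                    query_loss_threshold: float = 0.2) -> str:
    """Label a page as 'devaluation', 'slippage', or 'stable'.

    query_loss_threshold is a hypothetical cutoff: the share of queries
    a page must lose before the drop is treated as devaluation.
    """
    if before.query_count == 0:
        return "no baseline"

    query_loss = (before.query_count - after.query_count) / before.query_count
    lost_clicks = after.clicks < before.clicks
    lost_position = after.avg_position > before.avg_position

    # Devaluation: queries disappear and the rest rank worse.
    if query_loss >= query_loss_threshold and lost_position:
        return "devaluation"
    # Slippage: query count roughly holds, but clicks fall away.
    if lost_clicks:
        return "slippage"
    return "stable"
```

For example, a page that keeps 950 of its 1,000 queries but loses half its clicks would come back as slippage, while one that falls from 1,000 queries to 200 and ranks lower would come back as devaluation. The threshold should be tuned against your own data.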

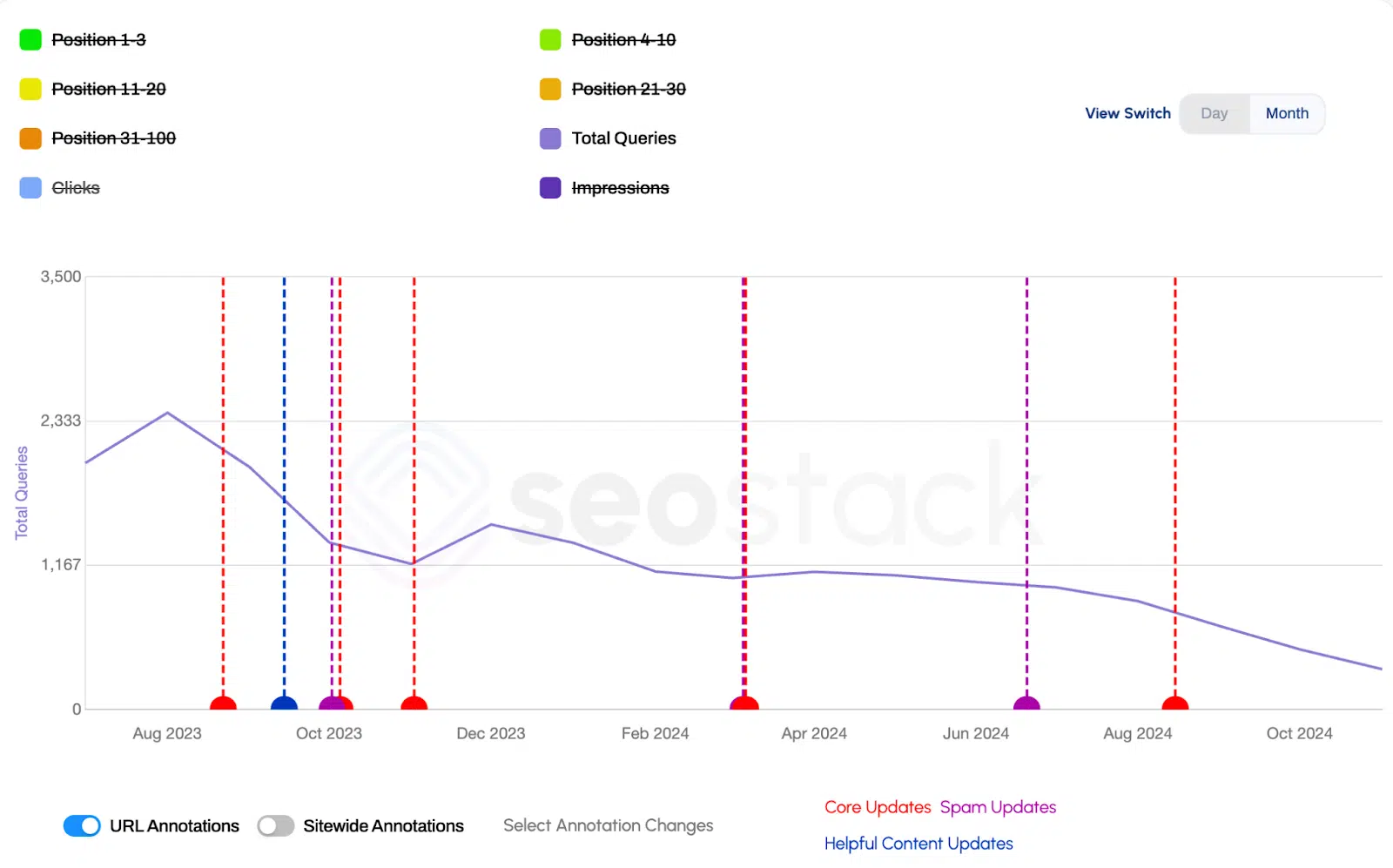

We analyzed a sample of the top revenue-driving pages to understand whether there was a pattern.

Out of the 30 pages analyzed, more than 80% had lost both total query counts and positions.

For example, the page below was the best performer in both traffic and revenue. The query drop was severe.

It was evident that most pages had been devalued and that Google saw the website as a whole as low quality.

The cause behind the effect

The client had not received a manual penalty – so what triggered the decline?

A combination of low-quality signals and scaled content strategies made their site appear spammy in Google’s eyes.

Let’s dig into the biggest red flags we found:

- Templated content and duplication.

- Low site authority signals.

- Inflated user engagement.

- Misuse of Google’s Indexing API.

Templated content and duplication

The website in question had excessive templated content with significant duplication, making Google (and me) think the pages were produced using poor AI-enabled programmatic strategies.

One of the first things we checked was how much of the site’s content was duplicated.

Surprisingly, Screaming Frog didn’t flag this as a major problem, even with the duplication threshold set to 70%. Siteliner gave us better results: over 90% duplication.

Considering what I saw in the manual check of several pages, this was no surprise. Those pages were super similar.

Each piece had a clear template they were following, and there was only minimal differentiation between the content.
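If you want a quick second opinion on how similar two templated pages are, a rough check can be scripted. The sketch below uses word shingles and Jaccard similarity, which is a common near-duplicate technique. It is not the method Screaming Frog or Siteliner uses, and the function names are my own.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word n-grams (shingles) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Too short to shingle: treat the whole text as one unit.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a: str, text_b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Two made-up pages built from the same template, differing by one word.
page_a = "Find the best plumbers in Leeds. Compare prices and book online today."
page_b = "Find the best plumbers in York. Compare prices and book online today."
similarity = jaccard_similarity(page_a, page_b)
```

Run it on the extracted main content of each page rather than the full HTML, otherwise shared navigation and footers will inflate the score.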

When asked, the client denied using automation but said AI was used. However, they insisted their content underwent QA and editorial review.

Unfortunately, the data told a different story.

Site authority

From the Google API leak, the DOJ trial, and insights from a recent Google exploit, we know that Google uses some form of site authority signal.

According to the exploit, the quality score is based on:

- Brand visibility (e.g., branded searches).

- User interactions (e.g., clicks).

- Anchor text relevance around the web.

Relying on short-term strategies can tank your site’s reputation and score.

Brand signals

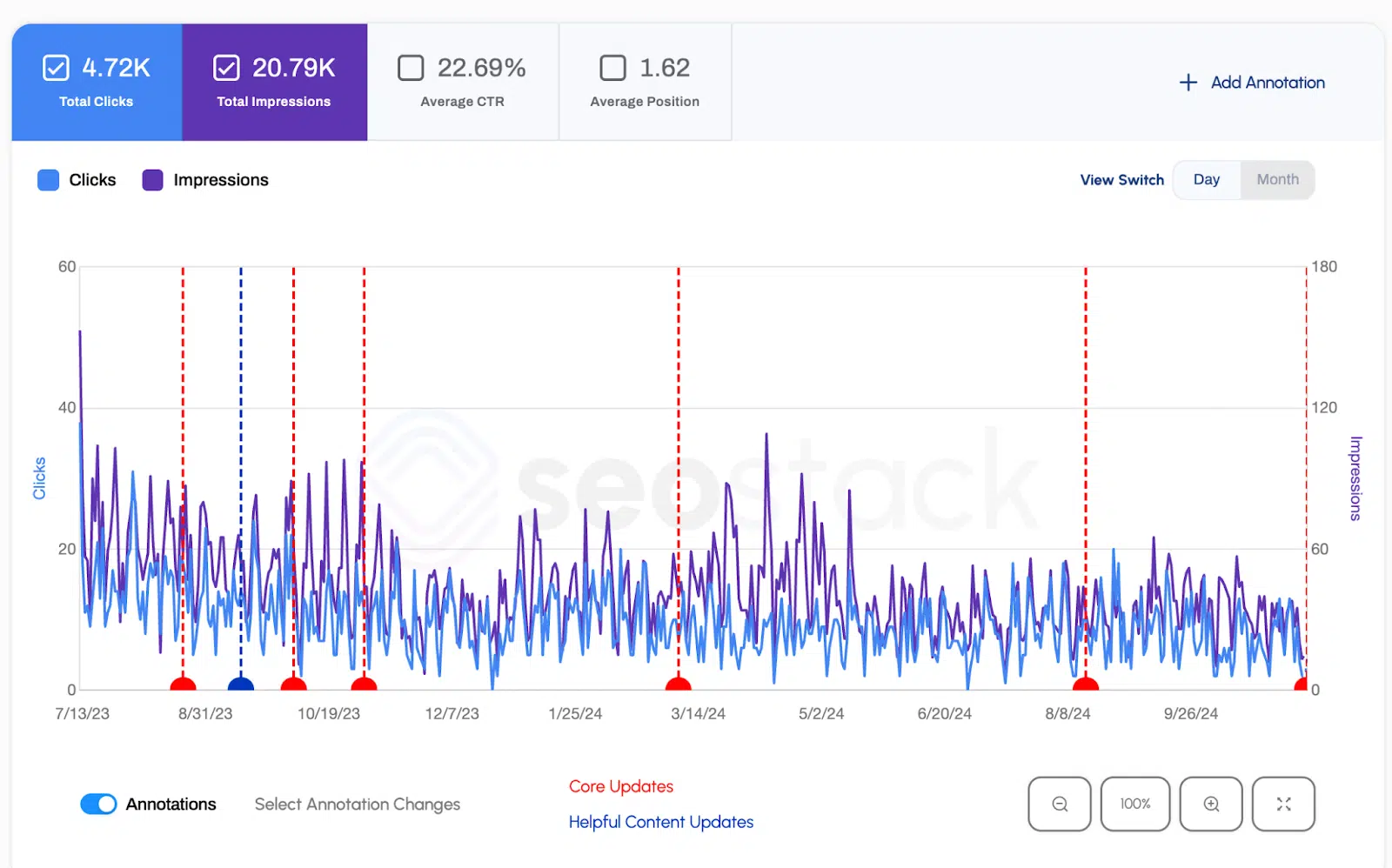

To understand the impact on our new client, we first looked at the impact on brand search vs. non-brand.

As suspected, non-brand keywords nose-dived.

Brand was less affected.

This is expected since branded keywords are generally considered more secure than non-brand ones.

After all, the intent there is strong. Those users search for your brand with a clear intention to engage.

It also often takes some time to see the impact on branded keywords.

Many users searching for a specific brand are repeat visitors, and their search behavior is less sensitive to short-term ranking fluctuations.

Finally, brand traffic is more influenced by broader marketing efforts – such as paid ads, email campaigns, or offline marketing.

But something else stood out – the brand’s visibility didn’t match its historical traffic trends.

We analyzed their branded search trajectory vs. overall traffic, and the findings were telling:

- Their brand recognition was remarkably low relative to traffic volume, even when considering that GSC samples data on a filtered report.

- Despite their improved visibility and active social presence, branded searches showed no meaningful growth curve.
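The brand vs. non-brand split can be reproduced from a Search Console query export with a simple pattern match. This is a minimal sketch: the brand terms in `BRAND_PATTERN` are placeholders, and the `(query, clicks)` row shape is an assumption about how you have parsed the export.

```python
import re
from collections import defaultdict

# Hypothetical brand terms, including a common misspelling.
BRAND_PATTERN = re.compile(r"\b(acme|acmee)\b", re.IGNORECASE)


def split_clicks_by_brand(rows, pattern=BRAND_PATTERN):
    """Sum clicks into 'brand' and 'non_brand' buckets.

    rows is an iterable of (query, clicks) pairs, for example parsed
    from a Search Console query export.
    """
    totals = defaultdict(int)
    for query, clicks in rows:
        bucket = "brand" if pattern.search(query) else "non_brand"
        totals[bucket] += clicks
    return dict(totals)


def brand_share(totals):
    """Fraction of all clicks that came from branded queries."""
    total = sum(totals.values())
    return totals.get("brand", 0) / total if total else 0.0
```

Running this per month gives a brand share trend you can set against overall traffic. Keep in mind that Search Console omits some queries, so the totals will understate real volumes.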

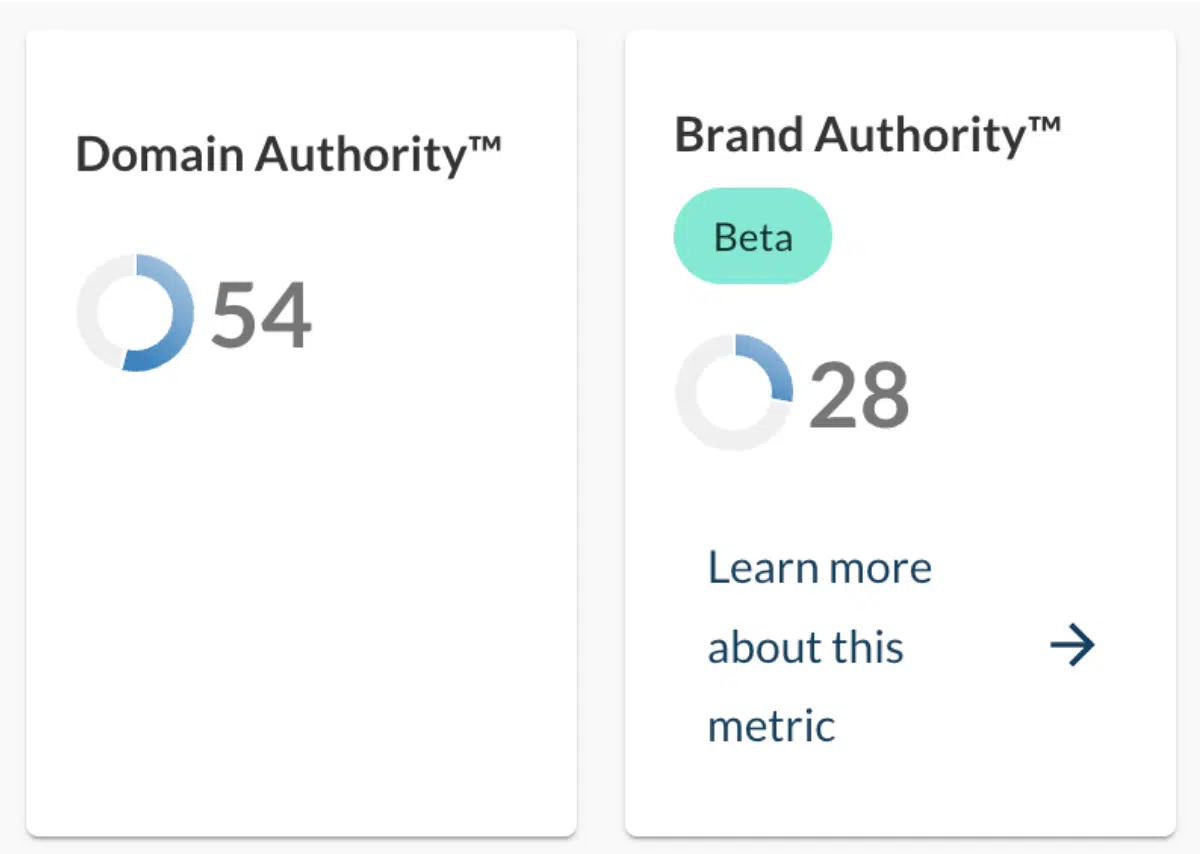

We know that Google uses some type of authority metrics for websites.

Many SEO tools have their own equivalents that try to emulate how this is tracked. One example is Moz, with its Domain Authority (DA) and, recently, Brand Authority (BA) metrics.

The DA score has been around for ages. While Moz says it is a combination of various factors, in my experience, the main driver is the website’s link profile.

BA, on the other hand, is a metric launched in 2023. It relates to a brand’s broader online influence and focuses on the strength of a website’s branded search terms.

Let’s be clear: I’m not convinced that DA or BA are anywhere close to how Google ranks websites. They are third-party metrics, not confirmed ranking signals. And they should never be used as KPIs!

However, the pattern seen for this client when looking at the brand data in GSC reminded me of the two metrics, particularly the recent Moz study on the impact of HCU (and the subsequent core website updates).

The study suggests that HCU might focus more on balancing brand authority with domain authority rather than solely assessing the subjective helpfulness of content.

Websites with high DA but low BA (relative to their DA) tend to experience demotions.

While the metrics are not to be taken as ranking signals, I can see the logic here.

If your website has a ton of links, but no one is searching for your brand, it will look fishy.

This aligns with Google’s longstanding goal to prevent “over-optimized” or “over-SEOed” sites from ranking well if they lack genuine user interest or navigational demand.

Google Search Console already pointed toward an issue with brand perception.

I wanted to see if the DA/BA analysis pointed the same way.

The DA for this client’s website was high, while the BA was remarkably low. This made the website’s DA/BA ratio very high, approximately 1.93.

The site had a high DA due to SEO efforts but a low BA (indicating limited genuine brand interest or demand).

This imbalance made the site appear “over-optimized” to Google with little brand demand or user loyalty.

Dig deeper: 13 questions to diagnose and resolve declining organic traffic

User engagement

The next step was to dig into user engagement.

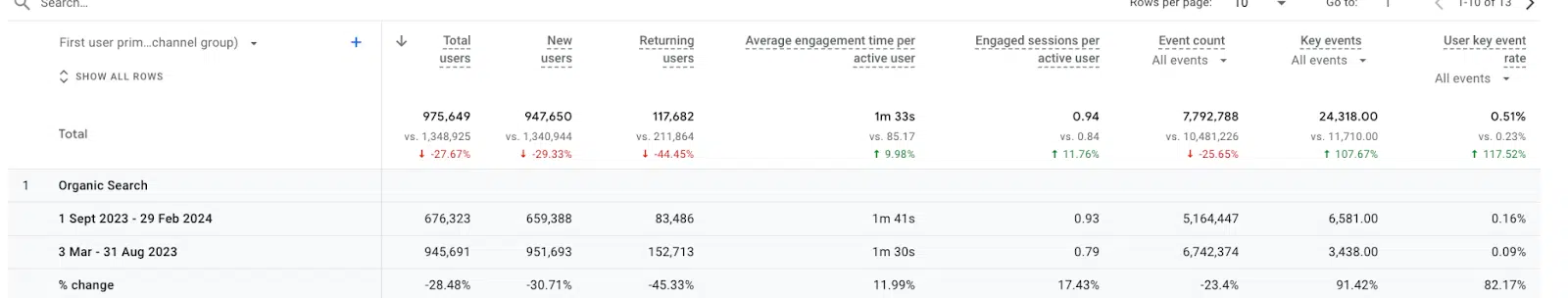

We first compared organic users in GA4 – six months before and after the August 2023 update.

Traffic was down for both new and returning users, but many of the engagement indicators were up, except for event counts.

Was it simply that fewer visitors were coming to the site, but those who reached it found the content more valuable than before?

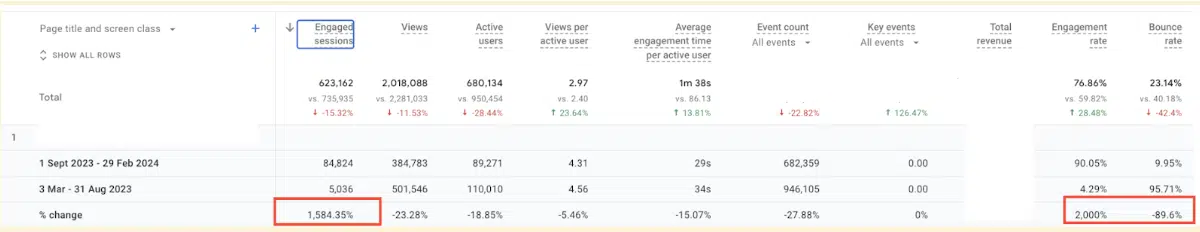

To dig deeper, we looked at an individual page that lost rankings.

Their strongest performing page pre-update revealed:

- Engaged sessions were up 1,000%.

- The engagement rate increased 2,000%.

- Bounce rate dropped by 89%.

(Note: While these metrics can be useful in cases like this, they shouldn’t be fully trusted. Bounce rate, in particular, is tricky. A high bounce rate doesn’t necessarily mean a page isn’t useful, and it can be easily manipulated. Tracking meaningful engagement through custom events and audience data is a better approach.)

At first glance, these might seem like positive signs – but in reality, these numbers were likely artificially inflated.
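A simple way to surface this kind of mismatch is to compute the relative change for each engagement metric and flag any that jump sharply while traffic falls. The sketch below is illustrative only: `flag_suspicious` and the 300% `jump_threshold` are hypothetical, not a known benchmark, and a flag is a prompt for a closer look rather than proof of manipulation.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, as a percentage."""
    if before == 0:
        raise ValueError("before must be non-zero to compute a relative change")
    return (after - before) / before * 100


def flag_suspicious(metrics: dict[str, tuple[float, float]],
                    traffic_change: float,
                    jump_threshold: float = 300.0) -> list[str]:
    """Return metric names that jumped sharply while traffic fell.

    metrics maps a metric name to its (before, after) values.
    traffic_change is the percentage change in organic users.
    jump_threshold is a hypothetical cutoff in percent.
    """
    if traffic_change >= 0:
        return []
    return [name for name, (before, after) in metrics.items()
            if percent_change(before, after) >= jump_threshold]
```

For instance, engaged sessions going from 100 to 1,100 is a 1,000% increase, which would be flagged if organic users fell over the same period.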

Strategies such as click manipulation are not new. However, with the findings from Google’s API leak and exploit data, I fear they can become more tempting.

Both the leak and the exploit have caused a misunderstanding of what engagement signals mean as part of how Google ranks websites.

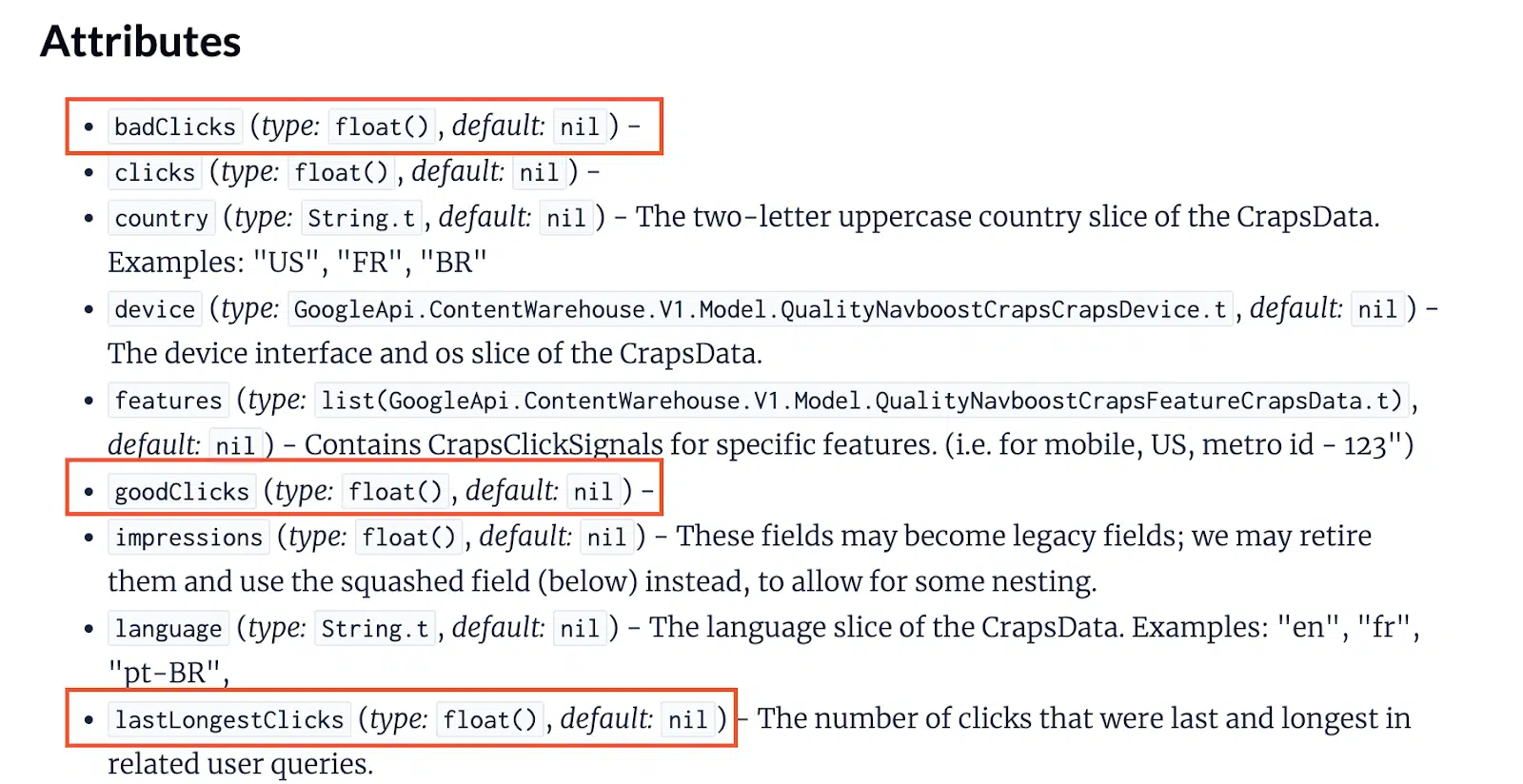

From my understanding, how NavBoost tracks clicks is much more complex. It’s not just about the number of clicks; it’s about their quality.

This is why attributes from the leak include elements such as badClicks, goodClicks, and lastLongestClicks.

And, as Candour’s Mark Williams-Cook said at the recent Search Norwich:

- “If your site has a meteoric rise, but no one has heard of you – it looks fishy.”

Inflating engagement signals rather than focusing on real engagement will not yield long-term results.

Using this strategy after the drop just confirms why the rankings should have dropped in the first place.

Unsurprisingly, the rankings kept declining for this client despite the short-term spikes in engagement.

(Note: We could have also looked at other factors, such as spikes in user locations and IP analysis. However, the goal wasn’t to catch the client using risky strategies or place blame – it was to help them avoid those mistakes in the future.)

Misusing the Google Indexing API

Even before starting this project, I spotted some red flags.

One major issue was the misuse of the Google Indexing API in Search Console to speed up indexing.

This is only intended for job posting sites and websites hosting live-streamed events.

We also know that the submissions undergo rigorous spam detection.

While Google has said misuse won’t directly hurt rankings, it was another signal that the site was engaging in high-risk tactics.

The problem with scaling fast using high-risk strategies

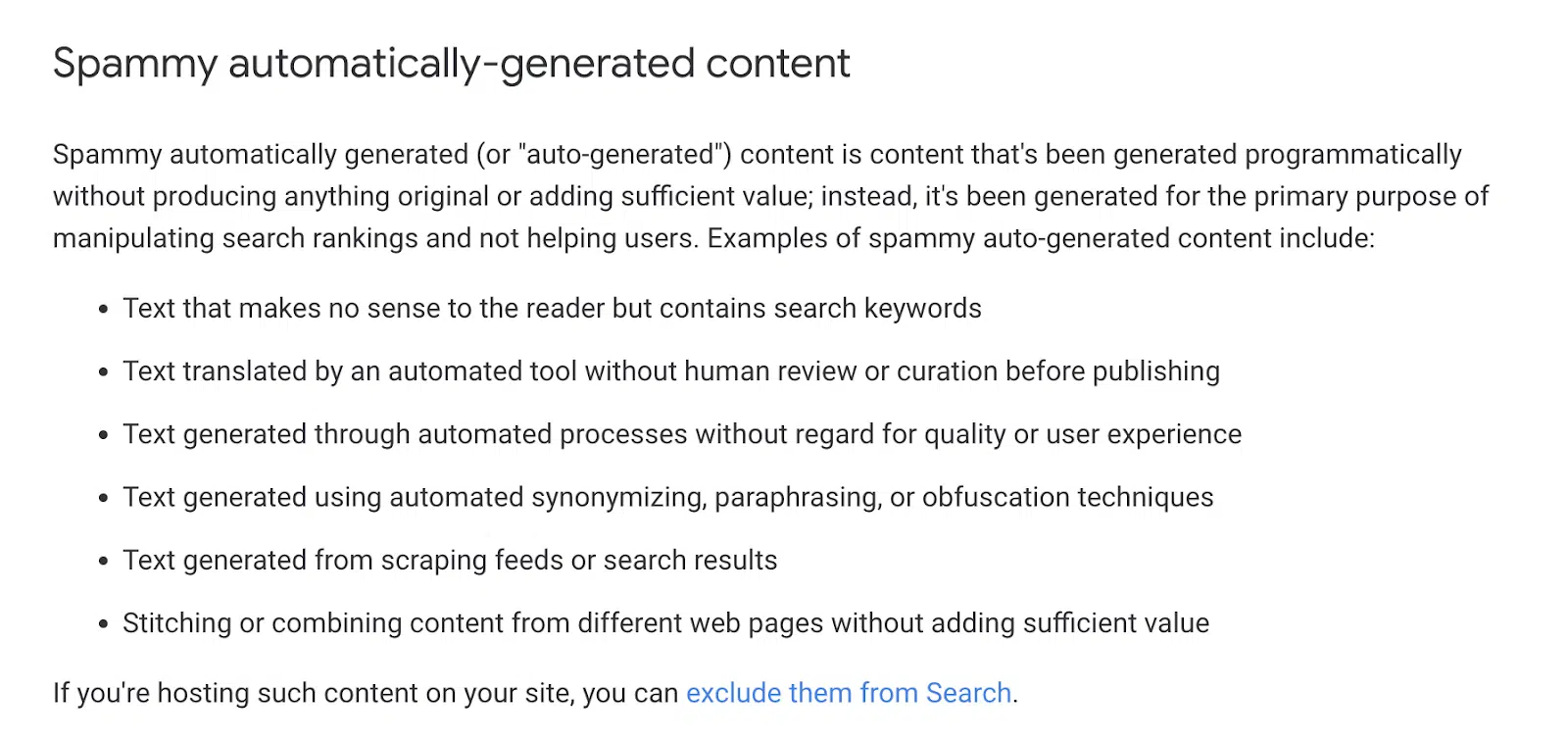

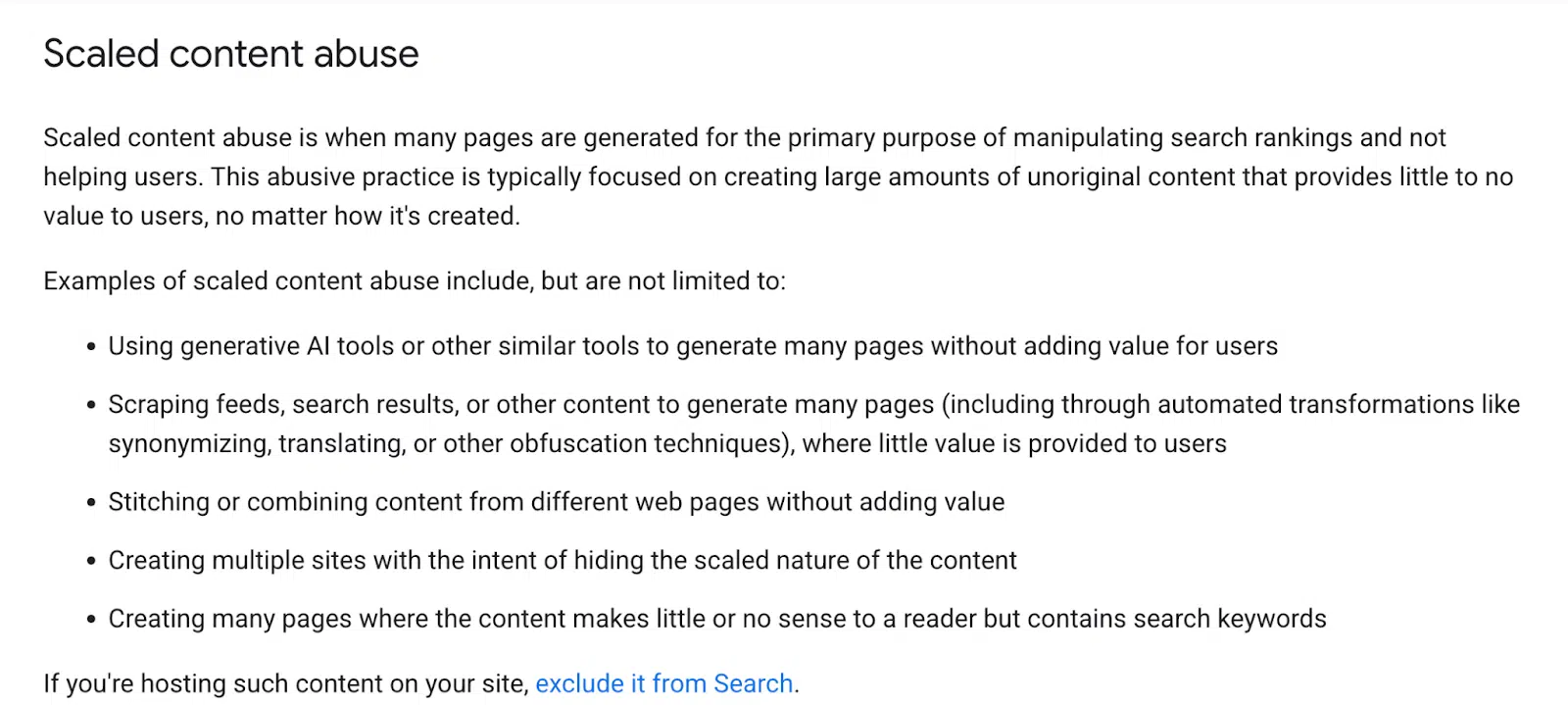

In 2024, Google updated its Spam policy to reflect a broader understanding of content spam.

What used to be a section on “spammy automatically generated content” is now bundled into the section on “scaled content abuse.”

Google has shifted its focus from how content is generated to why and for whom it is created.

The scale of production and the intent behind it now play a key role in determining spam.

Mass-producing low-value content – whether AI-generated, automated, or manually created – is, and should be, considered spam.

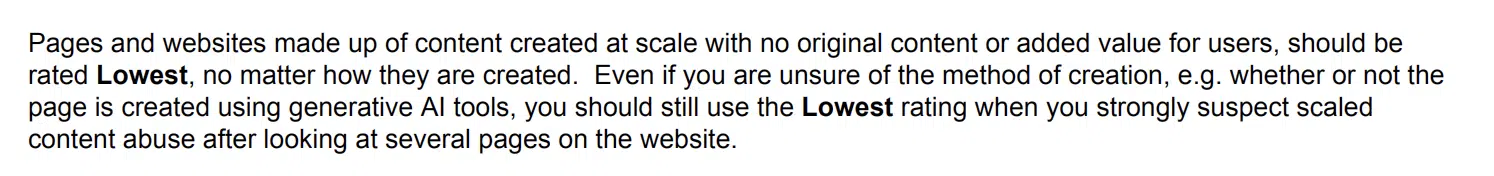

Unsurprisingly, in January 2025, Google updated the Search Quality Rater Guidelines to align with this approach.

Among other changes, the Page Quality Lowest and Low sections were revised.

Notably, a new section on Scaled Content Abuse was introduced, reflecting elements of the Spam Policy and reinforcing Google’s stance on mass-produced, low-value content.

This client didn’t receive a manual action, but it was clear that Google had flagged the site as lower quality.

Scaling fast in this way is unsustainable, and it also opens up wider challenges and responsibilities for us as web users.

I recently had someone in the industry share a brilliant metaphor for the danger of the proliferation of AI-generated content:

For the tons of people pumping out AI content with minimal oversight – they’re just peeing in the community pool.

Ingesting vast amounts of text only to regurgitate similar material is a modern equivalent of old-school article-spinning (a popular spam tactic of the early 2000s).

It clutters the digital space with low-value content and dilutes trust in online information.

Personally, that’s not where I’d want the web to go.

How to do it differently

The rise of AI has accelerated programmatic SEO and the push to scale quickly, but just because it’s easy doesn’t mean it’s the right approach.

If I had been involved from the beginning, I know I would have taken a different approach. But would that mean the strategy no longer qualifies as programmatic? Likely.

Can AI-powered programmatic SEO truly scale effectively? Honestly, I’m not sure.

What I do know is that there are valuable lessons to take away.

Here are a few insights from this project and the advice I would have given if this client had worked with us from the start.

Start with the goal in mind

We’ve all heard it before, yet some still miss the point: No matter how you scale, the goal should always be to serve users, not search engines.

SEO should be a byproduct of great content – not the goal.

In this case, the client’s objective was unclear, but the data suggested they were mass-producing pages just to rank.

That’s not a strategy I would have recommended.

Focus on creating helpful, unique content (even if templated)

The issue wasn’t the use of templates, but the lack of meaningful differentiation and information gain.

If you’re scaling through programmatic SEO, make sure those pages truly serve users. Here are a few ways to do that:

- Ensure each programmatic page offers a unique user benefit, such as expert commentary or real stories.

- Use dynamic content blocks instead of repeating templates.

- Incorporate data-driven insights and user-generated content (UGC).

Personally, I love using UGC as a way to scale quickly without sacrificing quality, triggering spam signals, or polluting the digital ecosystem.

Tory Gray shares some great examples of this approach in our SEOs Getting Coffee podcast.

Avoid over-reliance on AI

AI has incredible potential when used responsibly.

Over-reliance is a risk to sustainable business growth and impacts the broader web.

In this case, the client’s data strongly suggested their content was AI-generated and automatically created. It lacked depth and differentiation.

They should have blended AI with human expertise, incorporating real insights, case studies, and industry knowledge.

Prioritize brand

Invest in brand-building before and alongside SEO because authority matters.

Long-term success comes from building brand recognition, not just chasing rankings.

This client’s brand had low recognition, and its branded search traffic showed no natural growth.

It was clear SEO had taken priority over brand development.

Strengthening brand authority also means diversifying acquisition channels and reinforcing user signals beyond Google rankings.

In a time when AI Overviews are absorbing traffic, this can be the difference between thriving and shutting down.

Avoid clear violations of Google’s guidelines

Some Google guidelines are open to interpretation, but many are not. Don’t push your luck.

Ignoring them won’t work and will only contribute to tanking your domain.

In this case, rankings dropped – likely for good – because the client’s practices conflicted with Google’s policies.

They misused the Google Indexing API, sending spam signals to Google. Their engagement metrics showed unnatural spikes.

And those were just a few red flags. The party was over, and all that remained was a burned domain.

Dig deeper: How to analyze and fix traffic drops: A 7-step framework

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
