Using word vectors and applying them in SEO

Contributor JR Oakes takes look at technology from the natural language processing and machine-learning community to see if it's useful for SEO.

Today, the SEO world is abuzz with the term “relevancy.” Google has gone well past keywords and their frequency to looking at the meaning imparted by the words and how they relate to the query at hand.

In fact, for years, the common term used for working with text and language had been natural language processing (NLP). The new focus, though, is natural language understanding (NLU). In the following paragraphs, we want to introduce you to a machine-learning product that has been very helpful in quantifying and enhancing relevancy of content.

Earlier this year, we started training models based on a code base called Char-rnn from Andrej Karpathy. The really interesting thing about this code base was that you could (after training) end up with a model that would generate content based on what it learned from the training documents. It wouldn’t just repeat the content, but it would generate new readable (although quite nonsensical) content.

It operates by using a neural network to learn which character to guess next. If you have the time, Karpathy’s write-up is a fascinating read that will help you understand a bit more about how this works.

In testing out various code bases, we came across one that, instead of predicting characters, attempted to predict which words would come next. The most interesting part of this was that it used something called GloVe embeddings that were basically words turned into numbers in such a way that the plot of the number coordinates imparted semantic relationships between the words. I know, that was a mouthful.

What is GloVe?

GloVe stands for “global vectors for word representation.” They are built from very large content corpuses and look at co-occurrence statistics of words to define relationships between those words. From their site:

[blockquote] GloVe is an unsupervised learning algorithm for obtaining vector representations for words. Training is performed on aggregated global word-word co-occurrence statistics from a corpus, and the resulting representations showcase interesting linear substructures of the word vector space.[/blockquote]

Here is an example of the term “SEO” converted into a word vector:

To work with GloVe embeddings, you need familiarity with Python and Word2Vec, as well as a server of sufficient size to handle in-memory storage of 6+ billion words. You have been warned.

Why are GloVe vectors important?

GloVe vectors are important because they can help us understand and measure relevancy. Using Word2Vec, you can do things like measure the similarity between words or documents, find most similar words to a word or phrase, add and subtract words from each other to find interesting results, and also visualize the relationship between words in a document.

Similarity

If you have an understanding of Python, Gensim is an excellent tool for running similarity analysis on words and documents. We updated a converter on Github to make it easier to convert GloVe vectors to a format that Gensim can use here.

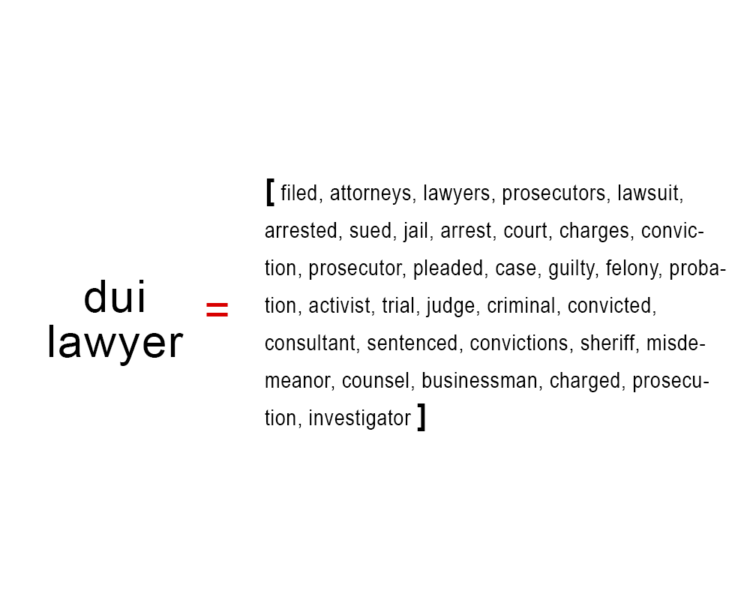

To show the power of GloVe vectors to produce semantically similar words to a seed word or phrase, take a look at the following image. This was the result of finding the most similar words to “dui lawyer” using the Gensim library and GloVe vectors (geographical terms were removed).

Note how these are not word variations or synonyms, but rather concepts that you would expect to encounter when dealing with an attorney in this practice area.

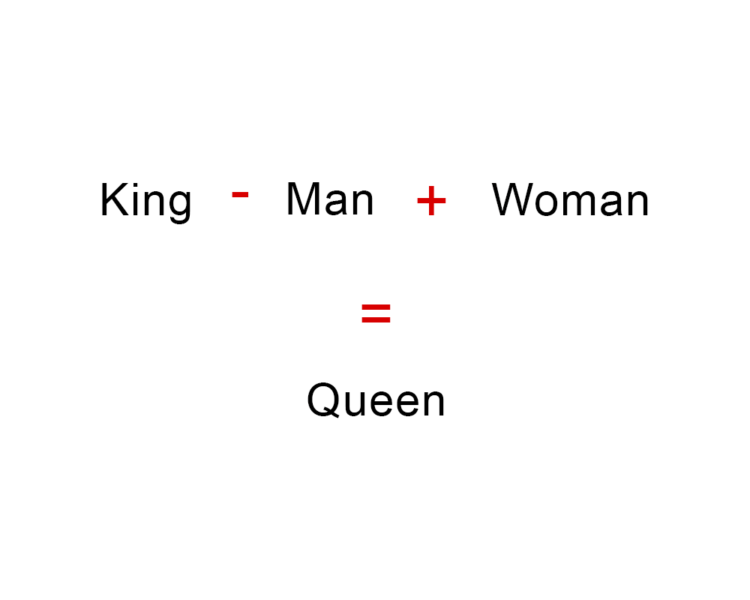

Adding and subtracting vectors

One of the most frequently used examples of the power of these vectors is shown below. Since the words are converted into numerical vectors, and there are semantic relationships in the position of the vectors, this means that you can use simple arithmetic on the vectors to find additional meaning. In this example, the words “King,” “Man” and “Woman” are turned into GloVe vectors prior to addition and subtraction, and “Queen” is very close to the resulting vector.

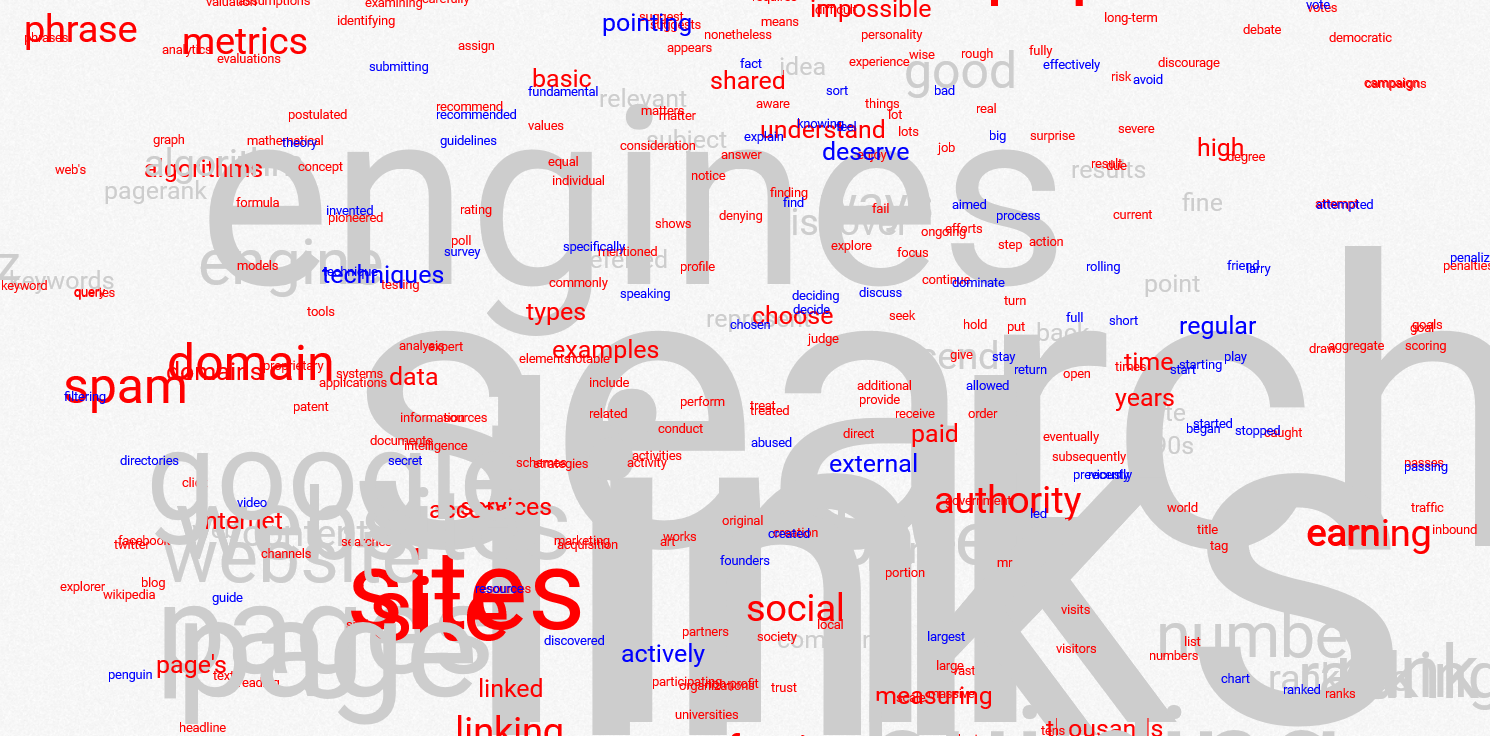

Visualization

Once we are able to turn a document of text into its resulting vectors, we are able to plot those words using a very cool library called t-SNE along with d3.js. We have put together a simple demo that will allow you to enter a keyword phrase and two ranking URLs to view the difference in vector space using GloVe vectors.

It’s important to point out a few things to look for when using the demo.

Look at the relationships between close words

Notice how groupings of words are not simply close variations or synonyms, but rather unique words that just belong together.

Use pages with a good amount of content

The tool works by extracting the content on the page, so if there is not much to work with, the result will not be great. Be careful using home pages, pages that are listings of excerpts or mostly image-based content.

Small words don’t mean small value

The size of the resulting words is based on the frequency with which the word was encountered, not the importance of the word. If you enter a comparison URL that is ranking higher than you for the same term, take note of the color differences to see topics or topic areas that you may be missing on your page.

Wrapping it up

Obviously, from an SEO perspective, it is beneficial to create content that covers a topic as thoroughly as possible and that ensures a good experience for your visitor. While we don’t expect all SEOs to run out and learn Python, we think knowing that there is amazing power to be leveraged to that end is an important point to relay. GloVe vectors are one of the many tools that can be leveraged to give you an edge on the competition.

Finally, for those who are fans of latent dirichlet allocation (LDA), Chris Moody released a project this year called LDA2Vec that uses LDA’s topic modeling, along with word vectors, to create an interesting way to assign and understand the various topics within a corpus of text.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.

Related stories

New on Search Engine Land