Google Search Quality Raters Instructions Gain New “Page Quality” Guidelines


In the wake of Google’s Panda algorithm update, its cadre of human search quality raters has a new task: giving Google specific quality ratings for individual landing pages.


The new “Page Quality Rating Guidelines” section adds a whopping 32 pages to the handbook that Google provides to those human raters (via contractors like Lionbridge and Leapforce). An existing section on “URL Rating Tasks with User Locations” has been expanded from 12 to 16 pages, so that what was a 125-page document in early 2011 is now 161 pages long.

Version 3.27 of Google’s guidelines for search quality raters — dated June 22, 2012 — was recently leaked online. It’s actually one part of a larger leak that seems to have happened via private forums, and includes not only the rater guidelines, but also additional information and screenshots from some of the ratings tasks that the group performs. More on that later. Below, a look at the new section of the rater’s handbook, which was written about yesterday by Razvan Gavrilas on CognitiveSEO.com.

Google’s Page Quality Rating Guidelines

Here’s how Google explains the concept of Page Quality to its raters:

You have probably noticed that webpages vary in quality. There are high quality pages: pages that are well written, trustworthy, organized, entertaining, enjoyable, beautiful, compelling, etc. You have probably also found pages that seem poorly written, unreliable, poorly organized, unhelpful, shallow, or even deceptive or malicious. We would like to capture these observations in Page Quality rating.

Raters are asked to give each landing page (or web document, such as a PDF) an overall grade of Highest, High, Medium, Low or Lowest. Individual aspects of the page/document, like “main content” and “layout,” are also graded, as shown on this screenshot from the guidelines:

Raters are told to ignore the location of the page when rating its quality and to avoid thinking about how helpful the page/document might be for a search query. “Page Quality rating is query-independent, meaning that the rating you assign does not depend on a query,” Google says. That’s because, the guidelines later say, queries help determine the overall utility of a page/document, but the purpose of a page helps determine its quality.

Google: Low or Lowest Quality Main Content (Pandas!)

There’s a humorous example in the new material that you have to think Google used on purpose. In teaching raters how to identify “Low or Lowest Quality Main Content” on a page, one of Google’s bullet items is:

Using a lot of words to communicate only basic ideas or facts (“Pandas eat bamboo. Pandas eat a lot of bamboo. It’s the best food for a Panda bear.”)

If that isn’t one of the hallmarks of a page that you’d find on a content farm, what is, right? Content farms were a primary target of the Panda algorithm change — an update that aimed to remove “thin” or low quality pages from Google’s index. Now, this new 32-page section of the Google search quality raters guidelines shows how Google grades page quality. It might be the closest thing available to an official, post-Panda quality content guide.

Google: High or Highest Quality Main Content

There are no panda (the animal, not the algo update) references in Google’s section describing “High or Highest Quality Main Content.” The four bullet items in this section offer examples related to medical content (“written by people or organizations with medical accreditation”), hobbies (“highest quality content is produced by those with a lot of knowledge and experience who then spend time and effort creating content to share with others who have similar interests”), videos and even social networking pages.

On the last point, Google says a social networking profile can be high/highest quality if it’s “frequently updated with lots of posts, social connections, comments by friends, links to cool stuff, etc.” That’s an important thing to consider in light of the mess earlier this year over Google’s Search Plus Your World feature, specifically the accusations that Google was favoring even unused Google+ profiles over active Facebook, Twitter and other social profiles in its search results. (Google stopped doing that to some degree when it put Knowledge Graph results where Google+ profiles used to appear.)

Also keep in mind that Google announced, in its March search quality update, that it was doing a better job of indexing social profile pages. Quality, as described in this raters’ document, would clearly play a role in that.

Website Reputation as a Page Quality Signal

In addition to the Panda reference above, there’s another well-known event that appears in the new section on page quality. On the screenshot above, there’s a question near the bottom where human raters are asked to indicate the reputation of the website that’s associated with the page/document that they’re reviewing.

Google goes into great detail to teach raters how to discover a website’s reputation, and one of the suggestions is to do searches like [homepage reviews] and [homepage.com complaints].

The example site that Google uses? DecorMyEyes.com.

That’s the site that made headlines in late 2010 when its owner, Vitaly Borker, bragged to the New York Times that he went out of his way to harass customers because he believed that their bad reviews posted online were improving his Google search results. (Borker was just sentenced yesterday to four years in prison and ordered to pay almost $100,000 in fines and restitution.)
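For readers who want to try the reputation searches described above, here is a minimal Python sketch that builds the two query patterns, [homepage reviews] and [homepage.com complaints], from a site address. The function name `reputation_queries` and the way the domain is trimmed are my own assumptions for illustration; the guidelines only describe the searches themselves, not any tooling.

```python
def reputation_queries(homepage: str) -> list[str]:
    """Build the two reputation searches mentioned above for one site.

    Hypothetical helper: the name and the normalization rules are
    assumptions made for this sketch, not taken from Google's guidelines.
    """
    domain = homepage.strip().lower()

    # Drop the URL scheme, if present.
    for prefix in ("https://", "http://"):
        if domain.startswith(prefix):
            domain = domain[len(prefix):]

    # Keep only the host part and drop a leading "www.".
    domain = domain.split("/", 1)[0]
    if domain.startswith("www."):
        domain = domain[4:]

    # Assumption: the site name is everything before the last dot.
    # This is too simple for hosts such as example.co.uk.
    name = domain.rsplit(".", 1)[0]

    return [f"{name} reviews", f"{domain} complaints"]


if __name__ == "__main__":
    for query in reputation_queries("http://www.DecorMyEyes.com/"):
        print(f"[{query}]")
```

Pasting the printed queries into a search engine approximates what a rater would do by hand; judging what the results say about the site is still a human call.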

What Else Has Leaked?

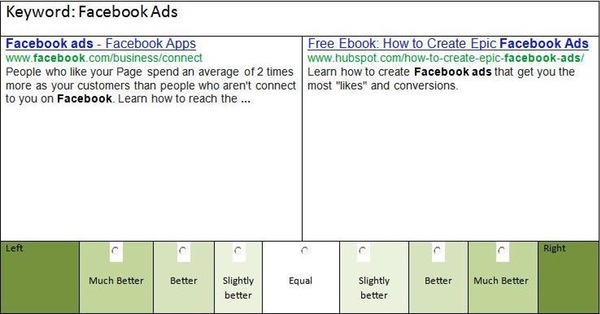

As I said above, the 161-page raters’ guidelines document isn’t the only thing that has leaked recently. Additional documents and screenshots related to the human rater program reportedly began spreading last month via private forums. Gordon Campbell, a UK-based online marketer, recently contacted us with a link to his blog post showing screenshots of some of the side-by-side tasks that raters perform: in this case, a “basic” and an “advanced” side-by-side task. The basic task screenshot is first, followed by the advanced task.

Side-by-side tasks ask raters to compare either individual search results (basic) or groups of search results (advanced) for a given query. They’re also used to compare Google’s current search results with “new” results that may be used after an algorithm change. I recently contacted the search quality rater that I interviewed earlier this year with a link to Gordon Campbell’s post and screenshots, and the rater confirmed that those are legitimate screenshots of a side-by-side task.

Depending on how widely the other material has leaked, we may be seeing additional blog posts around the web related to Google’s human search quality raters, the new guidelines document and the rating tasks they do.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
