Have Keywords Stopped Being A Proxy To The Customer?

It’s said that keywords are a proxy for a customer, right? So let’s talk about the customer. Schema this, canonical that, and black and white zoo animals… if you don’t have a search expert on payroll, you’re already behind the Joneses.

I get emails like the one below all the time, this one being from the talented owner of Castleview 3D Architectural Renderings:

“I need your help. I really don’t get the whole keywords/SEO/web marketing thing, but I know enough to know that I shouldn’t and don’t want to tackle it myself. I’m a small business (small = just me) and maybe I can’t afford you. But I’d like to find out. I get solicited by 2 or 3 SEO firms a week, but something about them and their approach really puts me off.”

Therein lies the problem. There’s an old joke that “99% of lawyers give the other 1% a bad name.” I think that’s just as true of SEOs: only about 1% of SEOs out there actually know what they are doing and do it well. Of those, about one-third are black hat and about two-thirds are white hat (that’s not scientific, just an educated guess).

Now consider the average starting fee for SEO services. According to SEOMoz’s 2011 survey, the average monthly retainer for an SEO agency falls between $2,500 and $5,000.

So what is a small business like the one above to do? The popular answer would be to hire a single SEO, perhaps one of the many consultants out there charging between $100 and $150 per hour.

But for many small businesses, even that is too much. How can they compete with the huge companies that can spend $5,000 or more a month on SEO services? And here’s the kicker…

Google Is Making It Worse

Google has added so many elements to its algorithm that depend on webmasters knowing detailed SEO (not just the basics) that it is making it harder and harder for SMBs to compete.

Here is just a small list:

- Favoritism in logged-in listings given to sites that have Google+ profiles

- Pay to play Google Shopping

- Enhanced listings with schema.org tags

- Duplicate content reduction and consolidation with canonical tags

- Authorship tagging

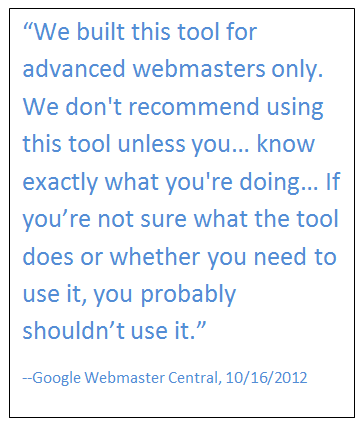

- Link disavow tool (which even Google says not to use unless you know advanced SEO)

- Data sharing and control with Google Webmaster Tools*

*No algorithmic impact, but it lets you influence things like sitelinks, a feature the majority of SMBs don’t even know exists.

When & Where Does It Stop?

I’ve asked at plenty of conferences whether this is the new normal. Are the days of being able to just put up a website with great content and get it ranked over?

The answer I always hear is that Google just needs help from us while it catches up to the technology available. I say that’s a cop-out. I don’t see Google doing anything to catch up to the technology. I see them continuing to target people who don’t know any better – SMBs who have unwittingly hired one of those “2 or 3 SEO firms that solicit [them] each week.”

Sure, Panda and Penguin target spam, and that’s good for all of us “white hats,” but in most cases, the people who fall victim to that spam are the SMBs. The big brands generally have enough layers between the SEO and the decision maker to fall back on plausible deniability. I’d like to see Google focus more on improving its ability to understand all types of content rather than continuing this witch hunt.

And in the meantime? The number-one result in my area for “architectural rendering company” is a site with keyword-stuffed text in its description tag and at the bottom of its home page. And Bing isn’t much better.

Bing’s #1 result is a different firm (located in Toronto, while I’m in the US), with a keyword-stuffed description tag and spammy anchor text links at the bottom of its home page. So clearly, the improvements to reduce spam aren’t working.

Side note: someone should tell the “SEOs” these sites hired that the meta description tag doesn’t affect ranking.

So my question to you, dear reader, is: have keywords stopped being a proxy for the customer?

In other words, if you create great content, can you get it ranked? Or will we allow authorship, canonicals, schema, and manipulation of keywords to become the new normal?

One more thought before you share your comments, and I hope you will. The following statement appears in Google’s current quality guidelines, and has been there since they were first published:

“Make pages [primarily] for users, not for search engines.” The word “primarily” was added in June 2008, and Matt Cutts (head of Google’s webspam team) had this to say about it: “the spirit of that guideline is that users should be the primary consideration. But it is fine to do some things that don’t affect users but do help search engines.”

So it’s OK to do many things to help search engines understand your pages. But where do we (as site owners) draw the line? At what point are we designing pages more for search engines than for users?

Please share your thoughts in the comments below.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
