UK Prime Minister Attacks Google, Bing & Yahoo On Child Porn, Demands Better Efforts By October

UK prime minister David Cameron unveiled a wide range of efforts today to protect children against pornography on the Internet. A significant part of his speech attacked the search engines of Google, Bing and Yahoo for not doing enough and demanded changes by October.

Below is Cameron’s speech, which I’ve annotated with observations on how difficult the things he demands might or might not be to implement.

Search Engines Already “Deter,” But How Can’t Be Said

Cameron starts out somewhat softly, saying that the major search engines are already involved in a “major campaign” to “deter” people searching for child porn, but that he can’t give more details about what’s being done:

The internet service providers and the search engine companies have a vital role to play and we have already reached a number of important agreements with them.

A new UK-US taskforce is being formed to lead a global alliance with the big players in the industry to stamp out these vile images.

I have asked Joanna Shields, CEO of Tech City and our Business Ambassador for Digital Industries, who is here today, to head up engagement with industry for this task force and she will work with both the UK and US governments and law enforcement agencies to maximise our international efforts.

Here in Britain, Google, Microsoft and Yahoo are already actively engaged on a major campaign to deter people who are searching for child abuse images.

I cannot go into detail about this campaign, because that would undermine its effectiveness but I can tell you it is robust, it is hard-hitting, and it is a serious deterrent to people looking for these images.

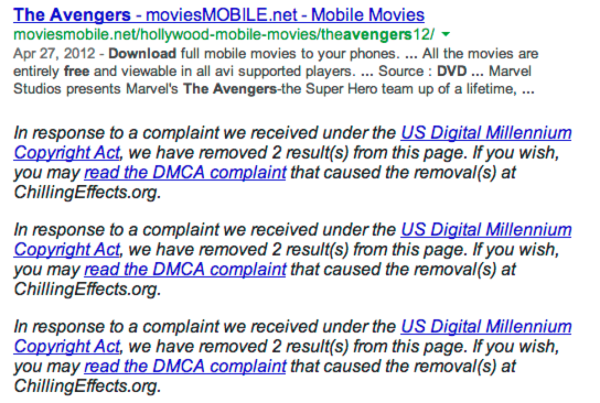

One immediate question this raises is how that secrecy squares with Google’s long-standing practice of telling searchers when material has been censored for any reason. For example, if results are removed for copyright reasons in the US, a notice appears to searchers, as with this one for an “avengers dvd download free” search:

Google has used a similar mechanism to flag when it has had to censor Nazi-related sites, as required under German law for Google Germany, and to flag when it was censoring results to meet Chinese demands.

Given this, either Cameron is unaware that Google itself is likely revealing when it removes material for certain searches in response to this campaign or Google has departed from its usual practices of trying to be transparent about censorship.

Search Engines Need To Proactively Block

Cameron goes on to chastise the search engines for only reacting when they are notified about child porn images. They’re not “doing enough to take responsibility” by actively spotting illegal images and removing those:

Where images are reported they are immediately added to a list and blocked by search engines and ISPs, so that people cannot access those sites.

These search engines also act to block illegal images and the URLs, or pathways that lead to these images from search results, once they have been alerted to their existence.

But here, to me, is the problem.

The job of actually identifying these images falls to a small body called the Internet Watch Foundation.

This is a world-leading organisation, but it relies almost entirely on members of the public reporting things they have seen online.

So the search engines themselves have a purely reactive position.

When they’re prompted to take something down, they act.

Otherwise, they don’t.

And if an illegal image hasn’t been reported – it can still be returned in searches.

In other words, the search engines are not doing enough to take responsibility.

Indeed in this specific area they are effectively denying responsibility. And this situation has continued because of a technical argument.

Now, the search engines do have various image filter options to try and prevent anyone (adult or child) from getting pornographic images in response to searches. With Bing SafeSearch, this is on by default and, to some degree, proactive:

Interestingly, Google SafeSearch used to be on by default for images. But checking today, that’s not so. Also gone are options to have filtering just for images versus Web search results. These seem part of the changes Google made last December.

So, Google’s not as proactive on the protection front as it had been, it might seem — except that it also made other changes last year to purposely keep porn content out of results unless people were going out of their way to seek it out, using explicit terms.

Regardless, such filters are easily overridden, which is why Cameron wants things stopped from getting into the search results at all.

Search Engines Should Be Smart Enough To Spot The Bad

This leads Cameron to the same type of argument that Hollywood has often fired at search engines in general and Google in particular. If they’re so smart, why can’t they just stop illegal stuff from getting listed at all?

Cameron wants the big brains at the search engines to understand if they have a child porn image and prevent it from making it into the search engines at all:

It goes that the search engines shouldn’t be involved in finding out where these images are that they are just the ‘pipe’ that delivers the images and that holding them responsible would be a bit like holding the Post Office responsible for sending on illegal objects in anonymous packages.

But that analogy isn’t quite right.

Because the search engine doesn’t just deliver the material that people see it helps to identify it.

Companies like Google make their living out of trawling and categorising content on the web so that in a few key-strokes you can find what you’re looking for out of unimaginable amounts of information.

Then they sell advertising space to companies, based on your search patterns.

So to return to that analogy, it would be like the Post Office helping someone to identify and order the illegal material in the first place – and then sending it onto them in which case they absolutely would be held responsible for their actions.

So quite simply: we need the search engines to step up to the plate on this.

The difficulty is that this approach will produce false positives. There will absolutely be images that are not child porn that will be blocked, because understanding what images really are is a tough search challenge. It’s even harder when you get into judgment calls of “art” versus “porn.”

Don’t get me wrong. I’m not trying to argue for anything that grants a loophole for actual child porn. I’m just saying that a politician thinking there’s some magic wand that can be waved is a politician doing what politicians do best: making grand statements that aren’t always easily backed up.

Very likely, what will happen is that in the UK, the search engines — if forced to comply — will shift to very protective filtering, where false positives will be tolerated and perhaps restored after an appeals process. On the upside, perhaps that’s the price the UK will be willing to pay for further protection against child porn online.

Search Engines Should Warn Users Even If They Search

Cameron goes on to suggest that search engines should warn people off searching for child porn. In other words, even though he wants no child porn to exist in the search engines at all, he also wants anyone who even tries to find such material to be put off:

We need a situation where you cannot have people searching for child abuse images and being aided in doing so where if people do try and search for these things, they are not only blocked, but there are clear and simple signs warning them that what they are trying to do is illegal and where there is much more accountability on the part of the search engines to actually help find these sites and block them.

On all these things, let me tell you what we’ve already done and what we are going to do.

What we have already done is insist that clear, simple warning pages are designed and placed wherever child abuse sites have been identified and taken down so that if someone arrives at one of these sites, they are clearly warned that the page contained illegal images.

These splash pages are up on the internet from today, and this is a vital step forward.

But we need to go further.

These warning pages should also tell those who’ve landed on it that they face consequences, such as losing their job, their family, even access to their children if they continue.

And vitally, they should direct them to the charity campaign ‘Stop It Now’, which can help them change their behaviour anonymously and in complete confidence.

Search Engines Should Have A “Black List” Of Terms

I suppose that’s a sort of double-protection system. But then again, as he goes on, it opens more complications. Cameron wants search engines to suggest alternative searches to those who they guess might be seeking child porn, and to prevent any results at all from appearing for some searches:

On people searching for these images there are some searches where people should be given clear routes out of that search to legitimate sites on the web.

So here’s an example.

If someone is typing in ‘child’ and ‘sex’ there should come up a list of options:

‘Do you mean child sex education?’

‘Do you mean child gender?’

What should not be returned is a list of pathways into illegal images which have yet to be identified by CEOP or reported to the IWF.

Then there are some searches which are so abhorrent and where there can be no doubt whatsoever about the sick and malevolent intent of the searcher that there should be no search results returned at all.

Put simply – there needs to be a list of terms – a black list – which offer up no direct search returns.

At this point, it’s probably useful to explain that those seriously seeking child porn don’t, as Cameron suggests, type in “child sex.” I know this because about 10 years ago, when I lived in the UK, I talked with the aforementioned Internet Watch Foundation about some of the challenges it faces in combating child porn.

One of the chief issues, I learned, is that there’s an entire language of synonyms that may be used to reference certain things, so that those seeking illegal child porn aren’t using the type of words that might get what they want filtered or themselves caught.

So, making Cameron’s “black list” might not be as easy as he assumes. It potentially means, if language changes, that the list might grow to impact some searches that are not abhorrent at all, just common words that might get co-opted to get around the black list.
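To make that over-blocking risk concrete, here’s a minimal sketch in Python of how a term black list might behave. The function name `is_blocked`, the word-matching rule and the placeholder terms are all my own assumptions for illustration, not anything CEOP or the search engines have described:

```python
from typing import Set


def is_blocked(query: str, block_list: Set[str]) -> bool:
    """Return True if every word of any block-list entry appears in the query."""
    words = set(query.lower().split())
    return any(set(term.split()) <= words for term in block_list)


# Placeholder entry only; a real list would come from a body such as CEOP.
block_list = {"forbidden phrase"}

print(is_blocked("forbidden phrase pictures", block_list))  # True
print(is_blocked("holiday snaps", block_list))              # False

# Suppose an ordinary word is co-opted as a code word and added to the list.
# Innocent searches containing that word are now blocked too.
block_list.add("snaps")
print(is_blocked("holiday snaps", block_list))              # True
```

The last call is the problem in miniature: once a common word lands on the list, a perfectly ordinary search returns nothing.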

“Child Sex” On Google & Bing

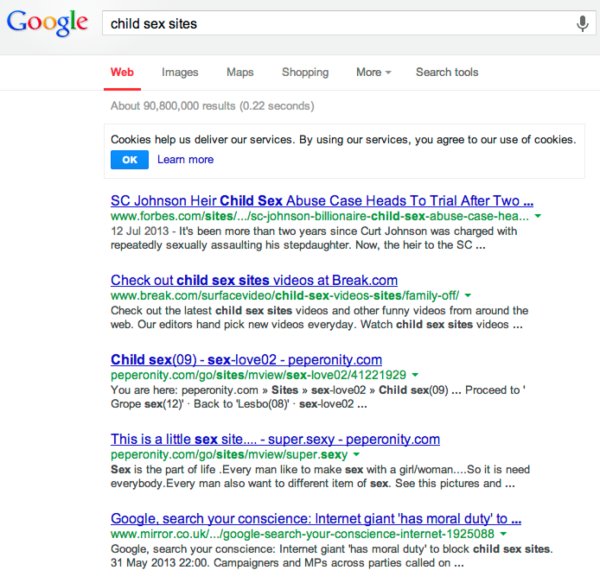

I think it’s also useful to see what Google and Bing are suggesting now, using his own example:

That’s from Google UK, for a search on “child sex.” The results themselves, with SafeSearch not enabled, are very clean. But at the bottom, note the related searches, where there’s a “child sex sites” link. Select that, and you get this:

Here, you can see examples of where I suspect many might agree with Cameron that Google could do more. Results 2, 3, and 4 all purport to offer child porn. Wow.

As it turns out, none of these actually seem to have child porn. They seem instead to be fodder sites that promise it in hopes of a click from the search engines, similar to how many sites purporting to offer illegal movies or TV shows don’t actually deliver those.

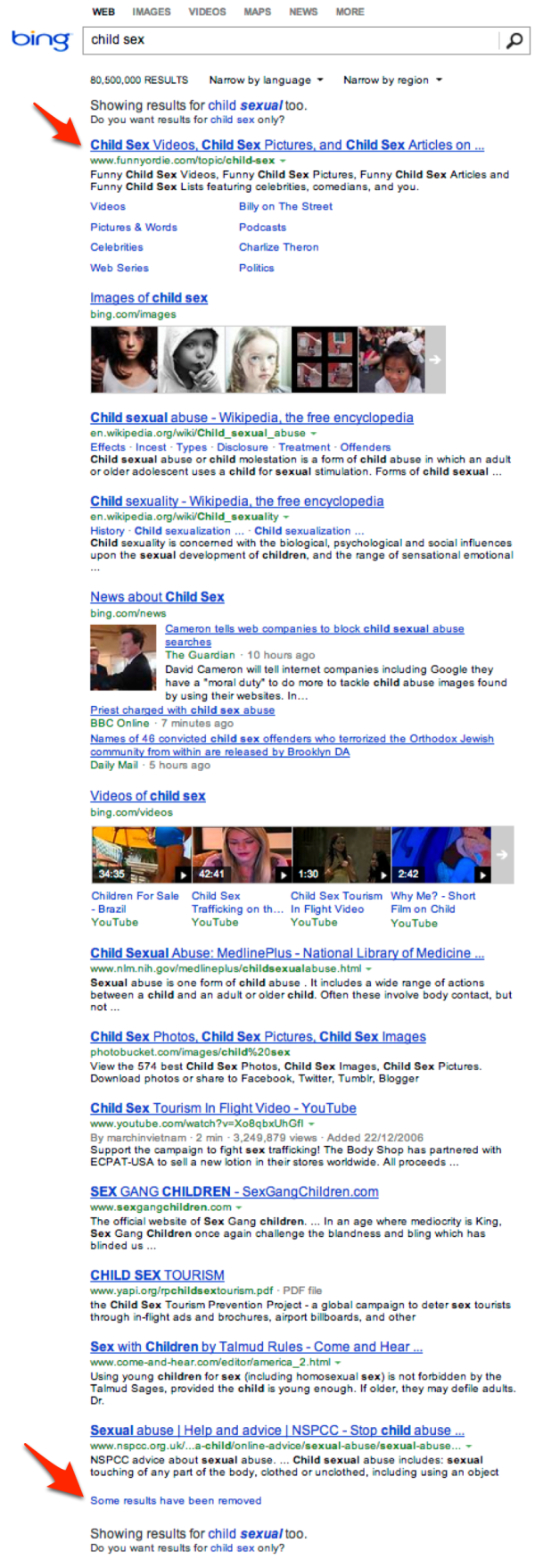

Still, it gives pause for thought about how these sites are ranking so well. Meanwhile, over at Bing:

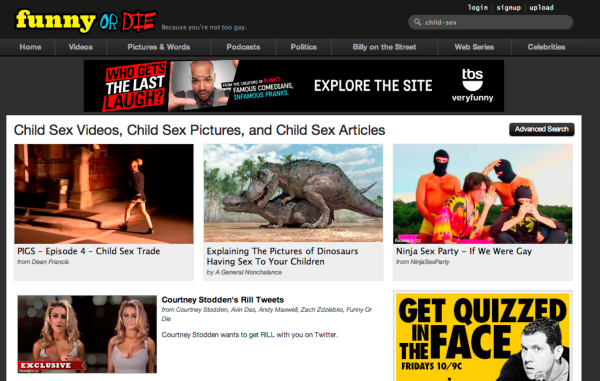

The first thing that struck me was that “Funny Or Die” has a page about child sex videos? Really? Yep:

Maybe that’s not so funny and should die. Of course, the page itself doesn’t appear to deliver any of that actual content, so I guess Funny Or Die founders Will Ferrell, Adam McKay and Chris Henchy can relax a bit. It might even be some automated process that created this page, in response to searches. But it’s not classy. And it’s likely to be on Cameron’s hit list.

Further down, note the “Some results have been removed” message. This is a disclosure similar to what I described Google doing: Bing says that it has removed some content for child abuse reasons and explains the process.

An October Deadline & Be Responsible

Cameron concludes with his wish that the search engines give him an update on how they can do more, by October, and a last chiding that they are “not separate” from society and so must play a “responsible role” in proactively ridding the Internet of child porn:

So I have a very clear message for Google, Bing, Yahoo and the rest.

You have a duty to act on this – and it is a moral duty.

I simply don’t accept the argument that some of these companies have used to say that these searches should be allowed because of freedom of speech.

On Friday I sat with the parents of Tia Sharp and April Jones.

They want to feel that everyone involved is doing everything they can to play their full part in helping to rid the internet of child abuse images.

So I have called for a progress report in Downing Street in October, with the search engines coming in to update me.

The question we have asked is clear:

If CEOP give you a black-list of internet search terms, will you commit to stop offering up any returns to these searches?

If in October we don’t like the answer we’re given to this question if the progress is slow or non-existent then I can tell you we are already looking at the legislative options we have to force action.

And there’s a further message I have for the search engines.

If there are technical obstacles to acting on this, don’t just stand by and say nothing can be done; use your great brains to help overcome them.

You’re the people who have worked out how to map almost every inch of the earth from space who have developed algorithms that make sense of vast quantities of information.

You’re the people who take pride in doing what they say can’t be done.

You hold hackathons for people to solve impossible internet conundrums.

Well – hold a hackathon for child safety.

Set your greatest brains to work on this.

You are not separate from our society, you are part of our society, and you must play a responsible role in it.

This is quite simply about obliterating this disgusting material from the net – and we will do whatever it takes.

Google: We Have Zero Tolerance & Committed To Dialog

Reaction from the search engines? I’m still waiting to hear back from Bing. As for Google, it gave me this statement:

We have a zero tolerance attitude to child sexual abuse imagery. We use our own systems and work with child safety experts to find it, remove and report it. We recently donated 5 million dollars to groups working to combat this problem and are committed to continuing the dialogue with the Government on these issues.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
