5 Ways To Crawl A Staging Server Before Important Site Changes Go Live (To Save SEO)

You can uncover many potential SEO problems with a thorough crawl of the staging environment, but what if it's not readily accessible? Columnist Glenn Gabe shares some tips.

There you are. A big redesign or CMS migration is looming, and you’re ready to unleash a crawl on the new site in a test environment. You fire up your favorite crawling tool and trigger the crawl… and it lasts all of three seconds.

Yes, there’s an obvious problem. The staging server is protected by some type of security measure that prevents you from crawling it freely. Sweat begins to form on your brow as you wonder how you will get the crawl completed.

At this point, you could choose to manually check all the pages, but you might end up in a padded room whispering something about header response codes taking over the world.

Or you could continue to click “crawl” and repeatedly crawl a login page, but that won’t help either. OR you could snap out of it and figure out a way to crawl the site in staging, which would enable you to analyze the crawl data and save SEO. Yes, that’s the ticket.

Some of you might be saying, “Hey, this is easy to get around!” Well, it isn’t always that easy. I’ve helped a number of clients whose staging setups were genuinely difficult to access and crawl, and in those situations you might need to use alternative methods.

How To Crawl A Staging Server

Below, I’ll cover five methods for crawling a staging server ranging from using basic authentication to VPN access to creating custom user agents. I’ll end with some key takeaways and tips. Let’s begin!

1. Basic Authentication

If the staging server is using basic authentication, then you’ll be happy to know that the top crawling tools support this method when setting up a crawl.

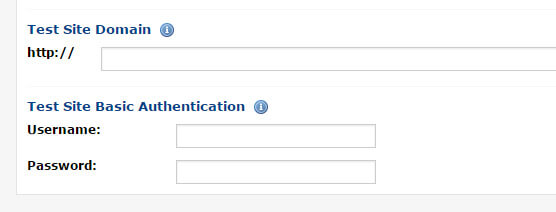

For example, my favorite crawling tools are DeepCrawl (where I’m on the customer advisory board) and Screaming Frog. Both tools let you enter login details so you can crawl away.

Handling Basic Authentication in DeepCrawl:

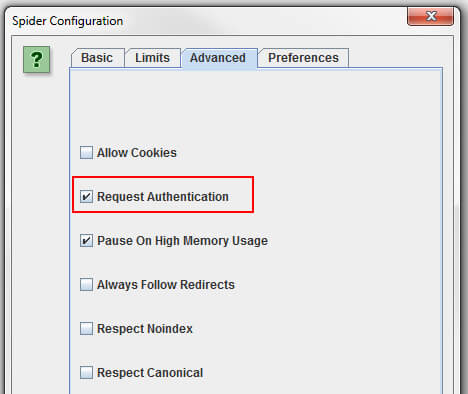

Selecting the “Request Authentication” setting in Screaming Frog:

2. VPN Access

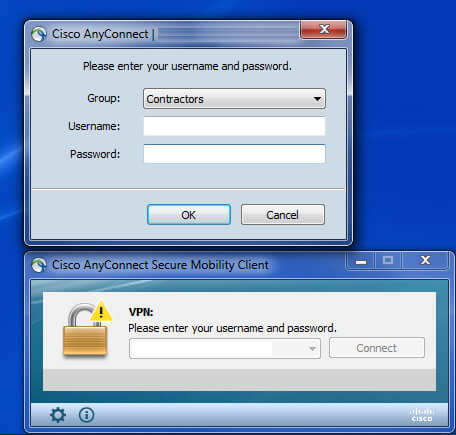

I’ve had some clients that keep their staging servers behind a firewall (on their company network and not publicly available). In situations like that, I’ve sometimes been given VPN access so I could crawl the server. Once connected via VPN, I could crawl away with any tool running locally (on the systems in my office).

The upside is that you can crawl staging with local tools. The downside is that you probably can’t use enterprise-level crawlers that run outside your network. And that could be important, especially for a large-scale website.
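When a VPN is the gate, a quick pre-flight check can save a wasted crawl: confirm that your machine can actually reach the staging host before starting the local crawler. This is a minimal sketch, assuming staging serves HTTPS on port 443. The function name and hostname are hypothetical.

```python
import socket


def staging_reachable(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # Open and immediately close a connection; we only care that it works.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS lookup failures, refused connections and timeouts
        # (socket.timeout is a subclass of OSError).
        return False
```

With the VPN disconnected, a call such as `staging_reachable("staging.internal.example.com")` would typically return `False`; once the tunnel is up and the host is reachable, it should return `True`. A successful TCP connection doesn’t guarantee the site will serve pages to your crawler, but a failed one tells you not to bother starting the crawl yet.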

Accessing a staging server via VPN:

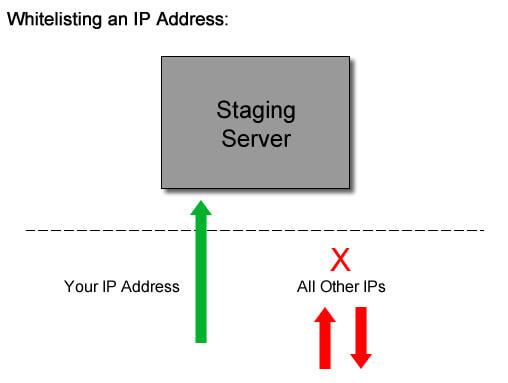

3. Whitelist An IP Address

I’ve also had some clients that used a staging platform that redirected all users to a common login page, which then redirected them back to the specific staging server they wanted to access. Unfortunately, many of the tools that support basic or digest authentication will not work here, as the redirect throws a wrench into the situation.

But you could request that the platform whitelist your IP address for the staging server you are trying to access. Your client would simply grant your specific IP address access to the staging server for a short period of time (for example, a day or a few days) while excluding all other IPs.

4. Create A Custom User Agent

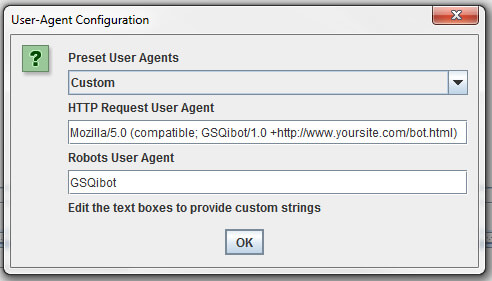

You’ve heard of Googlebot and Bingbot, but have you heard of GSQiBot? That’s one of the custom user agents I’ve set up for client crawls. Using the top crawling tools, you can create a custom user agent that you can pass along to your clients.

Then they can whitelist that specific user agent while blocking all other access. It’s similar to the IP address method, but it whitelists a user agent instead of an IP address.

Setting up a custom user agent in DeepCrawl:

Setting up a custom user agent in Screaming Frog:

5. IRL – Going Old-School

Yes, you read that correctly. In certain situations, I’ve had to go old-school and actually visit clients “in real life.” Whoa, the horror!

If staging is not accessible from the outside, and your client will not open up access for some reason, then you might have to go visit their office.

Once you do, you can crawl away from within their network. This obviously has some geographic constraints, but I’ve done it for clients located in the Northeast. (I’m in Princeton, NJ.)

Key Takeaways & Quick Tips

Now that I’ve covered five different ways to crawl a staging server, I’ll provide some key takeaways and tips based on my experience helping clients.

- Do not bypass the crawl. It’s too important to overlook. There are many problems you can uncover with a strong crawl of staging. And that means you can nip serious SEO problems in the bud. Emphasize the importance of a crawl to your client, their digital marketing team and dev team.

- Be flexible and work with your client’s dev team to gain access. Don’t demand a certain method. Understand their situation and work towards gaining access. The goal is to crawl staging to give the green light. It’s not to boost your ego.

- When you gain access, fire away. Perform both enterprise-level crawls and surgical crawls (if possible). Be prepared with what you need to do and which crawls you would like to perform. You might only have access for a day or two, so make the most of it. Again, I like to use DeepCrawl for enterprise crawls and Screaming Frog for surgical crawls.

- Double-check your crawl data before losing access to staging. Make sure you captured the data you need to complete the analysis. If for some reason the initial crawl data isn’t sufficient, refine your settings and crawl again. For example, exclude unimportant directories that are hogging the crawl, use different starting URLs, ensure the proper crawl restrictions are set up, make sure the right report settings are selected and so on.

- Make sure your client understands that there probably will be changes to implement based on the crawl analysis of staging, and that they should leave time for developers to make those changes. This is not a “crawl once and launch” type of process (although that can happen in a best-case scenario). It’s more of a “crawl, find problems, fix problems and crawl again” process. You don’t want to push SEO problems to production. Googlebot may not be as nice as GSQiBot.
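For the double-check step, a few lines of code can sanity-check an exported crawl before access to staging ends. This sketch assumes a CSV export with `Address` and `Status Code` columns. Those names are an assumption, so adjust them to match your tool’s export. It reports the status-code mix, the share of 401/403 responses (a hint that the crawler spent its time on a login page), and which top-level directories account for the most URLs, which helps spot sections hogging the crawl.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlsplit


def summarize_crawl(csv_text: str, url_col: str = "Address", status_col: str = "Status Code") -> dict:
    """Summarize a crawl export: status-code mix, blocked share and busiest directories."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    statuses = Counter(row[status_col].strip() for row in rows)
    # Count URLs by first path segment ("/" for the root) to spot directories hogging the crawl.
    dirs = Counter(
        "/" + urlsplit(row[url_col]).path.strip("/").split("/")[0] for row in rows
    )
    blocked = statuses["401"] + statuses["403"]
    total = len(rows)
    return {
        "total": total,
        "statuses": dict(statuses),
        # A high share of 401/403 responses usually means the crawl hit the login wall.
        "blocked_share": blocked / total if total else 0.0,
        "top_dirs": dirs.most_common(3),
    }
```

If `blocked_share` is high or one directory dominates `top_dirs`, that’s your cue to adjust the settings (credentials, exclusions, starting URLs) and crawl again while you still have access.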

Summary: There’s More Than One Way To Access Staging

As I explained earlier, it’s critically important to crawl staging before key changes are pushed to production. You could very well uncover technical SEO problems during the crawl that would cause serious issues if pushed live.

My recommendation is to gain access to staging at all costs. The good news is that there are several methods you can choose from, as I documented above. Work with your client, and with their dev team, to gain access. That’s how you win. Now crawl away.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
