Up Close @SMX Advanced: The Periodic Table Of SEO Elements

This SEO ranking factors session has become a much-anticipated tradition at SMX Advanced, as the substantial early morning crowd in attendance this year testified.

Each year, a panel of SEO data experts presents its up-to-date findings, test results, and best guesses as to what factors are primary in Google’s ranking algorithm.

There are always surprises, interesting revelations, and a touch of controversy in this session, and this year did not fail to provide those elements.

Panelists for the 2014 session were:

- Matthew Brown, Moz (@MatthewJBrown)

- Marianne Sweeny, Portent (@msweeney)

- Marcus Tober, Searchmetrics Inc. (@marcustober)

Danny Sullivan Introducing the SEO Ranking Factors panel

Panel Moderator: Danny Sullivan (@dannysullivan)

Q&A Coordinator: Bill Hunt, Back Azimuth (@billhunt)

Twitter hashtags: #smx #11a

Marcus Tober: Annual SearchMetrics Ranking Correlation Study

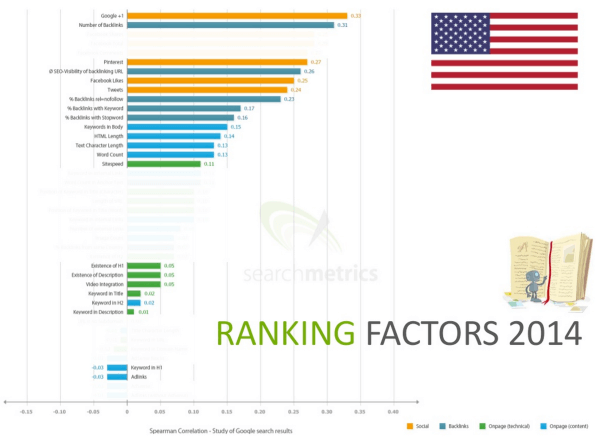

Each year, SearchMetrics conducts an extensive study of search ranking factors and reports on the elements with the highest correlation to higher rankings. The SMX audience got an early look at this year’s findings, which will be published at searchmetrics.com in late June.
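For readers unfamiliar with how a ranking correlation is computed, here is a minimal sketch. It assumes a Spearman rank correlation between ranking position and a single factor; the session recap does not say which statistic the study uses, and the positions and load times below are made-up illustration data, not study results.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Hypothetical data: ranking position (1 = top) and page load time in seconds.
positions = [1, 2, 3, 4, 5, 6]
load_times = [0.9, 1.1, 1.0, 1.6, 1.8, 2.4]

# About 0.94 here: slower pages tend to sit at numerically higher (worse) positions.
rho = spearman(positions, load_times)
```

A coefficient like this only describes how two orderings move together. It says nothing about whether the factor causes the ranking.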

Here are their overall correlations for 2014:

Onsite Factors

Life In The Fast Lane

Marcus reported that the strongest increase in correlation they observed over the 2013 study was in site speed. This is not surprising as Google has been saying frequently that it is taking a closer look at the loading speed of websites, especially as mobile moves toward being the predominant search mode.

Later in the day at the “You & A” session, Matt Cutts emphasized as his main takeaway that sites must optimize user experience for mobile. Speed is certainly an aspect of that, but he also urged steps such as making forms as easy as possible to fill out with minimal typing (implement autocomplete wherever possible).

Brands Have More Fun

SearchMetrics continued to see recognized brands having an advantage at getting the top one or two positions, but the brand effect drops quickly after that. It is therefore imperative that companies seek to become known and talked about as a brand, as that could lead eventually to Google recognizing them as such.

An Inside Look At Internal Links

A new factor SearchMetrics looked at this year is the number of internal links on a page. They found that too many or too few internal links on a page can hurt a site’s ability to rank.

In a post-presentation chat with me, Marcus said they saw that 180 links seemed to be the “sweet spot.” But he cautioned that this was an average across hundreds of thousands of pages examined, and should not be taken as a rule. In other words, just putting around 180 internal links on a page will not guarantee a ranking effect. Rather, you should always keep the user experience in mind. Include only as many internal links as will be useful to a user, keeping them engaged with your site without appearing spammy or annoying.

Marcus believes this is part of Google’s commitment to serve up sites with a better user experience for their searchers. He strongly recommended that sites do some housekeeping to clear out unnecessary, irrelevant, or broken links.

Marcus compared sites with internal link bloat to older universal remotes for entertainment centers, loaded with so many buttons the user is overwhelmed and ends up not using it.
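The housekeeping Marcus recommends starts with knowing how many internal links a page actually carries. The sketch below counts them using only the Python standard library. The function name `count_internal_links`, the sample HTML, and the rule that "internal" means "same host as the page" are all assumptions made for illustration, not part of the Searchmetrics methodology.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def count_internal_links(html, page_url):
    """Count links whose resolved URL is http(s) and on the same host as page_url."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc
    internal = 0
    for href in collector.hrefs:
        # Resolve relative links against the page URL before comparing hosts.
        parsed = urlparse(urljoin(page_url, href))
        if parsed.scheme in ("http", "https") and parsed.netloc == site_host:
            internal += 1
    return internal


# Hypothetical page: three internal links, one external link, one mailto link.
sample_html = """
<a href="/products">Products</a>
<a href="about.html">About</a>
<a href="https://example.com/contact">Contact</a>
<a href="https://other.example.org/">Partner</a>
<a href="mailto:hi@example.com">Email</a>
"""
internal_count = count_internal_links(sample_html, "https://example.com/index.html")
```

The count is a starting point for an audit, not a target. As Marcus cautioned, the 180-link figure is an average and should not be treated as a rule.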

Don’t Be Content with Weak Content

However, Marcus emphasized that all the optimization in the world for things like speed and links won’t do much good if a site’s content is poor. He reported on some of the more prominent content-related factors that correlated highly with high search rankings.

They found that longer text (in terms of characters) did better in 2014. Length by word count was also more highly correlated than in 2013.

But always keep user experience and utility in mind! Bottom line: not just longer, but more holistic, more relevant content is what you need.

New content elements that showed up in their survey this year:

- Having keywords in the content body strongly correlated.

- Relevant and Proof keywords showed a high correlation to better rankings.

- Relevant keywords are keywords that are often mentioned together. Content creators should learn what their relevant terms are and use them in their content.

- Proof keywords are keywords that appear together more often on the first page. They “prove” the relationships of the Relevant keywords.

- Readability of content: there was no apparent correlation with complexity of readability

- Panda 4.0 update. Aggregators were big losers. Example: PRnewswire lost a lot of visibility, especially for keywords leading to thin or irrelevant content.

Social Slips

Google+ +1’s and Facebook shares continued to correlate highly, but not as much as last year.

Marcus noted that good content, the kind that typically earns the signals we know cause higher ranking, is shared more often. He also pointed out a similarity to the brand effect noted above, as Facebook shares and Google+ +1’s are significantly higher for position-one results.

No Missing Link

At the top of backlink-related factors this year are the number and quality of backlinks to a page.

While quality of links rose in importance over 2013, the number of links dropped a bit, but high rankers still have more backlinks on average than lower ranking pages. Good links from high authority pages are still highly correlated.

SearchMetrics also looked at some new features for backlinks in their 2014 study, all of which showed good correlation to higher rankings. In descending order of correlation strength, they were:

- Number of referring domains to the home page

- New (or “fresh”) backlinks from the past 30 days

- URL brand anchors

- Domain brand anchors

Brands can rank higher with fewer fresh links, but the correlation with fresh links actually gets stronger beyond the top positions. Once again, Marcus emphasized the importance of becoming a known brand.

Going Mobile

SearchMetrics compared mobile to desktop in factors such as site speed. They saw a very similar correlation pattern for site speed for both. They also saw that pages needed less text to rank higher on mobile than on desktop.

For mobile, the number of backlinks had a significant effect only for the first five or six positions.

So, where is the biggest difference between desktop and mobile? Only 64% of the URLs examined appeared in top 20 for both desktop and mobile.
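To check how far your own desktop and mobile results diverge, you can compare the two top-20 lists directly. This is a minimal sketch that assumes overlap is measured as shared URLs divided by all distinct URLs seen in either list. The recap does not say how Searchmetrics derived its 64% figure, and the URLs here are placeholders.

```python
def overlap_share(desktop_urls, mobile_urls):
    """Share of distinct URLs that appear in both result lists (0.0 to 1.0)."""
    desktop, mobile = set(desktop_urls), set(mobile_urls)
    all_urls = desktop | mobile
    if not all_urls:
        return 0.0
    return len(desktop & mobile) / len(all_urls)


# Hypothetical shortened result lists for one query.
desktop_results = ["a.example/1", "b.example/2", "c.example/3", "d.example/4"]
mobile_results = ["b.example/2", "c.example/3", "d.example/4", "e.example/5"]

# Three shared URLs out of five distinct URLs.
share = overlap_share(desktop_results, mobile_results)
```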

Getting Used

SearchMetrics studied a half million URLs to look for user behavior and traffic elements and any effect they might have on rankings. Their findings:

- Time on site – slightly positive correlation

- No correlation for bounce rate.

- CTR has high correlation.

Marcus concluded by saying that we ought to think of SEO now as Search Experience Optimization. It’s all about the user experience.

Marianne Sweeny: How UX Affects SEO Ranking Factors

Search Experience

Marianne talked about what she calls “Distracted Search User Experience.” Searchers have become lazier. A Pew Trust study in 2012 revealed that 56% of searchers construct poor queries. Search has gotten better but searchers have not.

UX (user experience) and SEO got off on the wrong foot. Specialists in each too often have conflicting goals, but the search engines are emphasizing UX more and more.

Marianne referenced a 2011 interview with Vanessa Fox by Eric Enge that discussed how important UX was in the first Panda rollout.

Marianne thinks that it is unfortunate that design models don’t take SEO more into consideration.

There are four important search-UX relevance inputs:

- Selection (do people choose you?)

- Engagement

- Content

- Links

More Details On Selection: Search engines found that people don’t bother to read the results much beyond the top 3. So Google reduced the number of characters and fiddled with the pixel spacing to make results easier to scan. This makes using keywords in context very important.

Remember, people read left to right, so position keywords in title tags and meta descriptions as far to the left as possible. Meta descriptions don’t influence ranking, but they do influence whether people will pick your result. Write them to sell the relevance of your content to the query!
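One way to audit keyword placement is to measure how far into a title or meta description the keyword first appears. The helpers below are a rough sketch. The 20-character threshold in `is_front_loaded` is an arbitrary assumption for illustration and not a figure from the session, and the titles are invented.

```python
def keyword_offset(text, keyword):
    """Return the 0-based character offset of keyword in text, or None if absent."""
    index = text.lower().find(keyword.lower())
    return None if index == -1 else index


def is_front_loaded(text, keyword, max_offset=20):
    """True if the keyword starts within the first max_offset characters."""
    offset = keyword_offset(text, keyword)
    return offset is not None and offset <= max_offset


# Hypothetical title tags targeting the same keyword.
title_a = "Paris Hotels: Compare Rates and Book Online"
title_b = "Compare Rates and Book Online for Paris Hotels"

offset_a = keyword_offset(title_a, "paris hotels")  # keyword leads the title
offset_b = keyword_offset(title_b, "paris hotels")  # keyword trails the title
```

Here `title_a` puts the keyword at the far left, while `title_b` pushes it to the end, where a scanning searcher is less likely to see it.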

Engagement: Information Architecture

The Fold: user studies show that people are not inclined to scroll down unless induced to do so.

Proto-Typicality: Does it look like what I’m used to seeing? Don’t be “innovative” with things like navigation. If people don’t see things where they expect them to be, they get confused and are more likely to leave sooner.

Visual Complexity: It’s important to consider the ratio of images to content. Images are useful but shouldn’t overwhelm. Customers prefer text over images; too much visual complexity is less appealing.

Navigation: Users are accustomed to ignoring it. The locus has changed from nav to search. So keep navigation minimal and crystal clear.

Maximize Locus Of Attention: The center of the page is where users look first. Make sure your most important message is there.

The farther you are from an authority page, the less relevant you are, so group similar content together. Stuff in your attic is less important than what is in your kitchen drawer. Don’t bury important stuff down many levels in your navigation. Make nav categories simple and easy to understand.
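To see how deeply content is buried, you can measure click depth: the minimum number of clicks needed to reach each page from a starting page. The sketch below runs a breadth-first search over an internal-link map. Treating the home page as the authority page is an assumption, and the `site_links` structure is hypothetical sample data.

```python
from collections import deque


def click_depths(links, start):
    """Return {page: minimum clicks from start} using breadth-first search."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # The first visit in BFS order is always via a shortest path.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link map: page -> pages it links to.
site_links = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widgets"],
    "/products/widgets": ["/products/widgets/blue"],
    "/blog": ["/products/widgets"],
}
depths = click_depths(site_links, "/")
```

Pages with the largest depth values are the "attic" content. If they matter, link to them from somewhere closer to the starting page.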

Content

Start with what you have. Eliminate old stuff that doesn’t matter anymore. Make sure you have conducted a content inventory audit. Assign each piece of content an owner who commits to keeping, killing, or revising it, and to maintaining it in the future.

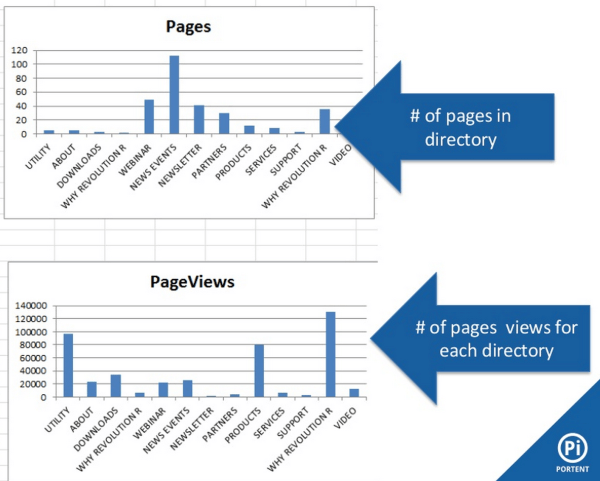

Put content where users actually are going on your site. Too many sites have the bulk of their content in parts of their site users rarely visit.

As far as keywords are concerned, you need to know the user behind the keywords. Keyword research is not dead, but has changed and become harder. Ask the client what they would use to find their own site, and see if those keywords are actually represented in the data. Then go to Google Trends to see what actual customers are using. In light of Hummingbird, where Google is “rewriting” queries, use Google Correlate to find how real people have iterated their queries.

Google Webmaster Tools has shown that the fold is no longer the real dividing point of a page. Ninety percent of views are actually in the upper-left quadrant of a page. What do you have there?

Portent found that when a user scrolls, bounce rate goes down significantly. What are you doing to make it worth scrolling down the page?

Matthew Brown: SEO Success Factors

“Clean” SERPs with no features are very rare. The SERPs aren’t as easy to backsolve any more. It’s difficult to know what makes various search features appear.

Hummingbird was a whole new engine, a user-intent engine. In a Hummingbird world, the high ranking factors are more complexly interrelated.

What about the correlation studies that some interpret as showing direct ranking factors? We often hear “correlation isn’t causation,” but Edward Tufte said it better: “Correlation is not causation, but it sure is a hint.”

Cyrus Shepard got it right:

You can still bank on backlinks. Matt Cutts has said that they will still count for years to come, but Google wants to figure out how an expert user would evaluate a page, “and sometimes backlinks matter for that.”

External anchor text still makes a big difference; same with internal anchor text. Improving site navigation and internal links is important. See “Old School SEO Tests in Action” by Bill Sebald.

Kinda Iffy factors

Matthew listed some factors whose importance is less certain than it once was:

- Keyword strings in titles and meta tags are getting less important. Google is rewriting title tags when it understands user intent.

- Optimizing for multiple keyword variations (“hotels in Paris,” “Paris hotels,” “top Paris hotels” etc.)

- Too much markup trying to get snippets can take you out on the basis of user intent. Use markup only when it matches the likely user intent.

- Absolute rankings are increasingly meaningless because they depend so heavily on which features appear in search. It’s more important to look at what features are occurring around your results.

New Kids: (Potentially Important)

- Entity-based optimization (semantic search) and Knowledge Graph results. Matthew recommended an article by Bill Slawski on how Google decides what entities to associate. The Alchemy API shows entity associations. You need to get yourself and your brand related to entities important to you. Also recommended: AJ Kohn on Knowledge Graph optimization. He showed how Google is using Google+ to find out identity location affinities (such as realtors who have a relationship to Zillow being shown in a local Zillow search).

- The location level of Google has been turned way up. Matthew searched the common word “rejuvenation” in Portland and got lots about the hardware store Rejuvenation in Portland. This is being driven by the rapid rise of mobile. All the search engines are moving that way.

- Knowledge Graph moving into local. Google will start pulling Knowledge Graph data more and more from databases like Yelp.

Unknown Unknowns

Hummingbird means all our previous assumptions need to be retested.

Recommended: 10 SEO Experiments that will change the way you do SEO. Takeaway: Optimize content around entities and relationships.

We need to do more “Unique Snowflake SEO.” Every site has its own set of success factors. This is where SEO will be important in the future, not optimizing around conventional wisdom, but around what works for the site.

Highlights From The Q & A

Don’t get hyped about any one factor. Speed is important, but speed with poor content won’t do you any good.

What single metric would the speakers use if they had to pick one?

- Matthew Brown: Domain Authority is pretty good, but not perfect. Use common sense. Use several metrics; no single metric captures everything.

- Danny Sullivan: Use that social correlation because it’s so strong (not as a factor, but as an indicator of sites that do well).

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.
