Technical SEO checklist: 7 essential tips to implement now for 2017


In 2016, there’s been a lot of speculation about the value of technical SEO. It was called makeup, and parts of it were proclaimed dead; ultimately, though, it was brought back to life, gracefully and conclusively, by outstanding examples of technical SEO tactics that produced major traffic boosts.

So why are opinions on this seemingly uncontroversial topic so divided? The problem may lie in the definition of technical SEO. If we refer to it as “the practices implemented on the website and server that are intended to maximize site usability, search engine crawling, and indexing,” then (we hope) everyone can agree that technical SEO is the necessary foundation of top search engine rankings.

In this post, we’ll focus on the seven fundamental steps to technical SEO success in 2017. Some of these have been relevant for a while; others are fairly new and relate to recent search engine changes.

Let’s get rollin’!

1. Check indexing.

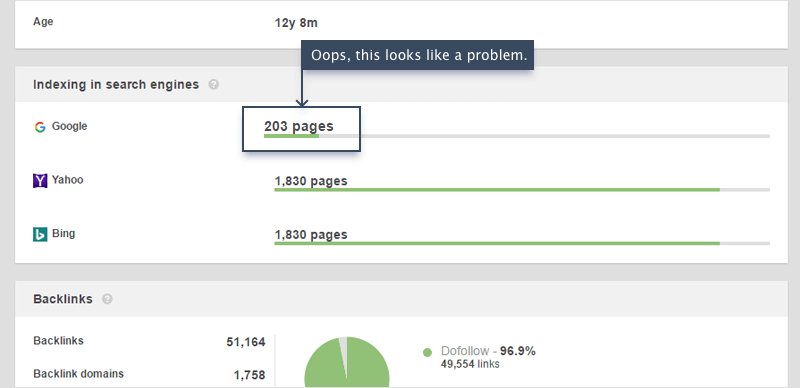

Let’s start with the number of your site’s pages that are indexed by search engines. You can check this by entering site:domain.com in your target search engine or by using an SEO crawler like WebSite Auditor.

Ideally, this number should be roughly equal to the total number of pages on your site, minus the ones you don’t want indexed. If the gap is bigger than you expected, you’ll need to review your disallowed pages, which brings us to the next point.
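If you can export both lists, the comparison is simple set arithmetic. Here is a minimal Python sketch, assuming you have the URLs your crawler found, the URLs you deliberately exclude, and the URLs reported as indexed; `index_coverage` is a hypothetical helper, and a `site:` count is only an estimate, so treat small gaps with caution.

```python
def index_coverage(crawled_urls, excluded_urls, indexed_urls):
    """Compare the pages you want indexed with the pages reported as indexed.

    crawled_urls:  every URL your crawler found on the site
    excluded_urls: URLs you deliberately keep out of the index
    indexed_urls:  URLs the search engine reports as indexed
    """
    expected = set(crawled_urls) - set(excluded_urls)
    indexed = set(indexed_urls)
    coverage = len(expected & indexed) / len(expected) if expected else 1.0
    return {
        "expected": len(expected),
        "coverage": coverage,                         # share of wanted pages that are indexed
        "missing": sorted(expected - indexed),        # wanted, but not indexed
        "unexpected": sorted(indexed - expected),     # indexed, but excluded or unknown
    }


report = index_coverage(
    crawled_urls=["/", "/about", "/blog/post-1", "/privacy"],
    excluded_urls=["/privacy"],
    indexed_urls=["/", "/blog/post-1", "/privacy"],
)
print(report["missing"], report["unexpected"])
# ['/about'] ['/privacy']
```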

2. Make sure important resources are crawlable.

To check your site’s crawlability, you may be tempted to simply look through robots.txt, but that approach is often as inaccurate as it is simple. Robots.txt is just one of several ways to keep pages out of search results, so you may want to use an SEO crawler to get a list of all blocked pages, regardless of whether the instruction was found in robots.txt, a noindex meta tag, or an X-Robots-Tag header.
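To illustrate why robots.txt alone isn’t enough, here is a minimal Python sketch that checks all three mechanisms for a single page. It assumes you already have the page’s HTML, its response headers, and your robots.txt text (for example, from a crawler export); `blocking_reasons` and `RobotsMetaParser` are hypothetical names, and Python’s standard `urllib.robotparser` does simple prefix matching, so it won’t reproduce Google’s handling of `*` and `$` wildcards.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() == "robots":
            self.directives.append((attrs.get("content") or "").lower())


def blocking_reasons(url, html, headers, robots_txt, user_agent="Googlebot"):
    """List every mechanism that keeps `url` from being crawled or indexed."""
    reasons = []

    # 1. robots.txt blocks crawling (so a blocked page's noindex is never seen)
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        reasons.append("robots.txt Disallow")

    # 2. <meta name="robots" content="noindex"> in the HTML
    meta = RobotsMetaParser()
    meta.feed(html)
    meta.close()
    if any("noindex" in d for d in meta.directives):
        reasons.append("noindex meta tag")

    # 3. X-Robots-Tag HTTP header (header names are case-insensitive)
    values = [v.lower() for k, v in headers.items() if k.lower() == "x-robots-tag"]
    if any("noindex" in v for v in values):
        reasons.append("X-Robots-Tag header")

    return reasons


print(blocking_reasons(
    "https://example.com/private/report",
    '<meta name="robots" content="noindex, follow">',
    {"X-Robots-Tag": "noindex"},
    "User-agent: *\nDisallow: /private/\n",
))
# ['robots.txt Disallow', 'noindex meta tag', 'X-Robots-Tag header']
```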

Remember that Google can now render pages the way modern browsers do; that’s why in 2017 it’s critical that not only your pages but all kinds of resources (such as CSS and JavaScript) are crawlable. If your CSS files are blocked from crawling, Google won’t see your pages the way they’re intended to look (and more likely than not, their unstyled version is going to be a UX disaster). Similarly, if your JS isn’t crawlable, Google won’t index any of your site’s dynamically generated content.
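A quick way to spot blocked CSS and JavaScript is to collect the stylesheet and script URLs a page references and test each one against robots.txt. This is a minimal sketch, assuming you already have the page HTML and your robots.txt text; `blocked_resources` is a hypothetical helper, and Python’s `urllib.robotparser` uses simple prefix matching rather than Google’s full rule syntax. Resources on other hosts (a CDN, for instance) are governed by that host’s robots.txt, so the sketch skips them.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit
from urllib.robotparser import RobotFileParser


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of external stylesheets and scripts."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and attrs.get("src"):
            self.resources.append(urljoin(self.base_url, attrs["src"]))
        elif (tag == "link" and attrs.get("href")
              and "stylesheet" in (attrs.get("rel") or "").lower()):
            self.resources.append(urljoin(self.base_url, attrs["href"]))


def blocked_resources(page_url, html, robots_txt, user_agent="Googlebot"):
    """Return same-host CSS/JS URLs on the page that robots.txt disallows."""
    collector = ResourceCollector(page_url)
    collector.feed(html)
    collector.close()
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    host = urlsplit(page_url).netloc
    return [u for u in collector.resources
            if urlsplit(u).netloc == host and not robots.can_fetch(user_agent, u)]


html = """
<link rel="stylesheet" href="/assets/css/main.css">
<script src="/assets/js/app.js"></script>
"""
print(blocked_resources("https://example.com/", html, "User-agent: *\nDisallow: /assets/js/\n"))
# ['https://example.com/assets/js/app.js']
```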

If your site is built with AJAX or relies heavily on JavaScript, you’ll specifically need a crawler that can crawl and render JavaScript. At the time of writing, only two SEO spiders offer this option: WebSite Auditor and Screaming Frog.

3. Optimize crawl budget.

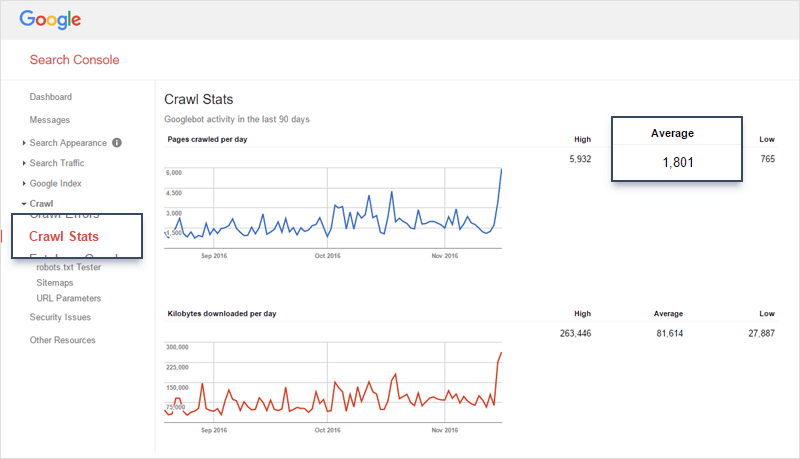

Crawl budget is the number of a site’s pages that search engines crawl during a given period of time. You can get an idea of your crawl budget in Google Search Console.

Sadly, Google Search Console won’t give you a page-by-page breakdown of the crawl stats. For a more detailed version of the data, you’ll need to look in the server logs (a specialized tool like WebLogExpert will be handy).
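If you want that page-by-page breakdown without a dedicated tool, a short script can tally Googlebot requests per URL. This minimal sketch assumes your server writes the common Apache/Nginx "combined" log format; `googlebot_hits` is a hypothetical helper. It trusts the user-agent string, which can be spoofed, so Google recommends verifying Googlebot with a reverse DNS lookup before you rely on the numbers.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines):
    """Count requests per URL path whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# A made-up log line for illustration
sample = [
    '66.249.66.1 - - [10/Jan/2017:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
print(googlebot_hits(sample).most_common(10))
# [('/blog/post-1', 1)]
```

On a real server, `googlebot_hits(open("access.log"))` would read the log line by line; the file name is a placeholder.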

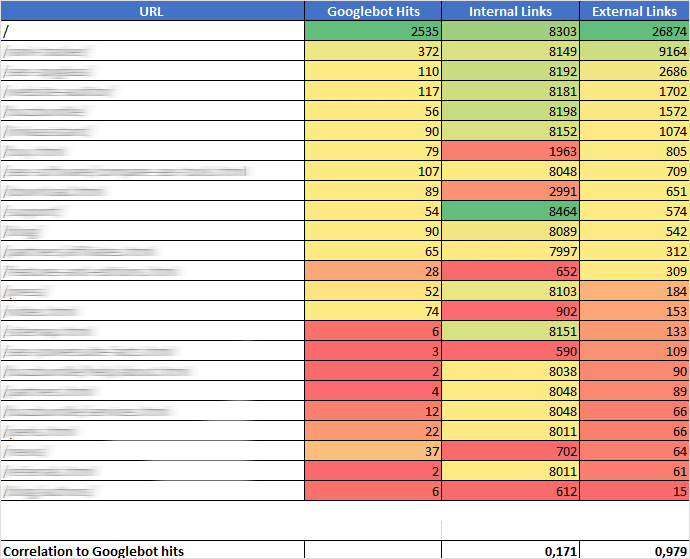

Once you know what your crawl budget is, you’re probably wondering whether there’s a way to increase it. Well, there is, kind of. SEOs don’t know for sure how Google assigns crawl budget to sites, but the two major theories hold that the key factor is either (1) the number of internal links to a page or (2) its number of backlinks from other sites.

Our team recently tested both theories on our 11 websites. We looked at the backlinks pointing to all of the sites’ pages (in SEO SpyGlass), the internal links to those pages, and their crawl stats.

Our data showed a strong correlation (0.978) between the number of spider visits to a page and its backlinks. The correlation between spider hits and internal links proved to be weak (0.154).
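The post doesn’t say which correlation measure was used; assuming the usual Pearson coefficient, here’s a plain sketch for running the same comparison on your own per-page data. On Python 3.10+, `statistics.correlation` computes the same coefficient; the sample numbers below are made up for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Made-up per-page numbers for illustration only
backlinks = [0, 3, 10, 25, 4]
spider_hits = [2, 5, 14, 30, 6]
print(round(pearson(backlinks, spider_hits), 3))
```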

But obviously, you can’t grow your backlink profile overnight (though it’s still a good idea to keep building links to the pages you want to be crawled more frequently). Here are the more immediate ways to optimize your crawl budget.

• Get rid of duplicate pages. If you can afford to lose a duplicate page, remove it. In terms of crawl budget, canonical URLs aren’t of much help: search engines will still hit the duplicate pages and keep wasting your crawl budget.

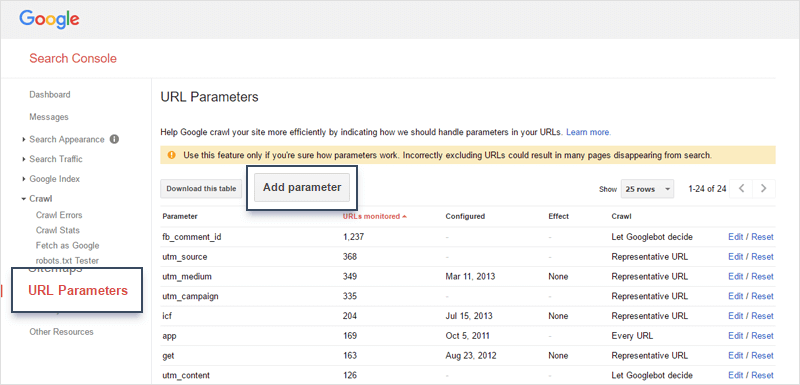

• Prevent crawling of pages with no SEO value. Privacy policies, terms and conditions, and expired promotions are good candidates for a Disallow rule in robots.txt. Additionally, you may want to specify certain URL parameters in Google Search Console so that Google doesn’t crawl the same pages with different parameters separately.

• Fix broken links. Whenever search bots hit a link to a 4XX/5XX page, a unit of your crawl budget goes to waste.

• Keep your sitemap up to date, and make sure to register it in Google Search Console.
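Duplicate pages and parameter variants can both be found in a crawl export. This minimal sketch assumes you have each URL’s HTML; the `IGNORED_PARAMS` set is hypothetical and should match the parameters your site actually uses. Exact hashing only catches byte-identical pages, so near-duplicates (for example, pages that differ only in a timestamp) need a fuzzier comparison.

```python
import hashlib
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that change tracking or sorting but not content
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def normalize_url(url):
    """Lowercase the host, drop ignored parameters, sort the rest, strip the fragment."""
    parts = urlsplit(url)
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if k not in IGNORED_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(query), ""))


def parameter_duplicates(urls):
    """Group URLs that point to the same page once ignored parameters are removed."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


def content_duplicates(pages):
    """Group URLs whose HTML bodies are byte-identical. pages: {url: html}."""
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[hashlib.sha256(body.encode("utf-8")).hexdigest()].append(url)
    return [sorted(members) for members in groups.values() if len(members) > 1]


print(parameter_duplicates([
    "https://example.com/shoes?color=red&utm_source=newsletter",
    "https://example.com/shoes?color=red",
    "https://example.com/hats",
]))
```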

4. Audit internal links.

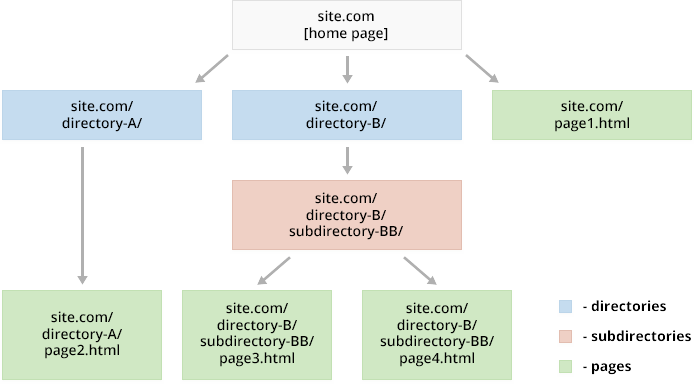

A shallow, logical site structure is a prerequisite for great UX and crawlability; internal linking also helps spread ranking power (or PageRank) among pages more efficiently.
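To see how internal links spread ranking power, here’s the classic PageRank calculation run over a small internal link graph. This is a textbook sketch, not how Google ranks pages today: it assumes the damping factor of 0.85 from the original PageRank paper, treats every link equally, and spreads the rank of pages with no outgoing links evenly across the site. `pagerank` and the example graph are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration.

    links: mapping of page -> list of pages it links to
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Rank held by pages without outgoing links is shared by all pages
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


site = {"/": ["/blog", "/shop"], "/blog": ["/", "/blog/post"], "/shop": ["/"], "/blog/post": []}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:12} {score:.3f}")
```

Pages that many other pages link to end up with more rank, which is why a shallow structure that links to important pages from high-level pages helps them.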

Here are the things to check when you’re auditing internal links.

• Click depth. Keep your site structure as shallow as possible, with your important pages no more than three clicks away from the home page.

• Broken links. These confuse visitors and eat up pages’ ranking power. Most SEO crawlers will show broken links, but it can be tricky to find all of them. Apart from regular HTML links, remember to check the links in other tags, in HTTP headers, and in sitemaps.

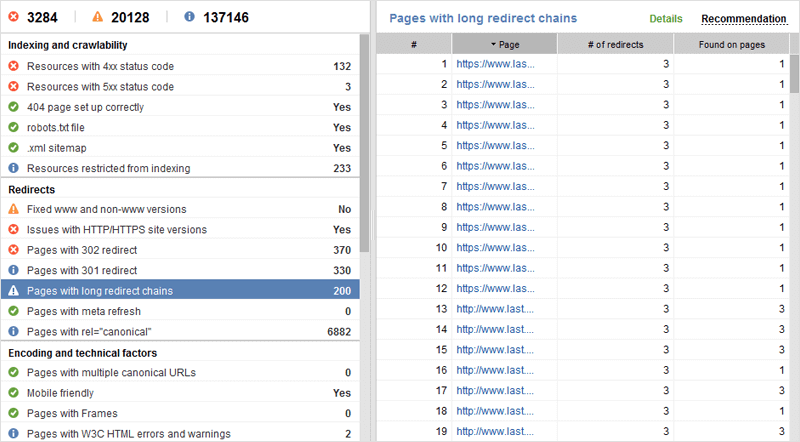

• Redirected links. Even if the visitor eventually lands on the right page, taking them through a number of redirects will negatively affect load time and crawl budget. Look for chains of three or more redirects, and update the links to redirected pages as soon as you discover them.

• Orphan pages. These pages aren’t linked to from any other page on your site, so they are hard for visitors and search engines to find.
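Click depth, orphan pages, and redirect chains can all be computed from a crawl export. This minimal sketch assumes you have a mapping of each page to the internal URLs it links to, a list of all known URLs (from your CMS or sitemap), and a map of redirects; all function names are hypothetical. It treats any known page that can’t be reached from the home page as an orphan, which is slightly broader than “no internal links at all.”

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the home page; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, home, known_pages, max_depth=3):
    """Return (pages deeper than max_depth, known pages unreachable from home)."""
    depths = click_depths(links, home)
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    orphans = sorted(set(known_pages) - set(depths))
    return too_deep, orphans


def redirect_chain(url, redirects, limit=10):
    """Follow a {url: target} redirect map; `limit` stops redirect loops."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= limit:
        chain.append(redirects[chain[-1]])
    return chain


site = {"/": ["/blog", "/shop"], "/blog": ["/blog/2017"], "/blog/2017": ["/blog/2017/post"],
        "/blog/2017/post": ["/blog/2017/post/comments"]}
print(audit_structure(site, "/", known_pages=["/", "/blog", "/shop", "/landing-page"]))
# (['/blog/2017/post/comments'], ['/landing-page'])
print(redirect_chain("/old", {"/old": "/older", "/older": "/new"}))
# ['/old', '/older', '/new']
```

A chain with three or more hops (`len(chain) - 1 >= 3`) is one worth fixing by linking straight to the final URL.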

5. Review your sitemap.

You already know how important sitemaps are. They tell search engines about your site structure and let them discover new content faster. There are several criteria to check your sitemaps against:

• Freshness. Your XML sitemap should be updated whenever new content is added to your site.

• Cleanliness. Keep your sitemap free of garbage (4XX pages, non-canonical pages, redirected URLs, and pages blocked from indexing); otherwise, you risk having the sitemap ignored by search engines completely. Remember to check your sitemap for errors regularly in Google Search Console, under Crawl > Sitemaps.

• Size. A single sitemap file can list at most 50,000 URLs. Ideally, you should keep it much shorter than that so that your important pages get crawled more frequently. Many SEOs point out that reducing the number of URLs in sitemaps yields more effective crawls.
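These checks are easy to script against the sitemap XML itself. A minimal sketch, assuming the standard sitemaps.org namespace and a set of “bad” URLs (4XX, redirected, non-canonical, or blocked from indexing) that you’ve already collected with your crawler; `audit_sitemap` is a hypothetical name, and missing `<lastmod>` values are flagged only as a rough freshness signal.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol)
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000  # per-file URL limit in the sitemaps.org protocol


def audit_sitemap(xml_text, bad_urls):
    """Check a sitemap's size, garbage URLs and missing <lastmod> values.

    bad_urls: URLs your crawler flagged as 4XX, redirected,
              non-canonical or blocked from indexing.
    """
    root = ET.fromstring(xml_text)
    urls, missing_lastmod = [], []
    for entry in root.findall("sm:url", NS):
        loc = entry.findtext("sm:loc", default="", namespaces=NS).strip()
        urls.append(loc)
        if entry.find("sm:lastmod", NS) is None:
            missing_lastmod.append(loc)
    return {
        "url_count": len(urls),
        "over_limit": len(urls) > MAX_URLS,
        "garbage": [u for u in urls if u in bad_urls],
        "missing_lastmod": missing_lastmod,
    }


sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2017-01-10</lastmod></url>
  <url><loc>https://example.com/old-page</loc></url>
</urlset>"""
print(audit_sitemap(sitemap, bad_urls={"https://example.com/old-page"}))
```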

6. Test and improve page speed.

Page speed isn’t just one of Google’s top priorities for 2017; it’s also a ranking signal. You can test your pages’ load time with Google’s own PageSpeed Insights tool. Entering all your pages’ URLs manually can take a while, so you may want to use WebSite Auditor for the task; Google’s PageSpeed tool is integrated right into it.

If your page fails some aspects of the test, Google will give you the details along with how-to-fix recommendations. You’ll even get a download link with compressed versions of your images if they’re too heavy. Doesn’t that say a lot about how much speed matters to Google?
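To batch-test many URLs without a dedicated tool, you could also call the PageSpeed Insights API directly. This minimal sketch targets the API’s v5 endpoint (a version released after this article) and reads the 0 to 1 Lighthouse performance score from each response; the helper names are hypothetical, the API key is optional in the code, and `check_pages` needs network access.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build a PageSpeed Insights request URL for one page."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urlencode(params)


def performance_score(response):
    """Extract the 0-1 Lighthouse performance score from a v5 response."""
    return response["lighthouseResult"]["categories"]["performance"]["score"]


def check_pages(page_urls, strategy="mobile", api_key=None):
    """Query PageSpeed Insights for each page and collect the scores."""
    scores = {}
    for url in page_urls:
        with urlopen(psi_request_url(url, strategy, api_key)) as resp:
            scores[url] = performance_score(json.load(resp))
    return scores
```

For example, `check_pages(["https://example.com/", "https://example.com/blog"])` returns a score per page, and sorting by score shows which pages to fix first.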

7. Get mobile-friendlier.

A few weeks ago, news broke that Google is starting “mobile-first indexing of the web,” meaning that it will index the mobile version of websites instead of their desktop version. The implication is that the mobile version of your pages will determine how they rank in both mobile and desktop search results.

Here are the most important things to take care of to prepare your site for this change.

• Test your pages for mobile-friendliness with Google’s own Mobile-Friendly Test tool.

• Run comprehensive audits of your mobile site, just like you do with the desktop version. You’ll likely need to use custom user agent and robots.txt settings in your SEO crawler.

• Track mobile rankings. Finally, keep an eye on your Google mobile rankings, and remember that progress there will likely soon translate to your desktop rankings as well.
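Since the mobile version will drive rankings, one useful audit compares the HTML your crawler fetched with a mobile user agent against the desktop version. This minimal sketch assumes you already have both versions; it flags a missing responsive viewport tag and differences in title, meta description, and link paths (hostnames are ignored so separate m-dot URLs still match). `mobile_parity` and `PageSummary` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class PageSummary(HTMLParser):
    """Collects the title, named meta tags and link paths of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self.link_paths = set()
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""
        elif tag == "a" and attrs.get("href"):
            # Keep only the path so www. and m. hostnames compare equal
            self.link_paths.add(urlsplit(attrs["href"]).path)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def summarize(html):
    parser = PageSummary()
    parser.feed(html)
    parser.close()
    return parser


def mobile_parity(desktop_html, mobile_html):
    """List differences that matter once the mobile version is indexed."""
    desktop, mobile = summarize(desktop_html), summarize(mobile_html)
    issues = []
    if "width=device-width" not in mobile.meta.get("viewport", ""):
        issues.append("mobile page lacks a responsive viewport meta tag")
    if desktop.title.strip() != mobile.title.strip():
        issues.append("titles differ")
    if desktop.meta.get("description") != mobile.meta.get("description"):
        issues.append("meta descriptions differ")
    missing = desktop.link_paths - mobile.link_paths
    if missing:
        issues.append(f"{len(missing)} link(s) missing on mobile")
    return issues
```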

Those are our top 7 technical SEO tips for 2017. What are your thoughts on the technical SEO of tomorrow? Which tactics have you seen to be most effective recently? Shoot us a message on Twitter and let us know what you think!
