4 mistakes to avoid during a website audit

A good website audit is important for getting a holistic view of your site's SEO health. Columnist Pratik Dholakiya explains which mistakes to avoid when conducting a website audit.

As all SEO experts know, ranking in the search engines is a notoriously unpredictable pursuit. Each update can result in a strong, unforeseeable impact. The days of ranking by simply adding keywords to your title tags, header tags and content and getting a few backlinks are over. The harsh truth is that a great many businesses struggle to keep up with the changes.

There are a lot of factors that the search engines take into account when determining your standing. Some of the big ones are:

- content quality

- authority

- responsiveness

- website speed

Improving on these is easier said than done, which is why there will always be a need for a quality audit. Keep in mind that an in-depth audit is not a task you can do in a couple of hours. Depending on the size of your business website, it can take a few days to complete.

Throughout the auditing process, there are several key oversights that can spell disaster for your rankings on the SERPs. Let’s talk about four of them.

1. Walking before you crawl

First off, to diagnose problems on your website, you need a clear and complete picture of what exactly you are working with. Therefore, it is essential that you do a thorough crawl of the entire website. If you skip the crawl before making changes, you are basically taking shots in the dark.

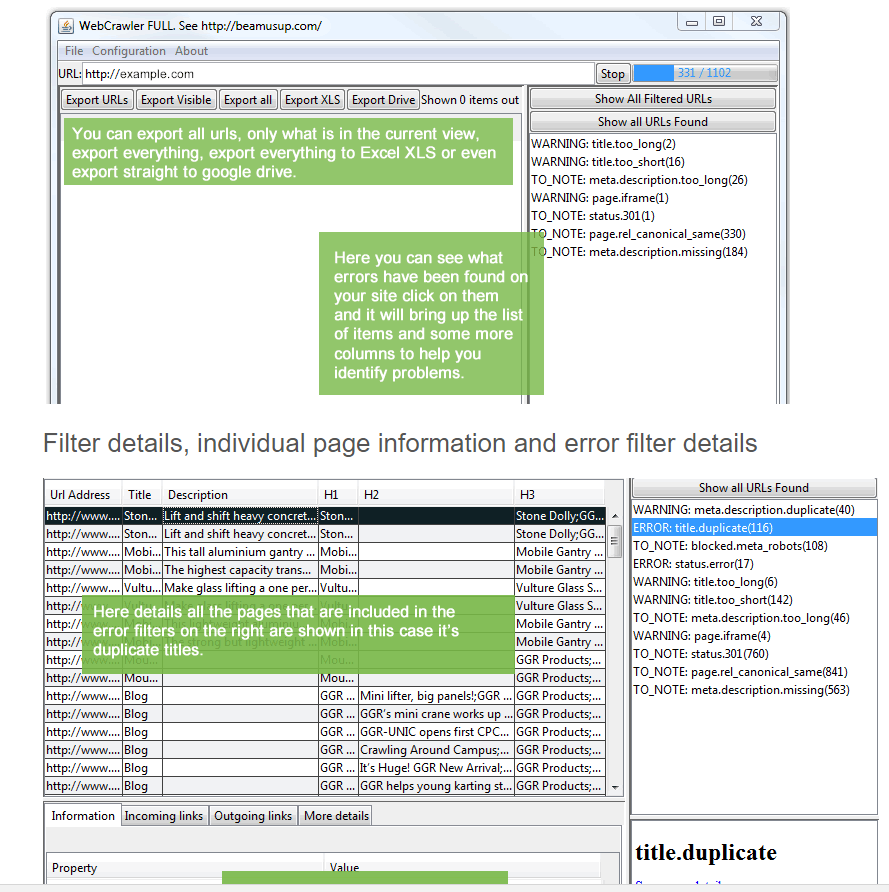

If you’re new to auditing, start by choosing a good crawling tool. I recommend Screaming Frog’s SEO Spider due to its simplicity and reasonable price. However, if you are looking for a free option, BeamUsUp is another great tool to use.

Start by entering your website into the URL field.

You can also choose your subdomains (if applicable) to crawl.

This process is a key ingredient to a successful audit. It forms the road map for the rest of the task and should always be step one.
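To make the idea of a crawl concrete, here is a minimal sketch of what a crawling tool does under the hood: start from one URL, follow every internal link, and record what was found. It is not how Screaming Frog or BeamUsUp are implemented. The `fetch` argument is a hypothetical caller-supplied function that returns a page's HTML (or `None` on failure), so the sketch makes no assumptions about how pages are downloaded.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=500):
    """Breadth-first crawl of pages on the same host as start_url.

    fetch: hypothetical function taking a URL and returning HTML text,
           or None if the page could not be loaded.
    Returns a dict mapping each visited URL to its internal links.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    site_map = {}

    while queue and len(site_map) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            site_map[url] = []  # visited, but nothing could be read
            continue
        parser = LinkParser()
        parser.feed(html)
        internal = []
        for href in parser.links:
            # Resolve relative links and drop #fragments.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc != host:
                continue  # skip external links, mailto:, etc.
            internal.append(absolute)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
        site_map[url] = internal
    return site_map
```

The resulting map of pages and internal links is the kind of inventory the rest of the audit works from.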

2. Not prioritizing accessibility

Simply put, if search engines and users cannot access your website, all the time and resources you put into designing your landing pages and creating your branded content are basically worthless. With that being said, you want to put the search engines in the best position to index your website.

Now, you don’t need search engines to crawl and index each and every file on your site. You can use your robots.txt file to specify which pages and folders to crawl and which ones not to. Here is what a robots.txt file might look like:

In the screen shot above, the website owner has “disallowed” certain sections of the website from being crawled. It’s important to check this file to ensure that you are not restricting crawler access to important sections or pages of your website, as this may not bode well in your rankings.

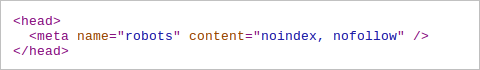
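One way to run this check is with the robots.txt parser in Python's standard library. The sketch below takes the text of a robots.txt file and a list of URLs you consider important, and returns the ones a crawler would be blocked from. The robots.txt rules and URLs shown are made-up examples, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt content and page list, for illustration only.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

important_pages = [
    "https://example.com/products/widget",
    "https://example.com/cart/checkout",
]

for url in blocked_urls(EXAMPLE_ROBOTS, important_pages):
    print("Blocked by robots.txt:", url)
```

Any important page that shows up in this list is worth a second look.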

Individual pages can be blocked from search engine indices using the meta robots noindex tag. Therefore, it is critical to look for pages that may have mistakenly implemented this tag. Here is what that meta tag would look like:

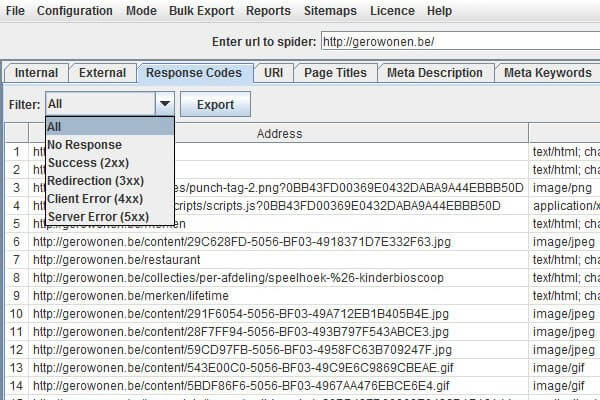
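If you have the HTML of your pages on hand, a short script can flag the ones carrying this tag. This is a minimal sketch that assumes the tag takes the common form `<meta name="robots" content="...">`. It does not cover the `X-Robots-Tag` HTTP header or crawler-specific meta names.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html):
    """True if the page's meta robots tag includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Running `has_noindex` over every crawled page gives a list to review by hand, since some pages are blocked on purpose.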

Your initial website crawl will most likely reveal a number of HTTP status codes. If you’re using Screaming Frog’s SEO Spider, you’ll need to look into these under the “Response Codes” tab.

Here are some of the HTTP status codes to keep an eye out for:

- 200: Ok — Everyone (both crawlers and users) arrived at the desired page. This is code for “correct.”

- 301: Permanent redirect — Both crawlers and users are redirected to a new location, which is considered permanent.

- 302: Temporary redirect — Both crawlers and users are redirected to a new location, but the new page is considered temporary.

- 404: Not found — The desired page is missing.

- 503: Service unavailable — The server is currently unable to process the request.

Start off by looking into all the redirects (301 and 302). Google insists that there is no PageRank dilution when using a 30x redirect of any kind, meaning that 301 and 302 redirects should both preserve PageRank value. However, many SEOs are skeptical of this claim. For now, it’s best practice to ensure that you are using 301 redirects across the board, unless a redirect truly is temporary.

Next, the 404 error can be addressed in several ways. You can tell the server to redirect the 404ing page to a different page. In some cases, you may even want to restore pages that you have deleted if it appears they still hold value for searchers. While 404 errors don’t cause any irreparable harm, they can make your website look sloppy. I recommend looking out for these at least once a month — if you have a large website, once a week.

503 errors mean the server is temporarily unable to handle requests. These are typically used during outages or maintenance and let the search engines know to come back shortly.
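The triage described above can be sketched as a small function that sorts crawl results into buckets. The input format, a mapping of URL to status code, is an assumption. Adapt it to whatever your crawling tool exports.

```python
# Labels for the status codes discussed above; anything else lands in "other".
STATUS_LABELS = {
    200: "ok",
    301: "permanent_redirect",
    302: "temporary_redirect",
    404: "not_found",
    503: "unavailable",
}


def triage_status_codes(results):
    """Group URLs by status code.

    results: mapping of URL -> HTTP status code (assumed export format).
    Returns a dict of label -> list of URLs.
    """
    buckets = {label: [] for label in STATUS_LABELS.values()}
    buckets["other"] = []
    for url, code in results.items():
        buckets[STATUS_LABELS.get(code, "other")].append(url)
    return buckets
```

From there, the `temporary_redirect` bucket is the list of 302s to review, and `not_found` is the list of pages to redirect or restore.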

In addition to errors, you’ll need to keep a close eye on your XML Sitemap. This provides a path for the search engine crawlers to easily find all of the pages on your website. Search engines expect a certain format for Sitemaps. If yours doesn’t conform, it runs the risk of not being correctly processed. If there are pages listed in the Sitemap that do not appear in the crawl, it could mean that you need to find a location for them in the site architecture and ensure they have at least one internal link pointing to them.
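Comparing the Sitemap against the crawl is easy to script. The sketch below assumes a standard Sitemap file using the sitemaps.org namespace and a list of URLs collected during your crawl. It returns the pages that are listed in the Sitemap but were never reached by following links.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract every <loc> URL from a Sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def orphan_candidates(sitemap_xml, crawled_urls):
    """URLs in the Sitemap that the crawl did not find."""
    return sorted(sitemap_urls(sitemap_xml) - set(crawled_urls))
```

A file that fails to parse here is also a hint that it may not conform to the expected format.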

Finally, regularly monitoring your website’s performance is a must. Users have very short attention spans; by some estimates, the average is around eight seconds. Search engine crawlers are not known for their patience, either. They have a limited amount of time allocated to each website, so the faster your pages load, the more of them can be crawled and indexed in that time. Pingdom is a simple tool to use for testing loading speed.

It will give you an itemized list of everything on your pages and how they affect your loading times. From there, it provides suggestions on how to reduce the snags and bottlenecks to improve overall performance.

Accessibility is one of the most influential parts of a website audit. Failing to put a significant effort into optimizing this area can be a deal-breaker for your rankings.

3. Ignoring on-page ranking factors

As far as on-page ranking factors go, there are many things on your website to look at under a microscope.

For starters, and perhaps most importantly, the content of a page (and the titles that lead to it) is what makes your website worthy of its position in the search results. Ultimately, it is the reason people visit and stay on your platform. At a foundational level, creating stellar, authentic content needs to be your top priority.

From an SEO standpoint, there are many things to keep in mind.

- Is the material substantial? As a rule of thumb, it’s recommended that each page of content has at least 300 words.

- Does your content provide value to the reader? This is a bit of a gray area. Basically, you need to make sure your material is well-written, relevant and compelling.

- Are the images optimized? Your website should provide image metadata, such as descriptive file names and alt text, so that the search engines can factor your images into your rankings.

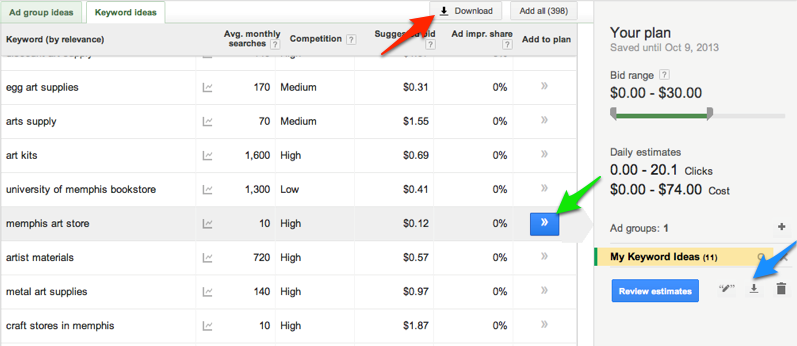

- Are you using targeted keywords? Do your research and determine which terms users are searching for in relation to your industry.

- Is the content readable? Use a measure like the Gunning Fog Index to gauge how well your text can be understood by the average reader.

- Can the search engines process it? Make sure content is not bogged down with complex images, excessive JavaScript, or Flash.
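Two of the checks above, the 300-word rule of thumb and the Gunning Fog Index, are simple enough to estimate with a script. The index is 0.4 × (average words per sentence + percentage of complex words), where a complex word has three or more syllables. The syllable counter below is a rough heuristic that counts vowel groups, so treat the scores as approximations and not as the output of a dedicated readability tool.

```python
import re


def count_syllables(word):
    """Rough estimate: one syllable per group of consecutive vowels."""
    return max(len(re.findall(r"[aeiouy]+", word.lower())), 1)


def word_count(text):
    """Number of words, for the 300-word rule of thumb."""
    return len(re.findall(r"[A-Za-z']+", text))


def gunning_fog(text):
    """Approximate Gunning Fog Index of a piece of text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    complex_words = [w for w in words if count_syllables(w) >= 3]
    average_sentence_length = len(words) / len(sentences)
    percent_complex = 100 * len(complex_words) / len(words)
    return 0.4 * (average_sentence_length + percent_complex)
```

The score roughly corresponds to the years of schooling needed to follow the text on a first read, so lower is generally easier.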

When combing through your website’s messaging, there are three harmful culprits you’ll want to keep a close eye on.

- Duplicate content. This is not doing you any favors and can be confusing for search engine crawlers, as they will have to decide which version of the content to rank. You can pinpoint these pieces during the website crawl. Tools like Copyscape can also identify duplicates across the web.

- Information architecture. This defines how exactly your website presents its messaging. It should serve a purpose, as well as utilize a healthy number of targeted keywords.

- Keyword cannibalism. This grim metaphor describes the situation where you have multiple pages that target the exact same keyword. This will create confusion for both the users and the search engines. Avoid this by creating a keyword index for everything on your website.
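A keyword index can be as simple as a table of each page and the keyword it targets. The sketch below assumes you maintain that table yourself as a mapping of URL to primary keyword (the function and parameter names are illustrative) and reports any keyword claimed by more than one page.

```python
from collections import defaultdict


def cannibalized_keywords(page_keywords):
    """Find keywords targeted by more than one page.

    page_keywords: mapping of URL -> primary target keyword.
    Returns a dict of keyword -> list of competing URLs.
    """
    index = defaultdict(list)
    for url, keyword in page_keywords.items():
        # Normalize so "Blue Widgets" and "blue widgets " count as one keyword.
        index[keyword.strip().lower()].append(url)
    return {kw: urls for kw, urls in index.items() if len(urls) > 1}
```

Each entry in the result is a set of pages to consolidate or retarget.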

Next, you’ll need to examine URLs. Generally speaking, they should be under 115 characters long. Also, it’s important that each URL briefly describes the content it links to. There are many practices to consider for URL structuring. Take your time with them.

Outlinks are another necessary factor to look at. These are what connect your pages to other sites. In short, each one works like an endorsement. During an audit, you will want to make sure those links go to trustworthy, reputable sites. A strategy I use is to look up the website on Twitter. If the account is verified, it’s typically a qualified source.

In addition to being decisive for search rankings, on-page factors are what visitors interact with when they go on your website. Therefore, overlooking even a few of them can be extremely costly in the long run.

4. Ignoring off-page ranking factors

Even though these factors are from external sources, they are vital to your rankings and should not be ignored or placed on the back burner.

First, look at your overall popularity. How much traffic is your website getting? For this, you will need to keep regular tabs on your analytics. If you use Google Analytics, look to make sure you are not losing a significant amount of organic traffic over time.

Now, do some competitive analysis and see how your site’s popularity is faring against similar platforms. Quantcast is a great tool to use to gauge your audience and overall uniqueness against competitors.

You can also measure website popularity by the number of backlinks you receive from other websites using metrics like mozRank.

Google’s motto was once “Don’t be evil.” With that in mind, you can imagine how significant trustworthiness is when determining rankings. When reviewing the websites that are linking to yours, look for untrustworthy behavior like spam or malware. Some of the red flags to look for when identifying these things are:

- Keyword stuffing — Unnatural number of keywords within the content.

- Cloaking — Showing different versions of a website to search engines and users.

- Hidden Text — Presenting content to search engines that is not visible to users.

If you are getting a link from a website with any of the above problems, use Google Search Console to disavow those inbound links.
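The disavow tool takes a plain-text file. The sketch below builds one, assuming the commonly documented format of one entry per line, with `domain:` prefixes for whole domains, full URLs for single pages, and `#` for comments. Check Google's current documentation before uploading, and note that the domains in the usage example are placeholders.

```python
def build_disavow_file(domains=(), urls=()):
    """Build disavow file text from flagged domains and individual URLs."""
    lines = ["# Links flagged during site audit"]
    lines += [f"domain:{domain}" for domain in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


# Placeholder entries, for illustration only.
print(build_disavow_file(
    domains=["spam.example"],
    urls=["https://bad.example/page"],
))
```

Disavowing at the domain level is the broader option, so reserve it for sites where every link looks untrustworthy.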

Last, but certainly not least, social engagement is believed by some to play an indirect role in rankings. SEO is becoming more social by the day. Each platform has its own form of social currency (likes, retweets, shares and so on). When you’re evaluating social interactions, you’ll want to take note of how well you are accumulating these forms of currency. Keep in mind, there are over 2 billion social media users worldwide. This trend is not going anywhere any time soon. Therefore, establishing a social presence is no longer optional for brands.

Final thoughts

The search engines are infamous for changing and updating their algorithms with practically no warning. For that reason, it is imperative that you keep up on the trends. Personally, I’ve made a habit of catching up on the latest SEO news for 30 or so minutes over my morning coffee. Staying on top of this evolving landscape is one of the best ways to avoid mistakes and pick up on valuable tips.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
