7 Ways To Use Splunk For Technical SEO

Contributor Eli Schwartz recommends several ways to use Splunk, a tool which can help users derive actionable insights from server logs and other machine data.

Anyone with a passion for technical SEO strives to have a deep understanding of a website’s architecture and aims to be very familiar with how Google relates to the URIs on a site.

In my quest to be the best technical SEO I can be, I have discovered that by spending time doing deep analysis of site access logs, I can learn a great deal about how search engines understand the site. My favorite tool for this task is Splunk.

Splunk is a fantastic self-hosted or cloud-based tool that allows you to parse large amounts of data quickly and easily to make important decisions. If you have ever tried sorting 10,000 rows in an Excel spreadsheet, you will understand the need for a tool like Splunk. For many websites, the free version of Splunk, which allows you to upload 500 MB per day, should be more than enough for you to analyze your site access logs.

Here are the 7 ways I use Splunk to help me with technical SEO efforts:

1. Discover Whether A URL Has Been Crawled By Googlebot (Or Any Other Bot)

When launching a new web page, webmasters may anxiously check the Google cache of a page to see if it has been crawled (and is therefore on the way to ranking on some of the desired queries).

The problem with relying on the cache as a signal for a successful Google crawl is that it could be days (or even weeks) after Googlebot’s initial crawl of a page before Google shows a cache of the page in the index. (The cache of any page is found by searching [cache:web address].)

A faster and more accurate way of knowing if a page is indexed is simply to look for the exact title in a Google search. If the page appears for a search of its title then Google has crawled the page.

However, the most accurate (and fastest) way of knowing whether Googlebot has discovered a page is to simply search in your web logs to see if Googlebot accessed the page.

Once you have your logs uploaded to Splunk, here’s how you run this query:

- First, choose your time period. Shorter time periods return results faster, so use the shortest time period possible.

- Type the following into the query box:

index={the name of your index} {URL stub} AND googlebot

For example, if your index is called “primary” and your URL is “free-trial.html,” this would be your query:

index=primary free-trial.html AND googlebot
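If you want to sanity-check the result against a raw log sample outside Splunk, the same test can be sketched in a few lines of Python. This is only an illustration: it assumes the logs are in the Apache/Nginx "combined" format, and the `googlebot_hits` function name and `LOG_LINE` pattern are hypothetical, not part of Splunk.

```python
import re

# Assumption: Apache/Nginx "combined" log format. Adjust the pattern for other layouts.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<uri>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines, url_stub):
    """Return (time, uri, status) for each Googlebot request whose URI contains url_stub."""
    hits = []
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue  # skip lines that do not fit the assumed format
        # Case-insensitive user-agent match, similar to a keyword search
        if url_stub in m["uri"] and "googlebot" in m["agent"].lower():
            hits.append((m["time"], m["uri"], int(m["status"])))
    return hits
```

An empty list means no request with a Googlebot user agent was logged for that URL in the sample. Keep in mind that a user-agent string can be spoofed, so a match shows a request that claims to be Googlebot.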

2. Find 404 Pages

404 error pages are wasted visits. Every time a user hits a 404 page instead of the page they are meant to see, you are missing an opportunity to show them the content they were seeking. And at the same time, they are having a subpar experience with your site.

You can always proactively find 404s with a crawling tool like Screaming Frog, but if you have a lot of broken links across your site, fixing them all might not be a realistic goal. Additionally, a crawl of your own site doesn’t help you find a 404 caused by an incorrect link on a site you don’t control.

In this scenario, log parsing becomes very helpful as you can discover 404’ed URLs that are frequently accessed by users and choose to either fix them or redirect the traffic to a working page.

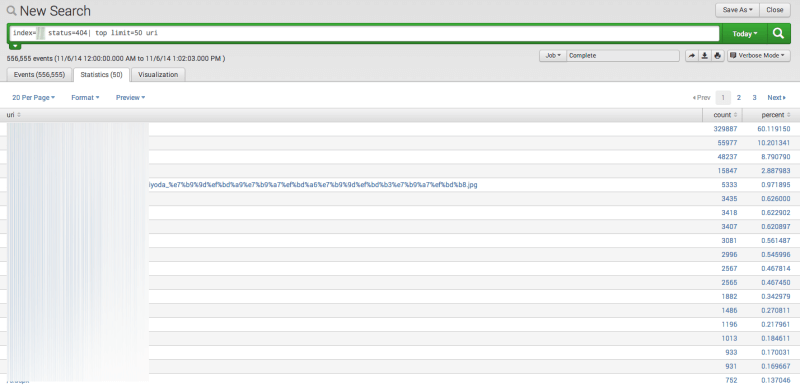

Here’s how you set up the query to find the 404 pages:

- First, choose your time period. For this type of query, I usually use 30 days, but you can choose whatever you want.

- Type the following into the query box:

index={the name of your index} status=404 | top limit=50 uri

Your limit can be whatever you want, but I like to work with 50 URLs. Once this query completes, click the Statistics tab, and you will see all the URLs that you need to urgently address laid out in a table.
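To make clear what the query computes, here is a rough Python equivalent of filtering by status and ranking URIs by request count. It is a sketch under assumptions: the logs are taken to be in the Apache/Nginx "combined" format, and `top_uris_by_status` is a hypothetical helper name.

```python
import re
from collections import Counter

# Assumption: Apache/Nginx "combined" log format.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<uri>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def top_uris_by_status(lines, status, limit=50):
    """Return the `limit` most requested URIs that answered with `status`."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m and int(m["status"]) == status:
            counts[m["uri"]] += 1
    # most_common sorts by request count, highest first
    return counts.most_common(limit)
```

Calling `top_uris_by_status(lines, 404)` mirrors the query above, and passing 302 instead of 404 gives the equivalent of the redirect query in the next section.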

3. Find Googlebot 302s

A 302 redirect is a temporary redirect — different from a 301 (permanent) redirect.

In some of my testing, I have seen 302s that do pass link value and have the redirected page rank where the previous page did, but 302s should only be used when a redirect is actually meant to be temporary.

302 redirects can make it into your site in a variety of different ways — including as a native part of a platform such as .NET — and it is always prudent to regularly keep an eye out for traffic that is being 302 redirected when it should really have a 301 redirect.

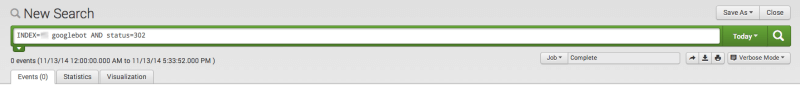

Here’s how you set up the query to find the 302 pages:

- First, choose your time period. For this type of query, I usually use 30 days, but you can choose whatever you want.

- Type the following into the query box:

index={the name of your index} status=302 | top limit=50 uri

Just like before, the limit can be whatever you want to find the most useful data set.

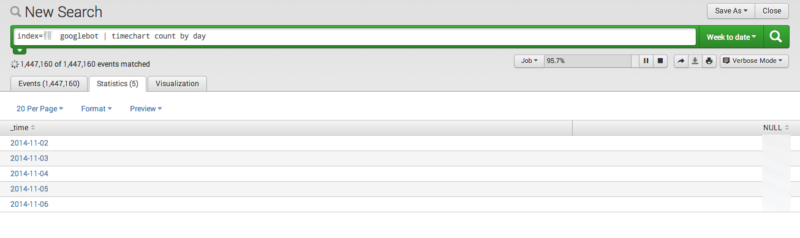

4. Calculate The Number Of Pages Crawled By Google Every Day

If you use Google’s Webmaster Tools, then you are probably familiar with the screen where Google shows how many URLs it crawls per day. This data may or may not be accurate, but you won’t know until you look in your logs to see how many URLs Google actually crawls per day. Getting a daily crawl count is very easy:

- Choose a time period of 30 days (or 7 if you have a lot of data).

- Type the following query:

index={name of your index} googlebot | timechart span=1d count

Once the query completes, click the Statistics tab, and you will have the true number of pages crawled by Googlebot each day. For added fun, you can check out the Visualization tab to see how this changes over the searched time period.

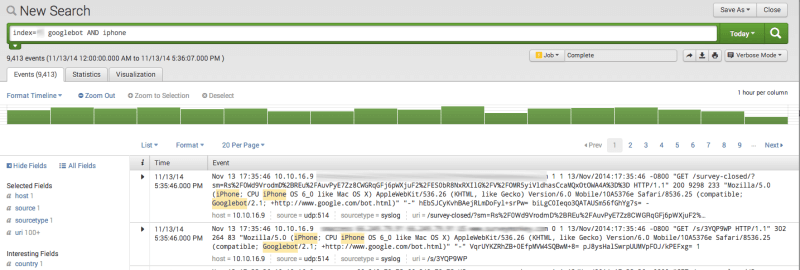

5. Calculate The Number Of Pages Crawled By Googlebot MOBILE Every Day

If you want to know how many URLs Google’s mobile bot crawls per day, there is no resource in Webmaster Tools to discover this information. The only place you can see this number is your access logs.

Interestingly, the smartphone Googlebot crawls the web as if it is an iPhone, so we just need to search for Googlebot and iPhone:

- Choose a time period of 30 days (or 7 if you have a lot of data).

- Type the following query:

index={name of your index} googlebot AND iphone | timechart span=1d count

Once the query completes, click the Statistics tab, and you will have the true number of pages crawled by the smartphone Googlebot each day. Just like in the earlier query, you can use the Visualization tab to see how crawl activity changes during a specific time period.
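Both daily-count queries (all Googlebot traffic and the smartphone variant) boil down to bucketing matching requests by calendar day. A minimal Python sketch of that logic follows, assuming Apache/Nginx "combined" format logs; `googlebot_requests_per_day` and its `also_contains` parameter are hypothetical names chosen for illustration.

```python
import re
from collections import Counter
from datetime import datetime

# Assumption: Apache/Nginx "combined" log format.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<uri>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_requests_per_day(lines, also_contains=""):
    """Count Googlebot requests per day, keyed by ISO date string.

    also_contains is an extra user-agent term (for example "iphone");
    the empty default matches every Googlebot request.
    """
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue
        agent = m["agent"].lower()
        if "googlebot" not in agent or also_contains.lower() not in agent:
            continue
        # The day is taken in the timezone offset recorded in the log line
        stamp = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
        counts[stamp.date().isoformat()] += 1
    return dict(sorted(counts.items()))
```

Like the Splunk queries, this counts requests, so a URL fetched twice in one day is counted twice. The extra term is a parameter because the device named in the smartphone Googlebot user agent may differ from the one the article describes.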

6. Find Rogue URLs Wasting Crawl Budget

As most SEOs (should) know, Google allots a crawl budget to each site based on its PageRank (not the visible one, but the real one only Google knows). If Googlebot wastes some of your valuable budget on URLs you don’t care about, it has less bandwidth to use on more important URLs.

Without knowing where Googlebot is spending time, you can’t know if your budget is being used effectively. Splunk can help you quickly discover all the URLs Googlebot is crawling, and then you will have the data to make a decision about what should be added to your robots.txt file:

- Choose your time period. This can be any amount of time, and you should keep trying different time periods to find problematic URLs.

- Type in the following query:

index={name of your index} googlebot uri_stem="*" | top limit=20 uri

You can set the limit to whatever you want, but 20 is a manageable number. Once the query completes, click the Statistics tab, and you will have a table showing the top URLs that Google is crawling. Now you can make a decision about any pages that should be removed, blocked in your robots.txt file, or noindexed on the page.
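Before adding a rule to robots.txt, it can help to test a draft against the URLs the query surfaced. The sketch below uses Python’s standard-library `urllib.robotparser` for this; the `blocked_for_googlebot` helper is a hypothetical name, and any rules and URLs you pass in are your own.

```python
from urllib.robotparser import RobotFileParser

def blocked_for_googlebot(robots_txt, urls):
    """Return the URLs that the draft robots.txt text would block for Googlebot."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]
```

For example, with a draft of `User-agent: *` followed by `Disallow: /search`, a URL such as `https://www.example.com/search?q=shoes` would be returned as blocked. The standard-library parser does simple prefix matching, so rules that rely on wildcards may be evaluated differently than Google evaluates them; treat the result as a first check only.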

7. Set Up An Alert For 500s By Googlebot

When a server is incapable of fulfilling an HTTP request, it will send back a 500 response to the requesting browser or service.

Frequent 500 errors have the potential to cause SEO issues since Google wants to show error-free sites in the results. Due to the impact on rankings, Google will send a message about outages to webmasters through Webmaster Tools; however, these notifications could take more than 24 hours to arrive.

Aside from the impact on rankings, frequent 500 errors provide a very poor experience to users, and this is an issue that you will likely want to address immediately. If you subscribe to Splunk’s Enterprise plan, you can set real-time alerts for 500 errors.

Here’s how you set up this alert:

-

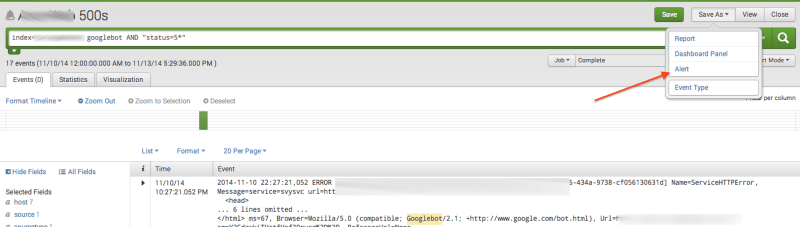

Search the following query:

index={name of your index} AND “status=5*”

-

Click “Save As” and select “Alert” in the dropdown menu.

-

Name your Alert.

-

Change the alert type to “Real Time.”

-

Click “Next.”

-

On the next screen, check the box that says “Send Email.”

-

Add your email into the box, and click “Save.”

Conclusion

I use Splunk in more than a dozen different ways to help me accomplish various SEO tasks, and these are just seven of my most common uses. Log file analysis will not only help you be the most efficient marketer possible, but it also teaches you a great deal about how search engines and websites work.

If you aren’t already including log file analysis efforts in your SEO, now is the time to start.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
