Google AdWords Geo-Targeting: Have We All Been Doing It Wrong?

It’s rare that a true challenge to a best practice (and a seemingly straightforward one at that) arises.

But when Marta Turek, Group Manager, Performance Media at Mediative, presented case studies at SMX Advanced this year showing what happened when the agency tested an opt-out approach against the traditional opt-in approach to geo-targeting in AdWords, jaws dropped. Eyes bulged. (Editor’s Note: Turek’s complete presentation is now embedded at the end of this piece; the author highlights key points throughout the article.)

I recently caught up with her to talk more about their findings. As often happens with light bulb moments, Mediative didn’t set out to change the way they geo-target. The agency was running a state-wide campaign in Colorado for a large client. When the client wanted to run a campaign targeting Denver users only, Mediative took Denver out of the state campaign and set up a campaign to target just the city of Denver in AdWords.

What Happened Next Stunned Them

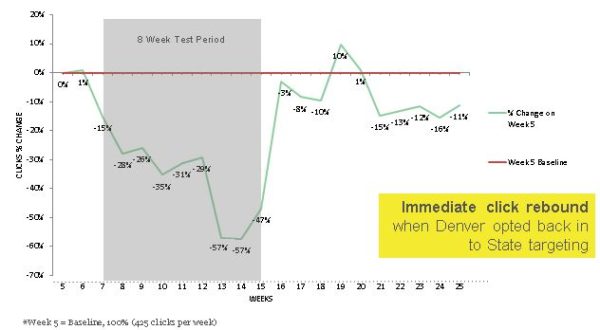

The team started to realize that after the change to a city-targeted campaign, impressions and clicks in Denver actually fell, and fell hard. Over the 8-week test period of targeting Denver directly, impressions fell 21 percent. Clicks dropped by 57 percent.

Here’s Turek’s graph showing what happened to clicks in the 8-week test period in which they ran the Denver-only campaign and what happened immediately after the team added Denver back into the state-wide campaign.

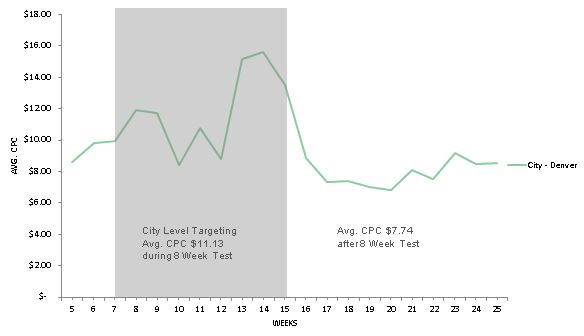

The impression and click drop-offs were startling, but the rise in cost-per-click (CPC) really surprised them. The client’s CPCs in Denver rose during the test period and then fell back by $3.39 (30 percent) almost immediately after Mediative added Denver back into the state-wide campaign. “We were just shocked at how much CPCs went up when we did that,” says Turek.
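For readers who want to sanity-check figures like these, here is a rough sketch of the arithmetic. The article gives only the drop ($3.39) and its relative size (30 percent), not the CPCs themselves, so the baseline below is an inference. It assumes the 30 percent is measured against the test-period CPC and ignores rounding in the published percentage. The function names are illustrative only.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100.0


def implied_baseline(absolute_drop: float, percent_drop: float) -> float:
    """Baseline value implied by an absolute drop and its relative size.

    Assumption: percent_drop is measured against the baseline itself.
    """
    if percent_drop == 0:
        raise ValueError("percent_drop must be non-zero")
    return absolute_drop / (percent_drop / 100.0)


# Figures reported in the article.
drop_usd = 3.39
drop_pct = 30.0

# Inferred, not reported: test-period CPC and CPC after Denver rejoined
# the state-wide campaign.
test_period_cpc = implied_baseline(drop_usd, drop_pct)
post_test_cpc = test_period_cpc - drop_usd

print(f"Implied test-period CPC: ${test_period_cpc:.2f}")
print(f"Implied CPC afterwards:  ${post_test_cpc:.2f}")
print(f"Change: {percent_change(test_period_cpc, post_test_cpc):.1f}%")
```

Under those assumptions the Denver-only CPC would have been in the neighborhood of $11, which gives a sense of scale for the swing Turek describes.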

What’s Going On Here?

The opt-in approach triggered a significant loss in volume and budget. “IP coverage is a huge issue and everyone knows and agrees with that factor,” Turek told me.

“There is also the fact of increasing your competition for a finite audience. We weren’t sure if there was an algorithmic system that says if you are narrowing your geographic targeting or adding other specifics about demos, you’re becoming more granular, essentially signaling to Google you are willing to pay more. Google says that’s not it at all,” said Turek.

Turek says two Google employees chatted with her after her talk, and one even told her he would recommend the opt-out approach to his clients.

A Second Test, Same Results

Mediative then tested the new approach with the same client in North Carolina, where the client wanted to target just two DMAs (designated market areas). Turek says after seeing what happened in the Denver campaign, “we realized they were going to lose budget, clicks and impressions. We wanted to protect budget and volume.” So they tested targeting the two DMAs directly versus running a state campaign and opting out of the non-target DMAs.
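The difference between the two setups can be sketched as a toy model. This is not how AdWords actually matches locations, and none of these class or field names come from AdWords. It is only one plausible reading of the IP coverage issue Turek mentions: a user whose location resolves to the state but not to a specific DMA. The "Other DMA" labels are hypothetical placeholders for the non-target DMAs.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class User:
    state: str
    dma: Optional[str]  # None when the location resolves only to state level


@dataclass(frozen=True)
class Campaign:
    included: FrozenSet[str]
    excluded: FrozenSet[str] = frozenset()

    def serves(self, user: User) -> bool:
        """Toy rule: exclusions win; otherwise any included region matches."""
        regions = {user.state}
        if user.dma is not None:
            regions.add(user.dma)
        if regions & self.excluded:
            return False
        return bool(regions & self.included)


TARGET_DMAS = frozenset({"Charlotte", "Raleigh-Durham"})
OTHER_DMAS = frozenset({"Other DMA 1", "Other DMA 2"})  # hypothetical

# Opt-in: include only the two target DMAs.
opt_in = Campaign(included=TARGET_DMAS)
# Opt-out: include the whole state, then exclude the non-target DMAs.
opt_out = Campaign(included=frozenset({"North Carolina"}), excluded=OTHER_DMAS)

users = [
    User("North Carolina", "Charlotte"),    # resolved to a target DMA
    User("North Carolina", "Other DMA 1"),  # resolved to a non-target DMA
    User("North Carolina", None),           # resolved to state level only
]

opt_in_reach = [opt_in.serves(u) for u in users]
opt_out_reach = [opt_out.serves(u) for u in users]
print("opt-in: ", opt_in_reach)
print("opt-out:", opt_out_reach)
```

In this model the two campaigns differ only for the third user: the opt-out campaign still reaches someone who cannot be placed in a DMA, while the opt-in campaign does not. Whether that is what drove Mediative's results is not established by the tests described here.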

They ran the test for 8 weeks. “We started seeing the efficiencies again with the opt-out approach,” said Turek.

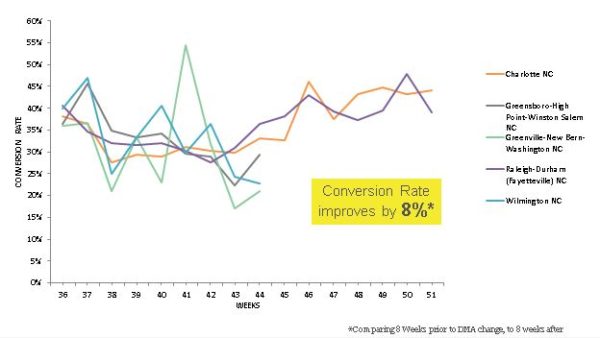

They were able to maintain traffic volume in the target DMAs in the opt-out test campaign. CPCs fell 12 percent. Better yet, conversions rose by 20 percent, and conversion rate rose 8 percent.

“Absolute conversions in target areas increased, and so we see increased account efficiency,” said Turek.

In the graph charting conversion rate changes below, you can see the rate rise in the two target DMAs (Charlotte in orange and Raleigh-Durham in purple) immediately after the opt-out campaign was implemented after week 44.

Moving Forward With A New Approach & More Testing

Mediative has adopted the opt-out approach as a standard practice and rolled out the strategy across all states the client is targeting. Turek says they had segmented this client’s account at the state level and then targeted DMAs. Now they have segmented the account by DMA and exclude at the zip code and city level.

“Next we’ll be testing with enhanced campaigns, so we will probably have new findings in a few months,” says Turek.

Marty Weintraub, Founder and Evangelist of aimClear, was a fellow panelist with Turek at the SMX Advanced session. He mentioned he would be testing this approach, so I followed up with him to get his thoughts. Weintraub wrote,

“We’ve seen case studies that replicated her results in small to mid-size crucibles. The smaller the data, the less predictable the results. The bigger the data, the bigger (seemingly) the effect.

We’re about to test Marta’s theories on one of the largest AdWords accounts in America, so the data’s not in. At scale, the implications are massive. This was one of the freshest ideas we’ve seen in recent memory, fitting for SMX Advanced. I could see numerous jaws drop to the floor from my position on the podium, even over the glare of SMX lights. Thanks to Marta for upholding our industry’s reputation for being magnanimous with such data.

Now it’s the same race we’ve seen so many times before…until Google takes note of the seam in the system and shuts it off. Cash in while you can, people.”

Time will tell if Google takes action or addresses this new approach in some way. (The fact that one of the Google representatives told Turek he’d recommend that approach to his clients is certainly interesting.)

In the meantime, enhanced campaigns are here either to add another wrinkle or iron them out, depending on your view. It will be fascinating to see more testing in this area.

It’s not every day a best practice gets shaken to its core.

Complete SMX Advanced Presentation By Marta Turek

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
