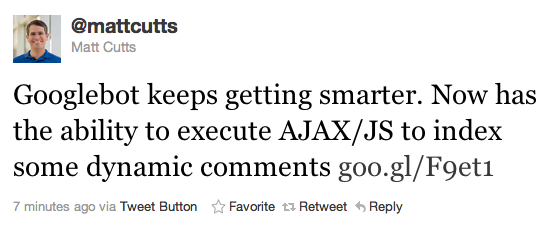

Google Can Now Execute AJAX & JavaScript For Indexing


Barry Schwartz on November 1, 2011 at 1:57 pm

This morning we reported that the comments on Facebook are being indexed by Google. Google’s Matt Cutts just confirmed on Twitter that Google is now able to “execute AJAX/JS to index some dynamic comments.”

This gives Google’s spider, Googlebot, the ability to read comments that are loaded dynamically via AJAX or JavaScript, such as Facebook comments, Disqus comments and others. It also means Google is better at seeing content that sits behind more of your JavaScript or AJAX.
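To see why dynamically loaded comments used to be invisible, consider a crawler that only reads the HTML the server sends. The sketch below is purely illustrative and is not how Googlebot works; Google has not published its rendering details. The page, the `/comments.json` endpoint, the comment data and the `indexable_text` helper are all hypothetical, and a simple string substitution stands in for actually executing the page's JavaScript.

```python
from html.parser import HTMLParser

# Hypothetical page: the comments container is empty in the served HTML and
# is filled in later by JavaScript (e.g. an AJAX call to a comments service).
RAW_HTML = """
<html><body>
  <h1>My post</h1>
  <p>Post body text.</p>
  <div id="comments"></div>
  <script>
    fetch('/comments.json').then(r => r.json()).then(list => {
      document.getElementById('comments').textContent = list.join(' ');
    });
  </script>
</body></html>
"""


class TextExtractor(HTMLParser):
    """Collects visible text chunks, skipping the contents of <script> tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # Ignore script source and whitespace-only text between tags.
        if not self._in_script and data.strip():
            self.chunks.append(data.strip())


def indexable_text(html: str) -> list[str]:
    """Hypothetical helper: the text a non-JS crawler could index."""
    parser = TextExtractor()
    parser.feed(html)
    return parser.chunks


# A crawler that reads only the served HTML sees no comments at all.
print(indexable_text(RAW_HTML))  # ['My post', 'Post body text.']

# Stand-in for running the script: inject the (hypothetical) comments into
# the DOM, which is roughly what a JS-executing crawler would then see.
comments = ["Great post!", "Thanks for sharing."]
rendered = RAW_HTML.replace(
    '<div id="comments"></div>',
    '<div id="comments">' + " ".join(comments) + "</div>",
)
print(indexable_text(rendered))  # now includes the comment text
```

The first call returns only the post's own text. The comments appear only after the script's effect is applied, which is the gap that executing AJAX/JS closes for a crawler.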

Postscript: Google now has an official blog post up with more details.

Related Stories:

- Google Offers A Proposal To Make AJAX Crawlable

- Google Offers SEO Advice On AJAX Coding

- Google May Be Crawling AJAX Now – How To Best Take Advantage Of It

- It’s Official: Google’s Proposal For Crawling AJAX URLs is Live

- An Update On Javascript Menus And SEO

- Google Now Crawling And Indexing Flash Content
