Google Signals Upcoming Algorithm Change, Asks For Help With Scraper Sites

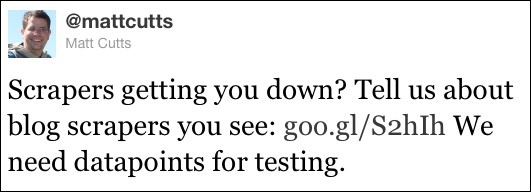

Google is calling for help in identifying a long-running problem: scraper sites in its search results, particularly scraper sites that rank higher than the original page. Matt Cutts, the head of Google’s spam-fighting team, put out the call for help on Twitter this morning:

Scrapers getting you down? Tell us about blog scrapers you see: https://goo.gl/S2hIh We need datapoints for testing.

The link leads to a Google Doc form that asks for the exact query where there’s a “scraping problem,” along with the exact URLs of the original and scraper pages. The form explains that Google “may use data you submit to test and improve our algorithms.”

It’s not entirely unusual for Google to call for help like this, but this request is noteworthy because scraper sites have been a particularly prominent issue in recent months.

Google vs. Scraper Sites

Google has always had critics, but within the last year many of them grew more vocal about what they perceived as a decline in the quality of Google’s search results. Google responded early this year with a blog post in which it disagreed that search results had grown worse, but also promised “new efforts” to fight spam. Among other types of spam, that post called out scraper sites:

And we’re evaluating multiple changes that should help drive spam levels even lower, including one change that primarily affects sites that copy others’ content and sites with low levels of original content.

Cutts then announced the algorithm change on his own blog a week later, saying that “slightly over 2% of queries change in some way” after the update. He added that “searchers are more likely to see the sites that wrote the original content rather than a site that scraped or copied the original site’s content.”

Panda Update vs. Scraper Sites

But the scraper problem didn’t go away. In fact, after the Panda update rolled out in February, many webmasters flooded Google’s help forums with reports that the problem had gotten worse.

A few months later, during our SMX Advanced conference in Seattle, Cutts confirmed that the newest Panda update would target scraper sites. That update — Panda 2.2 if you’re scoring at home — rolled out in mid-June.

And that pretty much brings us up to today — where Google is “testing algorithmic changes for scraper sites (especially blog scrapers),” and apparently looking for some examples that it thinks it may have missed. If nothing else, it’s a chance for everyone who’s been so vocal about the scraper site problem to report exactly what they’re seeing in Google’s search results.