How Google Instant’s Autocomplete Suggestions Work

It’s a well known feature of Google. Start typing in a search, and Google offers suggestions before you’ve even finished typing. But how does Google come up with those suggestions? When does Google remove some suggestions? When does Google decide not to interfere? Come along for some answers.

Google & Search Suggestions

Google was not the first search engine to offer search suggestions, nor is it the only one. But being the most popular search engine has caused many to look at Google’s suggestions more closely.

Google has been offering “Google Suggest” or “Autocomplete” on the Google web site since 2008 (and as an experimental feature since 2004). So suggestions, or “predictions” as Google calls them, aren’t new.

What Google suggests for searches gained new attention after Google Instant Search was launched last year. Google Instant is a feature that automatically loads results and changes those results. That interactivity caused many to take a second look at suggestions, including an attempt to list all blocked suggestions.

Suggestions Based On Real Searches

The suggestions that Google offers all come from how people actually search. For example, type in the word “coupons,” and Google suggests:

- coupons for walmart

- coupons online

- coupons for target

- coupons for knotts scary farm

These are all real searches that have been done by other people. Popularity is a factor in what Google shows. If lots of people who start typing in “coupons” then go on to type “coupons for walmart,” that can help make “coupons for walmart” appear as a suggestion.

Google says other factors are also used to determine what to show beyond popularity. However, anything that’s suggested comes from real search activity by Google users, the company says.
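To make the popularity idea concrete, here is a minimal sketch that counts logged queries and returns the most frequent ones beginning with what has been typed so far. It is an illustration only, not Google’s method: the `suggest` function, the query log and the counts are all hypothetical, and the other factors Google mentions are left out.

```python
from collections import Counter

def suggest(prefix, query_log, limit=4):
    """Return the most frequently logged queries that start with prefix."""
    counts = Counter(q.strip().lower() for q in query_log)
    prefix = prefix.strip().lower()
    # Keep only longer queries that extend what the searcher has typed.
    matches = [(q, n) for q, n in counts.items()
               if q.startswith(prefix) and q != prefix]
    # Most popular first; ties broken alphabetically for stable output.
    matches.sort(key=lambda item: (-item[1], item[0]))
    return [q for q, _ in matches[:limit]]

# Hypothetical log: each entry stands for one search someone ran.
log = (["coupons for walmart"] * 5 + ["coupons online"] * 3
       + ["coupons for target"] * 2 + ["coupon codes"])
top = suggest("coupons", log)
```

With this made-up log, “coupons for walmart” comes first simply because it was searched most often.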

Suggestions Can Vary By Region & Language

Not everyone sees the same suggestions. For example, above in the list is “coupons for knotts scary farm.” I see that, because I live near the Knott’s Berry Farm amusement park in Orange County, California, which holds a popular “Knott’s Scary Farm” event each year.

If I manually change my location to tell Google that I’m in Des Moines, Iowa, that particular suggestion goes away and is replaced by “coupons for best buy.”

Similarly, if I go to Google UK, I get suggestions like:

- coupons uk

- coupons and vouchers

- coupons for tesco

Tesco is a major UK supermarket chain, just one reflection of how localized those suggestions are.

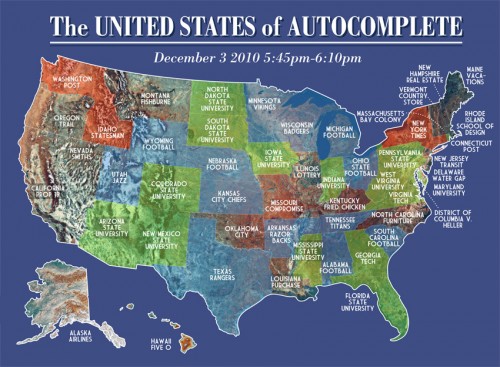

This is also why something like the Google Instant Alphabet or The United States of Autocomplete (shown below) — while clever — aren’t accurate and never can be, unless you’re talking about the suggestions shown in a particular region.

In short, location is important. The country you’re in, the state or province, even the city, all can produce different suggestions.

Language also has an impact. Different suggestions will appear if you’ve told Google that you prefer to search in a particular language, or based on the language Google assumes you use, as determined by your browser’s settings.

Previously Searched Suggestions

Google’s suggestions may also contain things you’ve searched for before, if you make use of Google’s web history feature.

- rollerblade parts

- Rollerblade 2009 Speedmachine 110

- rollerblades

- rollerblade wheels

- rollerblade

The first two come from my search history. That’s why they have the little “Remove” option next to them.

Personalized suggestions like these have been offered since May 2009. The only change with Google Instant was that they were made to look different, shown in purple similar to how links look at some web sites, to indicate if you’ve clicked on them before.

How Suggestions Are Ranked

How are the suggestions shown ranked? Are the more popular searches listed above others? No.

Popularity is a factor, but some less popular searches might be shown above more popular ones, if Google deems them more relevant, the company says. Personalized searches will always come before others.
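The ordering described here can be sketched as a two-part sort: anything from the searcher’s own history goes first, and the rest is ordered by a relevance score rather than raw popularity. The scores below are invented, and how Google actually computes relevance isn’t public, so treat `rank` as a hypothetical helper.

```python
def rank(candidates, history):
    """Order (query, relevance) pairs: personalized first, then by relevance."""
    ordered = sorted(
        candidates,
        # False sorts before True, so queries found in history lead the list.
        key=lambda c: (c[0] not in history, -c[1]),
    )
    return [query for query, _ in ordered]

# Hypothetical relevance scores and search history.
candidates = [("rollerblades", 0.9), ("rollerblade parts", 0.2),
              ("rollerblade wheels", 0.7)]
ranked = rank(candidates, history={"rollerblade parts"})
```

Here “rollerblade parts” leads the list despite its low score, because it came from the searcher’s history.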

Deduplicating & Spelling Corrections

For example, if some people are typing in “LadyGaga” as a single word, all those searches still influence “Lady Gaga” being suggested — and suggested as two words.

Similarly, words that should have punctuation can get consolidated. Type “ben and je…” and it will be “ben and jerry’s” that gets suggested, even if many people leave off the apostrophe.
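One way to picture this consolidation is to fold every variant down to a shared key, pool the counts, and then display a preferred spelling. This is a rough sketch under assumptions: the `fold` rule and the `canonical` lookup table are hypothetical, and Google hasn’t said how it picks the spelling it shows.

```python
import re
from collections import Counter, defaultdict

def fold(query):
    """Collapse case, spacing and punctuation so variants share one key."""
    return re.sub(r"[^a-z0-9]", "", query.lower())

def pool_variants(query_log, canonical):
    """Sum counts across variants and report them under one display form."""
    totals = Counter()
    variants = defaultdict(Counter)
    for q in query_log:
        key = fold(q)
        totals[key] += 1
        variants[key][q] += 1
    pooled = {}
    for key, total in totals.items():
        # Prefer a known spelling; otherwise fall back to the commonest variant.
        display = canonical.get(key) or variants[key].most_common(1)[0][0]
        pooled[display] = total
    return pooled

# Hypothetical table of preferred spellings and a made-up query log.
canonical = {"ladygaga": "lady gaga", "benandjerrys": "ben and jerry's"}
log = (["LadyGaga"] * 3 + ["lady gaga"] * 2
       + ["ben and jerrys"] * 4 + ["ben and jerry's"])
pooled = pool_variants(log, canonical)
```

In this example the apostrophe version is displayed even though most of the made-up searches left it off.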

Freshness Matters

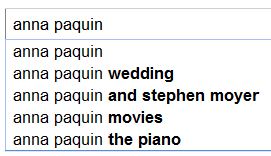

A good example of this was when actress Anna Paquin was married. “Anna Paquin wedding” started appearing as a suggestion just before her big day, Google says. That was useful to suggest, because many people were starting to search for that.

If Google had relied solely on long-term data, then the suggestion wouldn’t have made it. And today, it no longer appears, as it didn’t maintain long-term popularity (though “anna paquin married” has stuck).

How short-term is short-term? Google won’t get into specifics. But suggestions have been spotted appearing within hours after some search trend has taken off.
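Since Google won’t share specifics, the following is only a guess at the general shape of a freshness boost: a score that adds heavily weighted recent searches to long-term totals. The weight of 10 and all of the counts are assumptions made up for illustration.

```python
def blended_score(long_term, recent, recent_weight=10.0):
    """Combine long-term volume with a boosted count of recent searches."""
    return long_term + recent_weight * recent

def rank_by_freshness(stats, recent_weight=10.0):
    """Order queries by blended score, highest first."""
    return sorted(
        stats,
        key=lambda q: -blended_score(*stats[q], recent_weight),
    )

# Hypothetical (long-term count, recent count) pairs.
stats = {
    "anna paquin movies": (900, 5),
    "anna paquin wedding": (20, 120),
}
with_boost = rank_by_freshness(stats)
without_boost = rank_by_freshness(stats, recent_weight=0.0)
```

With the boost, the spiking query outranks the long-term favorite; with the weight set to zero, it falls back behind it, which mirrors how a suggestion can appear and later drop away.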

Why & How Suggestions Get Removed

As I said earlier, Google’s predictions have been offered for years, but when they were coupled with Google Instant, that sparked a renewed interest in what was suggested and what wasn’t. Were things being removed?

Yes, and for these specific reasons, Google says:

- Hate or violence related suggestions

- Personally identifiable information in suggestions

- Porn & adult-content related suggestions

- Legally mandated removals

- Piracy-related suggestions

Automated filters may be used to block any suggestion that’s against Google’s policies and guidelines from appearing, the company says. For example, the filters work to keep things that seem like phone numbers and social security numbers from showing up.

Since the filters aren’t perfect, some suggestions may get kicked over for a human review, Google says.
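As a rough illustration of what such an automated filter might look for, the sketch below flags text shaped like a US social security number or phone number. The patterns are simplified assumptions for this example, not Google’s actual rules, and like any filter of this kind they will miss some cases.

```python
import re

# Simplified, US-centric patterns chosen for illustration only.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
PHONE = re.compile(
    r"(?<!\d)(?:\(\d{3}\)\s*|\d{3}[-.\s])\d{3}[-.\s]\d{4}(?!\d)"
)

def looks_like_pii(suggestion):
    """Return True if the text contains an SSN-like or phone-like number."""
    return bool(SSN.search(suggestion) or PHONE.search(suggestion))

def filter_suggestions(suggestions):
    """Drop any suggestion that trips the patterns above."""
    return [s for s in suggestions if not looks_like_pii(s)]

# Made-up examples.
kept = filter_suggestions([
    "coupons for walmart",
    "john smith 123-45-6789",
    "call (555) 867-5309",
])
```

Only the first suggestion survives here. A suggestion that a pattern can’t confidently classify is the kind of thing that might be passed along for human review.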

Hate Speech & Protected Groups

For example, “i hate my mom” and “i hate my dad” are both suggestions that come up if you type in “i hate my.” Similarly, “hate gl” brings up both “hate glee” and “hate glenn beck.”

Instead, hate suggestions are removed if they are against a “protected” group. So what’s a protected group?

- race or ethnic origin

- color

- national origin

- religion

- disability

- sex

- age

- veteran status

- sexual orientation or gender identity

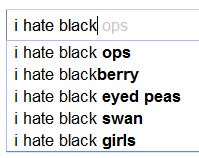

Even “majority” groups such as whites get covered by this, under the “color” category. That seems to be why “i hate white” doesn’t prompt a suggestion for “i hate whites,” just as “i hate black” doesn’t suggest “i hate blacks.”

However, in both cases, other hate references do get through (“i hate white girls” and “i hate black girls” both appear). This is where a human review may happen, if the reference is noticed.

Legal Cases & Removals

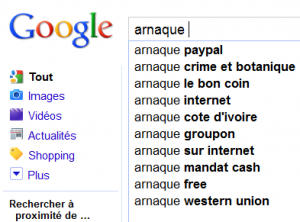

Google blocks some suggestions for legal reasons. For example, last year, Google lost two cases in France involving Google Autocomplete.

Google appears to have done this, when I checked today. Google would not say if it is appealing the case or whether this applies to preventing the word “arnaque” from appearing next to any company’s name.

From some limited testing, I think Google is preventing “arnaque” from appearing after any company name but not before (“arnaque paypay” and “arnaque groupon” are suggestions).

In the second French autocomplete case, a plaintiff whose conviction was on appeal sued and won a symbolic 1 euro payment in damages over having the words “rapist” and “satanist” appear next to his name.

The plaintiff’s name wasn’t given in the case, so I can’t check that the terms were removed as ordered. Last year, Google said it would appeal the ruling. The company gave me no update on things when I asked for this article. It doesn’t seem likely that this has caused Google to drop having such terms appear next to the names of other people.

Yesterday, news broke about Google losing a case in Italy involving suggestions. Here, a man sued over having the Italian words for conman and fraud appearing next to his name.

I can’t check if Google has complied with the ruling, because the man’s name was never given — nor does his lawyer make clear if Google has complied. It’s also unclear if this ruling is causing such terms to be dropped in relation to anyone’s name (this seems unlikely).

I asked Google about this but was only given a standard statement:

We are disappointed with the decision from the Court of Milan. We believe that Google should not be held liable for terms that appear in Autocomplete as these are predicted by computer algorithms based on searches from previous users, not by Google itself. We are currently reviewing our options.

In the US, Google won a case last month waged by a woman unhappy that the words “levitra” and “cialis” appeared near her name. That case largely involved arguments about commercial infringement, rather than taking a libel stance.

Postscript: Sean Carlos of Antezeta has more on the Milan case here.

Controversial Cases

Google tells me it doesn’t typically comment more in these cases, because it doesn’t want to be in the position of having to issue a detailed response for any oddity that someone spots. Still, Google did open up about two examples of strange suggestions that have come up in the past.

Blame that aforementioned freshness layer, says Google. Back when this all happened, the freshness layer had a gap that allowed spiking queries to appear for a short period of time, then disappear unless they gained more long term popularity.

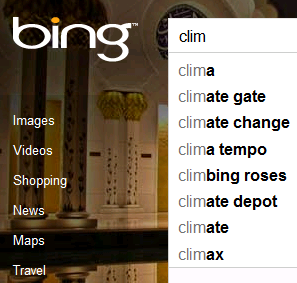

That gap has since been reduced. Spiking queries stay around longer, then drop unless they gain long-term traction. The “climategate” suggestion didn’t catch on and so disappeared. It wasn’t manually removed, as some assumed, Google said.

Interestingly, looking today, “climategate” still hasn’t gained enough long term popularity to come up as a suggestion at Google. But over at Bing — which, of course, uses its own unique suggestion system — it is offered.

As it turned out, there was a human error involved, Google told me.

But in fact, Google Autocomplete does not consider religions to be protected groups (I’ll get back to this). So other religions didn’t have a filter established for them.

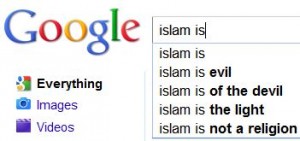

Today, “islam is” brings back some negative suggestions, just as is the case with other religions.

Nationalities Briefly Protected; Religions Not

Remember when I listed what a protected group was, according to Google, above? That included religions, but that’s the definition that Google AdWords uses, not Google Autocomplete.

Similarly, Google’s YouTube has its own definition of protected groups:

Protected groups include race or ethnic origin, religion, disability, gender, age, veteran status, and sexual orientation/gender identity.

National origin isn’t on that list. Indeed, it wasn’t on the unpublished list that Google Autocomplete uses until last May, when Google began to filter suggestions related to nationality. Search for “americans are,” for example, and you got nothing.

Google gave me this statement on the topic (the brackets aren’t me removing words but instead how Google indicates a search term):

Simply put, nationalities refer to individuals, religions do not. Our hate policy is designed to remove content aimed at specific groups of individuals. So [islamics are] and [jews are] or [whites are] would possibly be filtered, while queries such as [islam is] and [judaism is] would not because the suggestions are directed at other entities, not people.

Sorry, I’m not convinced by this. Worse, when I did some double-checking today, the previously established nationality filter — which the statement defends — appears to be turned off. Yes, Americans are again fat, lazy and ignorant, as Google’s “predictions” suggest, and the French are lazy cowards.

Can You Request Removals?

As you can imagine, some people would like to have negative suggestions removed. However, as explained, Google only does this in very specific instances. The company doesn’t even have a form to request this (though there is a help page on the topic, that suggests leaving comments in Google’s support forums).

Should businesses be allowed to request removal of suggestions? It’s not something that Google wants to arbitrate. Jonathan Effrat, a product manager at Google who works on Google Instant, told me:

Unfortunately, we won’t do a removal in those situations. A lot of times people are searching for it, and there’s a legitimate reason. I had a friend who used to work for a company, and the company name plus “sucks” was a suggestion, and that was the reality. It’s not really our place to say you shouldn’t be searching for that.

There are signs that Google has been pulling back from suggesting “scam” along with company names, but despite these reports, you can still find examples where this happens. Google hasn’t commented on whether it has actually made any change like this, by the way.

What About Piracy?

That took out — and continues to take out — suggestions for some sites that may also be used for legitimate reasons. To be clear, suggestions were removed, not the sites themselves.

Want to read the Wikileaks files directly? BitTorrent or uTorrent have software that will allow you to do this. But today, Google won’t auto-suggest their names as you begin to type, deeming them too piracy related.

Aside from taking out some potentially innocent parties, the whole thing feels kind of hypocritical. Why does Google feel it needs to go above and beyond to protect searchers from piracy-related suggestions when there is a range of other potentially harmful ones out there?

The answer, in my view, is that this is a PR battle Google wants to win as studios and networks accuse it of supporting piracy and seek to enlist the aid of the US Congress. Dropping piracy suggestions is an easy gift, especially when Google’s not proactively removing the real issue, sites that host pirated content in its own results. It’s also a gift that might help it get network blocking of Google TV lifted.

And Fake Queries?

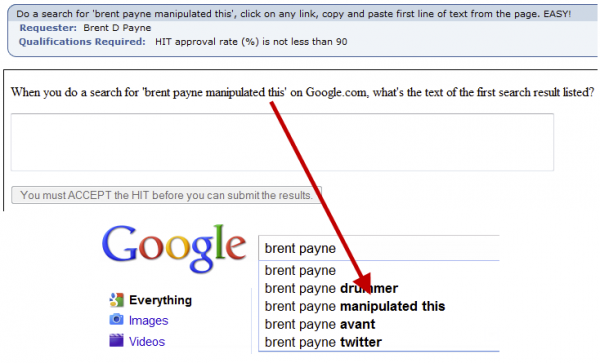

Meanwhile, another issue has gained fresh attention — the ability for people to “manufacture” suggestions. In particular, Amazon’s Mechanical Turk is a well-known venue where people can request that others do searches. When enough searches happen, then suggestions start appearing.

Brent Payne is probably one of the most notable examples of someone deliberately doing this “above the radar,” so to speak. He ran a series of experiments where he hired people on Mechanical Turk to do searches, which (until Google removed them) caused suggestions to appear.

Tempted to try it? Aside from potentially violating Mechanical Turk’s terms, Google says doing so is something it deems spam and will take corrective action against, if spotted.

What action? So far, that seems to be limited to removing the manufactured suggestions.

Postscript: Payne’s study has apparently gone away, but another study done in March 2012 showed that using Mechanical Turk can still have an impact.

A Suggestion For Google’s Suggestions

As I said, Google Instant prompted renewed attention about Google’s suggestions — along with debate about whether Google should be offering suggestions at all, given the reputation nightmare they can bring to some companies and individuals, as well as offense they bring to other groups. On the flipside, there’s the usefulness of them.

Here’s a case that illustrates the balancing act. Last year, a skydiving company contacted me, concerned that searches for its name brought up a suggestion of its name plus the words “death” or “accident.” Yes, the company had someone who died in a jump.

That’s something harmful to the company, even if true. Skydiving is by its nature an extremely dangerous sport, and the suggestion gives no guidance about whether the company was somehow at fault. It just immediately suggests there’s something wrong with the company.

However, it’s also incredibly useful for searchers, as a way for them to refine their queries in ways they might not expect.

This is a suggestion for all the major search engines, by the way. Enough singling out Google, when these types of examples can be found easily on Bing and Yahoo, also.

If there are negative things that people want to discover about a person, company or group, those will come out in the search results themselves, and mixed in with more context overall — good, bad or perhaps indifferent.

Yes, many Americans know they’re stereotypically seen as fat. Other nationalities and religious groups also know that there are many hurtful stereotypes about them. But who wants Google seeming to tell them that?

Yes, Google’s correct in saying that the suggestions it shows reflect what many people are searching for — and thus think.

Still, parroting harmful thoughts “searched” by others doesn’t make those things any less hurtful or harmful. And by repeating these things, there’s an argument that search engines simply make the situation worse.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
