6 Ways To Shatter The Ceiling With An Enterprise Site Quality Audit


Enterprise SEO has always emphasized visibility, authority and relevance. If you’re optimizing a site with more than 10,000 pages, you now need a streamlined way to audit site quality.

Quality matters, and it matters even more on large sites, because you can repeat the same issues so many times. That drags down your entire site.

Here’s what I include in any large site quality audit:

1. Check For Busted Links

Well, duh. Hopefully I don’t have to write about this.

Do make sure you’re checking both onsite and outbound links, though.
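If your crawler doesn't already report broken links, here's a minimal Python sketch of the idea using only the standard library. It pulls every `<a href>` from a page, splits the links into onsite and outbound lists by comparing hostnames, and treats a failed request or a 4xx/5xx status as busted. The function names are hypothetical, and the sketch assumes `www.example.com` and `example.com` are different hosts. Some servers also reject HEAD requests, so a real audit may need a GET fallback.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin, urlparse
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def split_links(page_url, html):
    """Resolve links against page_url and split them into (onsite, outbound) lists."""
    parser = LinkCollector()
    parser.feed(html)
    site_host = urlparse(page_url).netloc
    onsite, outbound = [], []
    for href in parser.hrefs:
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        (onsite if parsed.netloc == site_host else outbound).append(absolute)
    return onsite, outbound


def is_broken(url, timeout=10):
    """Return True if a HEAD request errors out or comes back 4xx/5xx."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as resp:
            return resp.status >= 400
    except HTTPError as err:  # raised for 4xx/5xx responses
        return err.code >= 400
    except OSError:  # DNS failures, refused connections, timeouts
        return True
```

On a large site the same links repeat across thousands of pages, so you'd collect the unique URLs first and run `is_broken` once per URL.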

2. Check For Duplicate Content

Again, duh. You can use Google to do this with a site: search, or use a tool like Screaming Frog. Distilled has a great tutorial on analyzing crawl data in Excel that gives you everything you need.
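Exact duplicates are the easy case. Assuming you've exported each URL's body text from your crawler into a dictionary, a sketch like this groups pages whose text is identical after lowercasing and collapsing whitespace. It only catches exact copies; near-duplicates are a separate problem (see section 6).

```python
import hashlib
import re
from collections import defaultdict


def normalize(text):
    """Lowercase and collapse whitespace so trivial formatting differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_exact_duplicates(pages):
    """Group URLs whose normalized body text is identical.

    pages: dict mapping URL -> extracted body text (assumed crawler export).
    Returns a list of URL groups with two or more members.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```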

3. Look For Typos

If you can scrape site pages or get an export of your site’s content, run them through a grammar/spelling error checker, like After The Deadline.

I don’t necessarily trust this kind of automated stuff, but no one’s going to proofread 4,000 pages about server software. Automated is better than nothing, and we know for certain Google quality raters look at this stuff. It makes sense that Google would do the same thing algorithmically.
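This sketch does not use After the Deadline. It's a deliberately crude stand-in rather than a grammar checker: it flags any word missing from a known-word list and suggests close matches with Python's `difflib`. The `dictionary` argument is an assumption; you'd load a real word list and add your product names and jargon, or every SKU will show up as a "typo."

```python
import difflib
import re


def find_possible_typos(text, dictionary, max_suggestions=3):
    """Flag words not in `dictionary` (a set of lowercase words) and suggest close matches.

    Returns a dict mapping each unknown word to a list of suggestions (possibly empty).
    """
    known = sorted(dictionary)
    flagged = {}
    for word in re.findall(r"[A-Za-z']+", text):
        lower = word.lower().strip("'")
        if lower and lower not in dictionary and lower not in flagged:
            flagged[lower] = difflib.get_close_matches(lower, known, n=max_suggestions)
    return flagged
```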

4. Check Reading Grade Level

I know: Grade level? WTH? Bear with me.

The reading grade level itself doesn’t matter. But huge fluctuations in grade level from one page to the next may hint at:

- Near-duplicate content: Writers stuck rewriting existing content often raise or lower its grade level.

- Writing quality problems: If one page is written at a graduate level, and the next is written at a 7th-grade level, someone may have caught thesaurus-itis or thrown in a lot of run-on sentences.

Again, if you can scrape site pages or get a data ‘dump’ of all content, you can run them through a grade level assessment. There are lots of code snippets out there that’ll let you do it, or you can learn to calculate Flesch-Kincaid readability on your own.
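The Flesch-Kincaid grade level is 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) - 15.59. Here's a rough Python sketch that computes it per page and flags pages far from the site median. The syllable counter is a vowel-group heuristic, the sentence splitter will stumble on abbreviations and decimals, and the four-grade `tolerance` is an arbitrary assumption, so treat the output as a list of pages to eyeball, not a verdict.

```python
import re
import statistics


def count_syllables(word):
    """Rough heuristic: count vowel groups, drop a silent trailing 'e', minimum of one."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level using naive sentence and word splitting."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


def grade_outliers(pages, tolerance=4.0):
    """Return URLs whose grade level is more than `tolerance` grades from the site median."""
    grades = {url: flesch_kincaid_grade(text) for url, text in pages.items()}
    median = statistics.median(list(grades.values()))
    return sorted(url for url, g in grades.items() if abs(g - median) > tolerance)
```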

5. Assess Purpose

One of the top issues Google calls out in their quality rater guidelines is ‘purpose.’ Pages with ‘clear purpose’ get higher ratings than pages with a lack of purpose.

That doesn’t mean you should have each page start with “The purpose of this page is…”

It does mean that every page should have a clear reason for existence. Which makes you groan, I bet: exactly how are you going to check 150,000 pages for clarity of purpose?

- First, do it in small chunks, and start with pages that are already generating organic traffic, but could rank higher for their terms.

- Second, keep track of pages you’ve checked, so you don’t have to duplicate effort.

- Third, use your crawler! Export title tags and headings. Pop them all into a spreadsheet and zip down the list. If the title/heading tells you exactly why you’d want to read that page, sweet! You’re all set. If the title/heading reads like Shakespeare did when you were 13, you need to check that page.

- Fourth, look at bounce rates. Pages with bounce rates far above your site average may be unclear.
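Here's a sketch of that triage, assuming a hypothetical CSV export that joins your crawler's titles and H1s with analytics data (columns `url`, `title`, `h1`, `organic_sessions`, `bounce_rate`, with bounce rate as a fraction). It skips pages you've already checked and pages with no organic traffic, flags pages whose bounce rate exceeds the site average by `factor` (1.5 is an arbitrary starting point), and lists the highest-traffic pages first so you can read down titles and headings in that order.

```python
import csv
import statistics


def load_rows(path):
    """Read the (hypothetical) joined crawler + analytics export into a list of dicts."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def pages_to_review(rows, checked, factor=1.5):
    """Return (url, title, h1) for unchecked pages that need a purpose review.

    A page qualifies if it already gets organic traffic and its bounce rate is
    more than `factor` times the site average. Highest-traffic pages come first.
    """
    if not rows:
        return []
    average = statistics.mean([float(r["bounce_rate"]) for r in rows])
    candidates = [
        r for r in rows
        if r["url"] not in checked                      # don't duplicate effort
        and int(r["organic_sessions"]) > 0              # already earning traffic
        and float(r["bounce_rate"]) > average * factor  # far above site average
    ]
    candidates.sort(key=lambda r: int(r["organic_sessions"]), reverse=True)
    return [(r["url"], r["title"], r["h1"]) for r in candidates]
```

Add each URL to `checked` (or a "reviewed" column in the spreadsheet) as you go, so the next pass only surfaces new pages.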

6. Look For Near-Duplicate Content

You know that duplicate content is bad. You know that the best solution is to fix it. What you may not know is that near-duplication is bad, too.

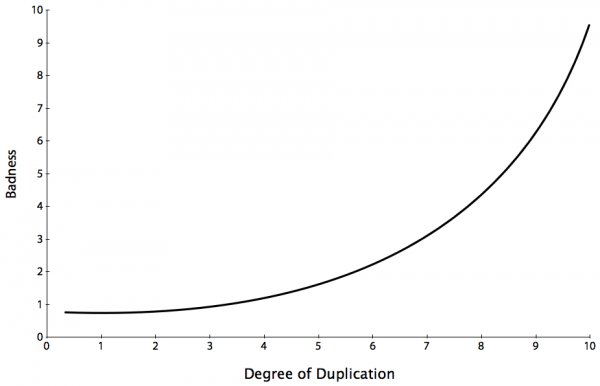

Please do not ask me ‘how much duplication is ok?’ It makes me want to use vise-grips on my own brain. Assume near-duplication is a scale, like this:

So more is worse. Less is better.
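One common way to put a pair of pages on that scale is w-shingling: break each page into overlapping five-word sequences and compute the Jaccard similarity of the two sets (shared shingles ÷ all distinct shingles). This is a swapped-in technique, not something Google has confirmed it uses, and the five-word shingle size is an assumption. It still won't tell you how much duplication is ok; it just shows which pairs sit further up the scale.

```python
import re


def shingles(text, size=5):
    """Set of overlapping `size`-word sequences (w-shingles) from the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def near_duplicate_score(text_a, text_b, size=5):
    """Jaccard similarity of two pages' shingle sets: 0.0 = nothing shared, 1.0 = identical."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)
```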

Find duped supplemental content

The easiest first step for reducing near-dupes: Remove supplemental content. Disclaimers, specifications shared by huge numbers of products and author biographies can all be linked instead of placed on every relevant page.

If you suspect a chunk of supplemental content is duplicated throughout your site:

- Copy a sentence or three from that content.

- Paste it into a text editor.

- Put quotes around it.

- Add site:www.yoursite.com, followed by a space, before the quoted phrase.

- Paste the whole thing into Google.

The search result should show all pages on your site that have that same phrase. If dozens of pages share content, think about moving the shared stuff to a single, central location and linking instead.
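If you're checking a lot of phrases, it helps to build these queries in bulk. This sketch assembles the same `site:` query from the steps above plus a Google search URL. `pages_containing` is a hypothetical local cross-check against your own crawl export, which is handy because `site:` results may not be complete.

```python
from urllib.parse import quote_plus


def site_phrase_query(domain, phrase):
    """Return the site:-restricted exact-phrase query and a Google search URL for it."""
    query = f'site:{domain} "{phrase.strip()}"'
    return query, "https://www.google.com/search?q=" + quote_plus(query)


def pages_containing(pages, phrase):
    """Local cross-check: URLs in a crawl export (URL -> text) whose text contains the phrase."""
    needle = " ".join(phrase.lower().split())
    return sorted(url for url, text in pages.items()
                  if needle in " ".join(text.lower().split()))
```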

Find rewrites

Ah, the good old days, when men were men and you could spin 250 words into 10 different articles, and Google would rate them all as unique. Them days are gone, sparky. Time to deal with it.

There aren’t any super-easy ways to find rewritten content, but you can automate it. At Portent, we try to save our sanity by:

- Scraping large numbers of site pages.

- Putting the content into a monster database.

- Crunching the text, looking for actual duplicate phrases, sentences and paragraphs (that’s the easy stuff).

- If it’s really dire, we’ll use a bit of natural language processing to look for structural and semantic matches: If five pages all talk about potatoes, then french fries, then catsup, and then how to mix them up, it’s worth checking (I’m blogging hungry, sorry).
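The "easy stuff" step can be sketched in a few lines of Python. This is not Portent's actual tooling: it swaps the monster database for an in-memory dictionary, uses a naive regex sentence splitter, and ignores sentences under six words (an arbitrary cutoff) to skip filler like "Read more." It doesn't attempt the NLP structural matching.

```python
import re
from collections import defaultdict


def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def shared_sentences(pages, min_words=6):
    """Map each normalized sentence found on 2+ pages to the sorted URLs containing it.

    pages: dict mapping URL -> scraped page text.
    Sentences shorter than `min_words` words are ignored to cut noise.
    """
    index = defaultdict(set)
    for url, text in pages.items():
        for sentence in split_sentences(text):
            words = re.findall(r"[a-z0-9']+", sentence.lower())
            if len(words) >= min_words:
                index[" ".join(words)].add(url)
    return {s: sorted(urls) for s, urls in index.items() if len(urls) > 1}
```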

This can be a lot of work. But we’ve seen improvement in indexation and content visibility with a determined effort at near-duplicate reduction.

Quality Matters

In the last year, I’ve reviewed and audited a few dozen sites of 10,000 or more pages. On some, recommendations led to higher rankings. On others, they didn’t. All were large sites with high visibility, few technical problems and no penalties.

The one difference between them: Content quality.

In every case, the sites had at least 2 of the following problems:

- Thousands of pages of rewritten content;

- Hundreds of pages of duplicate content;

- Really bad writing;

- Utterly purposeless writing, clearly keyword-stuffed and placed on pages for rankings.

Quality matters. Tackle it and you can shatter the ceiling on your organic search traffic.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
