How to check which URLs have been indexed without upsetting Google: A follow-up

How can we determine which of our site pages aren't indexed without running afoul of Google's guidelines? Columnist Paul Shapiro shares his methods.

Back in October 2016, I wrote about how you can use a Python script to determine whether a page has been indexed by Google in the SERPs. As it turns out, Google’s webmaster trends analyst Gary Illyes wasn’t too happy with the technique that was being utilized by the script, so I cannot endorse this method:

I'll just leave this here: https://t.co/NO4s6JbSfJ https://t.co/qRhIGXcG7g

— Gary 鯨理/경리 Illyes (so official, trust me) (@methode) October 5, 2016

Shortly after, Sean Malseed and his team at Greenlane SEO built a similar tool based in Google Sheets (among other awesome tools like InfiniteSuggest), and Googler John Mueller expressed reservations:

https://twitter.com/JohnMu/status/808953946169606145

How could I learn which pages weren’t indexed by Google, and do it in a way that didn’t break Google’s rules? Google doesn’t indicate whether a page has been indexed in Google Search Console, won’t let us scrape search results to get the answer and isn’t keen on indirectly getting the answer from an undocumented API. (That was Sean Malseed’s clever solution and scraping workaround.) Let’s explore some solutions.

The analytics solution

Mark Edmondson provided an R script solution that works by doing the following:

- It authenticates with your Google Analytics accounts.

- It looks to see if there are pages found within your site’s XML sitemap but not found in Google Analytics for organic Google results, from the last 30 (or more) days.

The methodology assumes that if a URL is not found in analytics for Google organic search results, then it likely hasn’t been indexed by Google.
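The comparison at the heart of this approach can also be sketched in Python. This is not Mark Edmondson's R script, only a minimal illustration under a few assumptions: the sitemap is a standard `<urlset>` document, the analytics export is a TSV with a landing-page column named `ga:landingPagePath` (a guess at the export header, so adjust it to your file), and the sample data and function names are hypothetical.

```python
from __future__ import annotations

import csv
import io
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_paths(sitemap_xml: str) -> set[str]:
    """Return the URL paths found in the <loc> elements of a sitemap."""
    root = ET.fromstring(sitemap_xml)
    paths = set()
    for loc in root.findall(".//sm:loc", SITEMAP_NS):
        if loc.text:
            # Keep only the path so it can be matched against analytics data.
            paths.add(urlsplit(loc.text.strip()).path or "/")
    return paths


def analytics_paths(tsv_text: str, column: str = "ga:landingPagePath") -> set[str]:
    """Return landing-page paths from a TSV export (column name is an assumption)."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    # Drop query strings so that /page?x=1 and /page count as the same page.
    return {row[column].split("?", 1)[0] for row in reader if row.get(column)}


def paths_without_organic_visits(sitemap_xml: str, tsv_text: str) -> list[str]:
    """Sitemap paths that never appear as a Google organic landing page."""
    return sorted(sitemap_paths(sitemap_xml) - analytics_paths(tsv_text))


# Hypothetical sample data, for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/about/</loc></url>
  <url><loc>https://www.example.com/old-page/</loc></url>
</urlset>"""

SAMPLE_TSV = "ga:landingPagePath\tga:sessions\n/\t120\n/about/\t8\n"

if __name__ == "__main__":
    for path in paths_without_organic_visits(SAMPLE_SITEMAP, SAMPLE_TSV):
        print(path)
```

The result is only a list of candidates: a page that shows up here has had no recorded organic Google landings in the exported period, which is not the same as proof that it is missing from the index.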

Interlude: How to do this without R

While I personally like scripted solutions, I know many people do not. You don’t need to figure out R to do this analysis. You can easily head over to Google Analytics and do a similar analysis — or, even more easily, head over to Google Analytics Query Explorer and run it with these settings. Download the table as a TSV:

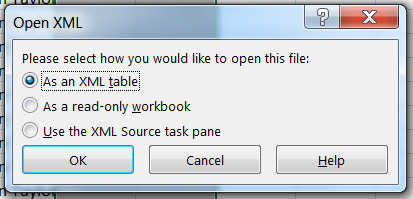

You can then download your XML sitemap locally and open it in Excel by dragging the file into the Excel window, which brings up the “Import XML” dialogue box. If it asks you to “Open the file without applying a stylesheet,” select OK.

Then, choose to open the file “As an XML table”:

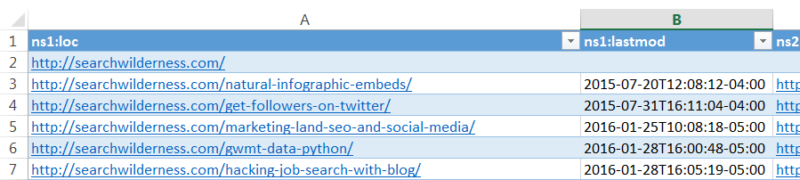

You can remove the extraneous columns, keeping only the “ns1:loc” (or “loc”) column.

Then, you simply need to do a VLOOKUP or other form of Excel matching and find the URLs in the sitemap that aren’t present in the analytics data.

I thought this was a simple yet clever solution, and although it is a good starting place, I feared it wouldn’t accurately show which pages were indexed by Google. It is not that uncommon for pages to receive little or no traffic even if they are indexed. A lack of organic traffic may be an indication that the page isn’t indexed, but it also may just show that the page has a tagging issue, has become irrelevant, is in need of some optimization to improve its visibility or simply is not present in the XML sitemap. (Alternatively, you can use a crawl, rather than your XML sitemap, to make these comparisons.)

The log file solution

Server log files are an excellent source of data about your website that is often inaccessible via other means. One of the many pieces of information that can be derived from these log files is whether or not a certain bot accessed your website. In our case, the bot we are concerned with is Googlebot.

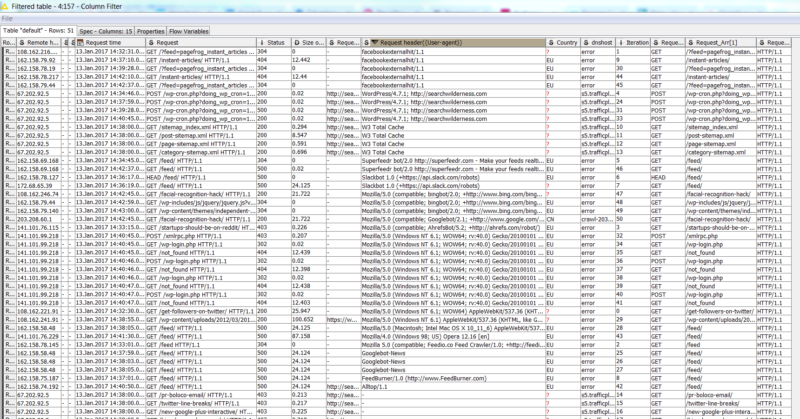

Analyzing our server log files allows us to ascertain whether Googlebot has ever visited a certain page on our website. If Googlebot has never visited a certain page, then it cannot have been indexed by Google. I personally tend to use KNIME for this purpose, with the built-in Web Log Reader node, but feel free to use your favorite solution.
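For readers who prefer a script to KNIME, the same idea can be sketched in plain Python. The example below assumes the server writes the common "combined" access log format (an assumption, so check your server's configuration); the regular expression, function name and sample lines are hypothetical. It records which paths were requested by clients whose user-agent claims to be Googlebot, and keeps the client IPs so they can be verified later.

```python
import re

# Pattern for the "combined" access log format (assumed; adjust to your server).
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Map each requested path to the set of IPs whose user-agent claims Googlebot."""
    hits = {}
    for line in lines:
        match = LOG_LINE.match(line)
        # The user-agent string can be spoofed, so this is a claim, not proof.
        if match and "Googlebot" in match.group("agent"):
            hits.setdefault(match.group("path"), set()).add(match.group("ip"))
    return hits


# Hypothetical log lines, for illustration only.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Jan/2017:06:25:14 +0000] "GET /about/ HTTP/1.1" 200 5123 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Jan/2017:06:26:02 +0000] "GET /old-page/ HTTP/1.1" 200 4310 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    for path, ips in sorted(googlebot_hits(SAMPLE_LOG).items()):
        print(path, sorted(ips))
```

Any sitemap URL that never appears among the returned paths was not requested by a self-declared Googlebot during the period the logs cover, which is a narrower claim than "never visited".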

Example of using KNIME to process server log files

Make sure to verify Googlebot, and do not simply rely on the reported user-agent. Many bots will spoof the Googlebot user-agent, which may invalidate your findings. To avoid this, I use a simple Python snippet within KNIME:

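    import socket

    # Reverse DNS lookup: resolve the requesting IP address to its hostname.
    try:
        output_table['dnshost'] = socket.gethostbyaddr(str(ipaddressvariable))[0]
    except Exception:
        # The lookup failed (for example, no PTR record), so flag the row.
        output_table['dnshost'] = "error"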
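The snippet above performs only the reverse lookup and leaves the hostname check to a later step. Google's published guidance for verifying Googlebot also calls for a forward lookup on the returned hostname to confirm that it resolves back to the original IP. A minimal standalone sketch of that full check follows; the function name, the injectable resolver parameters and the suffix list are assumptions of mine rather than part of the KNIME workflow.

```python
import socket

# Hostname suffixes treated as Google-owned (assumed from Google's guidance).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(
    ip,
    reverse_lookup=socket.gethostbyaddr,
    forward_lookup=socket.gethostbyname_ex,
):
    """Return True if ip passes a forward-confirmed reverse DNS check."""
    try:
        # Step 1: reverse DNS, IP address to hostname.
        host = reverse_lookup(ip)[0]
    except OSError:
        return False

    # Step 2: the hostname must belong to a Google crawler domain.
    if not host.lower().endswith(GOOGLE_SUFFIXES):
        return False

    try:
        # Step 3: forward DNS, hostname back to its IPv4 addresses.
        forward_ips = forward_lookup(host)[2]
    except OSError:
        return False

    # Step 4: the original IP must be among the forward-resolved addresses.
    return ip in forward_ips
```

Calling `is_verified_googlebot("66.249.66.1")` performs live DNS queries, so results depend on the network. The two lookup parameters exist only so the function can be exercised offline with stand-in resolvers. Note that `socket.gethostbyname_ex` handles IPv4 only; IPv6 addresses would need `socket.getaddrinfo` instead.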

For a decent introduction to log file analysis, check out this guide by Builtvisible.

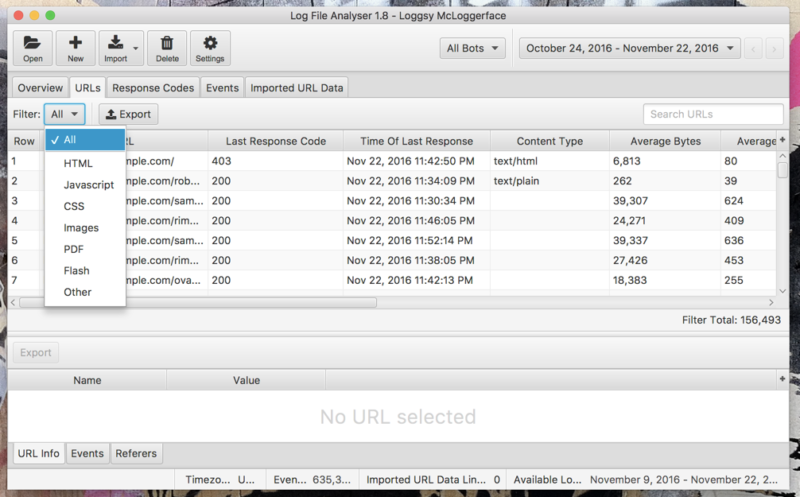

If this is all a bit much, I recommend checking out The Screaming Frog SEO Log File Analyzer — or, for an enterprise solution, Botify.

Screaming Frog Log File Analyzer provides an easier solution for log file analysis.

Like the Google Analytics solution, the log file analysis isn’t foolproof. Googlebot may visit a page without actually including it in its index (for example, when the page carries <meta name="robots" content="noindex, follow">), but the analysis will still help us narrow down our list of possibly non-indexed web pages.

Combining your data

To narrow down our list of pages that may not be indexed by Google as much as possible, I recommend combining data captured using the Google Analytics technique with the log file analysis methods above.
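One possible way to combine the two data sets is sketched below. It reflects my own reading of "combining" rather than a prescribed method: start from sitemap URLs with no organic Google visits, then split them by whether verified Googlebot requests appear in the logs. The function name and sample paths are hypothetical.

```python
def triage_candidates(sitemap_paths, organic_paths, crawled_paths):
    """Split sitemap paths with no organic traffic by whether Googlebot crawled them."""
    # Pages with organic Google landings are treated as indexed and dropped.
    candidates = set(sitemap_paths) - set(organic_paths)
    crawled = set(crawled_paths)
    return {
        # Not seen in the logs either: the strongest non-indexed candidates.
        "not_crawled": sorted(candidates - crawled),
        # Crawled but no traffic: indexed-but-invisible or excluded, so spot-check.
        "crawled_no_traffic": sorted(candidates & crawled),
    }


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    result = triage_candidates(
        sitemap_paths=["/", "/about/", "/old-page/", "/new-page/"],
        organic_paths=["/"],
        crawled_paths=["/", "/about/", "/old-page/"],
    )
    for bucket, paths in result.items():
        print(bucket, paths)
```

Both buckets are only as reliable as the date ranges behind them: a page crawled before the oldest log entry will wrongly land in the first bucket.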

Once we have our list, we can do some spot-checking by manually searching Google for “info:” followed by the URL in question, which won’t upset Google. Manual checking is much easier now that we’ve narrowed our list down significantly.

Conclusion

Since Google does not provide a tool or data on whether a web page has been indexed or not, and we aren’t allowed to use an automated solution like the one I previously wrote about, we must rely on narrowing down our list of URLs that may not be indexed.

We can do this by examining our Analytics data for pages that are on our website but not receiving organic Google traffic, and by looking in server log files. From there, we can manually spot-check our shortened list of URLs.

It’s not an ideal solution, but it gets the job done. I hope that in the future, Google will provide a better means of assessing which pages have been indexed and which ones have not.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
