Google Is Not Broken

In spite of what many think, Google is not broken. “But wait,” naysayers will say, “look at this search result, it stinks! This spammer is succeeding in ranking high; they emerged from nowhere and are now in the top three results!”

It’s true — there are many such examples that you can point to. Making sense of this landscape can be quite confusing, but that’s what I will attempt to do in today’s post.

Firstly, there are two basic reasons why Google can be quite slow to address some of the problems you might find.

1. They Can Afford To Be Thoughtful And Patient

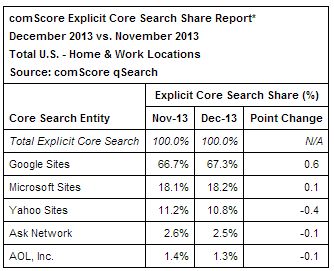

Why, you ask? They have dominant market share. Here is the December 2013 market share data from comScore:

comScore notes that “‘Explicit Core Search’ excludes contextually driven searches that do not reflect specific user intent to interact with the search results.” In my experience, the practical impact of adjusting for this is that the Google search market share is a bit higher. Most sites I look at show a larger percentage of their organic search coming from Google than 67%.

In addition, Google’s stock price seems to be doing pretty well, too (source: Yahoo):

Their business does not face an immediate mortal threat, and the presence of some bad results is not going to change that. I am not saying this makes them complacent; that is definitely not the case. They employ a lot of people working on search quality, and they take that task extremely seriously. It just means that they will not make knee-jerk reactions to specific individual problems. They will take a much more measured and careful approach.

2. Unintended Side Effects Are Very Difficult To Avoid

Search is incredibly complex. While pretty much everyone in the industry understands that, we still have a tendency to underestimate just how complex it really is:

- The web has more than 100 trillion web pages. (Matt Cutts told me at Pubcon 2011 that Google knew about 100 trillion web pages, so I presume the number has grown since then.)

- Google crawls a very large number of these pages (though they probably skip crawling a large percentage they consider insignificant, as well)

- A semantic analysis is performed on all the pages they do crawl

- They build a complete map of all the interlinking they see between pages

- They throw this into a series of databases spread across the globe

- Users can enter any search query they desire, at any time, from anywhere on the planet

- Google responds with an answer in 0.4 seconds or less

As you can imagine, all that makes tinkering with the algorithm incredibly complex. Any change they make undoubtedly impacts many parts of their search results at the same time. There is no such thing as a simple change.

Their past behavior illustrates this point well. Back in 2007 and 2008, I began actively wondering why Google did nothing about link building using article directory sites. This truly baffled me. The problem with these types of links was obvious, so why didn’t they act?

They finally did with the initial release of the Penguin algorithm on April 24, 2012. Why did it take so long? Simply because it is very, very hard to make any changes to one thing without breaking something else.

In fact, a major reason for the development of the new Hummingbird platform was to allow them to implement new types of algorithms more easily. Does this mean we will see changes happen more quickly in the future? Maybe, but they will still be very hard to make, and it will still seem slow to those of us on the outside.

The massive complexity of all this is why manual tinkering is not possible, either. To say that it does not scale, while true, does not do the real situation justice. Making manual changes to various SERPs would be akin to moving the Sahara Desert one grain of sand at a time.

Wait… Sometimes Google Does Move Fast!

The recent Rap Genius incident is a case in point. Sometimes, individual actions are taken. Rap Genius was punished because it was publicly outed, which forced Google into taking action.

However, even though the penalty was merited, this was bad for Google’s search results. Rap Genius is a very popular service. People who were looking for it were not able to find it, and that was a problem.

While it makes people angry that Google fast-tracked Rap Genius through its penalty, the reality is that it had to be done. Searchers looking for the company were not finding what they were looking for.

On the other side of the coin, when a small business or lesser-known brand is hit by a penalty, their road back is far more difficult. Few people are missing them in the search results. It is a hard and bitter reality, but it all comes back to the same underlying facts:

- Search is massively complex

- Search quality is king

- It is why action is quite slow most of the time…

- …except when the problem lessens their current search performance in a material way

How Does This Affect You?

As a publisher, it is important to understand the landscape around you. Google will always act to protect the quality of their search results. In some cases, this will cause them to move very slowly, and in other cases it will cause them to move quite quickly. Either way, the complexity of the task they face means it will always be possible to find crappy sites in various search results.

In addition, Google will steadily work to improve their results. This comes in the form of new algorithms that their testing shows make the results better in aggregate, provided that there is a minimum of specific unacceptable side effects. Whenever these new algorithms are implemented, there will always be some specific results that actually get worse. Search is far too complex to avoid that.
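To make the "better in aggregate, with minimal unacceptable side effects" idea concrete, here is a toy sketch in Python. It is purely illustrative and is not how Google evaluates changes: the `QueryResult` type, the quality scores, the `critical` flag, and the `should_launch` rule are all hypothetical names and numbers I made up for the example.

```python
from dataclasses import dataclass


@dataclass
class QueryResult:
    query: str
    old_score: float  # hypothetical quality score under the current algorithm
    new_score: float  # hypothetical quality score under the candidate algorithm
    critical: bool = False  # assumed flag: a regression here is unacceptable


def should_launch(results, max_critical_regressions=0):
    """Return (launch, mean_gain, regressed_queries) for a candidate change."""
    if not results:
        raise ValueError("need at least one evaluated query")
    gains = [r.new_score - r.old_score for r in results]
    mean_gain = sum(gains) / len(gains)
    # Queries whose results got worse, whether or not they are critical.
    regressed = [r.query for r in results if r.new_score < r.old_score]
    critical_regressions = [
        r.query for r in results if r.critical and r.new_score < r.old_score
    ]
    # Launch only if results improve on average AND critical queries hold up.
    launch = mean_gain > 0 and len(critical_regressions) <= max_critical_regressions
    return launch, mean_gain, regressed


sample = [
    QueryResult("query a", 0.60, 0.75),
    QueryResult("query b", 0.70, 0.80),
    QueryResult("query c", 0.80, 0.70),  # gets worse, but is not critical
    QueryResult("brand name", 0.90, 0.90, critical=True),
]
launch, mean_gain, regressed = should_launch(sample)
print(launch, regressed)
```

In this made-up sample the change would ship because the average improves and no critical query regresses, yet "query c" still ends up with worse results. That is the pattern described above: an aggregate win with some specific losers.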

When you see quick individual actions, such as the one against Rap Genius, that is because of a very specific set of circumstances. The site was penalized because it was outed, and it was fast-tracked back in because its penalization caused a search quality problem.

In short, whether the action is fast or slow all depends on the same core focus: what is good for search quality?

From your perspective, as a publisher, you can’t take the risk of playing games to improve search rankings. If you are a known brand, you risk public outing, and if you are not a known brand and your rankings go away, the road back is likely to be very painful!

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
