Google Search Console adds daily bulk data exports to BigQuery

The daily export lets you potentially do more analysis with your data in other platforms.

Google is rolling out a feature over the next week that will allow you to automate a daily bulk export of your Search Console performance data to BigQuery. This lets you move your data to an external storage service, where you can run complex queries and do deeper analysis in a more automated fashion, Google announced.

The export. Google said you could configure an export in Google Search Console “to get a daily data dump into your BigQuery project.” This includes all of your Search Console performance data but not the anonymized queries. The daily data row limit does not impact this data, so you can extract more data using this method.

The cool part is not just that the daily data row limit no longer applies but also that this is an ongoing daily export that can be automated.

Who this helps. Google said this is likely more helpful for larger sites with larger datasets. “This data export could be particularly helpful for large websites with tens of thousands of pages, or those receiving traffic from tens of thousands of queries a day (or both!),” Google wrote.

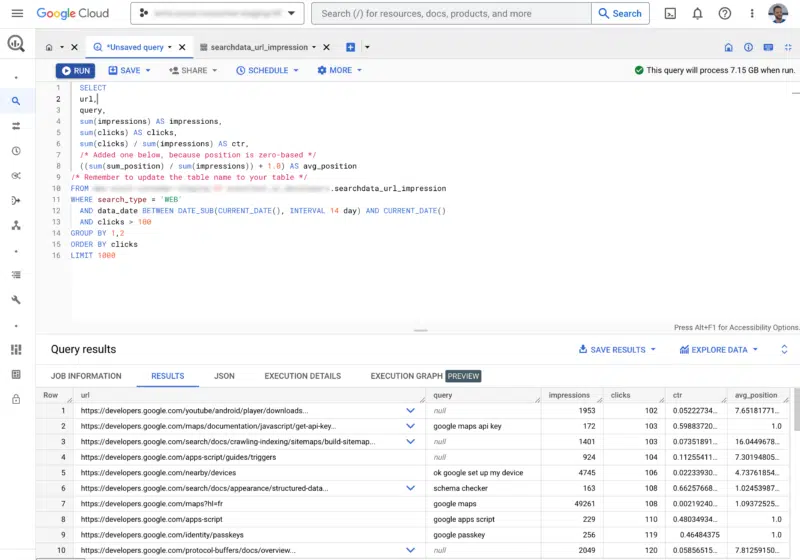

How to export. Google created a help document to walk you through how to export your data to BigQuery. It is somewhat technical, and you may need assistance from a developer or programmer. The high-level stages are as follows:

- Prepare your Cloud project (inside Google Cloud Console): this includes enabling the BigQuery API for your project and giving permission to your Search Console service account.

- Set export destination (inside Search Console): this includes providing your Google Cloud project ID, and choosing a dataset location. Note that only property owners can set up a bulk data export.

The export runs daily, and the first one will start within 48 hours. If the export simulation fails, you should receive an immediate alert on the issue detected; here’s a list of possible export errors.

The data. Google provided a quick description of the three tables that will be available to you:

- searchdata_site_impression: This table contains data aggregated by property, including query, country, type, and device.

- searchdata_url_impression: This table contains data aggregated by URL, which enables a more detailed view of queries and rich results.

- ExportLog: This table is a record of what data was saved for that day. Failed exports are not recorded here.
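To give a sense of what querying the site-level table might look like, here is a minimal Python sketch that builds a "top queries by clicks" statement in BigQuery SQL. The dataset name `searchconsole`, the project ID `my-gcp-project`, and the column names `data_date`, `query`, `clicks`, and `impressions` are assumptions, not details from Google's announcement, so check them against your own exported schema. The helper names `build_top_queries_sql` and `run_query` are hypothetical.

```python
import re


def build_top_queries_sql(project_id: str, dataset: str = "searchconsole",
                          days: int = 28, limit: int = 20) -> str:
    """Build a BigQuery SQL string listing the top queries by clicks.

    Table name comes from the export; column names are assumed.
    """
    # Identifiers are interpolated into the SQL text, so validate them first.
    if not re.fullmatch(r"[A-Za-z0-9\-]+", project_id):
        raise ValueError(f"unexpected project id: {project_id!r}")
    if not re.fullmatch(r"[A-Za-z0-9_]+", dataset):
        raise ValueError(f"unexpected dataset name: {dataset!r}")
    days, limit = int(days), int(limit)

    return f"""
SELECT
  query,
  SUM(clicks) AS clicks,
  SUM(impressions) AS impressions
FROM `{project_id}.{dataset}.searchdata_site_impression`
WHERE data_date >= DATE_SUB(CURRENT_DATE(), INTERVAL {days} DAY)
  AND query IS NOT NULL
GROUP BY query
ORDER BY clicks DESC
LIMIT {limit}
""".strip()


def run_query(sql: str, project_id: str) -> list:
    """Run the query; needs the google-cloud-bigquery package and credentials."""
    from google.cloud import bigquery  # imported lazily so the builder works without it

    client = bigquery.Client(project=project_id)
    return [dict(row.items()) for row in client.query(sql).result()]


if __name__ == "__main__":
    # Hypothetical project ID, for illustration only.
    print(build_top_queries_sql("my-gcp-project", days=7, limit=10))
```

Keeping the SQL builder separate from the client call means the query text can be reviewed, or pasted into the BigQuery console, before anything is run against your project.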

BigQuery. BigQuery is Google’s fully managed, serverless data warehouse that enables scalable analysis over petabytes of data. It is a service that supports querying using ANSI SQL. It also has built-in machine learning capabilities.

Why we care. The more data you have access to in your own platform, the more you can do with analysis of your search performance. That means you can slice and dice your data in ways you probably could not before. This should allow you to develop new ideas to improve your site and user experience.

Also, third-party data tools may find useful ways to build out new reports with this. Google said, “We hope that by making more Google Search data available, website owners and SEOs will be able to find more content opportunities by analyzing long-tail queries. It’ll also make it easier to join page-level information from internal systems to Search results in a more effective and comprehensive way.”
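As a rough illustration of the kind of join Google describes, the sketch below combines URL-level rows (shaped like what you might pull from searchdata_url_impression) with page metadata from an internal system. The field names `url`, `clicks`, and `impressions`, the `template` attribute, and all sample values are hypothetical and exist only to show the idea.

```python
from collections import defaultdict


def join_search_to_pages(search_rows, page_metadata):
    """Sum clicks and impressions per URL, then attach internal page fields.

    URLs with no internal metadata are kept (a left join).
    """
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for row in search_rows:
        totals[row["url"]]["clicks"] += row["clicks"]
        totals[row["url"]]["impressions"] += row["impressions"]

    joined = []
    for url, stats in totals.items():
        meta = page_metadata.get(url, {})
        joined.append({"url": url, **stats, **meta})
    # Highest-traffic pages first.
    return sorted(joined, key=lambda r: r["clicks"], reverse=True)


if __name__ == "__main__":
    # Made-up rows standing in for exported search data.
    search_rows = [
        {"url": "https://example.com/a", "clicks": 3, "impressions": 40},
        {"url": "https://example.com/a", "clicks": 2, "impressions": 10},
        {"url": "https://example.com/b", "clicks": 9, "impressions": 90},
    ]
    # Made-up metadata standing in for an internal CMS export.
    page_metadata = {"https://example.com/a": {"template": "article"}}
    for page in join_search_to_pages(search_rows, page_metadata):
        print(page)
```

In practice the same join could be done in SQL inside BigQuery once the internal data is loaded there. The Python version just makes the logic easy to follow.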

Postscript. This is now available to everyone as of February 28, 2023.

Postscript 2. Google has now expanded this feature to allow multiple properties to export to one Google Cloud project.

Search Engine Land is owned by Semrush. We remain committed to providing high-quality coverage of marketing topics. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.