8 SEO tools to get Google Search Console URL Inspection API insights

Discover free tools, SEO crawler integrations, and scripts that put Google Search Console URL Inspection API insights to work.

Google announced the launch of a new Google Search Console URL Inspection API one week ago. The API gives third-party applications bulk access to information that was previously available for only a single URL at a time through the URL Inspection tool in the Google Search Console interface.

With a quota per Search Console property (which can also be subdomains or subdirectories, as well as domains) of 2,000 queries per day and 600 queries per minute, the release opens doors to SEO tools and platforms to integrate Google’s index coverage information, such as:

- Crawlability and indexability status

- Last crawling time

- Sitemaps inclusion

- Google selected canonical URL

- Identified structured data for rich results eligibility

- Mobile usability status

These fields, along with other useful information the API returns, facilitate technical SEO analysis and debugging.
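To make the fields above more concrete, here is a minimal Python sketch of how a client might build a request for the API's `index:inspect` method and flatten the parts of a response that map to the list above. The helper names (`build_request_body`, `summarize_inspection`) and the sample response values are illustrative assumptions. The field names follow Google's public API reference and should be checked against the current documentation. No authentication or network call is performed here.

```python
from __future__ import annotations

from typing import Any

# Endpoint of the URL Inspection API's index.inspect method.
# A real client would send an authorized POST request to it.
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_request_body(page_url: str, property_url: str, language: str = "en-US") -> dict[str, str]:
    """Build the JSON body for one inspection request (hypothetical helper)."""
    return {
        "inspectionUrl": page_url,   # the page to inspect
        "siteUrl": property_url,     # the Search Console property it belongs to
        "languageCode": language,    # language for translated issue messages
    }


def summarize_inspection(response: dict[str, Any]) -> dict[str, Any]:
    """Flatten the nested response into the fields discussed in the article."""
    result = response.get("inspectionResult", {})
    index = result.get("indexStatusResult", {})
    mobile = result.get("mobileUsabilityResult", {})
    rich = result.get("richResultsResult", {})
    return {
        "verdict": index.get("verdict"),
        "coverage": index.get("coverageState"),
        "robots_txt": index.get("robotsTxtState"),
        "indexing": index.get("indexingState"),
        "last_crawl": index.get("lastCrawlTime"),
        "sitemaps": index.get("sitemap", []),
        "google_canonical": index.get("googleCanonical"),
        "user_canonical": index.get("userCanonical"),
        "mobile_verdict": mobile.get("verdict"),
        "rich_result_types": [
            item.get("richResultType") for item in rich.get("detectedItems", [])
        ],
    }


# Made-up response, shaped like the documented API output, for illustration only.
SAMPLE_RESPONSE = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "PASS",
            "coverageState": "Submitted and indexed",
            "robotsTxtState": "ALLOWED",
            "indexingState": "INDEXING_ALLOWED",
            "lastCrawlTime": "2022-02-07T10:15:30Z",
            "sitemap": ["https://www.example.com/sitemap.xml"],
            "googleCanonical": "https://www.example.com/page",
            "userCanonical": "https://www.example.com/page",
        },
        "mobileUsabilityResult": {"verdict": "PASS"},
        "richResultsResult": {
            "verdict": "PASS",
            "detectedItems": [{"richResultType": "Breadcrumbs", "items": []}],
        },
    }
}

if __name__ == "__main__":
    print(build_request_body("https://www.example.com/page", "https://www.example.com/"))
    print(summarize_inspection(SAMPLE_RESPONSE))
```

Using `.get()` with defaults at every level keeps the summary tolerant of missing sections, since a response for a URL that Google has not crawled may omit some of them.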

A few SEO professionals have already seized this opportunity by developing free new tools, sharing scripts, and integrating the data into established SEO crawlers alongside their own insights. Here are a few:

Free tools

Free tools can be the best way to validate a specific group of URLs’ status quickly.

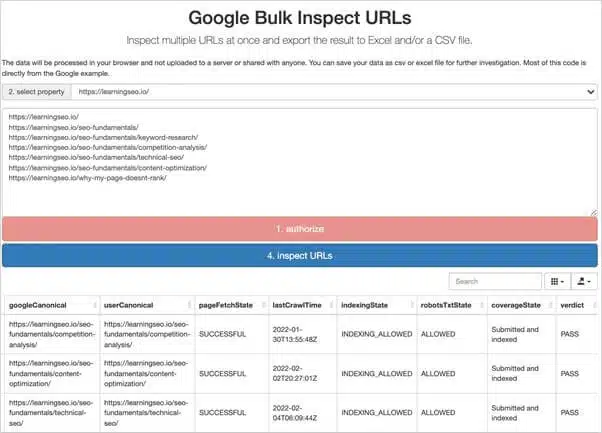

1. Google Bulk Inspect URLs by Valentin Pletzer

Pletzer has developed a free new tool called “Google Bulk Inspect URLs” that provides what’s likely the easiest way to obtain the URL Inspection API data. There’s no need to register or go through complex configuration. You only need to authorize access to your Google account linked to Google Search Console, select the desired property to check, and paste the URLs you want to validate.

The tool, which processes the data in the browser, shows the obtained status of the different fields available from the URL Inspection API in a table that allows you to browse or export the values in CSV or Excel.

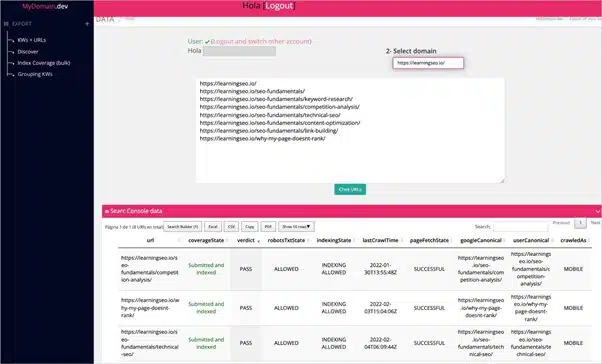

2. MyDomain.Dev by Lino Uruñuela

MyDomain.dev, developed by Lino Uruñuela, is a free-to-use tool that requires registration. It lets you access the Google Search Console data available via API without the constraints of the Search Console interface. Reports segment and group the data to make it easier to analyze.

Besides the existing reports for performance data, the tool now also provides access to the URL Inspection insights through a new section. First, grant access to your Google Account linked to Search Console when registering. Next, go to the “Index Coverage (bulk)” section, select the desired property to check, and paste the URLs to validate. Their status appears in an easy-to-browse table that lets you filter the data and copy or export it as CSV, Excel, or PDF.

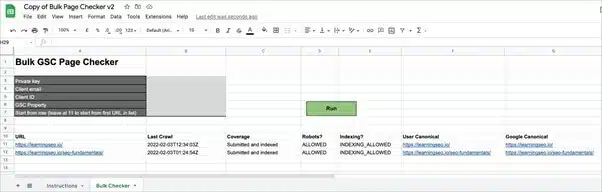

3. URL Inspection API in Sheets by Mike Richardson

For those who don’t want to use a new tool and prefer Google Sheets, Mike Richardson has developed and made available for free a new Google Sheets template built with Apps Script. Copy the template and follow the instructions shared directly in it to create a free Google Service Account to run it.

Once you have added the required key, email, client ID, and property information, paste the URLs to check and obtain their last crawl, coverage, robots, and indexing information, as well as the user-declared and Google-selected canonical status.

SEO crawlers integration

SEO crawlers can be the best way to obtain and integrate your pages’ Google coverage status when doing a fuller technical SEO analysis, complementing (and validating) the data from your SEO crawling simulations.

However, it’s important to keep in mind the API daily quota when using SEO crawlers. You might want to do crawls per areas/categories, list crawls of your most valuable URLs, or enable new properties for categories/sub-categories directories, as their quotas are counted independently.
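The quota planning described above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a feature of any crawler mentioned here: it assumes URL-prefix properties only (domain properties of the `sc-domain:` form are not handled), it takes the 2,000 queries per day figure from this article, and the function names `most_specific_property` and `plan_daily_batches` are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

# Daily quota per Search Console property, as stated in the article.
DAILY_QUOTA_PER_PROPERTY = 2000


def most_specific_property(url: str, properties: list[str]) -> str | None:
    """Return the longest URL-prefix property that contains the URL, if any."""
    matches = [prop for prop in properties if url.startswith(prop)]
    return max(matches, key=len) if matches else None


def plan_daily_batches(
    urls: list[str],
    properties: list[str],
    daily_quota: int = DAILY_QUOTA_PER_PROPERTY,
) -> dict[str, list[list[str]]]:
    """Group URLs by their most specific property, then split each group
    into batches that fit within one day's quota for that property."""
    by_property: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        prop = most_specific_property(url, properties)
        if prop is not None:  # URLs outside every property are skipped
            by_property[prop].append(url)

    return {
        prop: [
            prop_urls[start:start + daily_quota]
            for start in range(0, len(prop_urls), daily_quota)
        ]
        for prop, prop_urls in by_property.items()
    }


if __name__ == "__main__":
    properties = ["https://example.com/", "https://example.com/blog/"]
    urls = [f"https://example.com/blog/post-{i}" for i in range(5)]
    urls.append("https://example.com/about")
    for prop, batches in plan_daily_batches(urls, properties, daily_quota=2).items():
        print(prop, [len(batch) for batch in batches])
```

Each inner list represents one day's worth of inspections for a property. Routing a URL to its most specific property is what lets a subdirectory property absorb its own share of requests instead of drawing on the parent property's quota.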

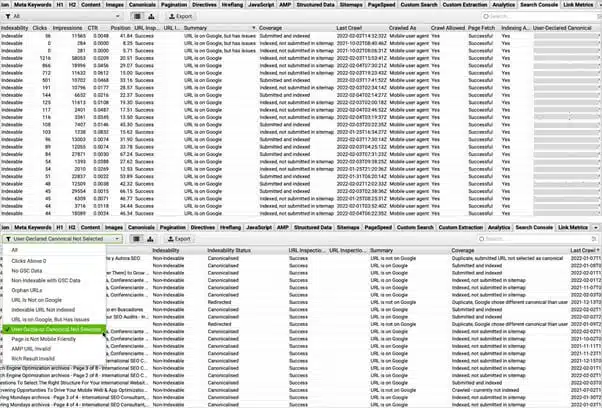

1. Screaming Frog SEO Spider [16.6 Update]

Screaming Frog was the first SEO crawler to support the new URL Inspection API integration, announcing a new version (16.6) codenamed “Romeo”.

The integration is straightforward and explained in the release notes, which describe how to select the option within the existing Google Search Console API access. Doing so populates new columns in the “Search Console” tab, which are also included in the overall “Internal” tab.

The report also includes more filters to directly obtain those URLs suffering from Google coverage issues, which can also be assessed along with the Google Search Console “Performance” data, also included via API integration.

2. Sitebulb [Version 5.7]

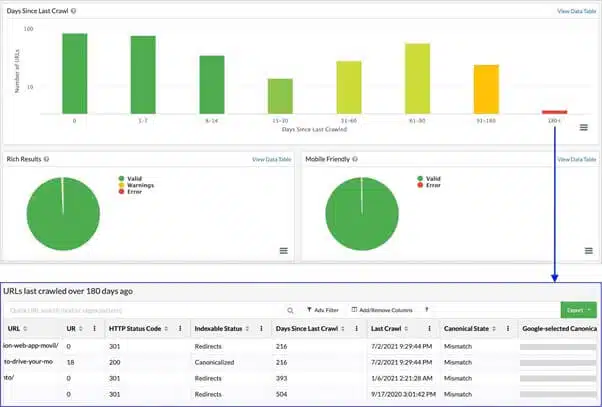

The other “superfast” SEO crawler release yesterday supporting the new Google URL Inspection API came from Sitebulb, which announced version 5.7. It leverages the existing Google Search Console integration and only requires checking the “Fetch URL Data from Search Console URL Inspection API” option when configuring a crawl.

Sitebulb has now enabled a whole new “URL Inspection” report. It features many clickable charts and tables for the different fields, segmenting their values to facilitate analysis rather than aggregating them all in a single table.

Clicking a chart for any field takes you directly to the report showing those URLs. You can also combine the data with other metrics available through the tool by adding columns to the table, or click the “open URL inspection” option to go directly to the Google Search Console report and see the page information there.

3. FandangoSEO

Another SEO crawler that announced the URL Inspection API integration yesterday is FandangoSEO, a cloud-based crawler. Aside from retrieving the URL inspection data to show the “Google Index Status” of the pages, it will also notify you whenever Google changes the indexing status of pages.

Free scripts

If you’re a bit more technical and prefer to run a script in the terminal, there are also alternatives for you.

1. Google Index Inspection API Node.js script by Jose Luis Hernando

Jose Luis Hernando has developed and made available a free script via GitHub with step-by-step instructions. Make sure you have Node.js on your machine, install the necessary modules, and obtain the OAuth 2.0 Client ID credentials from your Google Cloud Platform account.

2. Google URL Inspection API with Python

If you prefer Python, Jean-Christophe Chouinard has written a tutorial with the Python code to interact with the URL Inspection API. The tutorial describes the whole process, from creating the API project and your Google service account credentials to understanding the structure of the API response.

Dig deeper into your Google coverage status

Given the fast adoption after just a few days, many more SEO tools and platforms will likely integrate the new Google Search Console URL Inspection API insights. I’m looking forward to using them!

Although the API currently has a limited daily quota, remember that the quota applies per property, not per domain (you can register your categories/sub-categories directories as properties too). It’s already a significant first step toward obtaining crawlability and indexability status directly from Google much more quickly than ever before.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
