2011: The Year Google & Bing Took Away From SEOs & Publishers

Increasingly over the years, search engines — Google in particular — have given more and more support to SEOs and publishers. But 2011 marked the first significant reversal that I can recall, with both linking and keyword data being withheld. Here’s what happened, why it matters and how publishers can push back if Google and Bing don’t change things.

Where We Came From

When I first started writing about SEO issues nearly 16 years ago, in 1996, we had little publisher support beyond add URL forms. Today, we have entire toolsets like Google Webmaster Central and Bing Webmaster Tools, along with standalone features and options, which allow and provide:

- Ability to submit & validate XML sitemaps

- Ability to view crawling & indexing errors

- Ability to create “rich” listings & manage sitelinks

- Ability to migrate a domain

- Ability to indicate a canonical URL or preferred domain

- Ability to set crawl rates

- Ability to manage URL parameters

- Ability to view detailed linkage information to your site

- Ability to view keywords used to reach your site

- Notifications of malware or spam issues with your site

There’s even more beyond what I’ve listed above. The support publishers enjoy today was simply unimaginable to many veteran SEOs who were working in the space a decade ago.

The advancement has been welcomed. It has helped publishers better manage their placement in those important venues of the web, the search engines. It has helped search engines with errors and problems that would hurt their usability and relevancy.

That’s why 2011 was so alarming to me. After years of moving forward, the search engines took a big step back.

The Loss Of Link Data

One of the most important ways that search engines determine the relevancy of a web page is through link analysis. This means examining who links to a page and what the text of the link — the anchor text — says about the page.
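As a rough illustration of what "examining who links to a page and what the text of the link says" involves, here is a minimal Python sketch that tallies the anchor text of links pointing at one target URL across a handful of HTML pages. It is not how Google or any other search engine actually does this; the class name, function name and sample URLs are hypothetical, and real link analysis works on a crawl of the whole web and weighs far more signals.

```python
from collections import Counter
from html.parser import HTMLParser


class AnchorTextCollector(HTMLParser):
    """Collects the visible text of every <a> tag pointing at one target URL."""

    def __init__(self, target_url):
        super().__init__()
        # Assumption: URLs match if they are equal once a trailing "/" is dropped.
        self.target_url = target_url.rstrip("/")
        self.anchor_texts = []
        self._inside_matching_link = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href") or ""
            if href.rstrip("/") == self.target_url:
                self._inside_matching_link = True
                self._buffer = []

    def handle_data(self, data):
        if self._inside_matching_link:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._inside_matching_link:
            # Normalize whitespace and case so "Example  Site" == "example site".
            text = " ".join("".join(self._buffer).split()).lower()
            self.anchor_texts.append(text)
            self._inside_matching_link = False


def count_anchor_text(pages, target_url):
    """Return a Counter of anchor text used in links to target_url."""
    counts = Counter()
    for html in pages:
        collector = AnchorTextCollector(target_url)
        collector.feed(html)
        collector.close()
        counts.update(collector.anchor_texts)
    return counts
```

Fed a set of crawled pages, `count_anchor_text(pages, "http://example.com")` returns the phrases people used when linking to that address and how often each appeared, which is the kind of report the rest of this article argues should be available for any page.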

However, for years Google has deliberately suppressed the ability for outsiders to see what links tell it about any particular page. Want to know why THAT result shows up for Santorum? Why Google was returning THAT result for “define English person” searches? Sorry.

Google won’t help you understand how links have caused these things. It refuses to show all the links to a particular page, or the words used within those links to describe a page, unless you are the page’s owner.

Why? Google’s rationale has been that providing this information would make it harder for it to fight spam. Potentially, bad actors might figure out some killer linking strategy by using Google’s own link reporting against it.

It’s a poor argument. Despite withholding link data, it’s painfully easy to demonstrate how sites can gain good rankings in Google for competitive terms such as “SEO” itself by simply dropping links into forums, onto client pages or into blog templates.

Given this, it’s hard to understand what Google thinks it’s really protecting by concealing the data. But until 2011, there was an easy alternative. Publishers and others could turn to Google-rival Yahoo to discover how people might be linking to a page.

Goodbye Yahoo Site Explorer

Yahoo launched its “Yahoo Site Explorer” back in September 2005, both as part of a publicity push to win people away from Google and to provide information to publishers. The tool allowed anyone to see what link data Yahoo had about any page in its listings.

Today, Yahoo still supposedly wants to win people away from Google. But because Yahoo’s web search results are now powered by Bing, Yahoo has little reason to provide tools to support publishers. That’s effectively Bing’s problem now.

Yahoo closed Yahoo Site Explorer at the end of last November, saying as it still does on the site now:

Yahoo! Search has merged Site Explorer into Bing Webmaster Tools. Webmasters should now be using the Bing Webmaster Tools to ensure that their websites continue to get high quality organic search traffic from Bing and Yahoo!.

That’s not true. Yahoo Site Explorer was not merged into Bing Webmaster Tools. It was simply closed. Bing Webmaster Tools doesn’t provide the ability to check on the backlinks to any page in the way that Yahoo Site Explorer allowed.

The closure supposedly came after Yahoo “listened to your feedback” about what publishers wanted, as it posted earlier this year. I don’t know what feedback Yahoo was hearing, but what I’ve heard has been people desperately pleading with Yahoo or Bing to maintain the same exact features that Yahoo Site Explorer provided — and pleading for well over a year.

Yahoo-Bing Deal Has Reduced Competition & Features

When the US Department Of Justice granted its approval for Yahoo to partner with Microsoft, that was supposed to ensure that the search space stayed competitive. From what the Department Of Justice said in 2010:

After a thorough review of the evidence, the division has determined that the proposed transaction is not likely to substantially lessen competition in the United States, and therefore is not likely to harm the users of Internet search, paid search advertisers, Internet publishers, or distributors of search and paid search advertising technology.

I’d say dropping Yahoo Site Explorer did harm to both users of internet search and internet publishers. Yahoo Site Explorer was a distinctive tool that only Yahoo offered, allowing both parties named by the DOJ to better understand the inner workings of the search engines they depend on. It also reduced competitive pressure for Google to offer its own tool.

Indeed, things have gotten worse since Yahoo Site Explorer closed. At the end of last December, Bing officially confirmed in its help forum that it no longer supports the link command.

Next To Go, The Link Command?

The link command allows you to enter any page’s web address prefaced by “link:” in order to find links that point at that page. It’s a long-standing command that has worked for many major search engines as far back as late 1995, when AltaVista launched.
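For anyone who wants to script these lookups, the query is simply the page address with “link:” in front of it. The Python sketch below builds that query and a search URL for it. The helper names are hypothetical, and the search endpoint is passed in by the caller, since whether a given engine still honors the command is exactly what is in question here.

```python
from urllib.parse import urlencode


def link_query(page_url):
    """Build the link-command query for a page, e.g. link:http://www.example.com/"""
    return "link:" + page_url


def search_url(search_base, page_url):
    """Attach the link query to a search endpoint as its q parameter.

    Assumption: the engine reads the query from a parameter named "q".
    """
    return search_base + "?" + urlencode({"q": link_query(page_url)})
```

For example, `search_url("https://www.google.com/search", "http://www.example.com/")` produces a URL whose `q` parameter is the percent-encoded form of `link:http://www.example.com/`.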

Google still supports this command to show some (but not all) of the links it knows about that point at pages. I’d link to Google’s documentation of this, but the company quietly dropped that some time around May 2008. Here’s what it used to say:

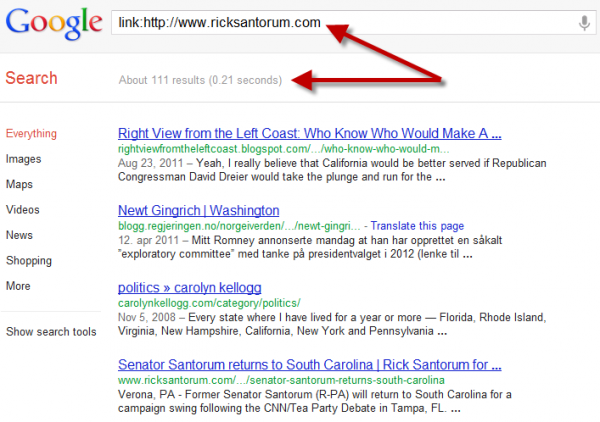

Here’s how the command still works at Google. Below, I used it to see what links Google says point to the home page of the official Rick Santorum campaign web site:

The first arrow shows you how the command is being used. The second arrow shows you how Google is reporting there are 111 links pointing to the page. Imagine that. Rick Santorum, currently a major Republican candidate for US president, and Google says only 111 other pages link to his web site’s home page.

The reality is that many more pages probably link over. Google’s counting them but not showing the total number to people who care about what exactly is being considered. I’ll demonstrate this more in a moment, but look at the worse situation on Bing:

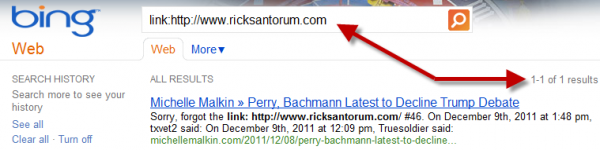

One link. That’s all Bing reports that it knows about to those in the general public who may care to discover how many links are pointing to the Rick Santorum web site.

It’s Not Just An SEO Thing

People do care, believe me. I actually started writing this article last Monday and got interrupted when I had to cover how Google might have been involved with a link buying scheme to help its Chrome browser rank better in Google’s own search results.

I doubted that was really the main intent of the marketing campaign that Google authorized (Google did err on the side of caution and punished itself), but the lack of decent link reporting tools from Google itself left me unable to fully assess this as an independent third-party.

As soon as that story was over, renewed attention was focused on why Rick Santorum’s campaign web site wasn’t outranking a long-standing anti-Santorum web site that defines “santorum” as a by-product of anal sex.

Major media outlets were all over that story. My analysis was cited by The Economist, CNN, The Telegraph, The New York Times, MSNBC and Marketplace, to name only some.

But again, I — or anyone who really cared — was unable to see the full links that Google knew about pointing at both sites, much less the crucial anchor text that people were using to describe those sites. Only Google really knew what Google knew.

Third Party Options Good But Not The Solution

If you haven’t heard more complaints over the closure of Yahoo Site Explorer, and the pullback on link data in general, that’s because there are third-party alternatives such as Majestic Site Explorer or the tool I often use, SEOmoz’s Open Site Explorer.

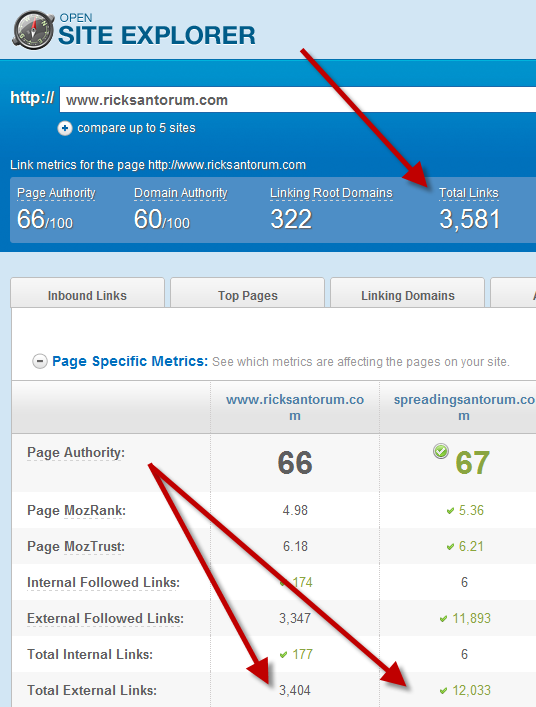

These tools highlight just how little the search engines themselves show you. Consider this backlink report from Open Site Explorer for the Rick Santorum campaign’s home page:

The first arrow shows how 3,581 links are seen pointing at the page. Remember Google, reporting only 111? Or Bing, reporting only 1?

The next two arrows show the “external” links pointing at both the Santorum home page and the anti-Santorum home page. These are links from outsiders, pointing at each page. You can see that the anti-Santorum page has four times as many links pointing at it as the Santorum campaign page, a clue as to why it does so much better for a search on “santorum.”

But it’s not just number of links. Using other reports, I can see that thousands of links leading to both sites have the text “santorum” in the links themselves, which is why they both are in the top results for that word.

Because the anti-site has so many more links that say “santorum” and “spreading santorum,” that probably helps it outrank the campaign site on the single word. But because the official site has a healthy number from sources including places like the BBC saying “rick santorum” in the links, that — along with its domain name of ricksantorum.com — might help it rank better for “rick santorum.”

It’s nice that I can use a third party tool to perform this type of analysis, but I shouldn’t have to. It’s simply crazy — and wrong — that both Google and Bing send searchers and publishers away from their own search engines to understand this.

For one, the third party tools don’t actually know exactly what the search engines themselves are counting as links. They’re making their own estimates based on their own crawls of the web, but that doesn’t exactly match what Google and Bing know (though it can be pretty good).

Not Listing Links Is Like Not Listing Ingredients

For another, the search engines should simply be telling people directly what they count. Links are a core part of the “ingredients” used to create the search engine’s results. If someone wants to know if those search results are healthy eating, then the ingredients should be shared.

Yes, Google and Bing will both report link data about a publisher’s own registered site. But it’s time for both of them to let anyone look up link data about any site.

The Blekko search engine does this, allowing anyone logged in to see the backlinks to a listed page. Heck, Blekko will even give you a badge you can place on your page to show off your links, just as Yahoo used to. But for Google, it’s “normal” for its link command to not show all the links to a page. Seriously, that’s what Google’s help page says.

Google, in particular, has made much of wanting people to report spam found in its search results. If it really wants that type of help, then it needs to ensure SEOs have better tools to diagnose the spam. That means providing link data for any URL, along with anchor text reporting.

Google has also made much about the need for companies to be open, in particular pushing for the idea that social connection should be visible. Google has wanted that, because until Google+ was launched, Google had a tough time seeing the type of social connections that Facebook knew about.

Links are effectively the social connections that Google measures between pages. If social connections should be shared with the world, then Google should be sharing link connections too, rather than coming off as hypocritical.

Finally, it doesn’t matter if only a tiny number of Google or Bing users want to do this type of link analysis. That’s often the pushback when this issue comes up, that so few make this type of request.

Relatively few people might read the ingredients labels on the food they eat. But for the few that do, or for anyone who suddenly decides they want to know more, that label should be provided. So, too, should Google and Bing provide link data about any site.

Goodbye Keyword Referrer Data

Link data has long been suppressed by Google. Holding back on keyword data is a new encroachment.

Google has said this was done to protect user privacy. I have no doubt many in the company honestly believe this. But if it was really meant to protect privacy, then Google shouldn’t have deliberately left open a giant hole that continues to provide this data to its paid advertisers.

Worse, if Google were really serious about protecting the privacy of search terms, then it would disable the passing of referrers in its Chrome browser. That hasn’t happened.

Unlike the long examination of link data above, I’ll be far more brief about the situation with Google withholding keyword data. That’s because I’ve already written over 3,000 words looking at the situation in depth last October, and that still holds up. So please see my previous article, Google Puts A Price On Privacy, to understand more.

Google’s Weak Defense

Since my October story, the best defense that Google’s been able to concoct for withholding keyword data from non-advertisers is a convoluted, far-fetched argument that makes its case worse, not better.

Google says that potentially, advertisers might buy ads for so many different keywords that even if referrer data was also blocked for them, the advertisers could still learn what terms were searched for by looking through their AdWords campaign records.

For example, let’s say someone did a search on Google for “Travenor Johannisoon income tax evasion settlement.” I’ve made this up. As I write this, there are no web pages matching a Google search for “Travenor Johannisoon” at all. But…

- If this were a real person, and

- someone did that search, and

- if a page appeared in Google’s results, and

- someone clicked on that page…

then the search terms would be passed along to the web site hosting the page.
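To make the mechanics concrete, here is a minimal Python sketch of how a site’s analytics could pull the search terms out of the referrer that arrives with a click. It assumes the referring URL carries the query in a `q` parameter, as Google’s result URLs have commonly done; the function name and the sample URL are illustrative only.

```python
from urllib.parse import parse_qs, urlsplit


def search_terms_from_referrer(referrer):
    """Return the search terms found in a referrer URL, or None if absent.

    Assumption: the search engine puts the query in a parameter named "q".
    """
    if not referrer:
        return None
    query_string = urlsplit(referrer).query
    terms = parse_qs(query_string).get("q")
    return terms[0] if terms else None


# Hypothetical referrer for the made-up search in the example above.
referrer = (
    "http://www.google.com/search"
    "?q=travenor+johannisoon+income+tax+evasion+settlement"
)
print(search_terms_from_referrer(referrer))
```

When the referrer has no `q` parameter, or the parameter is empty, the function returns `None`, which is roughly what a publisher’s analytics ends up recording once the terms are withheld.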

Potentially, this could reveal to a publisher looking at their web analytics that there might be a settlement for income tax evasion involving a “Travenor Johannisoon.” If the publisher started poking around, perhaps they might uncover this type of information.

Of course, it could be that there is no such settlement at all. Maybe it’s just a rumor. Anyone can search for anything which doesn’t make it into a fact.

More likely, the search terms are so buried in all the web analytics data that the site normally receives that this particular search isn’t noticed at all, much less investigated.

Extra Safe Isn’t Extra Safe

Still, to be extra safe, Google has stopped passing along keyword data when people are signed-in. Stopped, except to its advertisers. Like I said, Google argues that potentially advertisers might still see this information even if they were also blocked.

For instance, say someone runs an ad matching any searches with “income tax evasion” in them. If someone clicked on the ad after doing a search for “Travenor Johannisoon income tax evasion settlement,” those terms would be passed along through the AdWords system to the advertiser, even though the referrer might pass nothing to the advertiser’s web analytics system.

So, why bother blocking?

Yes, this could happen. But if the point is to make things more private, then blocking referrers for both advertisers and non-advertisers would still make things harder. Indeed, Google still has other “holes” where “Travenor Johannisoon” might find his privacy exposed just as happens potentially with AdWords.

For example, if someone did enough searches on the topic of Travenor and tax evasion, that might cause it to appear as one of Google Instant’s suggested searches.

So why bother blocking?

Also, while Google blocks search terms from logged-in users in referrer data, those same searches are not blocked from the keyword data it reports to publishers through Google Webmaster Central. That means the Travenor search terms could show up there.

So why bother blocking?

Nothing has changed my view that, despite Google’s good intentions, its policy of blocking referrers only for non-advertisers is incredibly hypocritical. Google purports this is done to protect privacy, but it puts its own needs and advertisers’ desires above privacy.

Blocking referrers is a completely separate issue from encrypting the search results themselves. That’s good and should be continued. But Google is deliberately breaking how such encryption works to pass along referrer data to its advertisers. Instead, Google should block them for everyone or block them for no one. Don’t play favorites with your advertisers.

What Google & Bing Should Do

Made it this far? Then here’s the recap and action items for moving forward.

Bing should restore its link command, if not create a new Bing Site Explorer. Google should make sure that its link command reports links fully and consider its own version of a Google Site Explorer. With both, the ability for anchor text reports about any site is a must.

If there are concerns about scraping or server load, make these tools you can only use when logged in. But Yahoo managed to provide such a tool. Blekko is providing such statistics. Tiny third-party companies are doing it. The major search engines can handle it.

As for the referrer data, Google needs to immediately expand the amount of data that Google Webmaster Central reports. Currently, up to 10,000 terms (Google says up to 1,000, but we believe that’s wrong) for the past 30 days are shown.

In November, the head of Google’s spam team Matt Cutts — who’s also been involved with the encryption process — said at the Pubcon conference that Google is considering expanding the time period to 60 days or the queries to 2,000 (as said, we think — heck, we can see, they already provide more than this). Slightly more people wanted more time than more keywords shown.

I think Google should do more than 60 days. I think it should be providing continuous reporting and holding that data historically on behalf of sites, if it’s going to block referrers. Google is already destroying historical benchmarks that publishers have maintained. Google’s already allowed data to be lost for those publishers, because they didn’t begin to go in each day and download the latest information.

So far, all Google’s done is provide a Python script to make downloading easier. That’s not enough. Google should provide historical data, covering a big chunk of the terms that a site receives. It’s the right thing to do, and it should have been done already.
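Until that happens, publishers who do download the data each day have to keep their own history. The Python sketch below shows one way that might look: each day’s export is folded into a running archive keyed by date and query. This is not Google’s script, and the CSV layout with `query` and `clicks` columns is a hypothetical stand-in rather than the actual export format.

```python
import csv
import io


def merge_daily_export(archive, export_date, export_csv):
    """Fold one day's export into a running archive.

    archive maps (date, query) -> clicks. The "query" and "clicks"
    column names are assumptions made for this illustration.
    """
    reader = csv.DictReader(io.StringIO(export_csv))
    for row in reader:
        archive[(export_date, row["query"])] = int(row["clicks"])
    return archive


def clicks_for_query(archive, query):
    """Return {date: clicks} for one query across every archived day."""
    return {
        date: clicks
        for (date, archived_query), clicks in archive.items()
        if archived_query == query
    }
```

Run daily, something like this preserves a benchmark that would otherwise roll off after the reporting window closes, but it only helps publishers who started downloading before their data was lost.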

What Publishers Can Do

This doesn’t mean that publishers are powerless, however.

Bing is desperate to be seen as the “good” search engine against “evil” Google. Publishers should, whenever relevant, remind Bing that it’s pretty evil not to have maintained its own version of Yahoo Site Explorer, much less to have closed the link command.

Mention it in blog posts. Mention it in tweets. Bring it up at conferences. Don’t let it die. Ask Bing why it can’t do what little Blekko can.

As for Google, pressure over link data is probably best expressed in terms of relevancy. Why is Google deliberately preventing this type of information from being studied? Is it more afraid that doing so will reveal weaknesses in its relevancy, rather than potential spam issues? Change the debate to relevancy, and that gets Google’s attention — plus the attention of non-publishers.

There’s also the issue of openness. Google shouldn’t be allowed to preach being “open” selectively, staying closed when it suits Google, without some really good arguments for remaining closed. On withholding link data, those “closed” arguments no longer stand up.

As for the referrer data, Google should be challenged in three ways.

First, the FTC will be talking to publishers as part of its anti-trust investigation into Google’s business practices. Publishers, if asked, should note that by withholding referrer data except for Google’s advertisers, it’s potentially harming competing retargeting services that publishers might prefer to use. Anti-trust allegations seem to really get Google’s attention, so make that wheel squeak.

Second, question why Google is deliberately leaving a privacy hole open for the searchers it’s supposedly trying to protect. If Google’s really worried about what search terms reveal, the company needs a systematic way to scrub potentially revealing queries from everything: suggested searches, reporting in Google Webmaster Central, AdWords reporting as well as referrer data.

Finally, withhold your own data. Are you opted-in to the data sharing on Google Analytics that launched back in 2008? Consider opting-out, if so:

To opt-out, when you log in, select an account, then select “Edit Analytics Account” next to the name of the account in the Overview window, then you’ll see options as shown above and as explained on this help page.

Opting out means you can’t use the benchmarking feature (fair enough, and no loss if you don’t use it) and Conversion Optimizer. If you still want Conversion Optimizer, don’t opt-out or alternatively, tell Google that you should have a choice to share data solely for use with that product but not other Google products.

There might be other drawbacks to not sharing that I’m missing. But we haven’t been sharing here at Search Engine Land since the beginning of the year. So far, we’re not having any problems.

Google loves data. Withholding your own is another way for publishers to register their displeasure about having data withheld from them. And it’s the type of thing that Google just might notice.

Related Articles

- What Is SEO / Search Engine Optimization?

- Search Engine Land’s Guide To SEO

- The Periodic Table Of SEO Ranking Factors

- What Is Google PageRank? A Guide For Searchers & Webmasters

- How The “Focus On First” Helps Hide Google’s Relevancy Problems

- Yahoo Completes Global Organic Transition To Bing

- Yahoo Site Explorer Closing Down Monday, November 21st

- The Microsoft-Yahoo Search Deal, In Simple Terms

- Google Releases New Link Reporting Tools

- Google Now Reporting Anchor Text Phrases

- Yahoo Adds Link Badge In Site Explorer

- Link Building Tool Review: Link Research Tool Set By CEMPER

- Link Building Tool Review: SEOmoz PRO

- Link Building Tool Review: SEO Book

- Link Building Tool Review: Raven Tools

- Link Building Tool Review: Majestic SEO

- Bing Webmaster Tools Launches New Link Reports; Google Webmaster Tools Changes Theirs

- Blekko’s SEO Tools: What Information Do They Provide?

- Blekko Offers New Linkroll Widget & More Publisher Tools

- Google’s Chrome Page No Longer Ranks For “Browser” After Sponsored Post Penalty

- Should Rick Santorum’s “Google Problem” Be Fixed?

- How Rick Santorum Is Making His “Google Problem” Worse

- For “Define An English Person,” Google Suggests The C-Word

- Google’s Spam Report Page Gets “Biggest Refresh” In Years

- Google: As Open As It Wants To Be

- Google’s Facebook Competitor, The Google+ Social Network, Finally Arrives

- How Google Instant’s Autocomplete Suggestions Work

- Google Webmaster Tools Adds Page-Level Query Data

- Google Webmaster Tools Search Queries Report Now Less Accurate

- Google Analytics Benchmarking Feature, Data Sharing & Audio Ad Charting

- Guest Opinion: Is Google’s Privacy Move Really An Anti-Competitive Practice?

- Dear Congress: It’s Not OK Not To Know How Search Engines Work, Either

- Googleopoly: The Definitive Guide To Antitrust Investigations Against Google

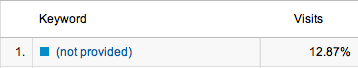

- Keyword “Not Provided” By Google Spikes, Now 7-14% In Cases

- Google Puts A Price On Privacy

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.
