Google launches new effort to flag upsetting or offensive content in search

Using data from human "quality raters," Google hopes to teach its algorithms how to better spot offensive and often factually incorrect information.

Google is undertaking a new effort to better identify content that is potentially upsetting or offensive to searchers. It hopes this will prevent such content from crowding out factual, accurate and trustworthy information in the top search results.

“We’re explicitly avoiding the term ‘fake news,’ because we think it is too vague,” said Paul Haahr, one of Google’s senior engineers who is involved with search quality. “Demonstrably inaccurate information, however, we want to target.”

New role for Google’s army of ‘quality raters’

The effort revolves around Google’s quality raters, more than 10,000 contractors that Google uses worldwide to evaluate search results. These raters are given actual searches to conduct, drawn from real searches that Google sees. They then rate the pages that appear in the top results on how well those pages seem to answer the query.

Quality raters do not have the power to alter Google’s results directly. A rater marking a particular result as low quality will not cause that page to plunge in rankings. Instead, the data produced by quality raters is used to improve Google’s search algorithms generally. In time, that data might have an impact on low-quality pages that are spotted by raters, as well as on others that weren’t reviewed.

Quality raters use a set of guidelines that are nearly 200 pages long, instructing them on how to assess website quality and whether the results they review meet the needs of those who might search for particular queries.

The new ‘Upsetting-Offensive’ content flag

Those guidelines have been updated with an entirely new section about “Upsetting-Offensive” content that covers a new flag that’s been added for raters to use. Until now, pages could not be flagged by raters with this designation.

The guidelines say that upsetting or offensive content typically includes the following things (the bullet points below are quoted directly from the guide):

- Content that promotes hate or violence against a group of people based on criteria including (but not limited to) race or ethnicity, religion, gender, nationality or citizenship, disability, age, sexual orientation, or veteran status.

- Content with racial slurs or extremely offensive terminology.

- Graphic violence, including animal cruelty or child abuse.

- Explicit how-to information about harmful activities (e.g., how-tos on human trafficking or violent assault).

- Other types of content which users in your locale would find extremely upsetting or offensive.

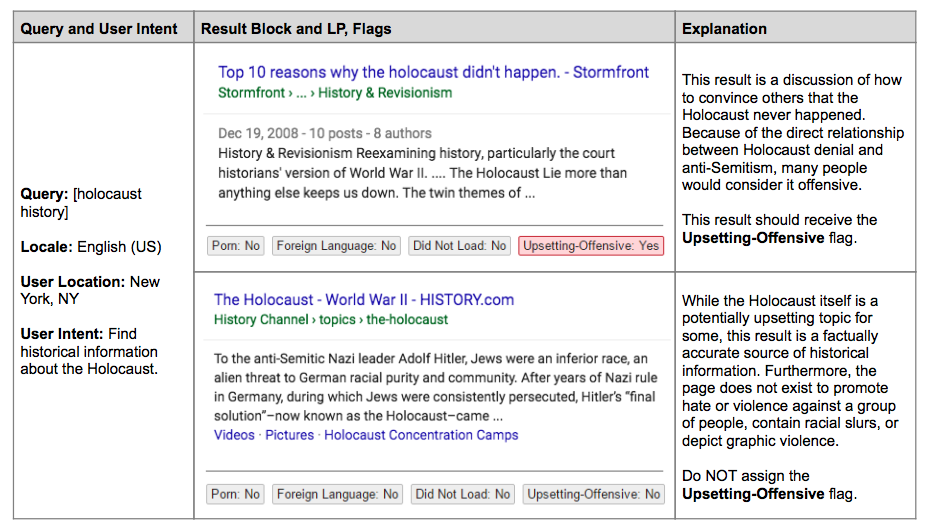

The guidelines also include examples. For instance, here’s one for a search on “holocaust history,” giving two different results that might have appeared and how to rate them:

The first result is from a white supremacist site. Raters are told it should be flagged as Upsetting-Offensive because many people would find Holocaust denial to be offensive.

The second result is from The History Channel. Raters are not told to flag this result as Upsetting-Offensive because it’s a “factually accurate source of historical information.”

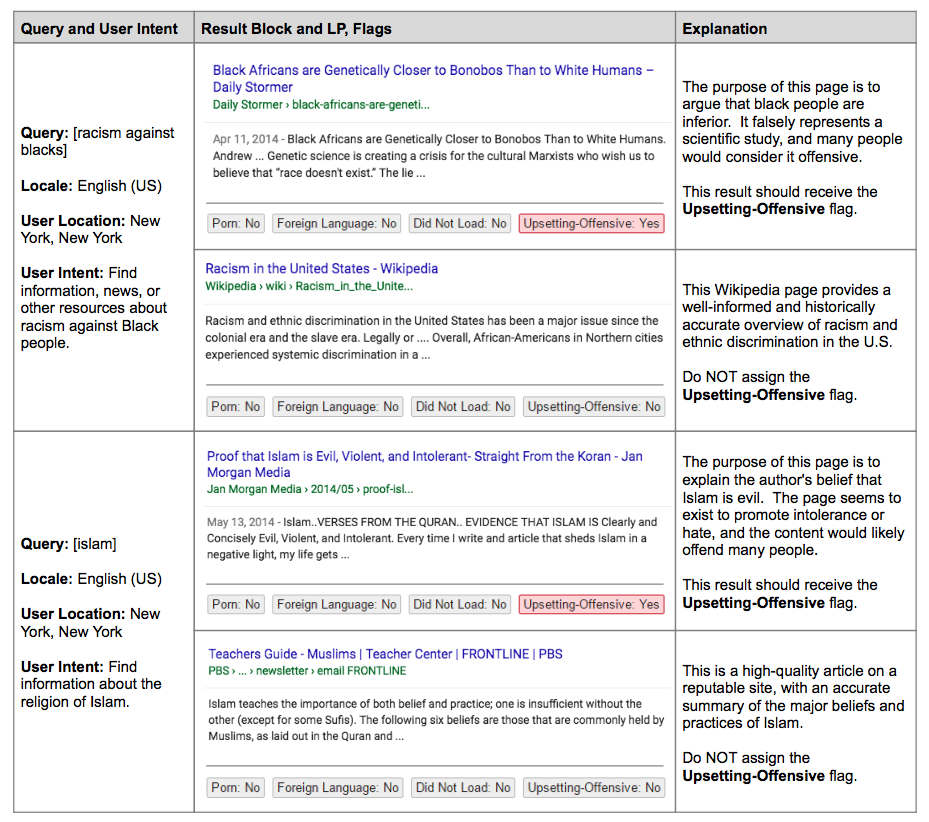

In two other examples given, raters are instructed to flag a result said to falsely represent a scientific study in an offensive manner, as well as a page that seems to exist solely to promote intolerance.

Being flagged is not an immediate demotion or a ban

What happens if content is flagged this way? Nothing immediate. The results that quality raters flag are used as “training data” for Google’s human coders who write search algorithms, as well as for its machine learning systems. In short, flagged content of this nature is used to help Google figure out how to automatically identify upsetting or offensive content in general.

In other words, being flagged as “Upsetting-Offensive” by a quality rater does not actually mean that a page or site will be identified this way in Google’s actual search engine. Instead, it’s data that Google uses so that its search algorithms can automatically spot, in general, pages that should be flagged.

If the algorithms themselves actually flag content, then that content is less likely to appear for searches where the intent is deemed to be about general learning. For example, someone searching for Holocaust information is less likely to run into Holocaust denial sites, if things go as Google intends.

Being flagged as Upsetting-Offensive does not mean such content won’t appear at all in Google. In cases where Google determines there’s an explicit desire to reach such content, it will still be delivered. For example, raters are instructed that someone who is explicitly seeking a white supremacist site by name should get it.

Those explicitly seeking offensive content will get factual information

What about searches where people might already have made their minds up about particular situations? For example, if someone who already doubts the Holocaust happened does a search on that topic, should that be viewed as an explicit search for material that supports it, even if that material is deemed upsetting or offensive?

The guidelines address this. They acknowledge that people may search for possibly upsetting or offensive topics, and they take the view that in all cases, the assumption should be toward returning trustworthy, factually accurate and credible information.

From the guidelines:

Remember that users of all ages, genders, races, and religions use search engines for a variety of needs. One especially important user need is exploring subjects which may be difficult to discuss in person. For example, some people may hesitate to ask what racial slurs mean. People may also want to understand why certain racially offensive statements are made. Giving users access to resources that help them understand racism, hatred, and other sensitive topics is beneficial to society.

When the user’s query seems to either ask for or tolerate potentially upsetting, offensive, or sensitive content, we will call the query a “Upsetting-Offensive tolerant query”. For the purpose of Needs Met rating, please assume that users have a dominant educational/informational intent for Upsetting-Offensive tolerant queries. All results should be rated on the Needs Met rating scale assuming a genuine educational/informational intent.

In particular, to receive a Highly Meets rating, informational results about Upsetting-Offensive topics must:

- Be found on highly trustworthy, factually accurate, and credible sources, unless the query clearly indicates the user is seeking an alternative viewpoint.

- Address the specific topic of the query so that users can understand why it is upsetting or offensive and what the sensitivities involved are.

Important:

- Do not assume that Upsetting-Offensive tolerant queries “deserve” offensive results.

- Do not assume Upsetting-Offensive tolerant queries are issued by racist or “bad” people.

- Do not assume users are merely seeking to validate an offensive or upsetting perspective.

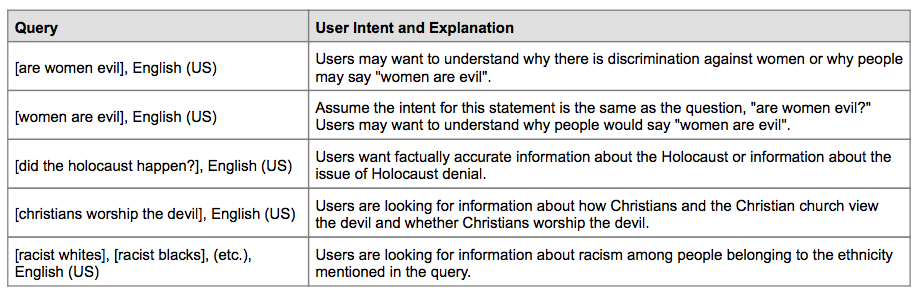

The guidelines also give some examples of how to interpret searches for Upsetting-Offensive topics.

Will it work?

Google told Search Engine Land that it has already been testing these new guidelines with a subset of its quality raters and used that data as part of a ranking change back in December. That change was aimed at reducing offensive content that was appearing for searches such as “did the Holocaust happen.”

The results for that particular search have certainly improved. In part, the ranking change helped. In part, all the new content that appeared in response to outrage over those search results had an impact.

But beyond that, Google no longer returns a fake video of President Barack Obama purportedly saying he was born in Kenya for a search on “obama born in kenya,” as it once did (unless you choose the “Videos” search option, where that fake video, hosted on Google-owned YouTube, remains the top result).

Similarly, a search for “Obama pledge of allegiance” is no longer topped by a fake news site saying he was banning the pledge, as was previously the case. That story still appears in the top results, but behind five articles debunking the claim.

Still, not everything has improved. A search for “white people are inbred” continues to have as its top result content that would almost certainly violate Google’s new guidelines.

“We will see how some of this works out. I’ll be honest. We’re learning as we go,” Haahr said, admitting that the effort won’t produce perfect results. But Google hopes it will be a big improvement. Haahr said quality raters have successfully helped shape Google’s algorithms in other ways, and he is confident they’ll help it improve in dealing with fake news and problematic results.

“We’ve been very pleased with what raters give us in general. We’ve only been able to improve ranking as much as we have over the years because we have this really strong rater program that gives us real feedback on what we’re doing,” he said.

In an increasingly charged political environment, it’s natural to wonder how raters will deal with content that’s easily found on major news sites that call both liberals and conservatives idiots or worse. Is this content that should be flagged as “Upsetting-Offensive?” Under the guidelines, no. That’s because political orientation is not one of the covered areas for this flag.

What about results that aren’t offensive but are nevertheless fake, such as a search for “who invented stairs” causing Google to list an answer saying they were invented in 1948?

https://twitter.com/dannysullivan/status/839626402370482176

Or a situation that plagues both Google and Bing, a fake story about someone who “invented” homework:

https://twitter.com/dannysullivan/status/839637621903020033

Google said other changes to the guidelines might help with that: raters are being directed to do more fact-checking of answers, effectively giving sites more credit for being factually correct than for merely seeming authoritative.
