Google’s “One True Answer” problem — when featured snippets go bad

Obama's planning a coup? Women are evil? Several presidents were in the KKK? Republicans are Nazis? Google can go spectacularly wrong with some of its direct answers.

Here we are again. Google’s in hot water because of what I call its “One True Answer” feature, where it highlights one search listing above all others as if it were the very best answer. It’s a problem because sometimes these answers are terribly wrong.

When Google gets facts wrong

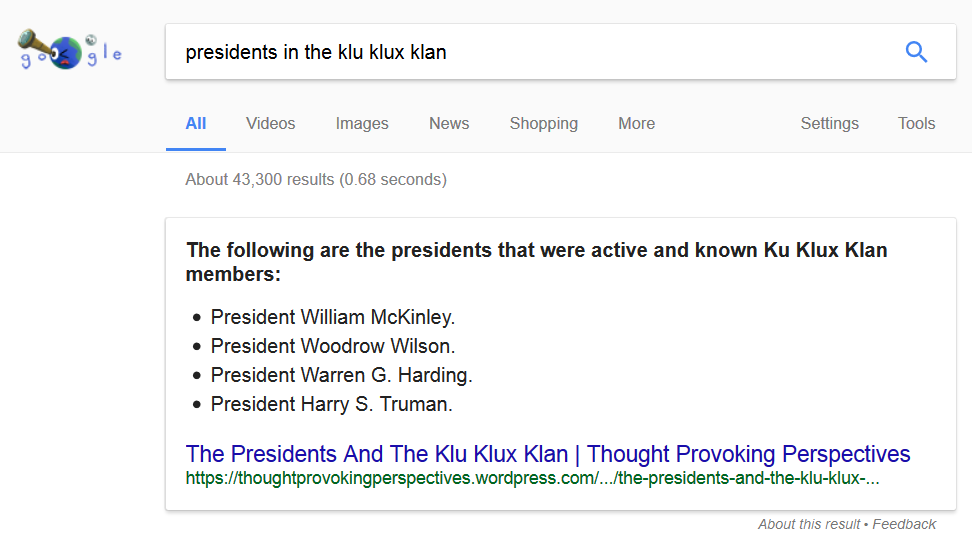

At the end of last month, Google was spotted listing several US presidents as being members of the Ku Klux Klan, even though there’s no conclusive evidence of any of this:

What’s happening there is called a “featured snippet,” where Google has taken one of the 10 web listings it normally displays and put it into a special box, to highlight it as seemingly the best of all the results, the listing that may fully answer your question.

I’ve taken to calling this Google’s “One True Answer” feature, because that’s effectively what it is to me. Google is expressing so much faith in this answer that it elevates it above all others. Google’s faith in featured snippets is especially profound when they emerge through its Google Home voice assistant:

https://twitter.com/dannysullivan/status/838482074155659264

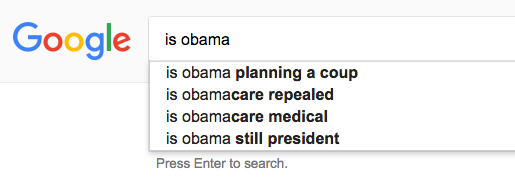

Yesterday, President Donald Trump accused former President Barack Obama of wiretapping him, causing paranoia in some quarters that Obama was plotting a coup. In fact, it’s one of the top things Google currently suggests if you type in “Is Obama….” into Google’s search box, indicating it’s a search with some degree of popularity:

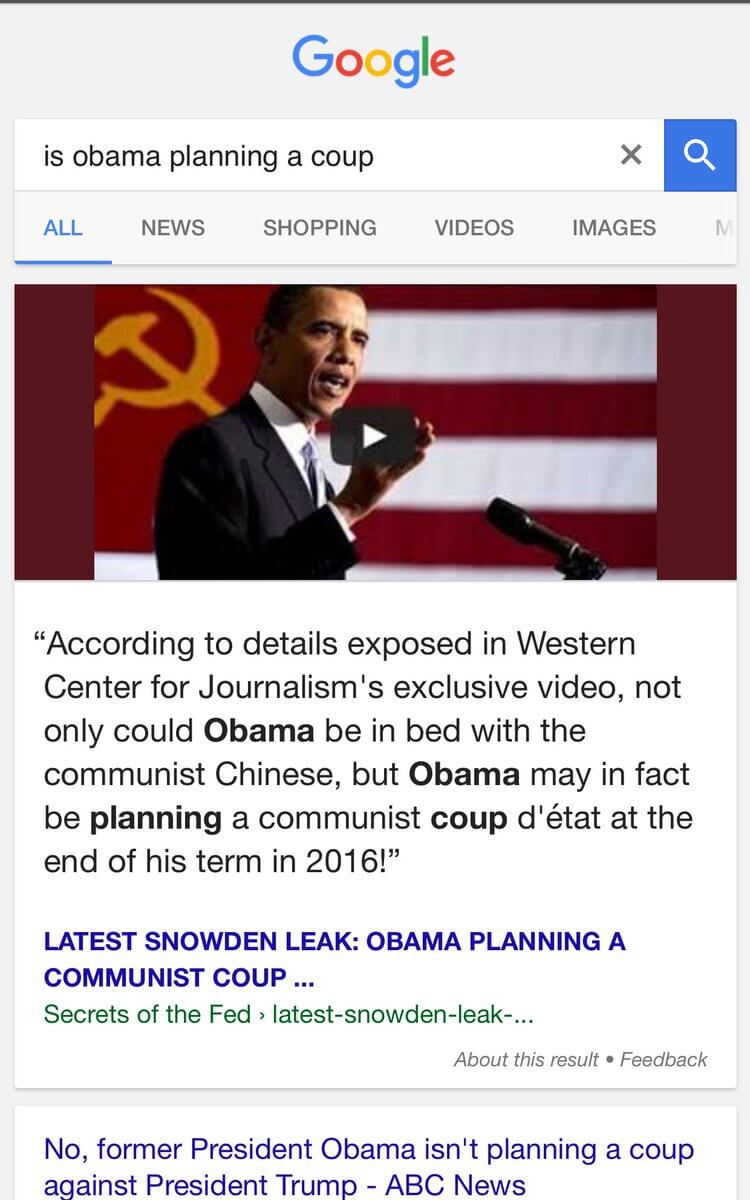

Curious as to the result, I tried it yesterday and discovered that Google’s One True Answer was telling me that indeed, Obama was planning a coup, though he appears to be late in making it happen:

Sure, if you scroll a bit further down, you’ll see an ABC News story debunking the rumor. That doesn’t take away from the fact that with all of Google’s search algorithms and machine learning, it decided that some ridiculous conspiracy mongering should be given an enhanced spot. It’s even worse when you listen to it on Google Home:

https://twitter.com/dannysullivan/status/838479395459256320

By the way, many people assume that these problematic answers are the result of deliberate actions by right-wing people who somehow know how to manipulate Google into doing whatever they want.

That’s not the case. There are indeed things people can do to increase the chances of being a featured snippet, but most of the problematic examples I review don’t appear to have been deliberate attempts. Rather, they seem to be the result of Google’s algorithms and machine learning making bad selections. Those bad guesses are also equal-opportunity offenders, as shown by this example telling people that Republicans are the same as Nazis:

Google Home:

"Yes, republicans = nazis" pic.twitter.com/7HVQjyjbEq
Danny Sullivan (@dannysullivan), March 5, 2017

For any example of Google appearing to favor a political stance, trust me, you can come up with another example where it appears to favor the opposite. Most people don’t realize this because they generally try searches that support one view without testing what happens when they search from the other side.

A tipping point for One True Answers?

The KKK example last month was something of a tipping point for me in Google getting One True Answers wrong. This isn’t a new problem. It’s one that has been allowed to fester over time.

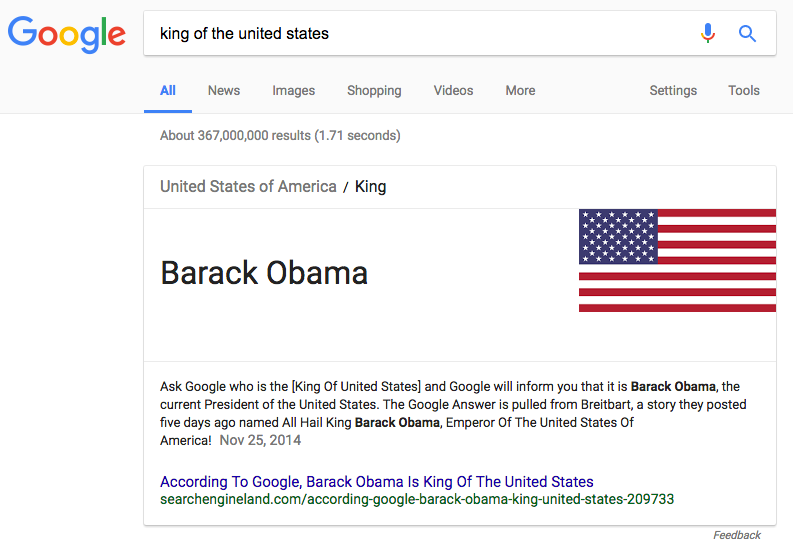

In 2014, we had not-safe-for-work answers about how to eat sushi. That same year, you could find Google declaring that Barack Obama was King of the United States. Incredibly, after our report on that, our own article became the new source declaring Obama king, as it remains to this day:

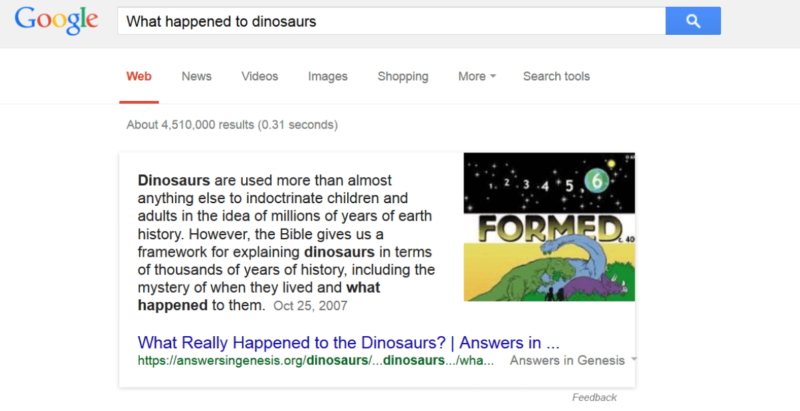

In 2015, we had a religious answer provided above all others for what happened to the dinosaurs:

It was one of several examples we covered in our “When Google Gets It Wrong” article that year. But that was hardly the end of such embarrassments. Last year’s terrible highlight was when you could listen to Google’s One True Answer about why women were evil:

Google Home giving that horrible answer to "are women evil" on Friday. Good article on issues; I'll have more later https://t.co/EUtrx4ZFul pic.twitter.com/Ec8mEqx8Am

— Danny Sullivan (@dannysullivan) December 4, 2016

After the KKK example came up, I was planning to write this article in a week or so: basically, an “Is it time for One True Answers to go?” story. But last week, Adrianne Jeffries from The Outline called to talk about the exact same issue, the increasing problems with Google’s featured snippets.

“Google’s featured snippets are worse than fake news” is her article, and I strongly encourage everyone to read it. She covers some of the examples I’ve shared above, along with many other problematic ones. There’s plenty of discussion about what, if anything, can and should be done.

Turn off One True Answers?

The easy solution would be for Google to stop using featured snippets, to give up on the idea that it will provide One True Answers for some searches.

That doesn’t mean that Google wouldn’t come under criticism for bad results. After all, featured snippets come from one of the 10 web listings that are presented. One or more of those listings might still be problematic. But at least a problematic result wouldn’t get elevated to such exalted status, subjecting Google to greater criticism.

On the downside, turning them off means that Google can’t provide direct answers for many other cases where it’s very helpful to have them. And doing that goes right to Google’s bottom line.

The financial loss to Google of losing One True Answers

Arguably, the next big growth area in search is voice-activated assistants, the ability to ask devices in our homes for answers. Amazon Echo and Google Home are going head-to-head here. As I’ve covered, Google Home easily beat the Amazon Echo when it comes to the range of questions it can answer.

Consider this example below, where I asked both Amazon Echo and Google Home if guinea pigs can eat grapes:

I remember distinctly when this question first came to my mind. I had my refrigerator open. My guinea pig, hearing me in the kitchen, started squeaking for a treat. I saw the grapes in the fridge and wondered if he could eat them. Normally, that would mean shutting the fridge and finding my phone or computer to type a query. But I called out this question to the Google Home in my kitchen and got an immediate answer.

That is an incredible competitive advantage that Google has over Amazon, as well as Apple and Microsoft, when it comes to providing answers. The others are far more tightly restricted, providing direct answers mainly from databases and vetted resources. That makes them less prone to problematic results but also less helpful for the wide range of queries that people have.

Turning off featured snippets means Google would lose its competitive advantage with Google Home, as well as with spoken queries to smartphones. That’s why I think it’s unlikely this will happen. Google will likely tolerate the occasional bad attention for its problematic One True Answers for what it considers the greater good, both to its users and to its competitive standing in keeping them.

Ways to mitigate problems

Is there a way for Google to keep the good that featured snippets provide without causing problematic results? Not perfectly. Google processes over 5 billion queries per day, and even if featured snippets appear in only 15% of those at the moment (according to the Moz SERP features tracker), that’s roughly 750 million One True Answers per day. Humans can’t vet all those.

But Google could consider not showing featured snippets in its web search results when queries are typed. There’s no particular need for it to elevate one answer over the others in this way. Losing this display might push users to make better use of their own critical thinking skills in reviewing the 10 possible answers they are given.

For spoken queries, having a One True Answer read out, when it’s correct, is undoubtedly helpful. To improve there, Google might revisit the sites it allows to appear as resources. This could include vetting them, as it does with Google News. Or it could use some algorithmic system to determine whether a site has enough authority to be featured.

Even then, it still wouldn’t be perfect. Slate is a widely respected site, one that I think would pass any vetting Google might do and any algorithmic authority check Google might put in place. That means if you asked if Trump is paranoid, Google’s One True Answer from Slate would remain:

https://twitter.com/dannysullivan/status/838477506080485376

Not only does this answer assert that Trump is paranoid, it also asserts that he’s mentally ill, based on a political opinion piece that Slate ran rather than any medical diagnosis.

Perhaps a further mitigation would be for Google to better explain that these are just guesses. In the example above, as with every featured snippet Google reads aloud, it begins with the source, saying “According to….” Instead, perhaps Google should be more honest: “Here’s our best guess. According to….” Or maybe, “We’re not sure if this is correct, but according to….”

Maybe there’s better wording. But simply saying “According to….” doesn’t feel like enough of a disclaimer at the moment.

On the web, Google provides an “About this result” link that leads to a page explaining what featured snippets are. Next to it, there’s also a “Feedback” link that allows people to flag a featured snippet as bad. The Outline found that the feedback link can indeed work, within a few days. But that depends on the people doing these searches reporting problematic featured snippets, and there are good reasons why this might not happen.

After all, someone who is searching for “are republicans fascists” or “is obama planning a coup” might be happy with answers asserting that these claims are true, regardless of whether they actually are, because they reinforce existing preconceptions. Those who are most likely to object to such answers probably never do such searches at all.

What does Google think about all this? Here’s the comment the company gave me today:

Featured Snippets in Search provide an automatic and algorithmic match to a given search query, and the content comes from third-party sites. Unfortunately, there are instances when we feature a site with inappropriate or misleading content. When we are alerted to a Featured Snippet that violates our policies, we work quickly to remove them, which we have done in this instance. We apologize for any offense this may have caused.

In the end, I don’t know that there’s a perfect answer. But Google clearly needs to do something. This problem is going into its third year and becoming more profound as the “post-truth” era grows, as do the home assistants and devices that profess to give us the truth.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
