The Latest In Advanced Technical SEO: SMX East Recap

Columnist Tony Edward summarizes presentations from an SMX East session on how to tackle complex technical website issues that impact search engine optimization.

For experienced SEO practitioners looking for advice on how to tackle complex technical issues, “The Latest In Advanced Technical SEO” was the panel to attend at SMX East. This session brought together seasoned industry veterans to discuss the challenges and opportunities that come with advanced technical search engine optimization, and the panelists had some great insights into solving and preventing technical SEO issues.

Thousands Of Pages Missing From Google SERPs… And How To Prevent The Problem

Christine Smith from IBM presented an interesting case study about an indexation issue IBM faced with its self-support site. Thousands of pages became deindexed somehow, meaning they weren’t showing up in search results. This led to a significant drop in Google referrals and, consequently, a major loss of traffic.

The folks at IBM were puzzled by this, and they ran through the following technical SEO checks to try to find the problem:

- Pages/URLs resolved correctly.

- Redirects were working properly.

- Canonical tags and URLs were correct.

- Robots.txt was not blocking anything.
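Two of these checks, the canonical tag and the robots.txt rules, are easy to script. The following is a minimal sketch using only the Python standard library, not the tooling IBM used; the function names and the example.com URLs are hypothetical, and the page HTML and robots.txt text are assumed to have been fetched already.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_matches(html, expected_url):
    """True when the page declares exactly one canonical URL and it is the expected one."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals == [expected_url]


def googlebot_allowed(robots_txt, url):
    """True when the given robots.txt text lets the Googlebot user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical inputs for illustration only.
page = '<html><head><link rel="canonical" href="https://example.com/doc"></head></html>'
robots = "User-agent: *\nDisallow: /private/\n"
print(canonical_matches(page, "https://example.com/doc"))
print(googlebot_allowed(robots, "https://example.com/private/page"))
```

Running the same two functions over a sample of the missing URLs would show quickly whether either check can be ruled out.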

On reviewing the sitemaps, they noticed that only 10 percent of the sitemap URLs were indexed. Smith and her team then regenerated the sitemaps, which helped improve sitemap URL indexation to 60 percent. However, Google referral traffic still did not improve.
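The indexation percentage itself is simple arithmetic once you have the sitemap and a list of URLs known to be indexed. Here is a rough sketch, assuming the sitemap follows the standard sitemaps.org schema and that the indexed URL list comes from whatever report you have available; the function names and sample URLs are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return every <loc> value from a sitemap document given as a string."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def indexed_share(sitemap_xml, indexed_urls):
    """Fraction of sitemap URLs that appear in the collection of indexed URLs."""
    urls = sitemap_urls(sitemap_xml)
    if not urls:
        return 0.0
    indexed = set(indexed_urls)
    return sum(1 for url in urls if url in indexed) / len(urls)


# Hypothetical two-URL sitemap with one URL known to be indexed.
sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/a</loc></url>"
    "<url><loc>https://example.com/b</loc></url>"
    "</urlset>"
)
print(indexed_share(sample, ["https://example.com/a"]))
```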

They then approached Google via a site search ticket to get some help. Google checked a sample of the missing URLs and found they were marked as duplicates and were last crawled five months before the issue occurred. Google did not provide a full list of the URLs affected, so the IBM team was left trying to figure out why these URLs were flagged as duplicate when they were actually unique.

Smith and her team then filed a reconsideration request in Google Search Console, and they also requested an increased crawl rate for the domain in hopes that the pages would soon be revisited.

A month later, traffic bounced back, but it was hard to tell what had resolved the issue. Was it the reconsideration request they filed? Was it the increased crawl rate, or perhaps a normal re-crawl of the site? Was it the improved sitemaps? Was it the Panda update that took place just weeks before traffic bounced back? Or was it some combination of factors?

And what caused the issue in the first place? Smith and her team believe it may have been one of the following:

- A faulty redirect or a bad site maintenance redirect

- A typical server response during an outage: a 404, 500 or 504 HTTP response, or a 302 redirect to a maintenance page

To prevent similar problems in the future, Smith recommends doing site maintenance the right way. When the site is unavailable, serve a 503 Service Unavailable HTTP response. Do this only during planned outages, and be sure not to serve a 200 or 301 HTTP response when the site is down, as this may confuse the crawlers and cause pages to be deindexed.
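As an illustration of that advice, here is a minimal sketch of a maintenance responder written as a Python WSGI application. It is not IBM's setup; the `Retry-After` value, the port and the function names are assumptions chosen for the example, and in practice the same response is often configured at the web server or load balancer instead.

```python
from wsgiref.simple_server import make_server

RETRY_AFTER_SECONDS = 3600  # hypothetical estimate of how long the outage will last


def maintenance_app(environ, start_response):
    """Answer every request with 503 so crawlers can treat the outage as temporary."""
    body = b"Down for scheduled maintenance. Please try again later.\n"
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
        # Standard HTTP header hinting when clients may retry.
        ("Retry-After", str(RETRY_AFTER_SECONDS)),
    ]
    start_response("503 Service Unavailable", headers)
    return [body]


def serve(port=8000):
    """Run the maintenance responder locally; the port is an arbitrary choice."""
    with make_server("", port, maintenance_app) as server:
        server.serve_forever()
```

Calling `serve()` starts the responder. The point of the sketch is the status line: every URL returns 503 rather than a 200 maintenance page or a redirect.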

https://www.slideshare.net/slideshow/embed_code/key/nCMqKPtjQRYIoz

Advanced SEO Visualization: Force-Directed Diagrams For Teaching & Analysis

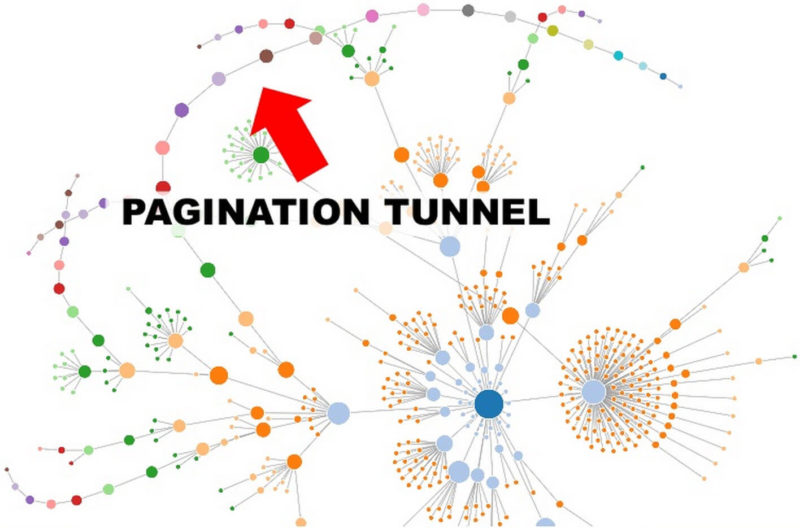

Ian Lurie presented a solution for explaining technical SEO issues to clients, one he has personally had success with. He recommended using data visualizations such as force-directed diagrams, which are understandable to C-level stakeholders and other high-level personnel within an organization.

This is actually a unique style of presenting SEO data. Lurie’s force-directed diagram examples included pagination, orphaned content and canonical issues. Essentially, he used the diagrams as both a teaching and a diagnostic tool.

One key takeaway was how CPU-intensive creating the diagrams is. It can put a real strain on your computer, so be sure you have a high-powered machine and no other applications running when you attempt this.

In his presentation, he provided a step-by-step guide, which involves getting the data from Screaming Frog and then using Gephi to create the visualization.
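The data preparation between the two tools can be sketched in a few lines of Python. This is not Lurie's exact process; it assumes a crawl export with `Source` and `Destination` columns (adjust the names to match your export) and writes the `Source`/`Target` edge table that Gephi's spreadsheet importer reads. The function names are hypothetical.

```python
import csv
import io


def inlinks_to_edges(inlinks_csv, source_col="Source", target_col="Destination"):
    """Turn crawl link rows into a deduplicated list of (source, target) edges."""
    reader = csv.DictReader(io.StringIO(inlinks_csv))
    edges = []
    seen = set()
    for row in reader:
        edge = (row[source_col].strip(), row[target_col].strip())
        # Skip blank rows, self-links and repeats to keep the graph small.
        if not all(edge) or edge[0] == edge[1] or edge in seen:
            continue
        seen.add(edge)
        edges.append(edge)
    return edges


def edges_to_gephi_csv(edges):
    """Write edges as CSV text with a Source,Target header row."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Source", "Target"])
    writer.writerows(edges)
    return out.getvalue()


# Hypothetical three-row export: one link, one repeat of it, one self-link.
sample = (
    "Type,Source,Destination\n"
    "Hyperlink,https://example.com/,https://example.com/a\n"
    "Hyperlink,https://example.com/,https://example.com/a\n"
    "Hyperlink,https://example.com/a,https://example.com/a\n"
)
print(edges_to_gephi_csv(inlinks_to_edges(sample)))
```

Dropping duplicate links and self-links before import reduces the number of edges the layout has to process, which may ease the load on your machine for large crawls.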

https://www.slideshare.net/slideshow/embed_code/key/4AUhZeER9UZsH2

