Search Engines + Newspapers: Perfect Market’s Delivery System Aims To Please Both

Last year, there was a seemingly endless parade of stories on how aggregators, search engines and news blogs were apparently killing newspapers that publish original content. This year, add the rise of “content farms” to the list. Riding to the rescue, or so it hopes, comes Perfect Market and its new search marketing tool, “The Vault.”

Perfect Market has been working with newspaper publishers such as the Tribune Company to generate more revenue for them from search engine visitors. Tribune Company is also one of Perfect Market’s major investors, along with Trinity Ventures, Rustic Canyon Partners and Idealab.

Earning More Off The “Drive Bys”

The concept behind The Vault is simple. Newspapers get plenty of traffic from search engines but often do a poor job of monetizing that traffic. Indeed, last year News Corp’s chief digital officer Jonathan Miller described visitors from Google as “the least valuable traffic to us.” The Vault aims to change all that by making newspaper content more attractive to “drive by” visitors, especially through better ad positioning and targeting.

“We’re arming newsrooms with the technology and performance intelligence they need to compete, with the content they’re writing today, and we know it’s working,” said Julie Schoenfeld, president and CEO of Perfect Market. “We’ve partnered with some of the best known publications in the world, including The Los Angeles Times and SFGate, and the results speak for themselves. Some of our partners have seen an ad revenue increase by 20X.”

The service is offered on a revenue-sharing basis, with Perfect Market taking a share of any increase in advertising revenue it generates from the stories.

Clearing The Clutter

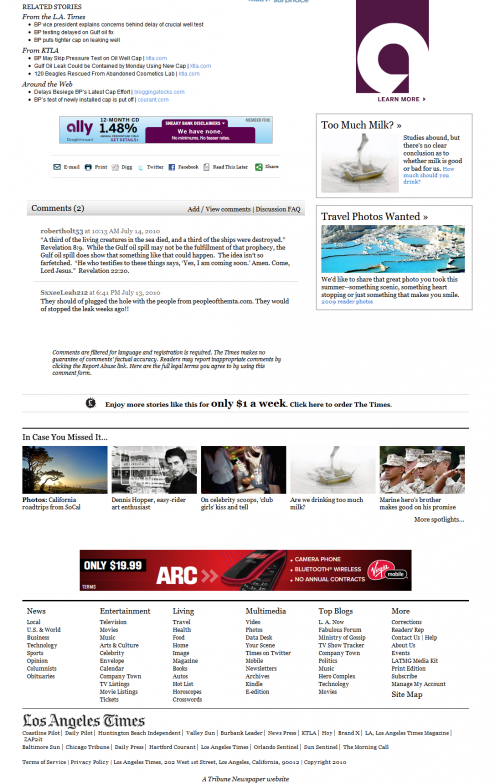

Vault pages pretty much strip everything off a “regular” news story except for the news article itself and ads. Consider this side-by-side comparison:

On the left is an article from the Los Angeles Times about BP delaying a test for capping the Gulf oil spill. This is what someone would see if they found the article by clicking around at the LA Times web site. On the right is the same article but formatted differently. This is what someone would see if they came to it by doing a search at Google or other search engines.

The search engine version is much simpler. Gone are things like:

- Social sharing and navigation options at the top of the page

- The big Los Angeles Times logo and the big “Nation” section heading

- More sharing options next to the start of the story, along with related stories

- Links that were in the original story that led to more information within the LA Times site

- “The Latest” news headlines box on the right side of the page

- A house ad on the right side of the page

- The “Most Viewed | Most Commented” box
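To make the stripping concrete, here is a minimal sketch, assuming a page can be modeled as an ordered list of typed blocks. The block kinds (“article”, “ad”, “share” and so on) and the `search_version` function are hypothetical labels for illustration, not anything Perfect Market has described; the sketch simply keeps the story text and ad-network ads and drops everything else, roughly what the comparison above shows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    kind: str  # hypothetical label, e.g. "article", "ad", "share", "logo"
    text: str


# Assumption: the search version keeps only the story text and ad-network ads.
KEEP_FOR_SEARCH = frozenset({"article", "ad"})


def search_version(blocks: list[Block]) -> list[Block]:
    """Return only the blocks shown to search visitors, in their original order."""
    return [block for block in blocks if block.kind in KEEP_FOR_SEARCH]


regular_page = [
    Block("share", "Social sharing and navigation options"),
    Block("logo", "Los Angeles Times / Nation"),
    Block("article", "BP delays test for capping the Gulf oil spill"),
    Block("latest", "The Latest"),
    Block("house_ad", "House ad"),
    Block("ad", "Ad network unit"),
    Block("most_viewed", "Most Viewed | Most Commented"),
    Block("comments", "Reader comments"),
]

print([block.kind for block in search_version(regular_page)])  # ['article', 'ad']
```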

That’s just what’s been removed from near the top of the original story. At the end, there are related story links, comments and more navigational links.

All of those also get removed. Meanwhile, the search engine version, unlike the original, gets paginated. The story is divided across two pages, so you have to click to read the second part. That’s intended to drive page views and increase the odds of showing ads that the reader will like, assuming they weren’t attracted to any on the first page.
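The pagination step is easy to sketch. This assumes a story is a list of paragraphs and that each page view carries its own set of ad slots, which is why splitting one story into two pages gives the reader a second set of ads; the `paginate` helper is hypothetical.

```python
import math


def paginate(paragraphs: list[str], pages: int = 2) -> list[list[str]]:
    """Split a story into at most `pages` consecutive chunks of near-equal size."""
    if pages < 1:
        raise ValueError("pages must be at least 1")
    per_page = max(1, math.ceil(len(paragraphs) / pages))
    return [paragraphs[i:i + per_page] for i in range(0, len(paragraphs), per_page)]


story = [f"Paragraph {n}" for n in range(1, 6)]
split = paginate(story)
print([len(page) for page in split])  # [3, 2]
```

Rounding the page size up keeps the first page slightly longer, so the reader gets most of the story before having to click through.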

Overall, the aim is to give users a clearer route to what they want, the news story, and to increase the odds that the post-reading click will be on an ad. That means removing elements designed to encourage the more brand-aware reader, someone who comes to the publication regularly, to stick around.

“You can’t transform those who come in from search into ‘name brand’ users,” said Tim Ruder, Perfect Market’s chief revenue officer, a former Washington Post digital media exec.

The Content Farm Alternative?

It’s about more than getting ad clicks, however. Perfect Market says its product is also designed to improve the ads shown from ad networks such as Google AdSense, so that they’re better targeted to visitors and produce more revenue per click.

Beyond that, the product provides a “dashboard” designed to show how much particular stories are earning and which keywords driving traffic to those stories from search engines are paying off the most.
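The kind of rollup such a dashboard implies can be sketched as follows. This assumes a hypothetical log of search visits, each recording the story, the search keyword that brought the visitor and the ad revenue earned (in cents, to keep the arithmetic exact). The story slugs, keywords and figures are made up, and the real product’s data model isn’t described here.

```python
from collections import defaultdict

# Hypothetical visit log: (story slug, search keyword, ad revenue in cents).
visits = [
    ("bp-oil-spill-cap-test", "bp oil spill", 12),
    ("bp-oil-spill-cap-test", "gulf oil cap", 30),
    ("bp-oil-spill-cap-test", "bp oil spill", 8),
    ("city-council-budget", "city budget vote", 5),
]


def revenue_by_keyword(rows: list[tuple[str, str, int]]) -> dict[str, dict[str, int]]:
    """Sum ad revenue per story, broken down by the keyword that sent each visitor."""
    table = defaultdict(lambda: defaultdict(int))
    for story, keyword, cents in rows:
        table[story][keyword] += cents
    return {story: dict(keywords) for story, keywords in table.items()}


def best_keyword(breakdown: dict[str, int]) -> str:
    """Return the keyword that earned the most for one story."""
    return max(breakdown, key=breakdown.get)


report = revenue_by_keyword(visits)
for story, keywords in report.items():
    print(story, sum(keywords.values()), best_keyword(keywords))
# bp-oil-spill-cap-test 50 gulf oil cap
# city-council-budget 5 city budget vote
```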

Of course, writing content to match high-paying keywords is the hallmark of “content farms,” sites run by companies such as Demand Media or Associated Content, recently acquired by Yahoo. Potentially, Perfect Market’s system could allow traditional publishers to keep up with these new demand-driven publishers. However, that’s not Perfect Market’s main aim. Instead, it sees its tool as allowing publishers to keep writing as they’ve normally done but to earn more for their stories.

“The best antidote to fighting content farm spam in the engines is arming journalists with the tools they need to engage in the web economy,” said Schoenfeld. “When a news organization’s first-rate content is optimized for search, everyone should win. Users have a better search experience when quality journalism appears at the top of the search results. Search advertisers benefit from appearing in well researched, information-rich articles. And publishers benefit from previously untapped revenue streams.”

Removing Clutter Or Context?

What’s not to love? Well, former San Francisco Chronicle sex columnist Violet Blue had a lot of issues when she stumbled upon her columns being Vaultized, as it were, on the San Francisco Chronicle’s SFGate web site.

In March, she wrote about how Google searches began sending her to versions of her published columns that were missing her bio and in-text links, lacked punctuation and, in one case, had been given a keyword-rich URL that meant the opposite of the actual story.

Much of that has now been corrected. When I followed up with Perfect Market soon after the issues came up, I was told the decision on whether to include links was up to the individual publications. The typos were attributed to import glitches.

I also talked with Blue soon after her post and found myself struggling. On the one hand, Perfect Market is doing some of the things that I’ve also written that newspapers should do to survive (see If Newspapers Were Stores, Would Visitors Be “Worthless” Then?). If they can’t earn more for their stories, then worrying about how the stories are presented becomes a moot point. There won’t be stories at all.

But Blue makes a valid point that The Vault’s supposed content optimization process actually “decontextualizes” an article in ways that can be harmful to readers, even those “drive by” readers. Comments often add additional value and content to an article. An author’s bio or links to their past work give context about who they are and why a reader might care about what they’ve written.

“Now my articles are on a site that doesn’t have any of the context,” she said.

Link Love’s Labor Lost

Blue also is concerned that such changes will turn people off from linking to her stories, or stories published by others.

“I’ve worked really hard as a blogger for 10 years now to understand the value of people who arrive at my site,” she said. “Having multiple stories across multiple pages, that turns into ‘Does your reader feel tricked?’ For me, if something’s broken into 8 pages, I won’t link to it.”

Potentially, the linking situation gets even worse. Links are a key factor in helping content rank better in search engines, and getting good links from across the web plays a huge role. Strike one for some of these articles is that if they’re less attractive to some people, they won’t attract links. Strike two is that they compete for links against the “original” articles.

The author, the publication’s social media person or regular brand-driven readers are likely to promote the “original” story, linking to it. But all that “link credit” is wasted, since that version of the page doesn’t show in search engines as well.

Not Cloaking, Says Perfect Market

Perfect Market says this isn’t an issue. That’s because it redirects Google and other search engines if they try to visit the “regular” version. This keeps all the link credit flowing to the page they are allowed to record.

In other words, here are those two articles from the LA Times that I mentioned earlier. Here’s the “normal version” for regular visitors to the site:

https://www.latimes.com/news/nationworld/nation/la-na-oil-spill-20100714,0,1234918.story

The other version, hosted on articles.latimes.com, is for “search engine visitors,” people who will come to the article from search engines like Google.

If Google tries to visit the regular version, in order to record it for its searchable index of documents, it’s redirected to the search engine version. However, humans going directly to the normal version, say from a link shared on Twitter, still see it.
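The routing rule described here fits in a few lines. This is a minimal sketch, assuming the crawler is identified by its user-agent string and search visitors by their referrer; the host names come from the Google email quoted later in this piece, while `choose_host` and the list of search-engine referrer markers are hypothetical. A production setup would verify Googlebot (Google recommends a reverse DNS lookup) rather than trusting the user-agent string, and would send an actual HTTP redirect rather than just picking a host.

```python
from typing import Optional
from urllib.parse import urlparse

SEARCH_HOST = "articles.latimes.com"  # search engine version (per the quoted Google email)
REGULAR_HOST = "www.latimes.com"      # regular version for direct visitors
CRAWLER_TOKENS = ("googlebot",)       # assumption: crawler detected by user-agent only
SEARCH_REFERRERS = ("google.", "bing.", "yahoo.")  # hypothetical referrer markers


def choose_host(user_agent: str, referrer: Optional[str]) -> str:
    """Pick which version of a story to serve under the rule the article describes."""
    if any(token in user_agent.lower() for token in CRAWLER_TOKENS):
        return SEARCH_HOST  # the crawler always gets the search engine version
    if referrer:
        host = urlparse(referrer).hostname or ""
        if any(marker in host for marker in SEARCH_REFERRERS):
            return SEARCH_HOST  # people arriving from a search engine see what it indexed
    return REGULAR_HOST  # direct visitors, e.g. from a link shared on Twitter


print(choose_host("Mozilla/5.0 (compatible; Googlebot/2.1)", None))  # articles.latimes.com
print(choose_host("Mozilla/5.0", "https://www.google.com/search?q=bp+oil+spill"))  # articles.latimes.com
print(choose_host("Mozilla/5.0", "https://twitter.com/"))  # www.latimes.com
```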

Isn’t that cloaking, something specifically against Google’s rules?

Traditionally, cloaking means showing search engines content that humans never see. For example, Google gets shown a special page that it records, but people clicking on that page when it’s listed in Google’s results get redirected to the “normal” page.

In Perfect Market’s system, humans do see the “search engine” version, when they find it within a search engine. If Google has recorded a special page, people clicking from Google see exactly what Google saw.
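The distinction comes down to a single comparison: does the page the crawler records match the page a person clicking that search result receives? The sketch below models two hypothetical serving policies as functions of the visitor type. It is a simplification of the definition given above, not a statement of how Google actually evaluates sites.

```python
from typing import Callable

# Hypothetical visitor types: "crawler", "search_click" (a person clicking a
# search result) and "direct" (e.g. someone following a shared link).
Policy = Callable[[str], str]


def is_cloaking(serve: Policy) -> bool:
    """Simplified test: does the crawler record a page that search clickers never get?"""
    return serve("crawler") != serve("search_click")


def perfect_market_style(visitor: str) -> str:
    # Crawler and search clickers get the search version; direct visitors get the regular one.
    return "regular" if visitor == "direct" else "search"


def classic_cloaking(visitor: str) -> str:
    # The crawler is shown a special page, but every human gets the normal page.
    return "special" if visitor == "crawler" else "regular"


print(is_cloaking(perfect_market_style))  # False
print(is_cloaking(classic_cloaking))      # True
```

Note that the comparison ignores what direct visitors see, which is exactly the point Perfect Market is making.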

Perfect Market said it has explained the situation to its Google AdSense representative, who in turn consulted with a “search specialist” at Google, and things are apparently fine. From the email Perfect Market received:

What you want to do is acceptable and is in line with Google’s guidelines. You can redirect Googlebot (and visitors from organic search) to articles.latimes.com and continue to send direct traffic to www.latimes.com. This isn’t considered deceptive, since Googlebot and users have the same experience.

I can’t tell if that search specialist was with Google’s actual web spam team or someone within AdSense (Google’s division for publishers who carry ads) who is knowledgeable about Google’s rules. Matt Cutts, the head of Google’s spam team, was away on vacation when I wrote this and so was unable to look at it in more depth. When I get an update from him, I’ll add a postscript.

Perfect Market also said that should Google determine there’s an issue in the future, it can still preserve the link credit from the “normal” versions to support the “search engine” versions in another way.

The company also said that should a client stop using its system, it’s easy to redirect all the indexed stories back to their original versions (the search engine versions typically live on their own web site, such as articles.latimes.com rather than latimes.com).
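Unwinding the setup would, under the assumptions here, amount to a redirect map from each search-version URL back to its original. In this sketch the search-version path and the `redirect_for` helper are hypothetical, and the choice of a permanent (301) redirect is an assumption; the article doesn’t say which kind of redirect Perfect Market would use. The original URL is the LA Times story cited earlier.

```python
from typing import Optional

# Hypothetical export: search-version path -> original story URL.
ORIGINALS = {
    "/news/nationworld/nation/bp-oil-spill-cap-test": (
        "https://www.latimes.com/news/nationworld/nation/"
        "la-na-oil-spill-20100714,0,1234918.story"
    ),
}


def redirect_for(path: str) -> Optional[tuple[int, str]]:
    """Return (status, location) for a retired search-version URL, or None if unknown."""
    target = ORIGINALS.get(path)
    # Assumption: 301 (Moved Permanently), the conventional signal that a page has moved for good.
    return (301, target) if target is not None else None


print(redirect_for("/news/nationworld/nation/bp-oil-spill-cap-test"))
print(redirect_for("/no-such-story"))  # None
```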

Finding The Balance

Clearly, Perfect Market’s system offers some advantages to publishers, but it also comes with some decisions to make. Do you really assume that all “drive by” visitors can’t be converted, or should you do more balancing with other contextual elements? If you’re excluding links to minimize non-ad clicks, are you gaining enough to offset the potential loss of context that links can provide?

These and other questions apply to anyone who embarks on a strategy of effectively maintaining two sites, one designed for search visitors and one for all others, regardless of whether they use Perfect Market’s system. And as Blue notes, implementation shouldn’t be seen as solely a business issue.

“While links within my articles were restored, I think it’s important to remember that when Perfect Market was implemented on all SFGate content, the value of keeping links in articles was either not important or not understood to the decision makers at Hearst. This shows a big disconnect between business and editorial; it’s safe to say that the brands buying the Perfect Market product are either not aware or are unconcerned about online content’s fundamentals,” said Blue.

For its part, Perfect Market agrees that there’s more that can be done.

“Perfect Market helps forward-thinking publishers generate new revenue from search traffic, something they haven’t done very well to date. Our approach is designed to evolve user experience and grow revenues. We continue to innovate in both areas based on actionable data and feedback,” Schoenfeld said.

One change has been to further evolve its story templates since earlier this year. Social sharing buttons are sometimes part of what’s shown within a story. The company is also open to more customized formatting.

As for comments:

“We would be happy to include comments. We just have a hard time getting comments into our system now. But comments will be coming,” Schoenfeld said.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
