The EMD Update: Like Panda & Penguin, Expect Further Refreshes To Come

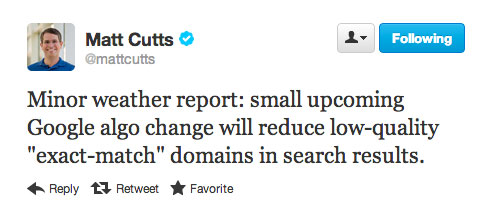

Last week, Google announced the EMD Update, a new filter that tries to ensure that low-quality sites don’t rise high in Google’s search results simply because they have search terms in their domain names. Similar to other filters like Panda, Google says EMD will be updated on a periodic basis. Those hit by it may escape the next EMD update, while others not hit this time could get caught up in the future.

How Periodic Updates Work: The Panda Example

Google has several filters that it updates periodically, that is, from time to time. The Panda Update is the best example of this periodic nature and of the impact it can have on publishers.

Panda works by effectively sifting all the sites that Google knows about on the web through a filter. Those deemed to have too much poor-quality content get trapped by Panda, which in turn means they may no longer rank as well as in the past. Those that slip through the filter have “escaped” Panda and see no ranking decrease. In fact, they might gain as they move higher into spots vacated by those Panda has dropped.

Since the filter isn’t perfect, Google keeps trying to improve it. Roughly each month, it sifts all the pages it knows about through an updated Panda filter. This might catch pages that weren’t caught before. It might also free pages that may have been caught by mistake.

Importantly, sites themselves get a chance to escape Panda each time the filter is used, based on their own attempts to improve. Those that have removed much of their poor-quality content might find themselves no longer trapped. Each new release of Panda is a chance for a fresh start.
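As a rough illustration of that cycle, here is a minimal Python sketch. It’s a toy model, not Google’s method: the `Site` class, the `quality` score, the `threshold` value and the `run_filter` function are all made up for this example. It only shows why a site that cleans up its content stays trapped until the next run.

```python
from dataclasses import dataclass


@dataclass
class Site:
    domain: str
    quality: float  # hypothetical 0-to-1 content score; higher is better


def run_filter(sites, threshold):
    """One periodic run: return the domains scoring below the threshold."""
    return {site.domain for site in sites if site.quality < threshold}


sites = [Site("thin-content.example", 0.2), Site("solid-content.example", 0.8)]

# The first run of the filter traps the low-scoring site.
trapped = run_filter(sites, threshold=0.5)

# The owner removes poor content, but the trapped set is only recomputed
# when the filter runs again, so nothing changes yet.
sites[0].quality = 0.7
trapped_between_runs = set(trapped)

# Next refresh: the improved site escapes.
trapped_after_refresh = run_filter(sites, threshold=0.5)
```

In this model, improving a site has no visible effect until `run_filter` is called again, which is the “waiting time” publishers face between refreshes.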

There are two articles from the past that I highly recommend reading to understand this more. One’s even a picture, an infographic:

- Infographic: The Google Panda Update, One Year Later

- Why Google Panda Is More A Ranking Factor Than Algorithm Update

How The EMD Update Works

How does this apply to the EMD Update? First, EMD gets its name because it targets “exact match domains,” which are domains that exactly match the search terms that they hope to be found for.

One common misconception is that EMD means that sites with search terms in their domain names no longer will rank as well as in the past. I’ve not seen evidence of this so far, and it’s certainly not what Google said.

Google specifically said EMD was designed to go after poor quality sites that also have exact match domain names. If you do a search for “google,” you still find plenty of Google web sites that all have “google” in the domain name. EMD didn’t wipe them out because those sites are deemed to have quality content.

Is that Google just favoring itself? I wouldn’t say so. After all, it didn’t wipe out:

- Cars.com for “cars”

- Usedcars.com for “used cars”

- Cheaptickets.com for “cheap tickets”

- Movies.com for “movies”

- Skylightbooks.com for “books”

Instead, EMD is more likely hitting domains like online-computer-training-schools.com, which is a made-up example but hopefully gets the point across. It’s a fairly generic name with lots of keywords in it but no real brand recognition.

Domains like this are often purchased by someone hoping that just having all the words they want to be found for (“online computer training schools”) will help them rank well. It’s true that there’s a small degree of boost to sites for having search terms in their domains with Google, in general. A very small degree.

But such sites also often lack any real quality content. They were purchased or created in hopes of an easy win, and there’s often no real investment in building them up with decent information or into an actual destination, a site that people would go to directly, not a site they’d just happen upon through a search result.

Some of them lack any content at all (are “parked”) or have content that’s taken from other sites (“scraped”). Google already went after parked domains last December (and made a mistake in classifying some sites as parked in April). It’s already been going after scrapers with Panda and other efforts.

EMD seems targeted at low-quality sites that are “in between” these two things, perhaps sites whose content doesn’t appear scraped because it has been “spun” using software to rewrite the material automatically.

It’s really important to understand that plenty of people have purchased exact match domains in hopes of a ranking boost and have also put in the time and effort to populate these sites with quality content. I’ve already listed some examples of this above, and there are smart “domainers” beyond this who do not park, scrape or spin but instead build a domain with a nice name into a destination, making it more valuable for a future sale.

In short, EMD domains aren’t being targeted; EMD domains with bad content are.
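That distinction can be put in a few lines of Python. This is a sketch under stated assumptions, not how Google actually does it: `is_exact_match`, `emd_would_filter`, the `quality` score and the 0.5 threshold are all hypothetical, and the domain parsing is deliberately naive (it only strips a leading `www.` and the last dot-separated label).

```python
import re


def _key(text):
    """Lowercase the text and keep only letters and digits."""
    return re.sub(r"[^a-z0-9]", "", text.lower())


def is_exact_match(domain, query):
    """True when the domain name, minus its last label, spells out the query."""
    name = domain.lower()
    if name.startswith("www."):
        name = name[4:]
    name = name.rsplit(".", 1)[0]  # naive: treats only the last label as the TLD
    return bool(_key(query)) and _key(name) == _key(query)


def emd_would_filter(domain, query, quality, threshold=0.5):
    """Hypothetical rule: filter only exact matches that also score poorly."""
    return is_exact_match(domain, query) and quality < threshold


# An exact match with good content is left alone.
good = emd_would_filter("Cars.com", "cars", quality=0.9)

# An exact match with poor content is filtered.
bad = emd_would_filter(
    "online-computer-training-schools.com",
    "online computer training schools",
    quality=0.1,
)
```

Both domains are exact matches for their queries. Only the made-up quality score separates them, which is the point of the summary above.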

The Many Filters Google Uses

A mystery in all this is that Panda was already designed to punish sites for having bad content. Clearly, Panda wasn’t doing the job in the case of EMD domains, to the degree that Google had to build a completely separate EMD filter.

That means, metaphorically speaking, Google pours all the sites it knows about through a Panda strainer. After that, it pours what didn’t get caught in that strainer through the EMD filter.

In reality, it’s not a case of pouring everything through a variety of different filters all at once. Google runs different filters at different times, such as Panda, Penguin and now EMD.

There are more we don’t even know about, and Google doesn’t announce most of these. But what we’ve learned more and more through Panda is the periodic nature of Google’s filters, the idea that once a filter is introduced, at some point in a few weeks or months, Google will improve that filter and sift content through it again.

To better understand how all these filters can keep the Google results “dancing,” I highly recommend reading my article from last month:

Recovering From EMD

Google confirmed for me this week that EMD is a periodic filter. It isn’t constantly running and looking for bad EMD domains to filter. It’s designed to be used from time-to-time to ensure that what was filtered out before should continue to be filtered. It also works to catch new things that may have been missed before.

If you were hit by EMD, and hope to recover, the advice seems to be very similar to Panda — get rid of the poor quality content. In particular, these articles below might help:

- Your Site’s Traffic Has Plummeted Since Google’s Panda Update. Now What?

- Hit By Panda Update? Google Has 23 Questions To Ask Yourself To Improve

- Lessons Learned at SMX West: Google’s Panda Update, White Hat Cloaking & Link Building

- 5 New Tactics For SEO Post-Panda

- Can You Dig Out Of Your Google Panda Hole By Offloading To Subdomains?

- Yet More Tips For Diagnosing & Fixing Panda Problems

- Google: Low PageRank & Bad Spelling May Go Hand-In-Hand; Panda, Too?

You can find more in the Panda Update section of our Search Engine Land Library. After you’ve removed the poor quality content, it’s waiting time. You’ll only see a change the next time the EMD filter is run.

When will that be? Google’s not saying, but based on the history of Panda, it’s likely to be within the next three months, and eventually it might move to a monthly basis. But it could take longer for EMD 2 to hit, and there’s no guarantee that it will ever ramp up to a monthly refresh like Panda, or that Google will even announce the refreshes when they happen.

To complicate matters, many sites that may have thought they were hit by EMD instead might have been hit by the far bigger Panda Update 20. Google belatedly acknowledged releasing a fresh Panda update the day before EMD was launched.

My advice is that if you were never hit by Panda before — and you have a domain name you purchased in hopes of an “exact match” success — then it’s probably EMD that hit you.

Postscript: Related, a few hours after this was posted, a new Penguin Update was released. See our story, Google Penguin Update 3 Released, Impacts 0.3% Of English-Language Queries.

Related Articles

- The EMD Update: Google Issues “Weather Report” Of Crack Down On Low Quality Exact Match Domains

- Google: Parked Domains, Scraper Sites Targeted Among New Search Changes

- Dropped In Rankings? Google’s Mistake Over Parked Domains Might Be To Blame

- Google: Further Penguin Update “Jolts” To Come; Panda Is Smoother & Monthly

- Infographic: The Google Panda Update, One Year Later

- Google Panda Update 20 Released, 2.4% Of English Queries Impacted

- Periodic Table Of SEO Ranking Factors

- The Return Of The Google Dance
