Study: 80% of Google Home results come from snippets

But they aren't always the source of answers for Google Home.

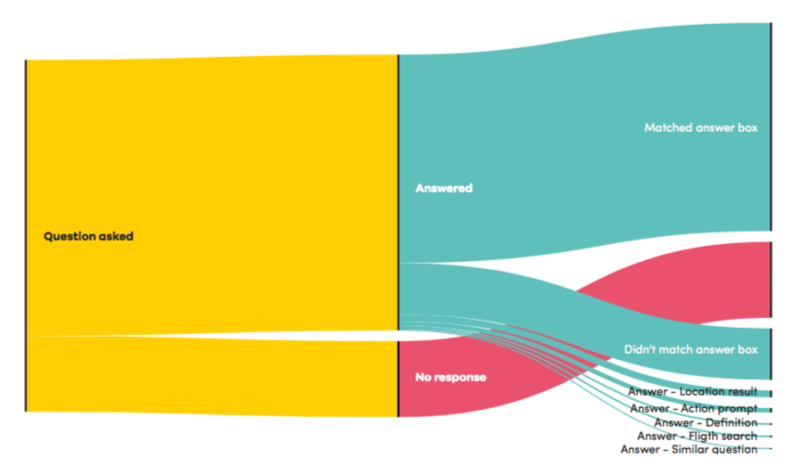

Digital agency ROAST has released a Voice Search Ranking Report (registration required), which seeks to categorize and understand how Google processes and responds to voice queries. It also tries to determine when Google Home uses featured snippets/Answer Box results and when it does not.

The company used keyword analytics to compile a list of “616 key phrases in the UK featuring snippet answer boxes.” It then determined the top phrases by query volume across a range of verticals (e.g., medical, retail, travel, finance). The tests were run in November and compared Google Home and traditional search results.

The study sought to answer the following questions:

- How many of the key phrases were answered [on Google Home]?

- Do the answers given match the answer boxes’ results?

- Which key phrases prompt Google not to use answer boxes?

- Can we compare visibility on voice search to answer boxes? Is there a difference?

In the majority of cases, the Google Home result mirrored the snippet/Answer Box, according to the study. But in a number of cases, when there was a snippet, Home provided no answer or a different answer.

Comparing Desktop Search Results with Google Home

I didn’t systematically test ROAST’s findings, but in two ad hoc cases where the company said there was no answer, my questions received the same answer featured in the snippet. Rephrasing a question that initially received an “I can’t help with that yet” response can also yield a result.

The report grouped Google Home answers into six different categories:

- Standard answer (referencing a source/domain, the most common type of response).

- Location result.

- Action prompt (suggestion).

- Definition.

- Flight search.

- Similar question (“I’m not sure, but I can tell you the answer to a similar question”).

Google Home answered just under 75 percent of the queries in the test. When Home provided an answer, roughly 80 percent of answers were the same as the Answer Box, according to ROAST. In 20 percent of those cases, however, answers came from different data sources (e.g., local, flight search).
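To put those percentages in terms of the full test set, here is a rough back-of-the-envelope sketch. It assumes the rounded figures reported above (616 key phrases, about 75 percent answered, about 80 percent of answers matching the Answer Box); the function and field names are hypothetical, and the resulting counts are estimates, not numbers taken from the report.

```python
# Rounded figures quoted in the article; the exact counts are in the ROAST report.
TOTAL_PHRASES = 616
ANSWERED_RATE = 0.75   # "just under 75 percent", so treat this as an upper bound
MATCH_RATE = 0.80      # "roughly 80 percent" of answered queries


def estimate_breakdown(total, answered_rate, match_rate):
    """Estimate how the tested queries split up, given the rounded rates."""
    answered = round(total * answered_rate)
    matched = round(answered * match_rate)
    return {
        "answered": answered,
        "unanswered": total - answered,
        "matched_snippet": matched,
        "other_source": answered - matched,
        # Share of ALL tested queries where Home read out the snippet answer.
        "snippet_share_of_all": matched / total,
    }


breakdown = estimate_breakdown(TOTAL_PHRASES, ANSWERED_RATE, MATCH_RATE)
for name, value in breakdown.items():
    print(f"{name}: {value}")
```

Under these assumptions, holding the snippet translated into the Google Home answer for roughly 60 percent of all tested queries, not 80 percent, because about a quarter of the queries received no answer at all.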

There’s more detailed discussion of these findings in the report. However, here are ROAST’s verbatim conclusions:

- Just because you occupy the featured snippet answer box result, doesn’t mean you own the voice search result. Sometimes the assistant won’t give any answer or it references a different domain.

- Watch out for key phrases where Google doesn’t use answer box information for results (for example, flights, locations, actions).

- Google My Business is key to any local related searches.

- You need to start to develop a key phrase list specifically for tracking in voice search reports — this list will be different to your normal key phrase list for search.

ROAST says it intends to produce more such reports in different verticals in 2018. I would also encourage others to do similar testing to add more insight to the conversation.

