A Data-Centric Approach To Identifying 404 Pages Worth Saving

A critical part of doing a site or link audit is checking to see how many 404 (page not found) pages there are in a site. I can’t tell you how many times I’ve seen an audit that lists the total number of 404 pages and advises developers to find appropriate pages to redirect these 404 pages to.

That’s no big deal if we’re talking about just 20 to 30 pages. But, when a site has 404 pages in the thousands, and you tell the developers to fix these pages, you’re going to look more than a little ridiculous. So, how can you find out which of those 404 pages are actually important?

Two of the most important metrics to look at are backlinks (so you don’t lose your most valuable links) and total landing page visits in your analytics software. You may have others, such as social metrics. Whichever metrics you choose, export them all from your tools du jour and wed them in Excel.

Gather 404 Pages

There are several different sources you can use to find your site’s 404 pages. My two favorites are Screaming Frog and Google Webmaster Tools (GWT).

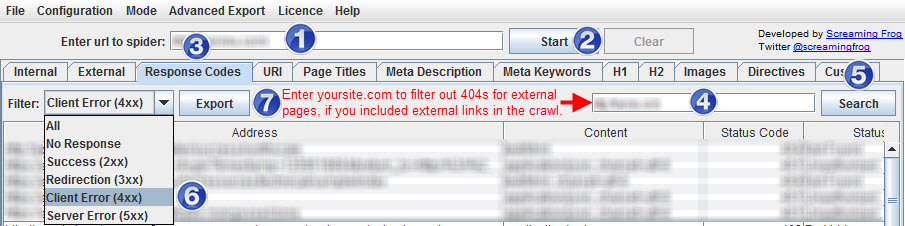

To find your site’s 404s with Screaming Frog, after running a scan of the site, go to Response Codes > Filter: Client Error (4xx) > Export.

To pull your site’s 404 pages from Google Webmaster Tools, go to Health > Crawl Errors > URL Errors > Web or Mobile: Not found > Download.

Strip your CSV download of everything but the list of 404 URLs, and save the file as an .xlsx file.
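If you’d rather script this cleanup step than do it by hand, here is a minimal Python sketch. It assumes the export’s first row is a header and that the URLs sit in a column named "Address"; that column name and the sample rows are illustrative assumptions, so check your own download and pass the right name. It returns a plain list rather than writing an .xlsx file.

```python
import csv
import io


def extract_404_urls(csv_file, url_column="Address"):
    """Return the unique, non-empty URLs from one column of a crawl export.

    url_column is an assumption: use whatever header your export
    actually gives the URL column.
    """
    reader = csv.DictReader(csv_file)
    seen = set()
    urls = []
    for row in reader:
        url = (row.get(url_column) or "").strip()
        if url and url not in seen:  # skip blanks and repeats
            seen.add(url)
            urls.append(url)
    return urls


# Made-up sample standing in for a downloaded export.
sample_export = io.StringIO(
    "Address,Status Code,Status\n"
    "http://example.com/old-page,404,Not Found\n"
    "http://example.com/old-page,404,Not Found\n"
    "http://example.com/retired-product,404,Not Found\n"
)

urls_404 = extract_404_urls(sample_export)
print(urls_404)
```

With a real file, you would open it with `open(path, newline="", encoding="utf-8")` and pass the file object in place of the sample.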

Pull Landing Page Data

If you’re only responsible for SEO, you may want to restrict your export to organic traffic. In Google Analytics (GA), you’d navigate to Traffic Sources > Sources > Search > Organic > Primary Dimension: Landing Page.

But, I think that approach is a bit shortsighted. I much prefer looking for all important landing pages, which you get to by navigating to Content > Site Content > Landing Pages.

To pull all of them, you need to look at the total number of landing pages in the bottom-right corner of the report (e.g., 1 – 10 of 441). If you have more than 500 landing pages, you’ll need to use this trick to get them all.

To get the full URL of your landing pages, you’ll need to use this technique in the third section of the post, especially if your site uses subdomains and you’re not including hostname in your content reports.

Get Your Backlink Data

First, pull backlinks from your favorite tool. How to do that is outside the scope of this post, but here are links to learn more about how to use each of the tools:

- Open Site Explorer

- Majestic

- Ahrefs

- Google Webmaster Tools (Traffic > Links to Your Site > Your Most Linked Content > More)

Pull It All Together

Once you know that all of your URLs follow the same syntax (by either having them all start with https:// or removing the https:// from all of the URLs), you’re ready to stitch all of these metrics together using VLOOKUPs in Excel. If you’re new to VLOOKUPs, check out this introduction on the Microsoft site.
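For readers who prefer a script to a spreadsheet, the same lookup can be sketched in Python. This is only an illustration of the idea, not a replacement for the Excel workflow: the function names, the sample URLs, and the numbers are all hypothetical, and the normalization strips only the http:// or https:// prefix, as described above.

```python
def normalize_url(url):
    """Drop the scheme so URLs exported from different tools share one syntax."""
    url = url.strip()
    for scheme in ("https://", "http://"):
        if url.startswith(scheme):
            return url[len(scheme):]
    return url


def join_metrics(urls_404, visits_by_url, backlinks_by_url):
    """Look up each 404 URL in both metric tables, much like two VLOOKUPs.

    URLs missing from a table get 0 instead of Excel's #N/A.
    """
    visits = {normalize_url(u): v for u, v in visits_by_url.items()}
    links = {normalize_url(u): n for u, n in backlinks_by_url.items()}
    rows = []
    for url in urls_404:
        key = normalize_url(url)
        rows.append({
            "url": key,
            "visits": visits.get(key, 0),
            "backlinks": links.get(key, 0),
        })
    return rows


# Hypothetical data standing in for the three exports.
urls_404 = ["http://example.com/old-page", "https://example.com/retired-product"]
visits_by_url = {"example.com/old-page": 120}
backlinks_by_url = {"https://example.com/retired-product": 7}

joined = join_metrics(urls_404, visits_by_url, backlinks_by_url)
for row in joined:
    print(row)
```

Defaulting missing values to 0 is a design choice for this sketch; it keeps every 404 URL in the output so nothing silently drops out of the audit.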

Make sure you format your dataset as a table so that you can sort the data by the number of landing page visits, backlinks, or page authority — or whatever else you want to pull into the dataset.
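The sorting step can also be sketched in Python, assuming the combined dataset is a list of rows with "url", "visits", and "backlinks" fields. Those field names and the sample values are made up for illustration. Dropping rows with no visits and no backlinks is an extra assumption of this sketch, not something the Excel table does for you.

```python
def rank_404s(rows, sort_key="visits"):
    """Sort 404 rows by one metric, highest first.

    Rows with zero visits and zero backlinks are dropped first; that
    filter is an assumption about what counts as worth saving.
    """
    worth_saving = [r for r in rows if r["visits"] > 0 or r["backlinks"] > 0]
    return sorted(worth_saving, key=lambda r: r[sort_key], reverse=True)


# Hypothetical combined dataset.
rows = [
    {"url": "example.com/a", "visits": 0, "backlinks": 0},
    {"url": "example.com/b", "visits": 120, "backlinks": 3},
    {"url": "example.com/c", "visits": 15, "backlinks": 40},
]

by_visits = rank_404s(rows)
by_backlinks = rank_404s(rows, sort_key="backlinks")

for row in by_visits:
    print(row["url"], row["visits"], row["backlinks"])
```

Passing a different `sort_key` mirrors clicking a different column header in the Excel table.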

By taking this kind of data-centric approach, you can fairly easily identify the 404 pages you actually need to address and fix.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
